About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Pox buster

Had a day off yesterday and although I seemed to spend a lot of time hacking I didn't really get much done. I had a few possibly good thoughts though.

OpenCL/GPU layers

The layer changes I made a few days ago should make GPU layers fairly easy to do without having to rewrite everything ... almost. I added layer-specific damage tracking toward that end, but that's about as far as I got. I'm also considering writing a SampleModel which can write directly to the OpenCL buffers (which go through the nio buffer types); that would let the tool layer sit mostly on the GPU too, although there are other options which might be easier.

Undo

I've done undo a few times before for various applications and it's something I don't particularly like doing. I guess I should get over it since it isn't really that difficult.

I was contemplating a sort of fancy undo that recorded what you did as well as the data changed, so that as well as going backwards you could selectively 'turn off' or edit bits you've done and re-execute them. That could also be the basis of a macro recording system, e.g. being able to run the undo history on a different layer or image. But I think I might hold off on that, or do it separately from the undo mechanism, and keep undo simpler.

To implement undo you need to save the changed bits, and for redo you can either save the result bits or details of the operation performed. For most drawing operations this will really just mean that the result bits are also saved. As a first cut I will probably store an exclusive-or or subtracted image as the delta, limited to the bounds of the change, since that will let me go either way from whatever position I'm at in the undo history. It might be compressible too, and I could compress lazily if that works out to be worth doing.

I think I can fit the delta generation into the composition routine that is used solely to apply tool writes to the target layer; that's one point where both the result and the original are transiently available without having to save the before image separately. Then again, that's only in float form.
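
The exclusive-or delta described above could look something like the following minimal Java sketch. It assumes a packed RGBA float[] layer (4 floats per pixel, rows of `width` pixels) and a Rectangle holding the changed bounds; the class and method names are hypothetical. XORing the raw IEEE bits obtained with Float.floatToRawIntBits is lossless, so applying the same delta once undoes the change and applying it again redoes it. The delta is kept as an int[] because XORed bit patterns are not meaningful floats, and some would be NaNs whose exact bits are not guaranteed to survive a float round trip.

```java
import java.awt.Rectangle;

/** Hypothetical undo record: XOR of before/after float bits within the changed bounds. */
final class XorDelta {
    final Rectangle bounds;   // changed region, in pixels
    final int[] bits;         // XOR of raw float bits, 4 samples per pixel

    private XorDelta(Rectangle bounds, int[] bits) {
        this.bounds = bounds;
        this.bits = bits;
    }

    /** Builds the delta from before/after RGBA layers that are 'width' pixels wide. */
    static XorDelta create(float[] before, float[] after, int width, Rectangle r) {
        int[] bits = new int[r.width * r.height * 4];
        int k = 0;
        for (int y = r.y; y < r.y + r.height; y++) {
            int i = (y * width + r.x) * 4;
            for (int n = 0; n < r.width * 4; n++, i++) {
                bits[k++] = Float.floatToRawIntBits(before[i]) ^ Float.floatToRawIntBits(after[i]);
            }
        }
        return new XorDelta(new Rectangle(r), bits);
    }

    /** Toggles the layer between the two states: call once to undo, again to redo. */
    void apply(float[] layer, int width) {
        int k = 0;
        for (int y = bounds.y; y < bounds.y + bounds.height; y++) {
            int i = (y * width + bounds.x) * 4;
            for (int n = 0; n < bounds.width * 4; n++, i++) {
                layer[i] = Float.intBitsToFloat(Float.floatToRawIntBits(layer[i]) ^ bits[k++]);
            }
        }
    }
}
```

Samples the operation didn't touch XOR to zero, which is presumably what would make such a delta compress well if lazy compression turns out to be worth doing.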

Tiles

A thought I had whilst not being able to sleep early this morning. I don't really want to do a whole tile-based system ... but it might make sense for the tool layer and mask layer. One issue I have is that clearing/creating big layers for images simply takes a long time, but the compositor is greatly simplified if all tool layers are the same size. With the get/setLine() interfaces I can easily hide the fact that the backing store is a sparse set of tiles, so at least the rest of the application doesn't need to know. The real issue is whether I can create a Java2D-compatible image which dynamically allocates tiles as you write to them ... which I think should be possible by implementing a new SampleModel and DataBuffer. If I can get that sorted then it opens up a lot more possibilities, such as supporting very, very large images by virtualising the storage as well.
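
A sparse tile store hidden behind getLine()/setLine() might be sketched like this. It is a hypothetical single-channel class with an assumed 64x64 tile size: tiles are only allocated when a non-zero value is written to them, and missing tiles read back as zero (i.e. cleared). Wrapping it in a Java2D-compatible image would still need the custom SampleModel/DataBuffer work mentioned above, which isn't attempted here.

```java
import java.util.Arrays;

/** Hypothetical single-channel float store that only allocates tiles when first written. */
final class SparseTiledPlane {
    static final int TILE = 64;              // tile edge in pixels (assumed)
    final int width, height, tilesX;
    final float[][] tiles;                   // null entries read as zero

    SparseTiledPlane(int width, int height) {
        this.width = width;
        this.height = height;
        this.tilesX = (width + TILE - 1) / TILE;
        int tilesY = (height + TILE - 1) / TILE;
        this.tiles = new float[tilesX * tilesY][];
    }

    /** Copies row y into dst[0..width); unallocated tiles read as 0. */
    void getLine(int y, float[] dst) {
        int ty = y / TILE, row = (y % TILE) * TILE;
        for (int tx = 0; tx < tilesX; tx++) {
            int x0 = tx * TILE, n = Math.min(TILE, width - x0);
            float[] t = tiles[ty * tilesX + tx];
            if (t == null) Arrays.fill(dst, x0, x0 + n, 0f);
            else System.arraycopy(t, row, dst, x0, n);
        }
    }

    /** Writes row y from src, allocating a tile only when a non-zero value lands in it. */
    void setLine(int y, float[] src) {
        int ty = y / TILE, row = (y % TILE) * TILE;
        for (int tx = 0; tx < tilesX; tx++) {
            int x0 = tx * TILE, n = Math.min(TILE, width - x0);
            int idx = ty * tilesX + tx;
            if (tiles[idx] == null) {
                if (allZero(src, x0, n)) continue;   // nothing to store, stay sparse
                tiles[idx] = new float[TILE * TILE];
            }
            System.arraycopy(src, x0, tiles[idx], row, n);
        }
    }

    private static boolean allZero(float[] a, int off, int n) {
        for (int i = off; i < off + n; i++) if (a[i] != 0f) return false;
        return true;
    }

    int allocatedTiles() {
        int c = 0;
        for (float[] t : tiles) if (t != null) c++;
        return c;
    }
}
```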

PoxyBox

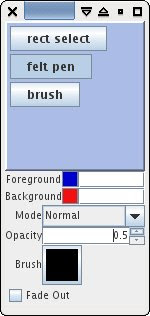

A couple of issues I have with The Gimp - it's hard to find the toolbox if you have a big screen, other shit running, or a couple of windows open, and it's difficult to work out what the tool options are going to look like without trying them. Opening up all the toolboxes just makes it worse and doesn't scale to low-resolution displays either.

Enter the `PoxyBox' toolbox!

Initially my idea was to have the whole toolbox show/hide right where the mouse is, based on pressing the space bar. But then it would have to be too big and cluttered to fit everything, so I will probably use those keys that every keyboard still has, that never get any wear and tear, and that were made just for this purpose: the function keys.

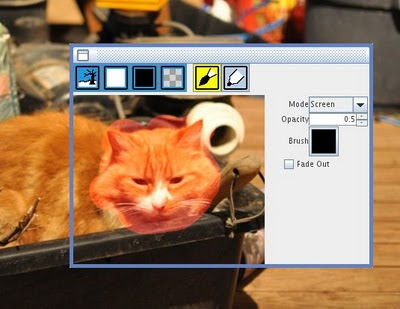

I can split the tools into logical groups such as painting, selection, affine transform and so forth, so you don't have to put up with the clutter of unrelated tools when you pop up the one you're after. They are not modal, so you can still work on the rest of the image if you want a reference handy or space is too far to go, but only one can be shown at a given time.
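
The function-key idea maps fairly directly onto Swing key bindings. This is a hypothetical sketch: `PoxyBoxKeys` and the action names are made up and the boxes are plain JComponents, but it uses the standard InputMap/ActionMap mechanism with WHEN_IN_FOCUSED_WINDOW so the keys work wherever focus is inside the window. Each key toggles its box, showing one hides the others, and space re-toggles the most recently shown box.

```java
import java.awt.event.ActionEvent;
import java.awt.event.KeyEvent;
import java.util.ArrayList;
import java.util.List;
import javax.swing.AbstractAction;
import javax.swing.JComponent;
import javax.swing.KeyStroke;

/** Hypothetical controller: each function key toggles one tool group; at most one is shown. */
final class PoxyBoxKeys {
    private final List<JComponent> boxes = new ArrayList<>();
    private JComponent lastShown;

    /** Binds a function key (e.g. KeyEvent.VK_F1) on root to toggle the given box. */
    void bind(JComponent root, int functionKey, String name, JComponent box) {
        box.setVisible(false);
        boxes.add(box);
        root.getInputMap(JComponent.WHEN_IN_FOCUSED_WINDOW)
            .put(KeyStroke.getKeyStroke(functionKey, 0), name);
        root.getActionMap().put(name, new AbstractAction() {
            @Override public void actionPerformed(ActionEvent e) { toggle(box); }
        });
    }

    /** Binds space to toggle the most recently shown box. */
    void bindSpace(JComponent root) {
        root.getInputMap(JComponent.WHEN_IN_FOCUSED_WINDOW)
            .put(KeyStroke.getKeyStroke(KeyEvent.VK_SPACE, 0), "poxy-last");
        root.getActionMap().put("poxy-last", new AbstractAction() {
            @Override public void actionPerformed(ActionEvent e) {
                if (lastShown != null) toggle(lastShown);
            }
        });
    }

    private void toggle(JComponent box) {
        boolean show = !box.isVisible();
        for (JComponent b : boxes) b.setVisible(false);   // only one at a time
        box.setVisible(show);
        if (show) lastShown = box;
    }
}
```

One thing to watch: a window-wide space binding may interact badly with widgets that also use the space bar, such as focused buttons and text fields, so it might need special handling.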

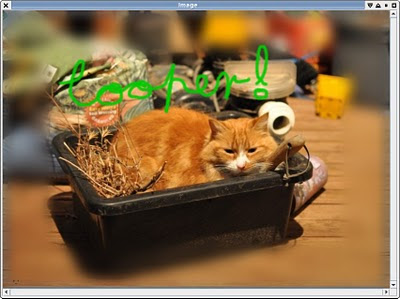

Has Cooper got the pox?

For the painting PoxyBox, for example, I want to have a scratch-pad area where you can actually try out the tool options you've set before using them on the image. Sure, undo works for this but it's a bit clumsy. And as seen with the bluish buttons, you can take a snapshot of the image under the window to poke at, or fill it with the background/foreground colour, transparency, or perhaps the current layer. Then you hit space or the function key and it goes away and leaves you to do what you were doing without filling the screen with clutter. Perhaps the function key can open up a specific one/change the current tool, and space can just revisit the most recently selected one. I should probably put the list of layers in there too. The scratchpad makes a mess of the design I created for connecting tools to an options-setting widget, but I'm sure I can sort that out somehow.

For the affine transform PoxyBox I will have all the details of the affine transform should you wish to enter the details numerically (somewhat like the 'numeric' function in Blender) - so it doesn't have to pop up another window just to show you this. The selection PoxyBox might have a preview of the feathering settings which I always find very hit and miss to judge.

The InternalFrame isn't really what I wanted to use, but so far I couldn't get normal panes to show up on the frame's LayeredPane, and it works for now. A separate top-level window may be the go anyway, and it could practically be a bit bigger too. But then it might get confusing when switching between drawing surfaces, or it might be hard to make it behave consistently with multiple pictures open.

(The name is in indeference[sic] to the IMHO not so awesome "Awesome Bar" from Firefox, and a little more obliquely to the fact that the idea reduces toolbox-pox and popup-pox).

So that's the second rewrite.

I find I always have to rewrite things at least once and usually 3 times to get it pretty well right, at least for problems I haven't encountered before.

So, after writing the post last night (and forgetting to post it) I had a thought about two of the later points - supporting different data types, and implementing line-by-line compositing.

At first I just sat down to jot some ideas down - how would it run in the degenerate case of only processing a line at a time - but ended up writing a whole new compositor. Then I did some timings and it looked pretty reasonable - only a tad faster than the other compositor, but at least it wasn't slower, and now I had something I could throw at threads with abandon. And then I realised I was compositing 8-bit data, which has a fair hit of data conversion, and when I compared the same case I found it was actually 50% faster. Nice. Particularly considering it's doing a whole extra memcpy for every data layer, and a lot (lot) more hit tests, although they are simpler. (Micro-benchmarks are always tricky with HotSpot; maybe it noticed the tests were always true in mine and compiled them out ...)

So the code supports more data formats, executes faster, uses a lot less temporary memory, is trivially easy to convert to run using multiple threads, ... and is about 1/2 the total lines of code. Yes I will give myself a pat on the back. Hmm, that felt odd.

I'm borrowing a few ideas from the way GPUs execute and from some of the research I did on CELL: processing loops are much more efficient when data is accessed in a native format, as the data conversion costs quickly overwhelm simple computations. If you're doing something more than once, it's almost always cheaper to do the data conversion in a separate step, let alone the effort required to write specialised code for every case. So all the algorithms just work with floats as they did before - I didn't need to touch the blending kernels. I just added some batch interfaces to retrieve or store the data line by line into a pre-allocated buffer. This is the only point at which data and format conversion needs to take place. It might not be as fast as technically possible, but it's quite quick, it's a lot less code to write and it's all simpler code as well - and compilers like simple code. It's likely to be more cache friendly too, which CPUs also like. They like that a lot.
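
Here is a minimal sketch of that split, assuming an 8-bit RGBA layer stored as a byte[] with premultiplied alpha. The names (`LineSource`, `ByteLayer`, `LineCompositor`) are hypothetical. The only format conversion happens in getLine()/setLine(), which fill or drain a pre-allocated float line; the blend kernel, a premultiplied 'over' with a layer opacity, only ever sees floats.

```java
import java.util.Arrays;

/** Hypothetical batch interface: move one line at a time into float RGBA. */
interface LineSource {
    int width();
    void getLine(int y, float[] dst);   // dst holds width * 4 floats, RGBA in 0..1
}

/** An 8-bit RGBA layer; the only place a format conversion happens. */
final class ByteLayer implements LineSource {
    final int width, height;
    final byte[] data;                  // RGBA, 4 bytes per pixel, premultiplied (assumed)

    ByteLayer(int width, int height) {
        this.width = width;
        this.height = height;
        this.data = new byte[width * height * 4];
    }

    public int width() { return width; }

    public void getLine(int y, float[] dst) {
        int o = y * width * 4;
        for (int i = 0; i < width * 4; i++) dst[i] = (data[o + i] & 0xff) * (1f / 255f);
    }

    void setLine(int y, float[] src) {
        int o = y * width * 4;
        for (int i = 0; i < width * 4; i++) {
            float v = Math.max(0f, Math.min(1f, src[i]));
            data[o + i] = (byte) Math.round(v * 255f);
        }
    }
}

final class LineCompositor {
    /** Float-only kernel: premultiplied src over dst, with src scaled by a layer opacity. */
    static void over(float[] dst, float[] src, float opacity, int width) {
        for (int i = 0; i < width * 4; i += 4) {
            float sa = src[i + 3] * opacity;
            float k = 1f - sa;
            dst[i]     = src[i]     * opacity + dst[i]     * k;
            dst[i + 1] = src[i + 1] * opacity + dst[i + 1] * k;
            dst[i + 2] = src[i + 2] * opacity + dst[i + 2] * k;
            dst[i + 3] = sa + dst[i + 3] * k;
        }
    }

    /** Composites one output line: convert each layer's line into scratch, then blend. */
    static void compositeLine(int y, LineSource[] layers, float[] opacity, float[] out, float[] scratch) {
        Arrays.fill(out, 0f);
        for (int l = 0; l < layers.length; l++) {
            layers[l].getLine(y, scratch);
            over(out, scratch, opacity[l], layers[l].width());
        }
    }
}
```

Each call composites one output line, so a caller needs only one output line and one scratch line per thread rather than image-sized temporaries.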

Then I spent a good chunk of this afternoon (had some time off work) and evening converting all the rest of the code to use this new compositor, and actually redoing the whole 'layer' object. Filters and effects now have to work differently too - they can either work with the specific types, work generically on data line-by-line, or make whole copies. A lot of operations are easy to convert to line-based operations, and doing so brings a couple of benefits. Firstly, anything requiring temporary memory might only need to store a line of it at a time, and secondly, if the lines can be processed independently (or nearly so) then the work can be split to run across multiple threads.

With little effort I added a thread-dispatching frontend to the compositor, and now it's using all the cores on this machine, which otherwise never seem to get much work to do. I haven't yet converted the gaussian blur, but that will save a lot of memory: I had to extract the packed pixels to padded planes anyway, and now I won't need to do that as a separate step.
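
A thread-dispatching front end for independent lines can be quite small. This sketch is hypothetical and uses a fixed thread pool sized from availableProcessors(); it interleaves rows across threads (row y goes to thread y mod n), which balances uneven work, though contiguous bands would be an equally reasonable choice and may be friendlier to the cache.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.IntConsumer;

/** Hypothetical thread front end: runs an independent per-line job across all cores. */
final class LineDispatcher implements AutoCloseable {
    private final int threads = Runtime.getRuntime().availableProcessors();
    private final ExecutorService pool = Executors.newFixedThreadPool(threads);

    /** Calls job.accept(y) once for every y in [0, height) and waits for all of them. */
    void forEachLine(int height, IntConsumer job) {
        List<Future<?>> parts = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            final int first = t;
            parts.add(pool.submit(() -> {
                for (int y = first; y < height; y += threads) job.accept(y);
            }));
        }
        for (Future<?> f : parts) {
            try {
                f.get();                                   // wait, and surface worker failures
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new RuntimeException(e);
            } catch (ExecutionException e) {
                throw new RuntimeException(e.getCause());
            }
        }
    }

    @Override public void close() { pool.shutdown(); }
}
```

The job must not share scratch buffers between threads; a ThreadLocal<float[]> or one buffer per worker is one way to handle that.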

The drawing tools didn't need any changes - they're just working with BufferedImages wrapping the data. I tried changing the temporary layer to a 4-byte format and the paint tools worked just fine, and somewhat quicker. I tried to leverage a bit more information from the WritableRaster and the SampleModel, but there isn't really much I need from them. I'm limiting the code to a couple of specific image formats for which I know the layout, so the code can go straight to the array rather than through accessors, reducing the address arithmetic, which adds up pretty fast (or perhaps, doesn't add up fast enough).
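
Going straight to the array can still lean on the raster for the layout. This sketch assumes a float raster with a ComponentSampleModel and a single bank; `DirectAccess` is a hypothetical helper, but getSampleModelTranslateX/Y(), ComponentSampleModel.getOffset() and DataBufferFloat.getData() are standard java.awt.image calls. Subtracting the sample model translation keeps it correct for child rasters too.

```java
import java.awt.image.ComponentSampleModel;
import java.awt.image.DataBufferFloat;
import java.awt.image.WritableRaster;

/** Hypothetical helper for direct float[] access to a known raster layout. */
final class DirectAccess {
    /** The float[] behind the raster (bank 0). */
    static float[] array(WritableRaster r) {
        if (!(r.getDataBuffer() instanceof DataBufferFloat))
            throw new IllegalArgumentException("not float-backed");
        return ((DataBufferFloat) r.getDataBuffer()).getData();
    }

    /** Index into array(r) of sample 'band' for pixel (x, y) in raster coordinates. */
    static int sampleIndex(WritableRaster r, int x, int y, int band) {
        if (!(r.getSampleModel() instanceof ComponentSampleModel))
            throw new IllegalArgumentException("unsupported sample model");
        ComponentSampleModel sm = (ComponentSampleModel) r.getSampleModel();
        // Raster coordinates -> sample model coordinates (non-zero for child rasters).
        int sx = x - r.getSampleModelTranslateX();
        int sy = y - r.getSampleModelTranslateY();
        return r.getDataBuffer().getOffset() + sm.getOffset(sx, sy, band);
    }
}
```

Inside a loop you would compute the index once per row and then step by getPixelStride(). Note that pulling the array out with getData() may stop Java2D from caching or accelerating that image.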

Code is piling up quickly; it's hit 8KLOC already, although there's a bit of stale stuff I'm keeping around since nothing is in version control. I had some pretty nasty experiences with Mercurial over the last week for work, so that's fallen heavily out of favour. Heavily. I'm even considering cvs - I know its quirks, and at least it knows how to fucking merge properly, which is only about the most fucking important thing for a fucking source management tool and the only thing it really has to fucking get right (fucking). Not that I need to do any merging with myself. I may rant more on that later, but maybe I've already wasted enough time on it.

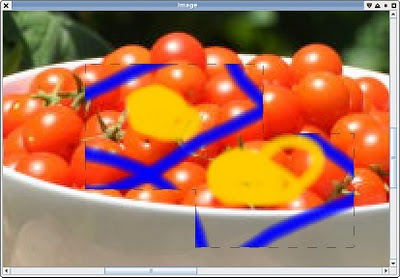

Blurragain

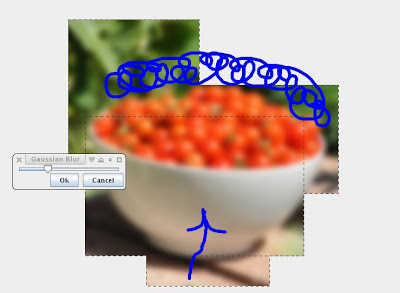

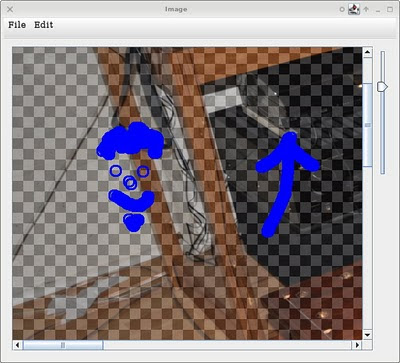

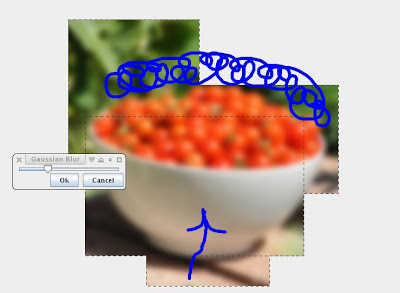

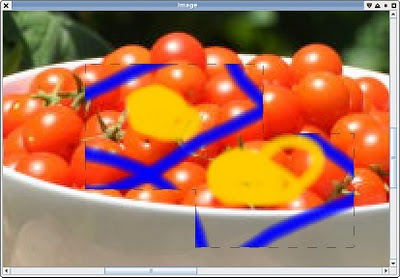

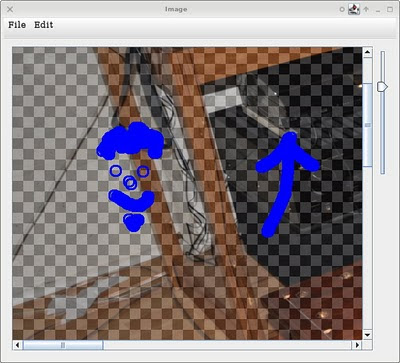

Poked away a bit more at the selection mask and compositing code, and for fun I added the 10 lines of code required to support the various selection operations based on the modifier keys (i.e. union, intersection, exclusive or, subtraction and replace).
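
For soft (anti-aliased or feathered) masks the five modes need some definition. One common fuzzy-set choice, assumed here rather than taken from the post, is max for union, min for intersection, |a - b| for exclusive or and max(0, a - b) for subtraction; for hard 0/1 masks these reduce to the usual boolean operations. The modifier-key mapping in `fromModifiers` is also just an assumption.

```java
/** Hypothetical selection-combining modes on soft (0..1) single-channel masks. */
enum MaskOp {
    REPLACE, UNION, INTERSECT, XOR, SUBTRACT;

    /** Combines the existing mask value a with the new selection value b. */
    float apply(float a, float b) {
        switch (this) {
            case REPLACE:   return b;
            case UNION:     return Math.max(a, b);
            case INTERSECT: return Math.min(a, b);
            case XOR:       return Math.abs(a - b);
            case SUBTRACT:  return Math.max(0f, a - b);
            default:        throw new AssertionError(this);
        }
    }

    /** Assumed mapping from modifier keys to a mode. */
    static MaskOp fromModifiers(boolean shift, boolean ctrl, boolean alt) {
        if (alt) return XOR;
        if (shift && ctrl) return INTERSECT;
        if (shift) return UNION;
        if (ctrl) return SUBTRACT;
        return REPLACE;
    }

    /** Applies this mode to every pixel: mask = op(mask, selection). */
    void combine(float[] mask, float[] selection) {
        for (int i = 0; i < mask.length; i++) mask[i] = apply(mask[i], selection[i]);
    }
}
```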

I still have some issues with the compositing not working quite right for the tool layer when it is active with a mask in replace mode, but I think that's the only bit left now. I fixed the feathering too: it renders the selection mask to a correctly sized image, blurs it and then pastes it back into the actual selection mask. For the blurring I extracted the multi-threaded blur code from the blur tool into a reusable object and made it support single-channel data; both now use it.
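
The feathering steps (copy the relevant part of the mask out, blur it, paste it back) can be sketched as below. The bounds are padded by the blur radius so the blur has room to spread, then clipped to the image. A simple clamped-edge separable box blur stands in for the real gaussian, and `Feather` and its methods are hypothetical.

```java
import java.awt.Rectangle;

/** Hypothetical feathering helper for a single-channel mask of size width x height. */
final class Feather {
    /** Blurs the mask in place around 'bounds': copy out a padded region, blur, paste back. */
    static void feather(float[] mask, int width, int height, Rectangle bounds, int radius) {
        Rectangle r = new Rectangle(bounds);
        r.grow(radius, radius);                                   // room for the blur to spread
        r = r.intersection(new Rectangle(0, 0, width, height));   // stay inside the image
        if (r.isEmpty()) return;
        float[] tmp = new float[r.width * r.height];
        for (int y = 0; y < r.height; y++)
            System.arraycopy(mask, (r.y + y) * width + r.x, tmp, y * r.width, r.width);
        boxBlur(tmp, r.width, r.height, radius);
        for (int y = 0; y < r.height; y++)
            System.arraycopy(tmp, y * r.width, mask, (r.y + y) * width + r.x, r.width);
    }

    /** Separable box blur with clamped edges; a stand-in for a gaussian. */
    static void boxBlur(float[] a, int w, int h, int radius) {
        float[] line = new float[Math.max(w, h)];
        for (int y = 0; y < h; y++) {                        // horizontal pass
            for (int x = 0; x < w; x++) line[x] = a[y * w + x];
            for (int x = 0; x < w; x++) a[y * w + x] = average(line, w, x, radius);
        }
        for (int x = 0; x < w; x++) {                        // vertical pass
            for (int y = 0; y < h; y++) line[y] = a[y * w + x];
            for (int y = 0; y < h; y++) a[y * w + x] = average(line, h, y, radius);
        }
    }

    private static float average(float[] line, int n, int c, int radius) {
        float sum = 0;
        for (int i = c - radius; i <= c + radius; i++) sum += line[Math.max(0, Math.min(n - 1, i))];
        return sum / (2 * radius + 1);
    }
}
```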

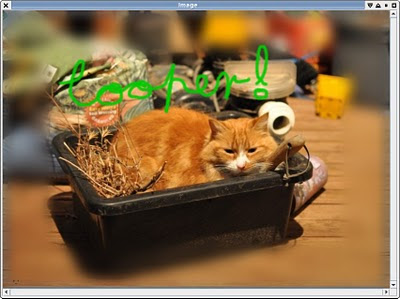

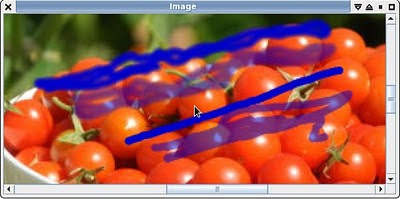

Enough of the tomatoes, time for the cat

I'm surprised I can even remember cursive let alone write it with a mouse on my first attempt.

I started poking at cleaning up the application state - right now it's all going through the toolbox object, which is a singleton. I'm not happy with what I've come up with so far, and actually I'm starting to wonder if I want that type of single toolbox anyway. But I guess I will stick with a single something-or-other object to route the state around to the required parts, i.e. current window, current layer, current tool, etc.
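
One way to do the single state-routing object without a singleton toolbox is a plain object that fires change events, so the parts that care (layer list, tool options, canvas) can listen for just the properties they need. This is a hypothetical sketch using java.beans.PropertyChangeSupport; the Object-typed fields stand in for the application's real window, layer and tool types.

```java
import java.beans.PropertyChangeListener;
import java.beans.PropertyChangeSupport;

/** Hypothetical state router: UI parts listen for the properties they care about. */
final class AppState {
    private final PropertyChangeSupport pcs = new PropertyChangeSupport(this);
    private Object currentWindow, currentLayer, currentTool;   // real types would go here

    void addListener(String property, PropertyChangeListener l) {
        pcs.addPropertyChangeListener(property, l);
    }

    void setCurrentWindow(Object w) {
        Object old = currentWindow;
        currentWindow = w;
        pcs.firePropertyChange("window", old, w);
    }

    void setCurrentLayer(Object l) {
        Object old = currentLayer;
        currentLayer = l;
        pcs.firePropertyChange("layer", old, l);
    }

    void setCurrentTool(Object t) {
        Object old = currentTool;
        currentTool = t;
        pcs.firePropertyChange("tool", old, t);
    }

    Object getCurrentWindow() { return currentWindow; }
    Object getCurrentLayer()  { return currentLayer; }
    Object getCurrentTool()   { return currentTool; }
}
```

PropertyChangeSupport skips the event when the old and new values are equal and non-null, so redundant updates are cheap.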

Hmm, with that sorted maybe I can start thinking about different backends.

Optimistic?

I may have been a bit overoptimistic with the desire to stick to RGBA float images. I did the maths and it gets out of hand very fast - a full-sized image from my camera is over 40MB in uncompressed RGBA/8 format; in float that's pushing 200MB. Add another for the tool layer, another for the compositing buffer and a single-channel image for the selection mask, and suddenly you're well on your way to gig country. There's no real reason for the compositing buffer to be so big; it could be as little as a single line (or a tile; I should make it multi-threaded too), but breaking up the tool layer would be 'tricky'. Even just clearing that much data is a bit of a task (although, perhaps surprisingly, it still runs at a reasonably interactive speed), but again it could limit the area cleared fairly easily.
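
A rough version of that arithmetic, for an image of N pixels (N of roughly 10 million is assumed here purely for illustration, since the camera's resolution isn't stated):

```latex
\begin{aligned}
\text{RGBA, 8 bits per channel:}\quad & 4N \text{ bytes} \approx 40\ \text{MB} \\
\text{RGBA, 32-bit float:}\quad & 4 \times 4N = 16N \text{ bytes} \approx 160\ \text{MB} \\
\text{image} + \text{tool layer} + \text{compositing buffer:}\quad & 3 \times 16N = 48N \approx 480\ \text{MB} \\
\text{plus a float selection mask:}\quad & 48N + 4N = 52N \approx 520\ \text{MB}
\end{aligned}
```

A single-line compositing buffer, by contrast, needs only 16W bytes for an image W pixels wide, e.g. 64 KB for W = 4000.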

So I guess I will have to keep other data types in mind after all, but I will probably not bother implementing them for the time being. OpenCL images can be stored in various formats but still be read directly as floats, so there I could probably do it relatively transparently, at least for 4-channel images. If I ever get there.

Blurdom

Mucked about with a blur tool tonight, a couple of interesting things.

I'm not sure yet if I want to embed the various tools into a panel or into a window, so at the moment the tools each run in their own panel. This actually turned out quite tidy, because I was able to create a very simple 'standard requester' for popping up the tools, which adds ok/cancel buttons, handles the various window events, and then just tells the tool, via a simple interface, whether it's been cancelled or should apply itself. It's pretty ugly to look at for now but that isn't important.

Then I got into creating a working blur tool. Because it's a bit slow and I want it to apply to the live image, I wanted to run it in a thread. One problem I couldn't quite work out is how to finish off (i.e. cancel) if the thread is busy, without blocking the GUI thread. Once it's cancelled it has to return its resources - the tool layer at least - before another tool can activate. And it can't do that if it's still using them ...
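
One common way out of that bind is cooperative cancellation: the GUI thread only sets a flag, and the worker checks it between lines and hands its resources back itself when it stops. This is a hypothetical sketch, not what the post settled on; in a Swing application the release callback would typically post back to the event thread with SwingUtilities.invokeLater, and the next tool would wait for that release before taking the tool layer.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;
import java.util.function.IntConsumer;

/** Hypothetical background tool run: cancel() never blocks; the worker releases resources. */
final class BackgroundToolRun {
    private final AtomicBoolean cancelled = new AtomicBoolean();
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    /**
     * Processes lines until done or cancelled. 'release' runs on the worker thread once it
     * has stopped touching the tool layer, and receives true if the run completed.
     */
    void start(int height, IntConsumer processLine, Consumer<Boolean> release) {
        worker.execute(() -> {
            boolean completed = false;
            try {
                for (int y = 0; y < height; y++) {
                    if (cancelled.get()) return;      // checked once per line
                    processLine.accept(y);
                }
                completed = true;
            } finally {
                release.accept(completed);            // e.g. hand the tool layer back
                worker.shutdown();
            }
        });
    }

    /** Called from the GUI thread: just sets a flag and returns immediately. */
    void cancel() { cancelled.set(true); }
}
```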

For now I decided to leave it running synchronously but have it execute on multiple threads - this box is a 6-core, dual-threaded machine, so there's a lot of unused CPU going on. Pretty easy actually: just ask the system how many threads there are, then break the image up into bands and let each one run amok on its own band. A few judiciously placed calls to a CyclicBarrier and it's all hunky dory. After all the stuff with trying to maximise concurrency in OpenCL this is a piece of piss.
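
The band-splitting approach, with the barrier placed where it's actually needed, might look like this for a separable blur. It's a sketch: a simple clamped-edge box blur on one float channel stands in for the real kernel, and `BandedBlur` is a hypothetical name. Each thread writes only its own rows, but the vertical pass reads rows from neighbouring bands, so every thread must finish the horizontal pass first; that is what the CyclicBarrier enforces.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;

/** Sketch: each thread owns a band of rows; a barrier separates the two blur passes. */
final class BandedBlur {
    static void blur(float[] src, int w, int h, int radius) {
        if (w == 0 || h == 0) return;
        int threads = Math.min(h, Runtime.getRuntime().availableProcessors());
        float[] tmp = new float[w * h];
        CyclicBarrier barrier = new CyclicBarrier(threads);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            int y0 = t * h / threads, y1 = (t + 1) * h / threads;   // this thread's band
            workers[t] = new Thread(() -> {
                // Pass 1: horizontal, src -> tmp, rows [y0, y1) only.
                for (int y = y0; y < y1; y++)
                    for (int x = 0; x < w; x++) {
                        float s = 0;
                        for (int i = -radius; i <= radius; i++) s += src[y * w + clamp(x + i, w)];
                        tmp[y * w + x] = s / (2 * radius + 1);
                    }
                try {
                    barrier.await();   // pass 2 reads tmp rows written by other bands
                } catch (InterruptedException | BrokenBarrierException e) {
                    throw new RuntimeException(e);
                }
                // Pass 2: vertical, tmp -> src, still writing only this band's rows.
                for (int y = y0; y < y1; y++)
                    for (int x = 0; x < w; x++) {
                        float s = 0;
                        for (int i = -radius; i <= radius; i++) s += tmp[clamp(y + i, h) * w + x];
                        src[y * w + x] = s / (2 * radius + 1);
                    }
            });
            workers[t].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();   // caller interrupted; stop waiting
                return;
            }
        }
    }

    private static int clamp(int v, int n) { return Math.max(0, Math.min(n - 1, v)); }
}
```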

That sped it up somewhat, but it still needs to run unhinged from the GUI thread so it doesn't bog things down with large blur radii. I haven't fixed the edges yet either, so it's not really that useful for anything as it stands.

I should probably just snarf the ImageJ blur code, which is quite fast and has a few tricks for scaling such as interpolating down and running a smaller blur kernel after a certain point.

Then I tried it along with the selection tool - the blur applies properly only to the selected area, but obviously I haven't got the compositor working properly yet, since it isn't showing areas outside of the selection mask in 'replace target' mode. I guess that'll need another visit, damn it - I thought I'd worked all that out. Also I'm just blurring the whole image and then applying the mask, but it could presumably just process the selection bounds.

It's more these fiddly details I'm trying to bed down than the tools themselves at this point, but I had the code there already so I thought I'd add another menu item.

Late last night I was playing with it on my laptop to see what the performance and memory use were like (not that the laptop is completely gutless). At one point I noticed it was starting to have trouble keeping up with the mouse by the time I had 16 layers. Oh well, big deal, it wasn't that bad. Then I remembered I'd run the JVM in interpreter mode to see how the memory usage fared ... so given that, it wasn't so bad really.

Finally had a bit of warmth in the sun today. Been hanging out for that. Sat outside for a while cooking and eating a steak on the bbq with a bottle of beer, getting some sunlight. I think it was still fairly cold 'outside', but it's a sheltered spot so it was quite pleasant.

Too cold.

Bit tired (more than the usual tired-as-hell that I always am); it was a long week last week (a few really late nights). And I've got a deadline to meet Thursday, so potentially this week as well, although I feel I'm pretty on top of things unless something unexpected turns up.

I managed to find a few hours over the weekend to hack on ImageZ again and start filling out some of the UI and basic guts. I'm still staying away from the GPU acceleration until I get a few more basic bits working.

I've implemented more of the toolbox and hooked some of it up. I also added a bunch of blend modes taken from this page on Photoshop blending maths, although I've only done about half. I keep mucking about with the layer compositor and breaking things, so they don't all work right now, although since I did have them working at one point I presume it's the compositor's fault this time.

I've written a layer list including icons which update on another thread, although I still haven't got it displaying transparent areas properly. Actually I haven't got them displaying properly in general - in the process of fixing the blending modes I broke it.

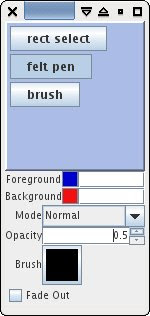

I even did some fugly 'programmer designed' icons - you have to start somewhere. The coloured buttons are just an experiment, and make it look a bit gaudy on purpose.

More than a passing resemblance to The Gimp I guess, but at this stage I don't have a lot of other ideas and it isn't terribly important. Although I'm starting to have a few, at least with respect to keeping things simpler and trying to get rid of all those stacks of popups that riddle your screen on your every action. The hidden main menu I'm liking more and more too.

Behind the scenes I spent a lot of time trying to get the blending modes right - I just kept stuffing up the maths since I'm doing pre-multiplied alpha. I redid the compositor a couple of times, first so I could clean up the way the tool layer works and make it more useful, and then to add the selection mask. And the last time I think I stuffed up the blending mechanics again, so some of the modes don't work. It's one of those first-simple-thing-you-wrote things that turns into the hairy, hoary crux of the whole application.
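
For reference, one formulation of separable blend modes with premultiplied colours comes from the W3C Compositing and Blending specification. With premultiplied source/destination colours cs, cd, alphas as, ad, un-premultiplied colours Cs, Cd and a mode function B, the result is co = cs*(1 - ad) + cd*(1 - as) + as*ad*B(Cd, Cs), and the alpha is ao = as + ad*(1 - as). This may differ in detail from the Photoshop page mentioned above. Substituting each mode's B removes the divisions, as in this sketch (`PremulBlend` is a hypothetical name):

```java
/** Premultiplied-alpha blend modes; s = source (upper layer), d = destination (backdrop). */
final class PremulBlend {
    interface Mode { float channel(float sc, float sa, float dc, float da); }

    // normal:   B = Cs           -> co = cs + cd*(1 - as)
    static final Mode NORMAL   = (sc, sa, dc, da) -> sc + dc * (1 - sa);
    // multiply: B = Cd*Cs        -> as*ad*B = cs*cd
    static final Mode MULTIPLY = (sc, sa, dc, da) -> sc * (1 - da) + dc * (1 - sa) + sc * dc;
    // screen:   B = Cd+Cs-Cd*Cs  -> co = cs + cd - cs*cd
    static final Mode SCREEN   = (sc, sa, dc, da) -> sc + dc - sc * dc;

    /** Blends one premultiplied RGBA pixel s onto d, in place. */
    static void blend(Mode m, float[] s, float[] d) {
        float sa = s[3], da = d[3];
        for (int c = 0; c < 3; c++) d[c] = m.channel(s[c], sa, d[c], da);
        d[3] = sa + da * (1 - sa);             // the same for every separable mode
    }
}
```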

For each layer you can request a single temporary layer which becomes the tool layer. You draw to that, and the image code will automagically blend that temporary layer into the image as it composites, either as an application on the current layer (e.g. for a drawing tool) or as a replacement for the current layer (e.g. for a filter operation). When the tool is done with it, it either gets automatically applied/merged to the layer or dropped, and the temporary layer is put back in a small cache and cleared. This way it doesn't have to allocate/clear a big image-sized layer every time you hit the mouse button, and you're unlikely to notice any delays it might add when you release the button.
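
The acquire/release cycle for the tool layer could be as simple as this hypothetical sketch: a small bounded free list of image-sized float arrays. The layer is cleared when it's released (at mouse-up), so the next mouse-down gets a ready-to-use layer without allocating or clearing. The class name and the `maxCached` limit are assumptions.

```java
import java.util.ArrayDeque;
import java.util.Arrays;

/** Hypothetical cache of image-sized float layers reused as tool layers. */
final class ToolLayerCache {
    private final int size;                      // floats per layer (width * height * 4)
    private final int maxCached;
    private final ArrayDeque<float[]> free = new ArrayDeque<>();
    int allocations;                             // counts real allocations, for illustration

    ToolLayerCache(int width, int height, int maxCached) {
        this.size = width * height * 4;
        this.maxCached = maxCached;
    }

    /** Mouse press: reuse a cleared layer if one is available. */
    synchronized float[] acquire() {
        float[] layer = free.poll();
        if (layer == null) {
            layer = new float[size];             // new arrays are already zeroed
            allocations++;
        }
        return layer;
    }

    /** After the tool's result has been merged or dropped (e.g. on mouse release). */
    synchronized void release(float[] layer) {
        if (free.size() >= maxCached) return;    // cache full: let the GC have it
        Arrays.fill(layer, 0f);                  // clear now so the next acquire is instant
        free.push(layer);
    }
}
```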

As can be seen above, the selection mask works, although I broke the code which does the feathering when I added the mask to the rendering pipeline (I changed it from being just big enough to matching the image size, to simplify the compositing). A few more lines of code and I'll have all the various selection merging modes working (union, intersection, difference, etc) as well as all the polygonal shapes (ellipse, free-hand, etc). Select by colour etc. may be a bit trickier, at least in the drawing-a-box-around-it department (a mask is a mask is a mask; that part is the same). I will probably resort to some sort of bitblit operation for that (i.e. threshold, then xor a shifted version of the thresholded mask against itself).
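
The threshold-then-xor trick for outlining an arbitrary mask can be sketched on boolean arrays as follows (`MaskOutline` is a hypothetical name). A pixel is marked when its thresholded value differs from its right or lower neighbour, which is what xoring the mask against copies of itself shifted by one pixel produces; pixels outside the image count as unselected.

```java
/** Sketch of the threshold-then-xor edge trick for drawing a selection outline. */
final class MaskOutline {
    /** True where the thresholded mask differs from its right or lower neighbour. */
    static boolean[] edges(float[] mask, int w, int h, float threshold) {
        boolean[] in = new boolean[w * h];
        for (int i = 0; i < in.length; i++) in[i] = mask[i] >= threshold;
        boolean[] edge = new boolean[w * h];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int i = y * w + x;
                boolean right = x + 1 < w && in[i + 1];   // outside the image = unselected
                boolean down = y + 1 < h && in[i + w];
                edge[i] = (in[i] ^ right) | (in[i] ^ down);
            }
        }
        return edge;
    }
}
```

The marked pixels straddle the boundary (inside along the right and bottom edges, outside along the left and top), which is usually fine for drawing marching ants.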

Too cold

I'm sick of this shithouse freezing weather; it's just so cold I don't want to leave the house. I really need to get out and do some exercise - I've put on a few kilos in the last few weeks because I've spent so much time sitting in front of a keyboard only exercising my brain.

Probably time for a break, although I just turned the TV on and it reminded me why I've been doing so much hacking lately!

Hungover, wild storms, ...

... so what better to do than spend the whole weekend hacking on code.

So since last time I have kept poking at my 'graphics editing' programme, and a few things are finally starting to come together. I think enough even to give it a name, for which I decided ImageZ worked well.

Say Hello, ImageZ

I had another try at the Java2D compositing system, but this time I wrote my own alpha compositor designed just for the float format I'm using. Much, much faster; still slower than the custom code, but it might be an option, although I have other plans going forward. I also fixed my alpha blending code - I kept finding the tool-layer blend darkened when I applied it to the target layer. I wasn't pre-multiplying alpha properly, nor applying alpha properly. Now it works nicely.
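
A custom compositor plugs into Java2D through the Composite and CompositeContext interfaces. This sketch of a premultiplied 'src over' with an opacity assumes both rasters are 4-band float rasters holding premultiplied values in 0..1; `FloatSrcOver` is a hypothetical name, and it isn't necessarily how the post's compositor works. It processes one row at a time via Raster.getPixels() and WritableRaster.setPixels().

```java
import java.awt.Composite;
import java.awt.CompositeContext;
import java.awt.RenderingHints;
import java.awt.image.ColorModel;
import java.awt.image.Raster;
import java.awt.image.WritableRaster;

/** Sketch: premultiplied "src over" on 4-band float rasters with values in 0..1. */
final class FloatSrcOver implements Composite {
    private final float opacity;

    FloatSrcOver(float opacity) { this.opacity = opacity; }

    @Override
    public CompositeContext createContext(ColorModel srcCM, ColorModel dstCM, RenderingHints hints) {
        return new CompositeContext() {
            @Override
            public void compose(Raster src, Raster dstIn, WritableRaster dstOut) {
                int w = Math.min(src.getWidth(), dstIn.getWidth());
                int h = Math.min(src.getHeight(), dstIn.getHeight());
                float[] s = new float[w * 4], d = new float[w * 4];
                for (int y = 0; y < h; y++) {        // one row at a time keeps buffers small
                    src.getPixels(src.getMinX(), src.getMinY() + y, w, 1, s);
                    dstIn.getPixels(dstIn.getMinX(), dstIn.getMinY() + y, w, 1, d);
                    for (int i = 0; i < w * 4; i += 4) {
                        float k = 1f - s[i + 3] * opacity;
                        for (int c = 0; c < 4; c++) d[i + c] = s[i + c] * opacity + d[i + c] * k;
                    }
                    dstOut.setPixels(dstOut.getMinX(), dstOut.getMinY() + y, w, 1, d);
                }
            }

            @Override public void dispose() { }
        };
    }
}
```

A real version would also need to check (or convert) the incoming ColorModels, since Java2D may hand over a source raster in a non-float format.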

I got the paint applicator tool working - it just steps along the drawing line and puts a dab of paint every time it's travelled a certain distance. This allowed me to very easily write a 'texta tool' and a 'fuzzy brush' tool in half a dozen lines of code each, just by changing the Paint applied to the dot; the fuzzy one just has a radial texture. Since the paint itself is applied using a Shape, it can be anything, so this covers a fair whack of the drawing end of things. And it won't take much to add pen jitter, or to implement an airbrush or bitmap brushes.
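
The 'dab every so often' applicator mostly comes down to carrying the leftover distance from one mouse event to the next, so the spacing stays even however the events arrive. A minimal sketch with hypothetical names: the dab is any Shape centred on the origin, and whatever Paint is set on the Graphics2D (a flat colour, a RadialGradientPaint for a fuzzy brush, and so on) is what gets applied.

```java
import java.awt.Graphics2D;
import java.awt.Shape;
import java.awt.geom.AffineTransform;
import java.awt.geom.Point2D;
import java.util.ArrayList;
import java.util.List;

/** Sketch of a dab-based applicator: one dab every 'spacing' units along the stroke. */
final class DabApplicator {
    private final double spacing;            // must be > 0
    private final Shape dab;                 // centred on (0,0), e.g. an Ellipse2D
    private double carry;                    // distance travelled since the last dab
    private Point2D last;

    DabApplicator(Shape dab, double spacing) {
        this.dab = dab;
        this.spacing = spacing;
    }

    /** Mouse press: reset and place the first dab. */
    List<Point2D> begin(Point2D p) {
        last = p;
        carry = 0;
        List<Point2D> out = new ArrayList<>();
        out.add(p);
        return out;
    }

    /** Mouse drag: dab centres between the last point and p, keeping leftover distance. */
    List<Point2D> moveTo(Point2D p) {
        List<Point2D> out = new ArrayList<>();
        double dx = p.getX() - last.getX(), dy = p.getY() - last.getY();
        double len = Math.hypot(dx, dy);
        double t = spacing - carry;          // distance along this segment to the next dab
        while (t <= len) {
            out.add(new Point2D.Double(last.getX() + dx * t / len, last.getY() + dy * t / len));
            t += spacing;
        }
        carry = len - (t - spacing);         // distance past the last dab placed
        last = p;
        return out;
    }

    /** Fills the dab shape at each centre using whatever Paint is set on g. */
    void paint(Graphics2D g, List<Point2D> centres) {
        for (Point2D c : centres)
            g.fill(AffineTransform.getTranslateInstance(c.getX(), c.getY()).createTransformedShape(dab));
    }
}
```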

I have the backend but not the front end for the selection tool. It can select arbitrarily shaped regions with all the boolean operators applied to each sub-selection (including exclusive or), along with feathering. I just used the Area class to implement most of the logic, and then spent way too much time trying to get a gaussian blur running properly. I'm not sure how I'll go about displaying the selection. The Gimp draws lines around individual pixels, which is sort of interesting, although it doesn't really work well at showing anti-aliasing or feathering. I can use the path object to draw lines for the square, ellipse and 'free select' tools, but that won't work for select-by-colour and so on.
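
The boolean sub-selection logic maps directly onto java.awt.geom.Area, whose add, subtract, intersect and exclusiveOr methods modify the area in place. A hypothetical wrapper:

```java
import java.awt.Shape;
import java.awt.geom.Area;

/** Sketch of building a selection from sub-shapes with java.awt.geom.Area. */
final class ShapeSelection {
    enum Op { REPLACE, ADD, SUBTRACT, INTERSECT, XOR }

    private Area area = new Area();

    /** Combines a new sub-selection (rectangle, ellipse, free-hand path...) with the current one. */
    void apply(Op op, Shape s) {
        Area a = new Area(s);
        switch (op) {
            case REPLACE:   area = a; break;
            case ADD:       area.add(a); break;
            case SUBTRACT:  area.subtract(a); break;
            case INTERSECT: area.intersect(a); break;
            case XOR:       area.exclusiveOr(a); break;
        }
    }

    Area area() { return area; }
}
```

Feathering would then be a matter of rendering the Area into a mask and blurring it.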

Selection contents - union of two rectangular regions with feathering.

I had a look at saving files in a way which preserves the layers. I was just going to write a zip file with the layers stored in separate 'standard' file formats. Unfortunately I couldn't get anything to save out the float images (I'm not sure what the TIFF saver expects, or if I need to get JAI for that), so I'm not sure what to do there. There are quite a few Java image libraries so I probably just have to look around. I had a go at writing an OpenEXR loader since that is a pretty simple format that supports floats. The file format is nice and easy to parse, and after a few hours I had something which parsed pretty much a whole file. But unfortunately I couldn't work out the format of the line chunks - I was getting something out but the stride was wrong: the image was offset and squashed and stretched, and nothing I tried worked. I'm not sure the C++ code I grabbed for doing the half-float conversion translated to Java 100% correctly either (it should have, assuming the Float functions take the same bit layout for ints). Since I wasn't making much progress and I was getting really tired, I thought I'd better move on to something easier before it got me too bogged down. So no saving for now.
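
For the half-float side, a straightforward decoder is short enough to write out. One layout detail that could produce exactly that kind of stride problem: as I understand the OpenEXR file layout, within each scan line the samples are grouped by channel (all of the first channel's samples for the line, then the next channel's), with channels in the alphabetical order of the header's channel list (A, B, G, R) and little-endian values, rather than interleaved RGBA. This sketch assumes an uncompressed file with four HALF channels and takes only the pixel bytes of one line (after the chunk's y coordinate and size fields); `ExrLine` is a hypothetical name. Newer JDKs (20 and later) also provide Float.float16ToFloat.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Sketch: decode half floats and de-planarise one uncompressed EXR scan line into RGBA. */
final class ExrLine {
    /** IEEE 754 binary16 bits to float (handles zero, subnormals, infinities and NaN). */
    static float halfToFloat(int h) {
        int sign = (h >>> 15) & 1, exp = (h >>> 10) & 0x1f, mant = h & 0x3ff;
        float v;
        if (exp == 0) v = Math.scalb((float) mant, -24);              // zero / subnormal
        else if (exp == 31) v = mant == 0 ? Float.POSITIVE_INFINITY : Float.NaN;
        else v = Math.scalb(1f + mant / 1024f, exp - 15);              // normal
        return sign == 1 ? -v : v;
    }

    /**
     * 'pixels' is assumed to hold 'width' HALF samples of A, then B, then G, then R
     * (alphabetical channel order), little-endian; output is interleaved RGBA floats.
     */
    static void decodeLine(byte[] pixels, int width, float[] rgba) {
        ByteBuffer bb = ByteBuffer.wrap(pixels).order(ByteOrder.LITTLE_ENDIAN);
        int[] target = {3, 2, 1, 0};                // A, B, G, R -> index within an RGBA pixel
        for (int c = 0; c < 4; c++) {
            for (int x = 0; x < width; x++) {
                int bits = bb.getShort() & 0xffff;
                rgba[x * 4 + target[c]] = halfToFloat(bits);
            }
        }
    }
}
```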

I also had a go at adding a frequency-domain convolution mechanism. The speed is ok - visually within 1-2x the speed of the Gimp for a gaussian blur, and I think it's using 2 threads for the FFT most of the time (about 0.5s for an 800x600 RGBA image). But with big blur factors or big motion blur you get the edges bleeding in (although it still runs at the same speed), so I need to pad the data first (extend pixels I guess?). The mathematical neatness of it is nice though, and it allows for some interesting things that can't be done with a spatial-domain convolution kernel.
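
FFT convolution is circular, so whatever falls off one edge wraps round to the other, which is where the bleeding comes from. Padding each plane by at least the kernel radius with replicated edge pixels (the 'extend pixels' option), convolving, then cropping back is one standard fix. A minimal sketch of the padding step, with a hypothetical `EdgePad` helper; in practice the padded size would usually also be rounded up to a size the FFT handles efficiently.

```java
/** Sketch: pad a single-channel plane by replicating edge pixels before an FFT convolution. */
final class EdgePad {
    /** Returns a (w + 2*pad) x (h + 2*pad) plane whose border repeats the nearest edge pixel. */
    static float[] pad(float[] src, int w, int h, int pad) {
        int pw = w + 2 * pad, ph = h + 2 * pad;
        float[] out = new float[pw * ph];
        for (int y = 0; y < ph; y++) {
            int sy = Math.max(0, Math.min(h - 1, y - pad));      // clamp to the nearest row
            for (int x = 0; x < pw; x++) {
                int sx = Math.max(0, Math.min(w - 1, x - pad));  // clamp to the nearest column
                out[y * pw + x] = src[sy * w + sx];
            }
        }
        return out;
    }
}
```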

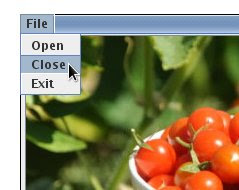

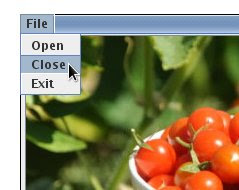

Then today I got really side-tracked. I really, really hate having menus attached to every window. It's just such a huge waste of space, ugly and so on - and the 'animated slide to hide' crap just shits me off no end since it just gets in the way. So I had a go at trying to work out how to display AmigaOS-style menus for Swing applications. They're hidden till you hit the menu button, then they work like any other, but it allows that part of the screen to be used for other things. After a very long journey of dead-ends I finally have something that works remarkably well. I had to add a mouse listener to the glass pane of every window, and I have to manually track and route mouse events. Basically I created a simple sub-class of JMenuBar that uses a PopupFactory to present it as a popup menu instead, and closes it once the selection has been made. I can position that anywhere on the screen, e.g. at the top a-la-AmigaOS, although for now I'm sticking to putting it at the top-left of the window, because the top-left of a dual-screen display is a bit of a distance away. It is no doubt rather hacky and almost certainly not portable, but it's still bloody fucking cool.

So that's where the menu went!

I even hacked up the JFileChooser so that it opens in 'details mode' and a lot taller (why do they open it up so unusably small by default?). For that I have to walk the widget tree and then programmatically 'press' the details button. This makes it look basically the same as the ASL file requester (AmigaOS again), although unfortunately it isn't nearly as nice to use. The ASL one let you navigate easily from the keyboard without having to tab around to every single gadget (e.g. press the up or down cursor whilst the filename gadget has focus and it moves the selected item in the file list whilst dropping it into the file entry for editing; hit return on a drawer and it opens it rather than giving your application a 'file' it can't use). It also handled running asynchronously much better (the GNOME one is getting dreadfully slow).
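
Walking the widget tree is a small recursive search over Container.getComponents(). This hypothetical helper finds the first matching component and 'presses' a button with AbstractButton.doClick(); matching on tooltip text is an assumption, and the details button's tooltip (or whatever else identifies it) depends on the look and feel and the locale.

```java
import java.awt.Component;
import java.awt.Container;
import java.util.function.Predicate;
import javax.swing.AbstractButton;

/** Sketch: depth-first search of a Swing component tree. */
final class WidgetFinder {
    /** Returns the first component (root included) matching the predicate, or null. */
    static Component find(Component root, Predicate<Component> match) {
        if (match.test(root)) return root;
        if (root instanceof Container) {
            for (Component child : ((Container) root).getComponents()) {
                Component hit = find(child, match);
                if (hit != null) return hit;
            }
        }
        return null;
    }

    /** Finds the first button with the given tooltip and clicks it programmatically. */
    static boolean press(Component root, String tooltip) {
        Component c = find(root, k -> k instanceof AbstractButton
                && tooltip.equals(((AbstractButton) k).getToolTipText()));
        if (c == null) return false;
        ((AbstractButton) c).doClick();
        return true;
    }
}
```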

I had a bit of a time trying to work out how to get the image window to open at the right size. revalidate() is the key here. Although big images now open bigger than the screen :( And no matter what setMaximumSize() values I use, it makes no difference. Known bug.

I got zoom working. A lot easier than I thought it would be in the end. I just had to add an AffineTransform to the drawImage() call, do a little bit of scaling so the flattened image updates properly based on paint events and vice versa, and finally scale the mouse events for the tools. Then it just worked. Simple. Really fast too - basically instantaneous - since the only thing scaled is the backing image on its way to the screen. I have it hooked up to the keys 1-8 for now, although I don't have it centring nicely when you zoom yet.
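
The zoom scheme amounts to one AffineTransform used three ways: forwards in drawImage(), inverted for mouse events, and forwards again to turn damaged image areas into screen areas. A minimal sketch with a hypothetical `ZoomView` class, using the standard Graphics2D.drawImage(Image, AffineTransform, ImageObserver) and AffineTransform.inverseTransform() calls; centring and scroll offsets are left out.

```java
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.geom.AffineTransform;
import java.awt.geom.NoninvertibleTransformException;
import java.awt.geom.Point2D;
import java.awt.image.BufferedImage;

/** Sketch of zooming by transform: scale on the way to the screen, invert for the mouse. */
final class ZoomView {
    private final AffineTransform view = new AffineTransform();

    void setZoom(double scale) { view.setToScale(scale, scale); }

    /** Paint: only the flattened backing image is scaled; the layers are untouched. */
    void paint(Graphics2D g, BufferedImage flattened) {
        g.drawImage(flattened, view, null);
    }

    /** Mouse: map a screen point back into image coordinates for the tools. */
    Point2D toImage(Point2D screen) {
        try {
            return view.inverseTransform(screen, null);
        } catch (NoninvertibleTransformException e) {
            throw new IllegalStateException(e);   // only possible with a zero scale
        }
    }

    /** Damage: map an image-space rectangle to the screen area that needs repainting. */
    Rectangle toScreen(Rectangle imageArea) {
        return view.createTransformedShape(imageArea).getBounds();
    }
}
```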

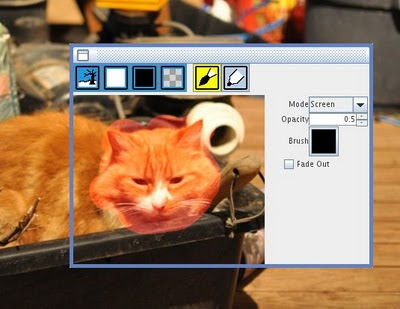

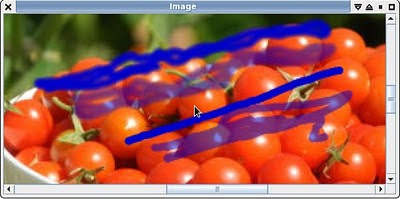

2x Zoom, with the two paint tools so far and different opacity settings.

I have a small toolbox and was fighting with Netbeans earlier in the night trying to get the widgets laid out nicely. Not entirely successfully. There's some painful stuff when you try getting any of the various layouts like GridBagLayout to size to their content, and Netbeans doesn't let you set glue in BoxLayouts. I will probably just resort to hand-coding the widgets; I guess there isn't really that much that needs doing anyway. I don't have it all hooked up, and I only have 'normal' blending mode in that menu, but I do have enough backend to implement the options shown.

Yes, the toolbox has a menu too (hidden).

I'm quite pleased with the progress so far - I have had some extremely late nights so it's sucked up quite a few hours (and I've been doing plenty of hours for work too; I work to forget). I'm not that happy with the way the layer window is implemented and how the tools are interacting with the image. I probably need a 'tool layer' as part of the image somewhere and not as part of the tools. And likewise 'the toolbox is the state' isn't really clean - although that's more a matter of re-factoring into some other static state class.

I'm also pretty pleased with the performance, considering I haven't exactly done much in the way of optimisation and everything apart from the FFT is only using one thread. I'm throwing float images around like nobody's business, and apart from using a keg of memory it's all nice and snappy. (It's not really fair to compare a full app to a tech demo, but certain things like the image window are noticeably snappier when magnified, probably because it's not bothering with tiles, Java does a bunch of multi-threading behind the scenes, and it doesn't need to deal with event polling all the time either.) That's a little surprising, since I'm using a non-standard format which is at least an order of magnitude slower for Java2D than a standard one. Part of the reason for playing with this was to have something I could accelerate using OpenCL, but at least right now it seems barely necessary (until I get some complex filters going). I'm kind of in two minds now - whether I just take out the float stuff and see how well it can go when using a supported backend format, or whether I look at moving most of the pipeline to OpenCL for the fun of it (or OpenGL I suppose, but that misses the point of what I'm trying to do). I wasn't originally going to support different data formats, but perhaps if I think about it a bit more I can find a way to experiment with multiple pipelines without adding a whole pile of support framework. I will keep most of the 'tool layer' or structured graphics layers (text, etc) CPU side regardless, so it might be fairly easy to do.

Got a work deadline in under two weeks, so I might be a bit busy for a little while :-(

Java2D and Float Images

So much for a day off, well I didn't get too wrapped up in work nor too wrapped in coding but I did dabble a bit. It was one of those crappy cold days - no wind, just no sun and a seeping cold that gets into your bones and turns your toes numb and fingers stiff.

I did finally work out one thing, or maybe re-worked it out: how to create Java BufferedImages backed by a floating point buffer (and I'll get the details out of the way first).

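```java
import java.awt.color.ColorSpace;
import java.awt.image.*;

class FloatImageExample {
    public static void main(String[] args) {
        int width = 1024, height = 768;

        // Backing store: 4 floats per pixel, RGBA order, rows packed end to end.
        float[] data = new float[width * height * 4];

        ColorSpace cs = ColorSpace.getInstance(ColorSpace.CS_sRGB);
        // hasAlpha = true, isAlphaPremultiplied = false
        ColorModel cm = new ComponentColorModel(cs, true, false,
                ColorModel.TRANSLUCENT, DataBuffer.TYPE_FLOAT);

        // pixelStride = 4 samples, scanlineStride = width * 4, band offsets R=0, G=1, B=2, A=3
        SampleModel sm = new ComponentSampleModel(DataBuffer.TYPE_FLOAT, width,
                height, 4, width * 4, new int[]{0, 1, 2, 3});

        DataBufferFloat db = new DataBufferFloat(data, data.length);
        WritableRaster wr = Raster.createWritableRaster(sm, db, null);
        BufferedImage bimage = new BufferedImage(cm, wr, false, null);
    }
}
```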

Each pixel is then stored in the array data[] in the order R, G, B, A. With the backing array, image ops can work directly on the float data (FFT convolution anyone?), or you can get a Graphics2D from bimage and work with that.

I was poking at a layered image system, using floating-point RGBA buffers to store everything. I load the image, convert it to the buffers, and run a really crap, really simple multi-pass compositing system to blend the layers into the display. So I have an image I can scroll around and set the alpha of, but what about drawing?
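A really simple compositing pass might look something like the following sketch. It is an assumption, not the original code: each pass blends one layer over an accumulator using the Porter-Duff "over" operator on non-premultiplied colour (matching isAlphaPremultiplied = false in the ColorModel above), with the layer's opacity slider scaling its alpha. LayerCompositor and compositeOver are hypothetical names.

```java
final class LayerCompositor {
    private LayerCompositor() {}

    // Composites src over dst in place; both are interleaved, non-premultiplied R, G, B, A.
    static void compositeOver(float[] dst, float[] src, float opacity) {
        if (dst.length != src.length || dst.length % 4 != 0) {
            throw new IllegalArgumentException("layers must be same-sized RGBA arrays");
        }
        for (int i = 0; i < dst.length; i += 4) {
            float sa = src[i + 3] * opacity;   // effective source alpha
            float da = dst[i + 3];
            float outA = sa + da * (1f - sa);
            if (outA <= 0f) {                  // fully transparent result
                dst[i] = dst[i + 1] = dst[i + 2] = dst[i + 3] = 0f;
                continue;
            }
            // Weighted colour, then divide by alpha to stay non-premultiplied.
            for (int c = 0; c < 3; c++) {
                dst[i + c] = (src[i + c] * sa + dst[i + c] * da * (1f - sa)) / outA;
            }
            dst[i + 3] = outA;
        }
    }
}
```

For a stack of layers, clear an accumulator array to zero and call compositeOver once per layer from bottom to top. Working in non-premultiplied form costs a divide per channel; storing premultiplied data would remove it, at the cost of changing the ColorModel.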

I recall trying to get float-backed buffers working before without much luck, so I was going to use Java2D to write to a byte or short buffer and then just convert that over (good enough for what I want). But I did finally work out the float buffers, so I don't need to, despite the documentation saying that 'TYPE_FLOAT' is just a placeholder. It's actually even better, since I can attach a BufferedImage to any layer's float array and then use the nice Java2D API to write to it directly: there goes most of a 'drawing application'. It only needs a bit of damaged-area tracking to get the result onto the screen efficiently.
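A minimal sketch of the damaged-area tracking, assuming a single bounding rectangle per repaint is good enough: each draw reports the area it touched, and the display code takes the union and recomposites and repaints only that region. DamageTracker is a hypothetical name; Rectangle.union and Rectangle.isEmpty are standard java.awt.Rectangle methods.

```java
import java.awt.Rectangle;

final class DamageTracker {
    private Rectangle damage;  // null means nothing is dirty

    // Records that the given area of the layer changed.
    synchronized void add(Rectangle r) {
        if (r.isEmpty()) {
            return;  // union() with an empty rectangle gives surprising results, so skip it
        }
        damage = (damage == null) ? new Rectangle(r) : damage.union(r);
    }

    // Returns the accumulated damage (or null if none) and resets the tracker.
    synchronized Rectangle take() {
        Rectangle d = damage;
        damage = null;
        return d;
    }
}
```

After g.fill(shape), a caller would do something like tracker.add(shape.getBounds()), growing the rectangle by a pixel or so (Rectangle.grow) to cover anti-aliased edges and stroke width. A single union can over-repaint when two small changes are far apart; keeping a short list of rectangles is the usual refinement.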

Currently I'm still converting the composited float image to INT_RGBA, since that draws a bit faster than the float-backed image itself, but the difference isn't huge.
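The float-to-integer conversion step might look like this sketch. It assumes the display image is a BufferedImage.TYPE_INT_ARGB (packed 0xAARRGGBB, non-premultiplied), which is presumably what 'INT_RGBA' refers to here; FloatToArgb, convert and convertInto are hypothetical names. Each 0..1 channel is clamped and rounded to 0..255.

```java
import java.awt.image.BufferedImage;

final class FloatToArgb {
    private FloatToArgb() {}

    // Clamps a 0..1 float to 0..255 with rounding; NaN maps to 0.
    private static int toByte(float v) {
        if (!(v > 0f)) return 0;
        if (v >= 1f) return 255;
        return (int) (v * 255f + 0.5f);
    }

    // Converts interleaved RGBA floats into packed 0xAARRGGBB ints.
    static void convert(float[] src, int[] dst) {
        if (src.length < dst.length * 4) {
            throw new IllegalArgumentException("source too small");
        }
        for (int p = 0, i = 0; p < dst.length; p++, i += 4) {
            dst[p] = toByte(src[i + 3]) << 24 | toByte(src[i]) << 16
                    | toByte(src[i + 1]) << 8 | toByte(src[i + 2]);
        }
    }

    // Converts a whole float RGBA buffer into a TYPE_INT_ARGB image.
    static void convertInto(float[] src, BufferedImage argb) {
        int w = argb.getWidth(), h = argb.getHeight();
        int[] pixels = new int[w * h];
        convert(src, pixels);
        argb.setRGB(0, 0, w, h, pixels, 0, w);
    }
}
```

convertInto uses setRGB rather than writing to the image's DataBufferInt directly; grabbing the backing int[] with getData() would be faster but can stop Java2D from caching the image in accelerated memory.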

Tada... a 2-layer image: the photo is at about 70% opacity (set by the slider on the right) with the 'background' showing through, and the top layer was drawn to using Java2D (I forgot to turn on anti-aliasing, but that's trivial to add). Actually, Java has its own compositing mechanism, so I can probably throw those few lines of code away too. Update: tried this. Way too slow. Never mind.

It doesn't do much, but then it didn't take a lot of code to do it either.

XBMC beagle, GSOC 2010

Well, I 'promised' an update on the beagleboard GSoC 2010 XBMC whatsit, and since we've just had the mid-terms and I have some spare time, it seems like a good point to poke it out.

The good news of the day is that Tobias passed the mid-terms well. Although I haven't had a huge amount of time to devote to it, he has thankfully worked very well independently. He's been working well with both the xbmc and beagleboard communities, finding relevant experts to help with the task, which has let me off the hook quite a bit. He's had to spend a lot of time just on the beagleboard environment, which was an unavoidable pain since the hardware arrived late, and xbmc is a mammoth bit of code that takes an age and a half to compile. But most of the code so far has gone into changing the rendering system from a game-like render-all loop to a damage-based system, which could be done on a PC. There are still bugs, but it's getting there. The patches look nice, and he keeps the committed code building (just as well, since it takes hours to build on the target).

He's started on the video overlay system now, so I'm expecting some big improvements. Some initial timing suggests it's spending nearly 60% of its time in the 'gpu' doing YUV conversion (I'm not sure what resolution he's running it at). The video overlay will do that conversion for free, and more, since it also significantly reduces the memory bandwidth requirements.

XBMC basically 'runs' on the beagleboard now, but it can only play quite low-resolution video and there are a few issues with missing text; still, it does run. With a simpler theme and the video overlay work, I'm hoping it will at least reach the level of an SD-video media player. The XM might even manage 720p for simpler video formats like MPEG-2. Although it's out of scope for this stage of the project, there's also the DSP sitting idle at the moment, so the hardware is capable of quite a bit more yet.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!