About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

XBMC BeagleBoard GSOC '10 Wrap Up

GSOC 2010 is coming to an end and the final assessments have been made, so it's about time I posted an update on the result and my experiences.

The Story So Far ...

XBMC had been compiled to run on the BeagleBoard but wasn't really practical to use. The menus and video ran poorly, at under 10fps even for low-resolution video, and in general it wasn't usable.

Part of the reason is that the rendering system is written as a game loop - everything is drawn every frame, and it relies on relatively powerful video hardware to run at a reasonable rate.

The Goal

To speed up the menus and video playback to the point where it might be usable, or at least to demonstrate that this could be possible.

The Approach

The initial aim was to reduce the load on the graphics rendering subsystem by reducing the amount of work it was given: ideally working towards an event-driven widget system, but at least not redrawing things that haven't changed from frame to frame. Although this was much of the initial proposal and took most of the time, the resulting improvements were only modest. The menus did speed up somewhat, but full-screen video wasn't markedly changed, since there are usually no graphics to draw over it anyway.

Two other suggestions became key to improving performance. One was to use the video overlay hardware in the BeagleBoard to perform the video-to-RGB conversion and image scaling. The other was to come up with a more modest theme which didn't tax the system so much, with fewer animations and large background images.

The Results

The good news first. You can now play small videos smoothly, and navigating the menus on their own is responsive enough to be usable. On this alone the project was clearly a success - 11 weeks is a very short time to do much of anything, and certainly not enough to debug someone else's software.

Whilst playing a video in the background the menus still have some issues - things slow down quite badly and the video overlay (well, under-lay) doesn't work terribly well. I think the custom theme should help with this, though.

The main menu in the default theme.

Unfortunately I hit a pretty major bug when trying the custom theme made for GSOC, so I had to leave it with the (mostly) original theme. This appears to be some issue deep in the lower bowels of XBMC.

Default theme problems

Even the default theme had some major problems, though. The screenshot above is the video source menu, but it's only showing the text: you cannot see the mouse pointer when it is stationary, and the keyboard does not highlight the current menu item.

Not being an XBMC user, I'm not terribly familiar with the controls, which makes it a bit difficult to use when you can't see them much of the time. I seem to hit a lot of stability problems when trying to quit a video and start another one. And if things don't shut down cleanly you can be left with a frame of video or a blank screen covering everything.

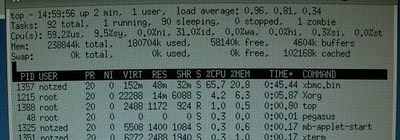

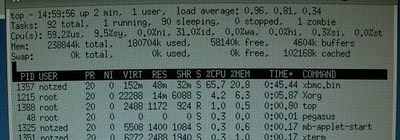

Playing at least a low-resolution video from local media works quite well - there is no tearing, the scaling looks quite acceptable, and the video and audio generally keep pretty good sync. The screenshot above was taken whilst playing a 640x480 24fps MPEG-1 video, and it has at least got enough headroom to keep the video playing.

Playing music seems to have unusually high CPU usage, but it is still under 50%. And it plays quite well across the network from a MediaTomb server I have.

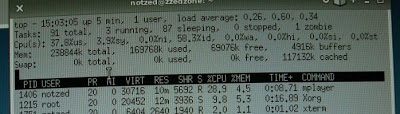

So for comparison, here are the top results for my favourite reliable video player, mplayer, on the same video. There's obviously still some way to go on the video pipeline front, since they're both using ffmpeg for the decoding (afaict). That said, mplayer tears noticeably, so it isn't terribly great either.

Also for comparison, mplayer is able to play 576p (720x576 at 25fps) MPEG-2 recordings from a digital tuner without dropping frames, at under 90% CPU utilisation. And I believe that is only using the CPU (and presumably Xvideo overlays). XBMC cannot keep up with this and doesn't degrade terribly gracefully (audio stutters, video remains black, and the menus become unresponsive).

Where to now?

Obviously there is still a lot of work to be done before it's practical as a media player. If the issues with the themes and some general stability problems were fixed, it could at least be used in a limited way - e.g. as a remote player for a small screen or an analogue TV (and the lower resolution is a big help for front-end performance). With more work it could certainly make it as an SD media player, and as an HD (720p) one on the BeagleBoard XM (the next-gen board that was released just a few days ago). The DSP is also sitting idle, so the hardware is capable of quite a bit more.

From the very limited amount of profiling I did, it appears the XBMC codebase is littered with snippets of less-than-ideal code which eventually add up. For example, the background images are scaled using a simple nested for loop and put/get pixel routines, which gets very slow as the resolution of the interface is scaled up (the simple fix here is theme tweaking). The audio is being remixed when I believe it doesn't need to be, since the audio bitrate is flexible, and so on and so forth.
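To make the per-pixel cost concrete, here is a rough sketch of the general technique. It is written in Java rather than XBMC's C++ and is not taken from XBMC's code: a nearest-neighbour scale done through per-pixel get/set calls, next to the same scale on plain int arrays, with the source column lookups computed once per image. The class and method names are made up for illustration.

```java
import java.awt.image.BufferedImage;

class ScaleSketch {
    // Naive: one getRGB/setRGB call (with colour-model conversion) per
    // destination pixel, plus the coordinate division every time.
    static BufferedImage scaleSlow(BufferedImage src, int dw, int dh) {
        BufferedImage dst = new BufferedImage(dw, dh, BufferedImage.TYPE_INT_ARGB);
        for (int y = 0; y < dh; y++)
            for (int x = 0; x < dw; x++)
                dst.setRGB(x, y, src.getRGB(x * src.getWidth() / dw, y * src.getHeight() / dh));
        return dst;
    }

    // Array-based: source columns are looked up once per image, and each row
    // is a tight copy loop over plain int[] pixel data.
    static int[] scaleFast(int[] src, int sw, int sh, int dw, int dh) {
        int[] xmap = new int[dw];
        for (int x = 0; x < dw; x++)
            xmap[x] = (int) ((long) x * sw / dw);
        int[] dst = new int[dw * dh];
        for (int y = 0; y < dh; y++) {
            int srow = (int) ((long) y * sh / dh) * sw;
            int drow = y * dw;
            for (int x = 0; x < dw; x++)
                dst[drow + x] = src[srow + xmap[x]];
        }
        return dst;
    }
}
```

Both produce the same pixels. The second simply avoids a method call and a colour-model round trip per pixel, which is the kind of overhead that grows with interface resolution.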

Reports from Tobias suggest there are opportunities to tune the calls to the graphics library to improve performance, e.g. by splitting up large textures into smaller segments. Some of the GL library is still implemented in software, so ideally you'd avoid those code paths. The XM board also has updated hardware which implements more of the API directly, so it has different tuning characteristics and just runs faster.

And from the way it runs, I gather the media loop is still a bit too tightly coupled to the front end. Given that the video is rendered on a separate surface to the menus, there's the potential for them to be completely decoupled, although apparently that isn't going to be easy.

XBMC is a very big piece of software that appears to be muddled together from a lot of separate pieces tied together with Python. Although a lot of the code seems pretty decent, I wonder if it isn't straining a little under its own weight, particularly on a machine with limited capabilities. And well, Python ...

My own experiences

GSOC was, I guess, an 'interesting' experience. I think what made it most strange for me was that it was for software of which I am not a maintainer (or even a contributor or user). Not having intimate knowledge of the software and its specific internal goals made it hard to judge the direction things should move in. Fortunately Tobias had a better idea of that than me, was good at finding things out on his own, and took directions well. And I know a thing or two about GUI programming, and a bit about video, so I still had some important tips to offer. I got side-tracked with work and other projects as well, and the timezone difference (~10 hours) didn't help, so I didn't have as much time as I thought I might when I initially put my name down - but I don't think that had any effect on Tobias's progress, and I made a point of keeping up with the few mail conversations we had.

I also had a strange problem whose source I still haven't identified - all of a sudden the code just wouldn't run any more. Odd errors about pixel shaders not compiling and bogus messages in the log file. I tried recompiling multiple times, updating the OS, upgrading the OS - in the end, switching to another spare board fixed it. I'm not convinced it's a hardware issue since everything else was working OK, but I haven't had the time to track it down.

The BeagleBoard/TI fellows were decent and quite helpful. Jason deserves special mention for managing this all for the first time and doing a pretty good job. Others helped chair the IRC meetings when he was unavailable, which can't have been much fun as nobody seemed terribly attentive at them.

The Google side of things wasn't exactly inspiring - not a great deal of direction on things such as grading (although again, mentoring a project I was more involved in would have helped), and an utterly dreadful piece of software they use to manage it all. It was almost enough to make me want to give up before it even started, but thankfully, after using it constantly early on, you only had to use it a couple more times after that. As a general mentor (not the boss-man) there wasn't really any direct interaction with Google anyway.

GSOC Tips

For students - make sure you're doing something you're actually interested in. You really should have some free software experience under your belt already, ideally with the project in question. Experience with source control, email, IRC and remote development is pretty important. I can't imagine too many mentors would like someone doing it merely because they could, or out of curiosity - they probably want a real outcome, and hopefully a long-term contributor to their pet project. And make sure you really have the time to dedicate to it - university, family, or other personal commitments can very quickly eat into the limited time you have available.

For mentors - choose wisely. From the sounds of it the beagleboard projects all went pretty well, and there was certainly a lot of deliberation over which projects to choose. Also, don't waste time on students who can't even be bothered to submit a full proposal to start with or who have ideas a little too crazy (Beagleboard seemed to get a lot of these - I think because it had a hardware/software component and only a few specifically targeted software projects). I'd personally avoid anyone who looks like they're just doing it for school, or the pittance of money on offer.

I'm kind of in two minds about whether the whole thing is a terribly good idea. If you need such encouragement to discover the joy of hacking then maybe it isn't for you - most projects are looking for programmers all the time, so it isn't hard to find something to play with. Although I guess they aren't always terribly welcoming. Perhaps on that last point it has some merit, since it forces organisations to get their shit together a bit wrt novice contributors, although I imagine for many of them it isn't actually worth the effort.

Bits and pieces

Finally a day with a bit of sunshine ... did a backlog of washing, pulled weeds for 3 hours, mowed the nature strip and started emptying out a garden shed I need to move. All in all a productive day.

I spent most of the rest of the week since the last post hacking away on ImageZ, although I took it a bit easier with not so many late nights, and had room to fit in an extended binge drinking session with a couple of mates. I guess next week I'll be back to work again.

Tough life for some ...

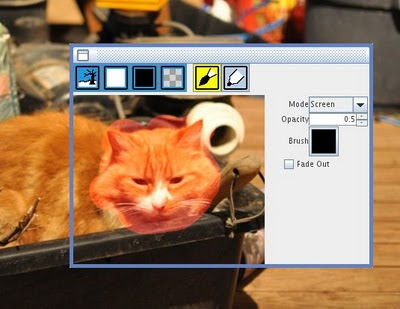

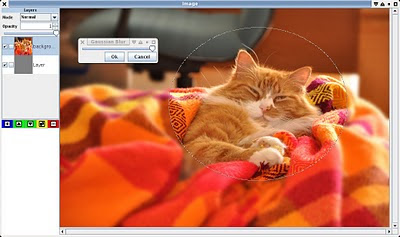

Toolbar

I bit the bullet and put a 'tool bar' in. I kinda hate those things ... but the alternative was lots of popup windows, so I don't think I had much choice. The thing is, if you're working with layers you really need them handy, so it seemed the obvious place to put them. It also means I can link them with the document, which simplifies a few things both for the code and for using it. At least you can show/hide the whole thing with a single keypress. I may still change it to an internal window. Not sure what else I will put there - I suppose something about the current tool may as well go there too, which might mean less need for popup tool selectors ...

Ellipse Select

I added ellipse selection - very easy. And hooked up a few menus for select all/clear/invert selection. I started looking at editing the 'current' ellipse/rectangle but haven't gotten very far yet - it adds two invisible hit-boxes at the start/end points that let you drag it around, so the mechanics are there; I just need to present it somehow. Since I need similar facilities for structured objects I may be able to re-use the code, or at least how it works.
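A selection mask along these lines is one way the ellipse, select-all, clear and invert operations could fit together. This is a hypothetical sketch: the SelectionMask name and the float-per-pixel layout are assumptions, and it does no anti-aliasing or feathering. It uses java.awt.geom.Ellipse2D to test pixel centres.

```java
import java.awt.geom.Ellipse2D;

class SelectionMask {
    final int width, height;
    final float[] alpha; // 0 = unselected, 1 = fully selected

    SelectionMask(int width, int height) {
        this.width = width;
        this.height = height;
        this.alpha = new float[width * height];
    }

    // Replace the selection with the ellipse inscribed in the drag rectangle
    // (x0,y0)-(x1,y1), testing each pixel centre; no anti-aliasing.
    void selectEllipse(double x0, double y0, double x1, double y1) {
        Ellipse2D e = new Ellipse2D.Double(Math.min(x0, x1), Math.min(y0, y1),
                Math.abs(x1 - x0), Math.abs(y1 - y0));
        for (int y = 0; y < height; y++)
            for (int x = 0; x < width; x++)
                alpha[y * width + x] = e.contains(x + 0.5, y + 0.5) ? 1f : 0f;
    }

    void selectAll() { java.util.Arrays.fill(alpha, 1f); }

    void clear() { java.util.Arrays.fill(alpha, 0f); }

    // Works for partial (feathered) values too, not just 0/1.
    void invert() {
        for (int i = 0; i < alpha.length; i++)
            alpha[i] = 1f - alpha[i];
    }
}
```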

State tracking

I fixed most of the state tracking issues - now the window that contains the drawing surface is listening to mouse events and re-routing them to the current tool, rather than the tool listening itself.

Found a workable method for the menu item actions to find out which window invoked them, so I added accelerator keys to them, and added all the tools to a menu item too.
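The re-routing described above can be sketched as a single listener, owned by the drawing surface, that forwards to whatever tool is current. The Tool interface and ToolRouter class are hypothetical names; the point is that tools no longer register listeners on windows themselves.

```java
import java.awt.event.MouseAdapter;
import java.awt.event.MouseEvent;
import java.util.function.Supplier;

// Hypothetical tool interface; the method names are illustrative only.
interface Tool {
    void press(int x, int y);
    void drag(int x, int y);
    void release(int x, int y);
}

// Installed once on each drawing surface. The surface owns the listener and
// asks for the current tool at the moment each event arrives.
class ToolRouter extends MouseAdapter {
    private final Supplier<Tool> currentTool;

    ToolRouter(Supplier<Tool> currentTool) {
        this.currentTool = currentTool;
    }

    @Override public void mousePressed(MouseEvent e)  { currentTool.get().press(e.getX(), e.getY()); }
    @Override public void mouseDragged(MouseEvent e)  { currentTool.get().drag(e.getX(), e.getY()); }
    @Override public void mouseReleased(MouseEvent e) { currentTool.get().release(e.getX(), e.getY()); }
}
```

It would be installed with canvas.addMouseListener(router) and canvas.addMouseMotionListener(router). Because AWT keeps delivering drag events to the component where the button was pressed, a stroke that wanders over another window stays with the window that started it.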

Blend Modes

They `broke' again when I added the blend mode and opacity to the layer viewer (yeah, I hooked those up too). Turns out I wasn't pre-multiplying alpha for the result (which wasn't necessary for the tool layer). So this has hopefully fixed all those problems ... until the next lot come along. Oh, also I had started with a checker-board pattern as the base data before starting the composition ... which was a bit of a mistake, even though it looks the same for normal blend modes. Now I just blend the result onto a checker-board pattern once I have a composed result.
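For reference, the premultiplied "over" operator is out = src + dst * (1 - srcAlpha), applied to every channel including alpha. Showing transparency then means blending the finished composite over the checker-board only at display time. A minimal sketch with float RGBA arrays; the layout, method names and checker greys are assumptions, not ImageZ's code.

```java
class Composite {
    // Source-over for premultiplied RGBA floats, in place on dst.
    static void over(float[] dst, float[] src) {
        for (int i = 0; i < dst.length; i += 4) {
            float k = 1f - src[i + 3];
            for (int c = 0; c < 4; c++)
                dst[i + c] = src[i + c] + dst[i + c] * k;
        }
    }

    // Display only: put the finished, premultiplied composite over an opaque
    // checker-board and pack it as ARGB. Doing this last, rather than
    // starting the layer stack from the checker-board, keeps non-normal
    // blend modes from ever seeing the checks.
    static int[] toDisplay(float[] rgba, int width, int height, int cell) {
        int[] argb = new int[width * height];
        for (int y = 0; y < height; y++)
            for (int x = 0; x < width; x++) {
                int p = y * width + x, i = p * 4;
                float bg = ((x / cell + y / cell) & 1) == 0 ? 0.8f : 0.6f;
                float k = 1f - rgba[i + 3];
                int r = Math.round((rgba[i] + bg * k) * 255f);
                int g = Math.round((rgba[i + 1] + bg * k) * 255f);
                int b = Math.round((rgba[i + 2] + bg * k) * 255f);
                argb[p] = 0xFF000000 | (r << 16) | (g << 8) | b;
            }
        return argb;
    }
}
```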

Undo

Moved the undo tracking to the image itself rather than have a global one. I'm still tossing up whether I use the Swing UndoManager (which lets me track state changes from other swing objects), or stick to my own which is simpler ...
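If the Swing UndoManager wins, an image edit could be wrapped as an AbstractUndoableEdit. This is a sketch under assumptions: the flat float layer and the additive delta are hypothetical, while javax.swing.undo.UndoManager, AbstractUndoableEdit and their undo()/redo() methods are the real Swing API.

```java
import javax.swing.undo.AbstractUndoableEdit;
import javax.swing.undo.CannotRedoException;
import javax.swing.undo.CannotUndoException;

// Hypothetical edit: an additive delta (after - before) for a run of floats
// starting at 'offset' in a layer's data.
class DeltaEdit extends AbstractUndoableEdit {
    private final float[] layer, delta;
    private final int offset;

    DeltaEdit(float[] layer, int offset, float[] delta) {
        this.layer = layer;
        this.offset = offset;
        this.delta = delta;
    }

    @Override public void undo() throws CannotUndoException {
        super.undo(); // updates the edit's state; throws if it can't be undone
        for (int i = 0; i < delta.length; i++)
            layer[offset + i] -= delta[i];
    }

    @Override public void redo() throws CannotRedoException {
        super.redo();
        for (int i = 0; i < delta.length; i++)
            layer[offset + i] += delta[i];
    }

    @Override public String getPresentationName() {
        return "Paint";
    }
}
```

One UndoManager per image fits the move away from a global one. Bear in mind that adding and then subtracting a float delta is not always bit-exact, so rounding residue can creep in over many undo/redo cycles.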

Backends, performance

I made a couple of other plain and sparse-tile backends for different data types. Unfortunately the sparse int layer isn't any faster than the sparse float one, since it has to go through the same generic code paths, although I guess it uses less memory. Memory usage is a bit of a problem - it uses a lot. I guess with the GC you can't do much about that, and it's the price you pay for it running quickly. The 'native' int-based backend is very quick though. I still need to do a 64-bit backend (16-bit elements) but that's relatively simple. I stuffed up a bit and my layers are RGBA instead of ARGB - it doesn't really matter since I data-convert anyway ... but maybe it makes that less efficient. It is what OpenCL supports though.
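Assuming 8-bit channels packed into an int (the float layers would need a real conversion), RGBA and ARGB differ only by a one-byte rotation, so the conversion itself is cheap. A minimal sketch; the class name is made up.

```java
class PixelOrder {
    // Packed RGBA (R in the top byte) to packed ARGB: rotating right by one
    // byte moves A from the low byte to the top byte.
    static int rgbaToArgb(int rgba) {
        return Integer.rotateRight(rgba, 8);
    }

    // ARGB back to RGBA.
    static int argbToRgba(int argb) {
        return Integer.rotateLeft(argb, 8);
    }

    // In-place conversion of a whole row, e.g. before handing it to Java2D.
    static void rgbaToArgb(int[] row) {
        for (int i = 0; i < row.length; i++)
            row[i] = Integer.rotateRight(row[i], 8);
    }
}
```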

Threads

I've played with a lot of different ideas to do with threading. Right now drawing is on the event thread - if there's too much to draw it starts dropping mouse events. I played with running the layer composite rebuild on another thread, and running the tool rendering on another thread. Hmm, there are various trade-offs here and I can't say I've settled on anything yet. Given that the custom image types are so slow to draw to using Graphics2D, I may have to consider using one of the built-in 8-bit types as a tool layer - for most operations this is more than adequate (the only real place I can think of where it isn't is fine gradients).

Text layer

Actually I want more of a 'structured graphics' layer. Well, ... that's really a whole project in itself. I just played a bit with the low-level text APIs, which can do word-wrap and so on. Text is always a bit of a pain and this is no exception. I'll surely be able to do everything I want, but there are a lot of APIs to learn first, and adding the right level of front-end is the hard bit.

Ultimately I want there to be a layer type that contains more than text - possibly multiple text and graphics objects. Yeah, I'm dreamin' here ...

I/O

Still no real save (I have some test code but it isn't hooked up). I came across the OpenRaster format, which is pretty much what I was going to do anyway - except the XML bit. So I'll probably use that for compound images. Friggan XML.
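As I understand the OpenRaster spec, a file is a zip whose first entry is an uncompressed mimetype file containing image/openraster, followed by stack.xml and the layer PNGs. The sketch below writes that minimal structure with java.util.zip and ImageIO. It leaves out the thumbnail (and merged image) that the spec also asks for, so treat it as a starting point to check against the spec rather than a conforming writer. OraWriter and the layer naming are hypothetical.

```java
import java.awt.image.BufferedImage;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.zip.CRC32;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;
import javax.imageio.ImageIO;

class OraWriter {
    // layers.get(0) is written first in stack.xml, which (as I read the spec)
    // makes it the topmost layer.
    static void write(OutputStream out, int w, int h, List<BufferedImage> layers) throws IOException {
        ZipOutputStream zip = new ZipOutputStream(out);

        // "mimetype" goes first and STORED (uncompressed), so its size and
        // CRC must be set before the entry is opened.
        byte[] mime = "image/openraster".getBytes(StandardCharsets.US_ASCII);
        CRC32 crc = new CRC32();
        crc.update(mime);
        ZipEntry mimeEntry = new ZipEntry("mimetype");
        mimeEntry.setMethod(ZipEntry.STORED);
        mimeEntry.setSize(mime.length);
        mimeEntry.setCrc(crc.getValue());
        zip.putNextEntry(mimeEntry);
        zip.write(mime);
        zip.closeEntry();

        // The XML bit: one <layer> per PNG, all at the origin.
        StringBuilder xml = new StringBuilder("<?xml version='1.0' encoding='UTF-8'?>\n");
        xml.append("<image w=\"").append(w).append("\" h=\"").append(h).append("\">\n<stack>\n");
        for (int i = 0; i < layers.size(); i++)
            xml.append("  <layer name=\"layer").append(i)
               .append("\" src=\"data/layer").append(i).append(".png\" x=\"0\" y=\"0\"/>\n");
        xml.append("</stack>\n</image>\n");
        zip.putNextEntry(new ZipEntry("stack.xml"));
        zip.write(xml.toString().getBytes(StandardCharsets.UTF_8));
        zip.closeEntry();

        // Layer pixels as PNG; ImageIO.write does not close the zip stream.
        for (int i = 0; i < layers.size(); i++) {
            zip.putNextEntry(new ZipEntry("data/layer" + i + ".png"));
            ImageIO.write(layers.get(i), "png", zip);
            zip.closeEntry();
        }
        zip.finish(); // writes the central directory without closing 'out'
    }
}
```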

`Light' version

Still thinking about possibly doing a light version of the interface. Maybe drop out the layers and just support 8 bit RGBA (for memory use and simpler i/o). Mostly so I can test out ideas without being bogged down in complexity and try to get something I can use.

Clipboard, screen capture

Well, after writing all the above and forgetting to post, I looked into capturing the screen, and when I found out that was so simple I moved on to the clipboard. Capturing the whole screen is one line of code, but finding where a given window is seems to be beyond Java. So I cheated a bit: I invoke xwininfo and read its output from stdout. That pops up a cross suspiciously like the one GIMP uses to select frames for grabbing, and then spits out some window details. A simple loop parses the lines and extracts the window bounds, and now I can grab windows too, at least on GNU systems. I still need to add a little requester asking for some details, but that's a piece of piss.
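The xwininfo trick could look roughly like this. It relies on xwininfo printing lines such as "Absolute upper-left X:  100" and "Width: 640"; the WindowPicker name and the parsing approach are my own, and java.awt.Robot.createScreenCapture does the actual grab.

```java
import java.awt.Rectangle;
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.Reader;

class WindowPicker {
    // Pull the window's screen bounds out of xwininfo's "Key: value" lines;
    // anything else in the output is ignored.
    static Rectangle parse(Reader text) throws IOException {
        int x = 0, y = 0, w = 0, h = 0;
        BufferedReader in = new BufferedReader(text);
        for (String line; (line = in.readLine()) != null; ) {
            String[] kv = line.trim().split(":\\s*", 2);
            if (kv.length != 2) continue;
            switch (kv[0]) {
                case "Absolute upper-left X": x = Integer.parseInt(kv[1].trim()); break;
                case "Absolute upper-left Y": y = Integer.parseInt(kv[1].trim()); break;
                case "Width":                 w = Integer.parseInt(kv[1].trim()); break;
                case "Height":                h = Integer.parseInt(kv[1].trim()); break;
                default: break;
            }
        }
        return new Rectangle(x, y, w, h);
    }

    // Runs xwininfo, which waits for the user to click on a window.
    static Rectangle pick() throws IOException, InterruptedException {
        Process p = new ProcessBuilder("xwininfo").start();
        Rectangle r = parse(new InputStreamReader(p.getInputStream()));
        p.waitFor();
        return r;
    }
}
```

The grab itself would then be new java.awt.Robot().createScreenCapture(WindowPicker.pick()), which of course needs a running X display.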

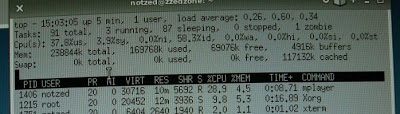

Merged ellipse fuzzy select pasted into everyone's favourite free image editor.

Clipboard support turned out to be almost as easy, so I added that too. I've done it before using gtk+ and basically it works the same way - negotiated content using mime types - but all the details are done for you and you end up with a BufferedImage if that's what you're after in a line of code.
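A sketch of the clipboard side, assuming you want a TYPE_INT_ARGB BufferedImage whatever the source supplied. DataFlavor.imageFlavor and Transferable are the real java.awt.datatransfer API; the ClipboardImages helper and its conversion step are assumptions.

```java
import java.awt.Graphics2D;
import java.awt.Image;
import java.awt.datatransfer.DataFlavor;
import java.awt.datatransfer.Transferable;
import java.awt.datatransfer.UnsupportedFlavorException;
import java.awt.image.BufferedImage;
import java.io.IOException;

class ClipboardImages {
    // Returns the offered image as TYPE_INT_ARGB, or null if there is none.
    // Typical call:
    //   toImage(Toolkit.getDefaultToolkit().getSystemClipboard().getContents(null))
    static BufferedImage toImage(Transferable t) throws IOException {
        if (t == null || !t.isDataFlavorSupported(DataFlavor.imageFlavor))
            return null;
        try {
            Image img = (Image) t.getTransferData(DataFlavor.imageFlavor);
            if (img instanceof BufferedImage
                    && ((BufferedImage) img).getType() == BufferedImage.TYPE_INT_ARGB)
                return (BufferedImage) img;
            // Assumes the image is already fully loaded, so its size is known.
            int w = img.getWidth(null), h = img.getHeight(null);
            if (w <= 0 || h <= 0)
                return null;
            BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_ARGB);
            Graphics2D g = out.createGraphics();
            g.drawImage(img, 0, 0, null);
            g.dispose();
            return out;
        } catch (UnsupportedFlavorException e) {
            return null;
        }
    }
}
```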

I did come across one issue (bug?): if I alpha-select something in GIMP it pastes fine, but if I alpha-select something in ImageZ, paste it into GIMP and then copy it from GIMP again, it comes back with no alpha by default. It looks like the image has been converted to low-quality JPEG as well. If I explicitly ask for a PNG file it works fine, but then I suspect it will force all selections to 8 bit even with internal copy/paste ... (assuming it isn't already). I guess nothing's perfect.

Well I guess that's enough for one weekend, off for a long ride in the hills to try to burn off some of this winter/hacking fat (although with beer, grog and pizza possibly at the end of it, it may not go far toward that end).

Mistakes and milestones

I had a bit more of a poke around the SampleModel and WritableRaster classes last night and worked out it wasn't too difficult to add some optimised code-paths for the sparse tile data-buffer. With that in place it's pretty comparable on small images to a simple flat array and there's still a few tweaks left. So today I filled that stuff out a bit and wrote some better getLine() code for the compositor and did a bit of profiling and playing.

Then I thought I'd tackle undo - just for the image edits. It turned out to be very simple (in hindsight perhaps not so surprising - it isn't like editing a tree where you have complex data structures to manipulate, it's just a rectangle of bits). I just made a new version of the tool layer composition routine and had it calculate a delta as it went, and that delta is stored in another sparse layer. Because the compositor is not sparse-aware, I added a check in the sparse layer setLine() call to see if a non-existent tile is being written with a row of zeros, and it does nothing if so ... yeah, it's not terribly efficient! The delta, along with some pointers to the relevant objects, is then pushed onto a stack. Undoing or redoing the delta is a simple matter of adding or subtracting it over the target region. Again it isn't sparse-aware, but it could be made so without a lot of work. I'm just doing the edit undo globally for the application (which isn't right!) but that is easy to change.

Lastly I tested how well the deltas compress. I added a step to compress (and discard) the sparse layer delta every time it is saved to the undo stack. For very small edits it's very good - down to 5% or so, and for larger single-colour paints up to about 20% of the original size. A full-sized (1024x700) wide-radius gaussian blur was more like 70% ... but it's probably still worth it. I'll have to find a way to do it lazily in the background from the undo manager though, as it can take a while to run as the data size gets bigger.
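The post doesn't say how the deltas were compressed. One plausible way to measure a ratio like the ones quoted, assuming java.util.zip.Deflater over the raw float bytes of a delta (not necessarily what ImageZ does), is sketched below; the class name and compression level are my own choices.

```java
import java.nio.ByteBuffer;
import java.util.zip.Deflater;

class DeltaCompression {
    // Compressed size divided by raw size for a float delta. Unchanged
    // pixels give runs of zeros, which is why small edits compress so well.
    static double ratio(float[] delta) {
        ByteBuffer raw = ByteBuffer.allocate(delta.length * 4);
        raw.asFloatBuffer().put(delta); // view shares the backing array
        Deflater d = new Deflater(Deflater.BEST_SPEED);
        d.setInput(raw.array());
        d.finish();
        byte[] buf = new byte[8192];
        long compressed = 0;
        while (!d.finished())
            compressed += d.deflate(buf);
        d.end();
        return (double) compressed / raw.capacity();
    }
}
```

Running this off the event thread (for example from a single-thread executor owned by the undo manager) would be one way to get the lazy background compression mentioned above.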

I got a bit sidetracked trying to fit a colour selector and layer list into the paint PoxyBox ... the Java one is quite large. And so now the window is getting a bit big (maybe not too big though). I started working on my own version, but I'm not sure it's a path I really want to go down. I'm also not sure what to do with the layer list - it's something you need handy from every tool, but I want to avoid having a separate window for it that is always around. Might be a job for an internal frame ... maybe. Having one central layer list is a little clumsy if you use focus-follows-mouse like I do, so it might make sense to have it per image somehow.

But I've already found the pox idea works a lot better on my laptop (only 1024x768 screen) than having a separate toolbox which is too easily lost or hidden.

I do need to rethink the way the tools track the current document. Right now there is a central model which tracks the application state. When the mouse enters a window it notifies the model of the change - but this is really broken (e.g. drag a paintbrush outside the current window over another ... nasty things happen). I did have it based on focus, but that didn't seem very reliable either. I probably need to manually re-route window messages to the current tool rather than have the tool listen to the current window (which is clumsy as hell anyway). Menu items are still a bit of a pain since I'm using a single menu across all windows and the actions have no direct context to go by when they fire (although now I think about it, since I'm manually popping them up I already know where they came from).

Now that I've pretty much got the 'guts' I need as a baseline, it's probably time to fix these niggling issues and bed it down a bit more solidly. Which probably means things will shift into low gear for a while, since that stuff can get tedious. Not a huge amount to fix though.

10KLOC

Ahh the milestone. Broken 10KLOC, at least according to wc. I suppose that's ok for 4 weeks of spare time ... must be slipping in my old age.

Sparse Images and Poxy Boxes

Had a bit of a break over the weekend and yesterday but back into it this evening.

I've sorted out the toolbox ideas enough to get something working. It's a little ... odd, I guess. The flow I envisaged isn't quite as clean as I'd hoped, although I imagine it's something you could get used to fairly easily.

Basically F1 and F2 bring up the current Paint or Grab windows, or toggle a given one. If you switch between them the other is hidden so only one is ever visible. If they're visible when you do it the location sticks, otherwise it pops up under the mouse. Space hides whatever is visible or shows the last one hidden. Fairly straightforward at this point.

Now things get a bit weird. When you bring up a given window, the last tool you used from that window is automatically selected. I didn't do this at first, and I kept popping up the paint box and expecting to be able to draw in the scratch pad, so it seemed like an obvious solution. But I'm still not entirely happy with the flow when you switch, for example, between selection and drawing. Perhaps accelerator keys for the tools themselves will simply make this issue go away. And if I do that, then space should probably pop up the poxy of the currently selected tool.
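The key handling above is easier to reason about as a little state model, kept separate from Swing. This is a hypothetical sketch (PoxyState and its method names are invented) covering only the one-visible-box rule, the space toggle and the per-box last tool; where the window appears on screen is left out.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical bookkeeping for the PoxyBox keys; no Swing involved.
class PoxyState {
    private String visible;     // name of the box on screen, or null
    private String lastHidden;  // most recently hidden box, for space
    private String currentTool;
    private final Map<String, String> lastTool = new HashMap<>();

    // F1/F2: toggle the named box. Showing one hides any other, and
    // re-selects the last tool used from the box being shown.
    void functionKey(String box) {
        if (box.equals(visible)) { hide(); return; }
        if (visible != null) hide();
        show(box);
    }

    // Space: hide whatever is visible, otherwise re-show the last one hidden.
    void space() {
        if (visible != null) hide();
        else if (lastHidden != null) show(lastHidden);
    }

    void selectTool(String tool) {
        currentTool = tool;
        if (visible != null) lastTool.put(visible, tool);
    }

    private void show(String box) {
        visible = box;
        String t = lastTool.get(box);
        if (t != null) currentTool = t;
    }

    private void hide() {
        lastHidden = visible;
        visible = null;
    }

    String visible() { return visible; }
    String currentTool() { return currentTool; }
}
```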

Ahh well, things to try. I have at least killed the 'master toolbox' entirely now, so upon launch you're simply presented with an empty image window, from which you can 'new' or 'load' to make it un-empty. Well, apart from a bug that seems to open a new window fairly often instead. I'm not tracking the windows yet, so if you close all the windows it leaves the application lying around, but I guess that won't be terribly difficult to sort out.

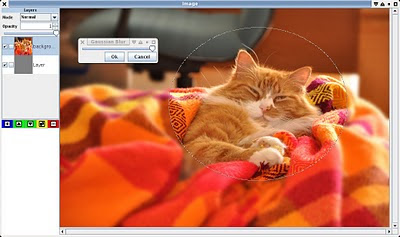

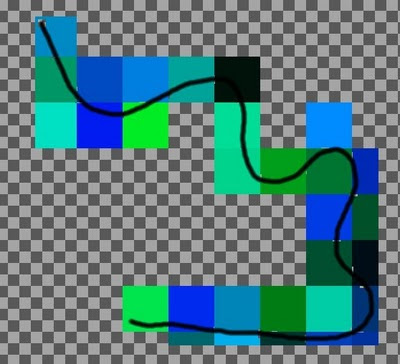

Sparse Images

I also had a quick look at sparse images. Basically the image is backed by an array of tiles and tiles are only created when you write to them. Otherwise they read as cleared pixels (or whatever else is handy I suppose).

Showing the blocks created during the doodle sequence.

Pretty simple stuff - although because of the way the Java graphics system works the address arithmetic is a little messy. It accesses the data storage as a 1D array, so it has to go through a lot of excess arithmetic - flatten a 2d access into a 1d access, then unflatten the 1d access to calculate which tile it's in, and finally re-flatten the tile access itself.
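The flatten/unflatten/re-flatten dance looks roughly like this as a java.awt.image.DataBuffer subclass, which is the hook Java2D's sample models call into. It is a sketch under assumptions: one bank of packed ints, a scanline stride equal to the image width, and power-of-two tiles. getElem(bank, i) and setElem(bank, i, val) are the real abstract methods; SparseTileBuffer and everything else in it are made up.

```java
import java.awt.image.DataBuffer;

// Sparse, tiled storage for one bank of packed int pixels. Tiles are
// allocated on the first non-zero write; unwritten tiles read as 0.
class SparseTileBuffer extends DataBuffer {
    private final int width, tileShift, tileSize, tilesAcross;
    private final int[][] tiles;

    SparseTileBuffer(int width, int height, int tileShift) {
        super(DataBuffer.TYPE_INT, width * height);
        this.width = width;
        this.tileShift = tileShift;
        this.tileSize = 1 << tileShift;
        this.tilesAcross = (width + tileSize - 1) >> tileShift;
        int tilesDown = (height + tileSize - 1) >> tileShift;
        this.tiles = new int[tilesAcross * tilesDown][];
    }

    // Java2D passes a flat index (y * width + x). Un-flatten it to find the
    // tile...
    private int tileIndex(int i) {
        int x = i % width, y = i / width;
        return (y >> tileShift) * tilesAcross + (x >> tileShift);
    }

    // ...then re-flatten the position inside that tile.
    private int offsetInTile(int i) {
        int x = i % width, y = i / width, mask = tileSize - 1;
        return ((y & mask) << tileShift) + (x & mask);
    }

    @Override public int getElem(int bank, int i) {
        int[] t = tiles[tileIndex(i)];
        return t == null ? 0 : t[offsetInTile(i)];
    }

    @Override public void setElem(int bank, int i, int val) {
        int ti = tileIndex(i);
        int[] t = tiles[ti];
        if (t == null) {
            if (val == 0) return; // a zero written to a missing tile changes nothing
            t = tiles[ti] = new int[tileSize * tileSize];
        }
        t[offsetInTile(i)] = val;
    }

    int allocatedTiles() {
        int n = 0;
        for (int[] t : tiles) if (t != null) n++;
        return n;
    }
}
```

A SinglePixelPackedSampleModel of the same size can then read and write pixels through it with getPixel/setPixel(x, y, array, buffer), which is exactly where the divide and modulo per element come from.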

For the bulk transfer functions I've tacked on for the compositor this isn't too bad, since they access it via 2D coordinates anyway - apart from doing a whole row at once (once I've written it properly). Perhaps for Java2D I can write my own WritableRaster and/or SampleModel class to avoid some of these calculations and some of the indirection.

But what I have works (so far) and was pretty easy.

This could open up some interesting possibilities. For starters using it as the 'incremental tool layer' should help with interactive start-up/shut-down speed on big images - no longer does it need to clear or allocate potentially multi-megabyte arrays at any given point. And since most drawing tools only touch a bit of the image at a go it should (hopefully) be fast enough even without optimising. Then for certain file types something similar could be used to implement very very large images (although functions like 'zoom to fit' might be bad). The fact that it's abstracted into a pretty small bit of code that nothing else has to directly deal with is the nicest bit.

I was reading a bit about VIPS last night and that gave me plenty of ideas about handling very large images ... although perhaps for now I should stick to what I have rather than think about parallel pipeline tile based batch image processing. It does sound pretty interesting mind you.

I also had a bit of a look at fotoxx ... which has some quite nice filters and processing abilities. The UI is a little strange though (look who's talking, I guess), but what really takes the cake is the code - about 30KLOC of C inside 2 C++ source files. Somewhat impressive for a GUI-driven multi-threaded application. It's very light on memory, but a few things were a bit clunky and unfortunately I got it to hang trying to flip through a few pages of 10MB images.

Hmm, just about time for sleepy bobo's I think. I have the final evaluation for GSOC to do, probably tomorrow (I should post again on that actually), and I've been sleeping a bit poorly lately - I get reasonable hours down but just wake up exhausted.

JavaGE

Well, being into Java lately, I can't not comment on the Oracle/Google lawsuit, which to me is just another lawsuit between a couple of big companies who made the laws for themselves anyway.

I find it most disingenuous that Google are mounting an obvious PR campaign to somehow turn this into an anti `open sauce' crusade by Oracle. We all know Android is a non-standard knock-off of Java and that Sun always took a very dim view of the whole thing. And Android is not really about 'open sauce' in terms of an open hand-held computing platform that we all hope for, but is really just a way for handset vendors to get a free operating system.

Google know they had to either make a complete JavaSE based system which allows them to take advantage of the classpath exception, or (as I understand it from a few news reports ...) abide by the GPL and make a complete JavaME based system where all software it uses must also be GPL compatible, or license a complete JavaME based system. But no they wanted to go their own route and make a gimped version of Java (JavaGE - Gimped Edition) to lock people into their platform whilst biting their thumb at Sun to their face. It's pretty much what Microsoft tried but without any licence to start with.

Maybe the Oracle case has no merit, but that's for the courts to decide now. It's a pity lawyers had to get involved at all - they simply represent a wealth transfer from all of us to a few of them - since it is we who ultimately have to pay for all these shenanigans.

But regardless of the merits of the case, this has absolutely nothing to do with 'open sauce' or free software. That Google (and their hangers on - many no doubt with a financial interest) are pushing this line when they are most definitely not a free software or 'open sauce' company by any moderate measure is a little offensive. Oracle are greedy money-grabbing cunts too, but at least they're proud of it and don't try to hide the fact that they are.

Pox buster

Had a day off yesterday and although I seemed to spend a lot of time hacking I didn't really get much done. I had a few possibly good thoughts though.

OpenCL/GPU layers

The layer changes I made a few days ago should make GPU layers fairly easy to do without having to rewrite everything ... almost. I added layer-specific damage tracking toward that end, but that's about as far as I got. I'm considering writing a SampleModel which can write directly to the OpenCL buffers (which go through the nio buffer types), which would let the tool layer sit mostly on the GPU too (although there are other options which might be easier).

Undo

I've done undo a few times before for various applications and it's something I don't particularly like doing. I guess I should get over it since it isn't really that difficult.

I was contemplating a sort of fancy undo that recorded what you did as well as the data changed, so that as well as going backwards you could selectively 'turn off' or edit bits you've done and re-execute them, and also use that as the basis of a macro recording system - e.g. being able to run the undo history on a different layer or image. But I think I might hold off on that, or do it separately from the undo mechanism and keep undo simpler.

To implement undo you need to save the changed bits, and for redo you can either save the result bits or details of the operation performed. For most drawing operations this will really just mean that the result bits are also saved. As a first cut I will probably store an exclusive-or or subtracted image as the delta, limited to the bounds of the change, since that will let me go either way from whatever position I'm at in the undo history. It might be compressible too, and I could compress lazily if that turns out to be worth doing.

I think I can fit the delta generation into the composition routine that is used solely to apply tool writes to the target layer - that's one point where both the result and the original are transiently available without having to save the before image separately. Then again, that's only in float form.
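Since the exclusive-or option above is self-inverse, one routine can serve as both undo and redo. A sketch assuming premultiplied float RGBA lines and source-over compositing (neither is confirmed by the post); XORing the raw float bits keeps the round trip exact, which repeated float add/subtract does not guarantee. XorDelta is a hypothetical name.

```java
class XorDelta {
    // Composite a tool line onto the target (source-over, premultiplied)
    // and record before ^ after on the raw bits while both are in hand.
    // Untouched channels give 0, which also compresses well.
    static int[] composeLine(float[] target, float[] tool) {
        int[] delta = new int[target.length];
        for (int i = 0; i < target.length; i += 4) {
            float k = 1f - tool[i + 3];
            for (int c = 0; c < 4; c++) {
                int j = i + c;
                float before = target[j];
                float after = tool[j] + before * k;
                target[j] = after;
                delta[j] = Float.floatToRawIntBits(before) ^ Float.floatToRawIntBits(after);
            }
        }
        return delta;
    }

    // XOR is its own inverse: the same call undoes and redoes the edit.
    static void toggle(float[] target, int[] delta) {
        for (int j = 0; j < target.length; j++)
            target[j] = Float.intBitsToFloat(Float.floatToRawIntBits(target[j]) ^ delta[j]);
    }
}
```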

Tiles

A thought I had whilst not being able to sleep early this morning. I don't really want to do a whole tile-based system ... but it might make sense for the tool layer and mask layer. One issue I have is that clearing/creating big layers for images simply takes a long time, but for the compositor it simplifies things greatly if any tool layers are the same size. With the get/setLine() interfaces I can easily hide the fact the backing store is a sparse set of tiles so at least the rest of the application doesn't need to know. The real issue is whether I can create a Java2D compatible image which dynamically allocates tiles as you write to them ... which I think should be possible by implementing a new SampleModel and DataBuffer. If I can get that sorted then this actually opens up a lot more possibilities such as supporting very very large images by virtualising the storage as well.
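Hiding the tiles behind getLine()/setLine() might look something like this: a hypothetical float layer with fixed-size square tiles, where an all-zero span written over a missing tile is skipped rather than allocating it. The tile size, the SparseLayer name and the interleaved channel layout are assumptions.

```java
// Hypothetical sparse float layer: callers only ever see whole rows of
// width * channels floats; tiles exist only once something non-zero lands.
class SparseLayer {
    static final int TILE = 64; // tile edge in pixels (an assumption)
    final int width, height, channels;
    private final int across;
    private final float[][] tiles;

    SparseLayer(int width, int height, int channels) {
        this.width = width;
        this.height = height;
        this.channels = channels;
        across = (width + TILE - 1) / TILE;
        tiles = new float[across * ((height + TILE - 1) / TILE)][];
    }

    // Copy row y into dst; missing tiles read as zeros.
    void getLine(int y, float[] dst) {
        int ty = y / TILE, row = y % TILE;
        for (int tx = 0; tx < across; tx++) {
            int x0 = tx * TILE, n = Math.min(TILE, width - x0) * channels;
            float[] t = tiles[ty * across + tx];
            if (t == null) java.util.Arrays.fill(dst, x0 * channels, x0 * channels + n, 0f);
            else System.arraycopy(t, row * TILE * channels, dst, x0 * channels, n);
        }
    }

    // Write row y from src, skipping all-zero spans that fall on missing tiles.
    void setLine(int y, float[] src) {
        int ty = y / TILE, row = y % TILE;
        for (int tx = 0; tx < across; tx++) {
            int x0 = tx * TILE, n = Math.min(TILE, width - x0) * channels;
            int ti = ty * across + tx;
            if (tiles[ti] == null) {
                if (allZero(src, x0 * channels, n)) continue;
                tiles[ti] = new float[TILE * TILE * channels];
            }
            System.arraycopy(src, x0 * channels, tiles[ti], row * TILE * channels, n);
        }
    }

    private static boolean allZero(float[] a, int from, int n) {
        for (int i = from; i < from + n; i++)
            if (a[i] != 0f) return false;
        return true;
    }

    int allocatedTiles() {
        int n = 0;
        for (float[] t : tiles) if (t != null) n++;
        return n;
    }
}
```

Because the compositor only ever deals in rows, it never needs to know the tiles exist, and a mostly-empty tool or mask layer costs almost nothing to create or clear.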

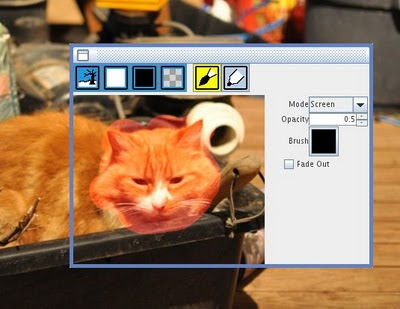

PoxyBox

A couple of issues I have with The Gimp - it's hard to find the toolbox if you have a big screen, other shit running, or a couple of windows open, and it's difficult to work out what the tool options are going to look like without trying them. Opening up all the toolboxes just makes it worse and doesn't scale to low-resolution displays either.

Enter the `PoxyBox' toolbox!

Initially my idea was to have the whole toolbox show/hide right where the mouse is when you press the space bar. But then I thought it would have to be too big/cluttered to fit everything, so I will probably use those keys that never get any wear and tear, that every keyboard still has, and that were made just for this purpose - the function keys.

I can split the tools into logical groups such as painting, selection, affine transform and so forth, so you don't have to put up with the clutter of unrelated tools when you pop up the one you're after. They are not modal, so you can still work on the rest of the image if you want one handy for reference or the space bar is too far to reach, but only one can be shown at a given time.
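
A Swing sketch of the key handling, using the standard `InputMap`/`ActionMap` bindings: each function key shows its own group and hides the rest, and space (or the same function key again) hides the visible group or brings back the most recently used one. The class, the action names and the exact toggle behaviour are assumptions for illustration.

```java
import java.awt.event.ActionEvent;
import java.awt.event.KeyEvent;
import java.util.ArrayList;
import java.util.List;
import javax.swing.AbstractAction;
import javax.swing.JComponent;
import javax.swing.KeyStroke;

/** Hypothetical controller: function keys pop up tool groups, one at a time. */
final class PoxyBoxKeys {
    private final JComponent root;                       // e.g. the frame's root pane
    private final List<JComponent> groups = new ArrayList<>();
    private JComponent current;                          // most recently selected group

    PoxyBoxKeys(JComponent root) {
        this.root = root;
        bind(KeyStroke.getKeyStroke(KeyEvent.VK_SPACE, 0), "poxy-toggle", this::toggle);
    }

    /** Register a group and bind its function key, e.g. KeyEvent.VK_F1. */
    void addGroup(int functionKey, String name, JComponent group) {
        groups.add(group);
        group.setVisible(false);
        bind(KeyStroke.getKeyStroke(functionKey, 0), "poxy-" + name, () -> pressed(group));
    }

    /** Function key: hide the group if it is showing, otherwise show only it. */
    private void pressed(JComponent group) {
        if (group == current && group.isVisible()) {
            group.setVisible(false);
        } else {
            select(group);
        }
    }

    /** Space: hide the visible group, or bring back the most recently selected one. */
    private void toggle() {
        if (current == null) return;
        if (current.isVisible()) {
            current.setVisible(false);
        } else {
            select(current);
        }
    }

    private void select(JComponent group) {
        for (JComponent g : groups) g.setVisible(g == group);
        current = group;
    }

    private void bind(KeyStroke key, String actionName, Runnable action) {
        root.getInputMap(JComponent.WHEN_IN_FOCUSED_WINDOW).put(key, actionName);
        root.getActionMap().put(actionName, new AbstractAction() {
            @Override
            public void actionPerformed(ActionEvent e) {
                action.run();
            }
        });
    }
}
```

Binding with `WHEN_IN_FOCUSED_WINDOW` lets the keys work wherever the focus is, although a focused button that has its own space binding may take the key press first.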

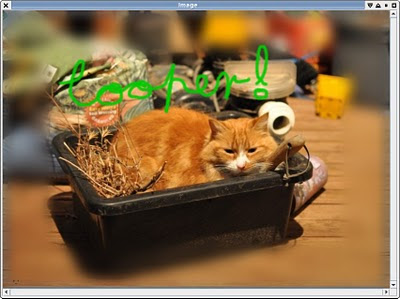

Has Cooper got the pox?

For the painting PoxyBox, for example, I want to have a scratch-pad area where you can actually try out the tool options you've set before using them on the image. Sure, undo works for this but it's a bit clumsy. And as seen with the bluish buttons, you can fill the scratchpad with a snapshot of the image under the window to poke at, with the background/foreground colour, with transparency, or perhaps with the current layer. Then you hit space or the function key and it goes away, leaving you to do what you were doing without filling the screen with clutter. Perhaps the function key can open up a specific one/change the current tool, and space can just revisit the most recently selected one. I should probably put the list of layers in there too. The scratchpad makes a mess of the design I created for connecting tools to an options-setting widget, but I'm sure I can sort that out somehow.

For the affine transform PoxyBox I will show all the parameters of the transform, so you can enter them numerically if you wish (somewhat like the 'numeric' function in Blender) without it having to pop up another window just to show you this. The selection PoxyBox might have a preview of the feathering settings, which I always find very hit and miss to judge.
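
For numeric entry, the fields could map onto `java.awt.geom.AffineTransform` roughly like this. The particular set of fields (pivot, angle in degrees, scale, offset) is an assumption about what such a panel might expose, and the names are hypothetical; `getMatrix` gives the six raw coefficients if you'd rather show those too.

```java
import java.awt.geom.AffineTransform;

/** Hypothetical mapping from numeric PoxyBox fields to a transform. */
final class NumericTransform {
    /** Scale and rotate about the pivot (cx, cy), then move by (dx, dy). */
    static AffineTransform fromFields(double cx, double cy, double angleDegrees,
                                      double scaleX, double scaleY, double dx, double dy) {
        AffineTransform t = new AffineTransform();
        t.translate(cx + dx, cy + dy);                   // applied last
        t.rotate(Math.toRadians(angleDegrees));
        t.scale(scaleX, scaleY);
        t.translate(-cx, -cy);                           // applied first: pivot to origin
        return t;
    }

    /** The six coefficients {m00, m10, m01, m11, m02, m12}, e.g. for a read-out. */
    static double[] coefficients(AffineTransform t) {
        double[] m = new double[6];
        t.getMatrix(m);
        return m;
    }
}
```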

The InternalFrame isn't really what I wanted to use, but so far I haven't been able to get normal panes to show up on the frame's LayeredPane, and it works for now. A separate top-level window may be the go anyway, and it could practically be a bit bigger too. But then switching between drawing surfaces might get confusing, and it could be hard to make it behave consistently with multiple pictures open.
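
On the LayeredPane problem, one common cause (not confirmed as the issue here) is that `JLayeredPane` has no layout manager, so a component added to it keeps a zero size and never appears until its bounds are set explicitly. A small sketch; the helper name is hypothetical, and in a frame you would pass `frame.getLayeredPane()`.

```java
import javax.swing.JComponent;
import javax.swing.JLayeredPane;

/** Hypothetical helper for floating a plain panel over a frame's content. */
final class LayeredPopup {
    static void showAt(JLayeredPane layers, JComponent panel, int x, int y) {
        // No layout manager will size the panel, so give it explicit bounds.
        panel.setSize(panel.getPreferredSize());
        panel.setLocation(x, y);
        // PALETTE_LAYER sits above the content pane, which lives in FRAME_CONTENT_LAYER.
        layers.add(panel, JLayeredPane.PALETTE_LAYER);
        layers.revalidate();
        layers.repaint();
    }
}
```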

(The name is in indeference[sic] to the IMHO not so awesome "Awesome Bar" from Firefox, and a little more obliquely to the fact that the idea reduces toolbox-pox and popup-pox).

So that's the second rewrite.

I find I always have to rewrite things at least once and usually 3 times to get it pretty well right, at least for problems I haven't encountered before.

So, after writing the post last night (and forgetting to post it) I had a thought about two of the later points - supporting different data types, and implementing line-by-line compositing.

At first I just sat down to jot some ideas down - how would it run in the degenerate case of only processing a line at a time - but ended up writing a whole new compositor. Then I did some timings and it looked pretty reasonable - only a tad faster than the other compositor, but at least it wasn't slower, and now I had something I could throw at threads with abandon. And then I realised I was compositing 8-bit data, which carries a fair hit from data conversion, and found it was actually 50% faster for the same case. Nice. Particularly considering it's doing a whole extra memcpy for every data layer, and a lot (lot) more hit tests (although they are simpler) (and this is always tricky with HotSpot - maybe it noticed they were always true in my micro-benchmark and compiled them out ...).
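
The degenerate line-at-a-time case can be sketched as follows. It assumes each layer can fill a caller-supplied buffer with premultiplied RGBA floats for a given row, and uses a plain source-over blend; the interfaces and names are hypothetical. The only temporary memory is one accumulator line and one source line, however big the image is.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical compositor that works on one row at a time. */
final class LineCompositor {
    interface LineSource {
        /** Fill dst with premultiplied RGBA floats (4 per pixel) for row y. */
        void getLine(int y, float[] dst);
    }

    interface LineSink {
        /** Receive a finished row; the buffer is reused, so copy it if you keep it. */
        void putLine(int y, float[] src);
    }

    /** Composite rows [y0, y1) of the layers, bottom to top. */
    static void composite(List<LineSource> layers, int width, int y0, int y1, LineSink sink) {
        float[] acc = new float[width * 4];              // result row
        float[] src = new float[width * 4];              // reused for every layer's row
        for (int y = y0; y < y1; y++) {
            Arrays.fill(acc, 0f);                        // start from transparent
            for (LineSource layer : layers) {
                layer.getLine(y, src);
                for (int i = 0; i < acc.length; i += 4) {
                    float k = 1f - src[i + 3];           // source-over, premultiplied
                    acc[i] = src[i] + acc[i] * k;
                    acc[i + 1] = src[i + 1] + acc[i + 1] * k;
                    acc[i + 2] = src[i + 2] + acc[i + 2] * k;
                    acc[i + 3] = src[i + 3] + acc[i + 3] * k;
                }
            }
            sink.putLine(y, acc);
        }
    }
}
```

Since each row is computed independently, disjoint row ranges can be handed to different threads, each with its own pair of buffers, without any locking.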

So the code supports more data formats, executes faster, uses a lot less temporary memory, is trivially easy to convert to run using multiple threads, ... and is about 1/2 the total lines of code. Yes I will give myself a pat on the back. Hmm, that felt odd.

I'm borrowing a few ideas from the way GPUs execute and from some of the research I did on CELL - processing loops are much more efficient when data is accessed in a native format, as the data conversion costs quickly overwhelm simple computations. If you're doing something more than once, it's almost always cheaper to do the data conversion in a separate step, not to mention the effort required to write specialised code for every case. So all the algorithms just work with floats as they did before - I didn't need to touch the blending kernels. I just added some batch interfaces to retrieve or store the data line by line into a pre-allocated buffer. This is the only point at which data and format conversion needs to take place. It might not be as fast as technically possible, but it's quite quick, it's a lot less code to write, and it's all simpler code as well - and compilers like simple code. It's likely to be more cache friendly too, which CPUs also like. They like that a lot.
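
The batch conversion step could look something like this: one row of packed 8-bit ARGB is unpacked into a pre-allocated float RGBA buffer, and packed back after processing. It assumes non-premultiplied data and leaves premultiplication out; the names are hypothetical.

```java
/** Hypothetical per-row converters between packed 8-bit ARGB and float RGBA. */
final class LineConvert {
    private static final float INV255 = 1f / 255f;

    /** Unpack width pixels starting at argb[offset] into dst (4 floats per pixel). */
    static void toFloat(int[] argb, int offset, int width, float[] dst) {
        for (int x = 0; x < width; x++) {
            int p = argb[offset + x];
            int i = x * 4;
            dst[i] = ((p >>> 16) & 0xff) * INV255;       // red
            dst[i + 1] = ((p >>> 8) & 0xff) * INV255;    // green
            dst[i + 2] = (p & 0xff) * INV255;            // blue
            dst[i + 3] = (p >>> 24) * INV255;            // alpha
        }
    }

    /** Pack width float RGBA pixels back into argb[offset...], rounding and clamping. */
    static void fromFloat(float[] src, int[] argb, int offset, int width) {
        for (int x = 0; x < width; x++) {
            int i = x * 4;
            argb[offset + x] = (to8(src[i + 3]) << 24) | (to8(src[i]) << 16)
                    | (to8(src[i + 1]) << 8) | to8(src[i + 2]);
        }
    }

    private static int to8(float v) {
        int c = Math.round(v * 255f);
        return c < 0 ? 0 : (c > 255 ? 255 : c);
    }
}
```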

Then I spent a good chunk of this afternoon (had some time off work) and evening converting all the rest of the code to use this new compositor, and actually redoing the whole 'layer' object. Filters and effects now have to work differently too - they can either work with the specific types, work generically on data line-by-line, or make whole copies. A lot of operations are easy to convert to line-based operations, and doing so adds a couple of benefits. Firstly, anything requiring temporary memory might only need to store a line of it at a time, and secondly, if the work on each line is independent (or depends only on nearby lines) then it can be split to run across multiple threads.
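
As an example of a filter that only needs a line of state, here is a horizontal box blur on a single-channel row, using a running sum with clamped edges. The `LineFilter` interface is hypothetical, and a box blur stands in for whatever filters the real code has.

```java
/** Hypothetical line-based filter: reads one row, writes one row. */
interface LineFilter {
    void filterLine(float[] src, float[] dst);
}

/** Horizontal box blur of radius r; its only state is a running sum. */
final class HorizontalBoxBlur implements LineFilter {
    private final int radius;

    HorizontalBoxBlur(int radius) {
        this.radius = radius;
    }

    @Override
    public void filterLine(float[] src, float[] dst) {
        int w = src.length;
        int n = 2 * radius + 1;                          // window size
        float sum = 0f;
        for (int i = -radius; i <= radius; i++) sum += src[clamp(i, w)];
        for (int x = 0; x < w; x++) {
            dst[x] = sum / n;
            // Slide the window one pixel right: add the new edge, drop the old one.
            sum += src[clamp(x + radius + 1, w)] - src[clamp(x - radius, w)];
        }
    }

    private static int clamp(int i, int w) {
        return i < 0 ? 0 : (i >= w ? w - 1 : i);
    }
}
```

A vertical pass is the case where the work depends on nearby lines: each output row needs a window of neighbouring input rows, so it can still be split across threads as long as each band can read a few rows beyond its own edges.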

With little effort I added a thread-dispatching frontend to the compositor, and now it's using all the cores on this machine, which otherwise never seem to get much work to do. I haven't yet converted the Gaussian blur, but that will save a lot of memory: I had to extract the packed pixels into padded planes anyway, and now I won't need to do that as a separate step.
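
A thread-dispatching frontend can be as simple as cutting the rows into one contiguous band per thread and waiting on the results. This sketch uses the standard `ExecutorService.invokeAll`; the `RowRange` callback and the class name are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical frontend that splits row-based work across a thread pool. */
final class LineDispatcher {
    /** Work over rows [y0, y1); must be safe to run concurrently on disjoint ranges. */
    interface RowRange {
        void run(int y0, int y1);
    }

    private final int threads;
    private final ExecutorService pool;

    LineDispatcher(int threads) {
        this.threads = threads;
        this.pool = Executors.newFixedThreadPool(threads);
    }

    /** Run work over rows [0, height) and block until every band has finished. */
    void run(int height, RowRange work) throws InterruptedException, ExecutionException {
        if (height <= 0) return;
        int band = (height + threads - 1) / threads;     // rows per task, rounded up
        List<Callable<Void>> tasks = new ArrayList<>();
        for (int y0 = 0; y0 < height; y0 += band) {
            final int start = y0;
            final int end = Math.min(height, y0 + band);
            tasks.add(() -> {
                work.run(start, end);
                return null;
            });
        }
        for (Future<Void> f : pool.invokeAll(tasks)) {
            f.get();                                     // rethrows any worker exception
        }
    }

    void shutdown() {
        pool.shutdown();
    }
}
```

A natural thread count is `Runtime.getRuntime().availableProcessors()`; smaller bands than one per thread would help balance uneven rows at the cost of a little more dispatch overhead.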

The drawing tools didn't need any changes - they just work with BufferedImages wrapping the data. I tried changing the temporary layer to a 4-byte format and the paint tools worked just fine, and somewhat quicker. I tried to leverage a bit more information from the WritableRaster and the SampleModel, but there isn't really much I need from them. I'm limiting the code to a couple of specific image formats whose layout I know, so the code can go straight to the array rather than through accessors, which avoids extra address arithmetic that adds up pretty fast (or perhaps, doesn't add up fast enough).
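
Going straight to the array for a packed-int image (such as `TYPE_INT_ARGB`) looks roughly like this. The offsets matter: a sub-image shares its parent's array, so row 0 is not necessarily index 0. The class name is hypothetical; the API calls are standard `java.awt.image`.

```java
import java.awt.image.BufferedImage;
import java.awt.image.DataBuffer;
import java.awt.image.DataBufferInt;
import java.awt.image.SampleModel;
import java.awt.image.SinglePixelPackedSampleModel;
import java.awt.image.WritableRaster;

/** Hypothetical direct row access for one-int-per-pixel images. */
final class DirectRows {
    /** Copy row y of a packed-int image into dst, straight from the backing array. */
    static void readRow(BufferedImage img, int y, int[] dst) {
        WritableRaster r = img.getRaster();
        DataBuffer db = r.getDataBuffer();
        SampleModel sm = r.getSampleModel();
        if (!(db instanceof DataBufferInt) || !(sm instanceof SinglePixelPackedSampleModel)) {
            throw new IllegalArgumentException("expected a packed int image");
        }
        int[] data = ((DataBufferInt) db).getData();
        int stride = ((SinglePixelPackedSampleModel) sm).getScanlineStride();
        // Account for the buffer offset and any child-raster (sub-image) translation.
        int start = db.getOffset()
                + (y - r.getSampleModelTranslateY()) * stride
                - r.getSampleModelTranslateX();
        System.arraycopy(data, start, dst, 0, img.getWidth());
    }
}
```

Grabbing the array like this may stop Java2D from caching the image in video memory, which is usually a fair trade for tight processing loops.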

Code is piling up quickly; it's hit 8KLOC already, although there's a bit of stale stuff I'm keeping around since nothing is in version control. I had some pretty nasty experiences with Mercurial over the last week at work, so that's fallen heavily out of favour. Heavily. I'm even considering CVS - I know its quirks, and at least it knows how to fucking merge properly, which is only about the most fucking important thing for a fucking source management tool and the only thing it really has to fucking get right (fucking). Not that I need to do any merging with myself. I may rant more on that later, but maybe I've already wasted enough time on it.

Blurragain

Poked away a bit more at the selection mask and compositing code, and for fun I added the 10 lines of code required to support the various selection operations based on the modifier keys (i.e. union, intersection, exclusive or, subtraction and replace).
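
Per pixel, the selection operations on a soft mask can be written with the usual fuzzy-set rules (max for union, min for intersection and so on), which reduce to ordinary set logic when the mask is only 0 or 1. This is one reasonable choice rather than necessarily what the code here does, and the modifier-key mapping is hypothetical.

```java
import java.awt.event.InputEvent;

/** Hypothetical per-pixel selection maths on masks with values in [0, 1]. */
final class MaskOps {
    enum Op { REPLACE, UNION, INTERSECT, SUBTRACT, XOR }

    /** Hypothetical mapping from extended modifier flags (getModifiersEx) to an operation. */
    static Op fromModifiers(int modifiersEx) {
        boolean shift = (modifiersEx & InputEvent.SHIFT_DOWN_MASK) != 0;
        boolean ctrl = (modifiersEx & InputEvent.CTRL_DOWN_MASK) != 0;
        boolean alt = (modifiersEx & InputEvent.ALT_DOWN_MASK) != 0;
        if (shift && ctrl) return Op.INTERSECT;
        if (alt) return Op.XOR;
        if (shift) return Op.UNION;
        if (ctrl) return Op.SUBTRACT;
        return Op.REPLACE;
    }

    /** Combine the newly selected coverage into the mask, in place. */
    static void combine(float[] mask, float[] added, Op op) {
        for (int i = 0; i < mask.length; i++) {
            float a = mask[i], b = added[i];
            switch (op) {
                case UNION:     mask[i] = Math.max(a, b); break;
                case INTERSECT: mask[i] = Math.min(a, b); break;
                case SUBTRACT:  mask[i] = Math.min(a, 1f - b); break;
                case XOR:       mask[i] = Math.abs(a - b); break;
                default:        mask[i] = b; break;      // REPLACE
            }
        }
    }
}
```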

I still have some issues with the compositing not working quite right for the tool layer when it is active with a mask in replace mode, but I think that's the only bit left now. I fixed the feathering too - it renders the selection mask to a correctly sized image, blurs it and then pastes it back into the actual selection mask. For the blurring I extracted the multi-threaded blur code from the blur tool into a reusable object and made it support single-channel data; both now use it.
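
The feathering step (blur the mask, then paste it back) needs a blur that works on a single channel. Here is a minimal separable Gaussian on a float plane with clamped edges, single-threaded for brevity; the 3-sigma kernel radius and the names are assumptions. The region handed to it would normally be the selection bounds padded by the kernel radius, so the blur has room to spread.

```java
/** Hypothetical single-channel separable Gaussian blur, edges clamped. */
final class MaskFeather {
    /** Normalised 1-D Gaussian kernel with radius ceil(3 * sigma); sigma must be > 0. */
    static float[] kernel(float sigma) {
        int r = (int) Math.ceil(3 * sigma);
        float[] k = new float[2 * r + 1];
        float sum = 0f;
        for (int i = -r; i <= r; i++) {
            k[i + r] = (float) Math.exp(-(i * i) / (2.0 * sigma * sigma));
            sum += k[i + r];
        }
        for (int i = 0; i < k.length; i++) k[i] /= sum;  // weights sum to 1
        return k;
    }

    /** Blur a w x h plane in place: a horizontal pass, then a vertical pass. */
    static void blur(float[] plane, int w, int h, float sigma) {
        float[] k = kernel(sigma);
        int r = k.length / 2;
        float[] tmp = new float[w * h];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                float s = 0f;
                for (int i = -r; i <= r; i++) s += k[i + r] * plane[y * w + clamp(x + i, w)];
                tmp[y * w + x] = s;
            }
        }
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                float s = 0f;
                for (int i = -r; i <= r; i++) s += k[i + r] * tmp[clamp(y + i, h) * w + x];
                plane[y * w + x] = s;
            }
        }
    }

    private static int clamp(int v, int n) {
        return v < 0 ? 0 : (v >= n ? n - 1 : v);
    }
}
```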

Enough of the tomatoes, time for the cat

I'm surprised I can even remember cursive let alone write it with a mouse on my first attempt.

I started poking at cleaning up the application state - right now it all goes through the toolbox object, which is a singleton. I'm not happy with what I've come up with so far, and I'm actually starting to wonder if I want that type of single toolbox anyway. But I guess I will stick with a single something-or-other object to route the state around to the required parts, i.e. current window, current layer, current tool, etc.
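
One possible shape for the something-or-other object: a small holder of observable 'current' slots that interested parts subscribe to, instead of reaching through a singleton toolbox. All names are hypothetical, and the type parameters stand in for the real window, layer and tool classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Consumer;

/** Hypothetical observable "current X" slot. */
final class Current<T> {
    private final List<Consumer<T>> listeners = new ArrayList<>();
    private T value;

    T get() {
        return value;
    }

    void listen(Consumer<T> listener) {
        listeners.add(listener);
    }

    /** Change the value and notify listeners; setting the same value is a no-op. */
    void set(T newValue) {
        if (Objects.equals(newValue, value)) return;
        value = newValue;
        for (Consumer<T> l : listeners) l.accept(newValue);
    }
}

/** Hypothetical router for the application's current state. */
final class AppState<W, L, T> {
    final Current<W> window = new Current<>();
    final Current<L> layer = new Current<>();
    final Current<T> tool = new Current<>();
}
```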

Hmm, with that sorted maybe I can start thinking about different backends.

Optimistic?

I may have been a bit overoptimistic with the desire to stick to RGBA float images. I did the maths and it gets out of hand very fast - a full-sized image from my camera is over 40MB in uncompressed RGBA/8 format, and in float that's pushing 200MB. Add another for the tool layer, another for the compositing buffer and a single-channel image for the selection mask, and suddenly you're well on your way to gig country. There's no real reason for the compositing buffer to be so big; it could be as little as a single line (or a tile - I should make it multi-threaded), but breaking up the tool layer would be 'tricky'. Even just clearing that much data is a bit of a task (although, perhaps surprisingly, it still runs at a reasonably interactive speed), and again it could limit the area cleared fairly easily.
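
To make the arithmetic concrete: the camera's resolution isn't given, so this assumes a hypothetical 4000x3000 (12 megapixel) sensor, which happens to match the figures quoted (48MB for RGBA/8, 192MB for RGBA float). The selection mask is assumed to be single-channel float, and the names are hypothetical.

```java
/** Hypothetical back-of-envelope layer memory, assuming unpadded, uncompressed storage. */
final class LayerMemory {
    static long bytes(long width, long height, int channels, int bytesPerChannel) {
        return width * height * channels * bytesPerChannel;
    }

    /** Float RGBA image + tool layer + full-size compositing buffer, plus a float mask. */
    static long fullSizeFloatPipeline(long width, long height) {
        long rgbaF = bytes(width, height, 4, Float.BYTES);
        long maskF = bytes(width, height, 1, Float.BYTES);
        return 3 * rgbaF + maskF;
    }
}
```

For 4000x3000 that comes to 624MB. Shrinking the compositing buffer to a single 64KB line brings it down to roughly 432MB, which leaves the tool layer as the biggest remaining target.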

So I guess I will have to keep other data types in mind after all, but I will probably not bother implementing them for the time being. OpenCL images can be stored in various formats but still be read directly from memory as floats, so there I could probably do it relatively transparently, at least for 4-channel images. If I ever get there.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!