About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Mailing Lists

I just set up some mailing lists for jjmpeg and socles.

I can't tell from google-code if there is much interest in the projects, but it seems a better idea to set up a mailing list than to receive direct emails about them.

These are still slow, long-burn projects I'm working on when I feel inspired, and inspiration varies greatly from week to week.

Bullies, liars, and arseholes.

Hmm, so my sister-in-law just got sacked from one of her cleaning jobs. After quite a bit of bullying from a fellow employee, and what can only be considered racism/discrimination from upper management (e.g. complaining about her diminutive stature in her first week), they finally found enough of an excuse to fire her. A sham 'explain yourself' meeting that went on for hours, followed by a letter claiming that she had lied about everything in the meeting (which is simply not true).

Filthy liars.

I know hardly anyone reads my blog, and even fewer of those are local, but for those who are, perhaps complain about the lack of cleanliness next time you're in the central market, or maybe just spit on the floor!

I probably won't bother ever going back there myself - not that I was a regular customer anyway.

Playing with Web Start

After a lot of frobbing around I got a simple Java Web Start demo working for jjmpeg: jjmpegdemos.jnlp

Assuming you have Java Web Start installed, this should launch the application and, after a lot of 'this is untrusted' errors, end up with it running. It runs a very simple music player demonstration, using JOAL for the audio output and jjmpeg for the decoding.

You also need a recent version of the ffmpeg shared libraries installed, which probably means this won't work on microsoft platforms yet - although I suppose it might work if the ffmpeg libraries available at http://ffmpeg.zeranoe.com/builds/ are in the path.

On GNU/Linux it will depend on having compatible libavcodec/etc versions. I'm using Fedora 13 and 14, and it worked fine on both with ffmpeg-libs from rpmfusion. I also tested it on both x86 and amd64.

Anyway this is really just an experiment - I doubt it will work in general on every platform.

jjmpeg - microsoft windows 64

Well, I've been a bit quiet of late. I wrote a few blog entries but never got around to publishing them - either the mood had changed by the time I finished, or I couldn't get the words arranged in a readable manner ...

I also had the flu for a week, visitors and other distractions, and writer's block for the last few weeks - I was hitting some problems with work which paralleled some of the problems I was hitting with my hobby code, and everything ground to a bit of a halt. Well, such is the way of things. The flu is mostly gone now and I've resolved the deadlock with my work code, so perhaps I will get back to hacking again soon.

So today I had a couple of spare hours and the motivation to make jjmpeg work on windows - if I get that working, maybe I can drop xuggle [for my work stuff], which is getting a bit out of date now. Actually the main problem with xuggle is that it's too much hassle to build and only available in a 32-bit version - and with the OpenCL code and other issues, the 32-bit JVM limits are starting to cramp the application a bit.

The biggest problem was working out how to compile it, and after a lot of buggerising around I found it was easiest to just install the mingw 64-bit compiler as a cross compiler; that way I get to keep the nice coding tools I always use and stay in linux. Trying to do any work at all - and particularly development - in windows is like trying to ride a bike with one leg cut off and a broken arm. Unpleasant, and painful.

Apart from that it was mostly just re-arranging the code to call some simple macros which change depending on the platform - i.e. dlopen/dlsym or LoadLibrary/GetProcAddress - and then a bit of a rethink on how the binaries are built, to support multiple targets via a cross compiler.
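A minimal sketch of what such platform macros might look like in C. The names (dl_handle, DL_OPEN, DL_SYM, DL_CLOSE, require_sym) are hypothetical and not taken from the jjmpeg source; only dlopen/dlsym/dlclose and LoadLibraryA/GetProcAddress/FreeLibrary are the real platform APIs.

```c
/*
 * Hypothetical platform-switching layer for dynamic loading.
 * Names are illustrative only, not jjmpeg's actual macros.
 */
#include <stdio.h>

#ifdef _WIN32
#include <windows.h>
typedef HMODULE dl_handle;
#define DL_OPEN(name)    LoadLibraryA(name)
#define DL_SYM(lib, sym) ((void *)GetProcAddress((lib), (sym)))
#define DL_CLOSE(lib)    FreeLibrary(lib)
#else
#include <dlfcn.h>
typedef void *dl_handle;
#define DL_OPEN(name)    dlopen((name), RTLD_NOW | RTLD_GLOBAL)
#define DL_SYM(lib, sym) dlsym((lib), (sym))
#define DL_CLOSE(lib)    dlclose(lib)
#endif

/*
 * Resolve one entry point, reporting the missing name instead of
 * crashing later on a NULL function pointer. Code built on top of
 * this never sees the platform difference.
 */
static void *require_sym(dl_handle lib, const char *name)
{
    void *p = (lib != NULL) ? DL_SYM(lib, name) : NULL;
    if (p == NULL)
        fprintf(stderr, "missing symbol: %s\n", name);
    return p;
}
```

The Windows branch would be built with the mingw-w64 cross compiler (e.g. `x86_64-w64-mingw32-gcc`), while on older glibc systems the Linux build also needs `-ldl`.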

I have done very little testing, but when set up properly it found the library and decoded an mp3 file, which is good enough for me.

(and obviously, there will never be windows support for the linux-dvb code, only for the libavformat/libavcodec binding).

OpenCL killer application?

So I've been trying to think of some killer application that OpenCL could enable.

Sure you have video rendering or processing, signal analysis and the like - but for desktop use these sorts of things can already be done. And if it's a little slow you can always throw more cores and/or boxes at it.

But I guess the big thing is hand-held devices. This is probably why the ARM guys have been making noise of late: being able to put 'desktop power' into hand-held devices. Still, this is more of an evolutionary change than a revolutionary one - with mobile phones now being pocket computers, we all expect that one day they'll be able to do everything we can on bigger machines, with Moore's law and all that (which is about the number of transistors, not processing performance).

I was also thinking again about AMD's next-gen designs - one aspect I hadn't fully appreciated is that they can scale up as well as down. Even a single SM unit with 4x16 SIMD cores running at a modest, battery-friendly clock rate would add a mammoth amount of processing power to a hand-held device. It shares some traits with the goals behind the CELL CPU - the design forces you to partition your work into chunks that fit on a single SPU. But once that's done, you gain the massive benefit of being able to scale up the software by (almost) transparently executing these discrete units of work on more processors if they're available.
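To make the CELL-style partitioning concrete, here is a rough C sketch of splitting work into self-contained chunks sized for a local store. It is not AMD's or IBM's actual programming model: CHUNK_ELEMS, process_chunk and run_chunks are made-up names, the per-chunk work is a trivial scale, and the 'workers' are simulated sequentially in one thread rather than running on real cores. The point is only that once each chunk is independent, the result doesn't depend on how many processors the chunks are spread across.

```c
#include <stddef.h>

/* Hypothetical per-core local store capacity, in elements. */
#define CHUNK_ELEMS 256

/*
 * Process one self-contained chunk. It touches only its own slice,
 * staged through a small buffer standing in for SPU local store or
 * OpenCL local memory, so chunks can run in any order on any core.
 * Requires n <= CHUNK_ELEMS.
 */
static void process_chunk(const float *in, float *out, size_t n)
{
    float local[CHUNK_ELEMS];
    for (size_t i = 0; i < n; i++)
        local[i] = in[i] * 2.0f;    /* placeholder "work" */
    for (size_t i = 0; i < n; i++)
        out[i] = local[i];
}

/*
 * Hand chunks out round-robin to `workers` (must be >= 1), simulated
 * sequentially here. Because chunks are independent, any worker count
 * produces the same output; only the schedule changes.
 */
static void run_chunks(const float *in, float *out, size_t len, unsigned workers)
{
    size_t nchunks = (len + CHUNK_ELEMS - 1) / CHUNK_ELEMS;
    for (unsigned w = 0; w < workers; w++) {
        for (size_t c = w; c < nchunks; c += workers) {
            size_t start = c * CHUNK_ELEMS;
            size_t n = len - start < CHUNK_ELEMS ? len - start : CHUNK_ELEMS;
            process_chunk(in + start, out + start, n);
        }
    }
}
```

Calling run_chunks with 1 worker or 7 gives identical output; on real hardware each worker would be an SPU, a compute unit or a thread.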

So, I don't think there will be a 'killer application' - software that only becomes possible and popular because of OpenCL (for one, the platform support is going to be weak until the hardware is common, and even then micro$oft won't support it because they're wanker khunts) - rather it will be the hardware application of placing desktop-power into your hand (and if such performance is only utilised to play flash games at high resolution, I fear the future of humanity is already lost).

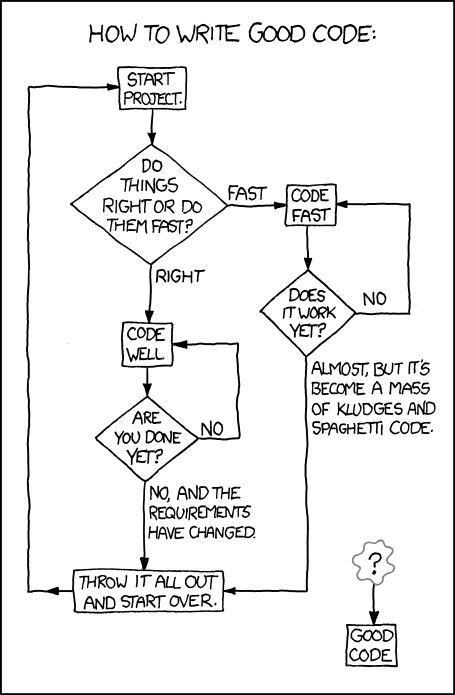

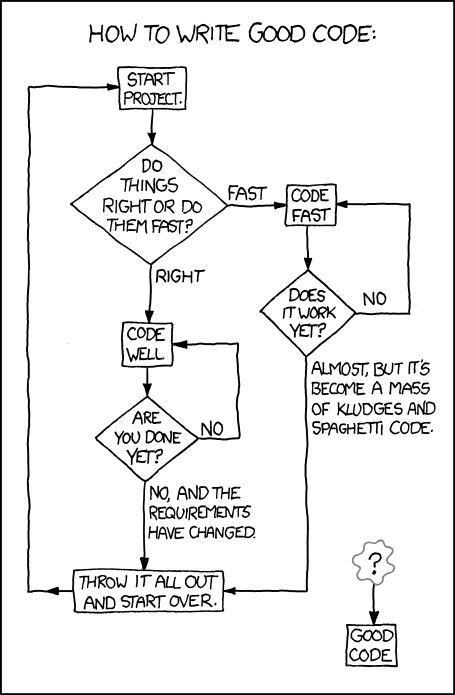

It's funny 'cause it's true ...

From a little while ago, but I just flipped through a few weeks' worth of xkcd the other day and came across it.

When I was doing engineering at uni we talked about the reams of documentation, and about being able to pre-define the problem to such a degree that the coding itself would be an afterthought: a mere bullet-point, to be performed by lowly-trained, knuckle-dragging code monkeys somewhere between finalising the design and testing. Of course, this was proven immediately impractical during our final-year project - and that was about the last time I ever saw an SDD. In one job I had, we started with lofty goals of fully documenting the project with SRSs, SDDs and the like, but in the end we just ended up with piles of junk. They were complete, and sometimes even up to date, but ultimately useless - they didn't add any value.

In reality of course there are many impediments to such an approach:

- The customer doesn't know what they ultimately want. Ever.

- New ideas come along which change or add requirements.

- You don't know the best way to solve a problem without trying it.

- You don't know where to even start solving problems without plenty of experience.

- The market or other outside circumstances force a change.

- That just isn't how the brain works - you continue to learn every second of every day and that changes how you would solve problems or present them.

- It's slow and too expensive for anyone who has to earn money and not just ask for it (i.e. outside of defence, govt).

Even so, contracts are still written this way, and documentation is still a phone-book-sized deliverable in military software. And computer engineering academia are still trying to turn what is essentially an art into a science. I don't think their efforts are completely worthless (at least not all of them), but I think software is too complex for this at this stage, and it's only getting more complex.

It's not that development documentation isn't useful - I wouldn't mind a good SRS myself - but there needs to be a happy medium.

Back to the flow-chart - which to me has a deeper meta-meaning simply by being a flow-chart. The software engineering lecturers scoffed at flow-charts as obsolete - yet they seem to be more useful than anything they claimed had replaced them.

Personally I try to do it right but sometimes do it fast - because ultimately you always end up having to rework a significant chunk of the code-base when the customer reveals what they really wanted from the start. Fortunately when I'm in the groove (say 30% of the time?) I can hack so fast and well (not to put tickets on myself, but I can) that the line is a bit blurred - writing and (re)-re-factoring gobs of code on the fly as the design almost anneals itself into a workable solution. Pity I can't do that all the time.

Extra effort is usually worth it, but not always. And sometimes the knack is just knowing when you can get away with taking short-cuts. For isolated code at the tail-end of the call-graph it usually makes little difference so long as it works.

If you throw the front-end away and start from scratch, and you have some well-designed code underneath, you can usually re-use most of it. Crappy code is much harder to re-use. But in the earlier stages of a project, doing it right can be more of a hindrance. Particularly with OO languages - which force you to create good data models to fit the problem - even a small change to the problem can mean a big change to the data model. Of course, many coders never achieve good data models, so perhaps for them the cost isn't so high - though they pay for it with perpetually low-quality code. Yes, I say data, not code - the data is always more important.

Annealing is probably a good way to describe the software design and maturity process - early stages punctuated by large, fluid changes from high-energy experimentation, then, over time, the changes becoming smaller as it matures and solidifies. If the requirements change you have to put it back into the fire to liquefy the structure and reconsider how it fits the new solution.

Simply bolting on new bits will only create an ugly and brittle solution.

Why not -1 button?

So apparently google have added a +1 button to the search results. Why not a -1? I'm not sure exactly what this is supposed to achieve - yet more ways for spammers to skew the results? I'm already a bit wary of google giving me my own private view of the internet; I hardly want more of that. And often what you're trying to find is the stuff that doesn't come up on the front page now - it seems this would only make that worse.

Just more clutter I don't need or want which simply slows down the page loading.

They also seem to have fucked up the mail client by making a section of the screen not scroll, which makes it unusably slow and even uglier than its already ugly forebear. So I guess I will have to switch to basic HTML mode now, which is a pity because I use the chat thing to find out when drinks are on.

Stuff

Been pretty lazy this week - I seemed to spend too much time reading a few sites I frequent from time to time, mostly about the GFC and some of the local political-media clown-show (they are no longer separate entities). But the picture they paint of the world is pretty bleak, so it's really all just a bit of a downer - although I'm not sure whether it's the reading that gets me down, or feeling a bit flat in the first place that drives me toward reading it.

So no spare-time hacking this week. I did however prune back the golden rain tree in the back yard yesterday - and given we had a couple of days of sunlight I even got a little red in the face. Always nice to get some sunshine in the middle of winter even when such days are few and far between. I also made that lime cordial last week.

For work I'm hitting some big performance problems on the target platform - partly because I think the customer has some unrealistic expectations, and partly because I didn't do enough research on card performance at the time, or the cards just weren't up to scratch. Oh well. I presume the EOFY purchasing dash has something to do with it as well, but buying new hardware has come up as a possible solution. Fortunately things have moved on a bit since then, so at least buying new hardware should be a big help, although it won't solve everything.

I'm also pushing for AMD hardware this time - although the Nvidia hardware has been ok as far as it goes, they've obviously given up on OpenCL (no released 1.1 driver, and their OpenCL 'zone' hasn't changed in a year), and it doesn't seem like a company that wants my money or deserves any support (even the forums are pretty quiet, so it seems I'm not alone - we all get the hint). Expanding one's experience and educating yourself about the alternatives is always a good thing too.

By coincidence AMD just had a marketing event about their heterogeneous computing plans, and Anandtech has a really interesting article on where AMD are going with their GPU/CPU architecture. It looks quite promising, although I'd really like to see a bump in local-store size. There is certainly enough there to be useful, but it is still a bottleneck, and with even more parallelism possible thanks to the design, the limited global bandwidth will only become more of a bottleneck.

Pity it's still a way off, because a change in architecture of that magnitude will require a different approach to performance, although in general it looks like it will be easier, and it will also map well to OpenCL.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!