About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

The World Wide Web

So M$ are up to their old tricks again, restricting competitors from accessing their operating systems properly. Nothing ever changes apart from the date. Does it really matter much anyway? (Who in their right mind is even going to use that shit to start with?) Mozilla are still pushing their it's-really-an-operating-system angle, but is that really ideal for me as a customer? Or even as a developer, for that matter? One only has to look at any application on a tablet and compare it to its browser counterpart, e.g. Google Maps or YouTube: even with the browser running on a monster desktop workstation, the tablet usually beats the pants off it. For all its "lowly" specifications (well, they aren't really), the tablet I've been using shits all over anything I've ever seen in a web browser, HTML5 or Flash, on much, much faster machines. I would really like to see browsers eschew all that heavy-client crap which has turned them into bloated power wasters, and return to a world of delivering information in an open and linked way. That is something they actually do quite well, and they can leave the heavier stuff to native applications, which always do a better job. And with the range of devices exploding, from workstations to TVs to pocket computers, doing anything more than text with a few pictures and the odd form is only going to get more difficult. The right tool for the right job, and all that.

Abject failure

I spent an inordinate amount of time last night trying to lick the decoder stability issues.

I ported the jjmpegdemo MediaPlayer over to Android, and re-arranged it so it was shipping AVPackets around instead of AVFrames and decoding on a separate thread (this is how ffplay does it). I changed the rendering to copy to a texture synchronously with the decoding (I figured the shared EGL context work could wait), and used multiple textures to implement some buffering.
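For anyone wanting to try the same ffplay-style split, the demux-thread/decode-thread hand-off can be sketched with a bounded BlockingQueue. This is a minimal sketch, not the actual player code: `Packet` is a hypothetical stand-in for an AVPacket copy, and the "decode" step just records payload sizes so it runs without ffmpeg.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class PacketPipeline {
    // Hypothetical stand-in for a demuxed AVPacket: stream index plus payload.
    static final class Packet {
        final int stream;
        final byte[] data;

        Packet(int stream, byte[] data) {
            this.stream = stream;
            this.data = data;
        }
    }

    // Sentinel that tells the decode thread the stream has ended.
    static final Packet EOF = new Packet(-1, new byte[0]);

    /** Demux {@code packetCount} fake packets on this thread, decode them on another. */
    public static List<Integer> run(int packetCount) throws InterruptedException {
        // Bounded queue: the demuxer blocks when the decoder falls behind,
        // so memory use stays capped.
        BlockingQueue<Packet> queue = new ArrayBlockingQueue<>(16);
        List<Integer> decodedSizes = new ArrayList<>();

        Thread decoder = new Thread(() -> {
            try {
                while (true) {
                    Packet p = queue.take();
                    if (p == EOF) {
                        break;
                    }
                    // A real player would feed p to the codec here and pass
                    // the resulting frame on to the renderer.
                    decodedSizes.add(p.data.length);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "decoder");
        decoder.start();

        // The calling thread plays the demuxer role.
        for (int i = 0; i < packetCount; i++) {
            queue.put(new Packet(0, new byte[i + 1]));
        }
        queue.put(EOF);
        decoder.join(); // join() also makes decodedSizes safely visible here
        return decodedSizes;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(5)); // [1, 2, 3, 4, 5]
    }
}
```

Shipping packets rather than decoded frames keeps the queue cheap: packets are compressed and small, so the buffer can be deep without eating memory.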

So when I finally got it running (after wasting a good hour on a silly place-holder mistake when I started), it still suffered from the same problem. Along the way NetBeans decided it had too many projects open, and the debug cycle time on the tablet seemed to get longer and longer.

Ho hum.

This morning I poked at using shared contexts, and although it seems to use less CPU time, it still crashes and it's doing something weird with the frame rendering order as well.

So yeah, might have to sit on this for a bit and brood.

Update: As one does ... I persevered. Current guess is that it's something to do with the texture load: if I isolate that out things seem to run fine (but with no repeatability this is hard to judge). More perseverance required.

Speaker-busting screech

So yeah, don't forget to set the endian-ness on your direct ByteBuffers when you're playing with sound files. Poor speaker. This morning I got sound going with jjmpeg/android. This was mostly fixing up the way I was using AVPackets: I removed the AVAudioPacket class and added its functionality to AVPacket. I need to look at the new avcodec_decode_audio4() API, as that might simplify the usage anyway and remove the need for the special case. I also hit some surround-sound files (I've just never played with audio much; I don't even have speakers on my PC), so there's some more API to think about binding. It shouldn't be too hard to fit this into the video player: AudioTrack reports its playback position, which is enough.
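The endian trap is easy to reproduce off-device. Every Java ByteBuffer, direct or not, starts out BIG_ENDIAN, while FFmpeg's S16 sample format is native-endian (little-endian on typical ARM Android hardware). A minimal sketch; the class and method names here are made up for illustration:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class PcmBuffers {
    // Allocate a direct buffer for 16-bit PCM samples in the CPU's native
    // byte order, which is what a native decoder will have written into it.
    public static ByteBuffer allocatePcm(int samples) {
        return ByteBuffer.allocateDirect(samples * 2).order(ByteOrder.nativeOrder());
    }

    public static void main(String[] args) {
        ByteBuffer raw = ByteBuffer.allocateDirect(2); // BIG_ENDIAN by default
        // Simulate a decoder writing the sample 0x0102 little-endian: bytes 02 01.
        raw.put(0, (byte) 0x02).put(1, (byte) 0x01);
        System.out.println(raw.getShort(0));                                // 513 (0x0201): wrong
        System.out.println(raw.order(ByteOrder.LITTLE_ENDIAN).getShort(0)); // 258 (0x0102): right
    }
}
```

Note that a view created with asShortBuffer() takes the byte order the ByteBuffer has at the moment the view is created, so set the order first.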

jjmpeg/Android

So yesterday I checked in the work I did last week for the android jjmpeg port. Not heavily tested, but it appears to work.

Then I spent far too long poking around trying to find out about the GL_OES_EGL_image_external extension, but finally came to the conclusion that it just isn't exposed publicly (yet?), so it's way more effort than it's worth to me to get it going.

So I suppose it's just glTexSubImage2D for me then.

GLES

I had a long work week this week, and although I had intended to get a couple more things out of the way this morning I let it slide, and eventually got sucked into playing with jjmpeg. I rigged up a version using GL, but for now still doing the colour conversion on the CPU. I at least added a separate thread for the colour conversion, and hooked it all up so that nothing needs to wait for anything else unless absolutely necessary.

The display stuff is a little bit faster, but nothing to write home about TBH. The colour conversion is only about 20% of the time, so moving it around doesn't make a real big difference either.

I also tried with/without VFP: which made stuff all difference too, barely 5%. I started with 32-bit RGB textures simply because I had that already, and changed those to RGB565 which made another small difference. But all these small differences just weren't adding up to anything but a lot of small differences.
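For reference, the kind of CPU-side colour conversion being timed here looks roughly like the following: planar YUV420P in, RGB565 out, using the common fixed-point BT.601 (studio range) approximation. This is a sketch under assumptions (separate Y/U/V arrays with their own strides, 2x2 chroma subsampling), not the conversion code jjmpeg actually uses.

```java
public class Yuv420ToRgb565 {
    private static int clamp(int v) {
        return v < 0 ? 0 : (v > 255 ? 255 : v);
    }

    // Pack 8-bit R, G, B into RGB565: 5 bits red, 6 bits green, 5 bits blue.
    public static short pack565(int r, int g, int b) {
        return (short) (((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
    }

    /**
     * Convert a planar YUV420P image to RGB565 with fixed-point BT.601
     * coefficients. Each U/V sample covers a 2x2 block of Y samples.
     */
    public static void convert(byte[] y, int yStride, byte[] u, byte[] v, int uvStride,
                               int width, int height, short[] out) {
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                int c = (y[row * yStride + col] & 0xff) - 16;
                int uvIndex = (row >> 1) * uvStride + (col >> 1);
                int d = (u[uvIndex] & 0xff) - 128;
                int e = (v[uvIndex] & 0xff) - 128;
                // Coefficients scaled by 256 (roughly 1.164, 1.596, 0.391,
                // 0.813, 2.018); the +128 rounds the >> 8.
                int base = 298 * c + 128;
                int r = clamp((base + 409 * e) >> 8);
                int g = clamp((base - 100 * d - 208 * e) >> 8);
                int b = clamp((base + 516 * d) >> 8);
                out[row * width + col] = pack565(r, g, b);
            }
        }
    }
}
```

RGB565 halves the bytes per pixel compared with 32-bit RGB, which is where the small saving on the texture upload comes from; the per-pixel arithmetic stays the same.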

So anyway ... I went and looked at some of the options for skipping frames, multi-threaded decoding and what have you, and I noticed that threading wasn't enabled in the build.

Cut a long story short - beware of copies of copies of scripts one finds on the net: my compile script was basically shit. I also turned on NEON this time ...

Success!

I'm not sure if it's just the NEON, or the threads - or simply compiling it properly - but boy what a difference.

(Actually it's all those not-so-little bits now adding up: even single-threaded it's much faster, and in performance mode 2 threads can nearly handle 720p.)

So it's now decoding 720p MP4 (taken from the on-board camera) fine, even in 'balanced power saving' mode. Before, it was struggling with this at under 10fps. Now the colour conversion is more like 50% of the time, so I will have to investigate doing it in GLES, since the GPU should be a lot better at it.

Anyway, I'm quite chuffed at this now. I was starting to think it was pretty much pointless apart from perhaps encoding or more control over decoding, but this level of performance opens up a lot of possibilities. I'm also still only using the ffmpeg 0.10.0 release, so newer versions might have more to offer too.

Update: So I kept going and added the GL colour conversion. It's quite a bit better and 720p is fine with 2 threads, but it still can't handle 1080p from the built-in camera.
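A GPU colour conversion of this sort usually comes down to a small fragment shader. Here is a sketch of one, kept as a Java string as it would be for GLES20.glShaderSource(). It assumes the Y, U and V planes are uploaded as three separate GL_LUMINANCE textures (so `.r` holds each plane's value), and the varying and uniform names are hypothetical. The same normalised texture coordinate works for the half-size U and V textures.

```java
public final class YuvShader {
    private YuvShader() {}

    // GLSL ES 1.00 fragment shader applying BT.601 studio-range coefficients.
    // 0.0625 and 0.5 approximate the 16/255 luma offset and 128/255 chroma offset.
    public static final String FRAGMENT =
        "precision mediump float;\n" +
        "varying vec2 vTexCoord;\n" +
        "uniform sampler2D yTex;\n" +
        "uniform sampler2D uTex;\n" +
        "uniform sampler2D vTex;\n" +
        "void main() {\n" +
        "  float y = 1.164 * (texture2D(yTex, vTexCoord).r - 0.0625);\n" +
        "  float u = texture2D(uTex, vTexCoord).r - 0.5;\n" +
        "  float v = texture2D(vTex, vTexCoord).r - 0.5;\n" +
        "  gl_FragColor = vec4(y + 1.596 * v,\n" +
        "                      y - 0.391 * u - 0.813 * v,\n" +
        "                      y + 2.018 * u,\n" +
        "                      1.0);\n" +
        "}\n";
}
```

Uploading three single-channel planes with glTexSubImage2D moves fewer bytes than a converted RGB frame. If the plane widths aren't multiples of 4, set GL_UNPACK_ALIGNMENT to 1 first.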

Android Camera & Video, OpenGL

Ahh, somehow I ended up hacking most of Saturday rather than taking advantage of the relatively pleasant weather to do something better. Addicted I guess. If the weather holds today ... maybe I'll get out.

I was just going to have a quick look at Android camera operation, but one thing led to another ...

After I got a basic camera view working, I wanted to work out how to present it in OpenGL. So I got pretty side-tracked trying to work out some OpenGL ES 2 stuff (the examples and suggestions on the net were usually small fragments or for OpenGL ES 1) until I found the Android SDK sample they all came from, which had everything I needed (GL2CameraEyes or somesuch; my workstation is off now). GLES2 is a bit of a pain and requires a lot of scaffolding to get started, but I guess it is what it is.

So yeah, camera to texture, texture to display, etc.

Also checked out taking photos, and recording videos - although the latter requires a preview view, and wouldn't work with a GL 'preview' window so I gave up on that in this context.

The basic objects are a bit bare, but they provide enough functionality to be usable, and are generally fairly simple to use once you know how.

"Broadcast Quality"

One thing that is a bit disappointing is the frame rate achievable. Even with a simple GL render loop it doesn't match the frame-rate properly and you get skips and drops. It's almost like the screen and GL are refreshing at different rates. I tried a dirty-refresh-on-camera-frame, as well as update-continuously-refresh-texture-when-camera-frame, but both ways were simply not smooth.

For fucks sake even a fucking Commodore 64 could generate video-rate-smooth graphics.

(Even something like GL gears, a simple demo which should easily manage a solid and smooth 60fps, is not properly smooth, although it might be the internal animation calculations that are out).

Because of this, and the stupid choice to use a 60Hz panel (my laptops run at 50Hz for this very reason), one of the few things that might actually be useful on a tablet, watching recorded TV shows or movies, is actually a pretty crappy experience (if you can even get it to work in the first place). Movies at 25fps on a 50Hz display look a LOT LOT better than telecined crap on a 60Hz one.
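The judder comes down to simple arithmetic. If each source frame goes up at the first refresh at or after its timestamp and stays until the next frame replaces it, you can count how many refreshes each frame is held for. This is a small sketch that models plain frame repeats only (real telecine works on interlaced fields), with a made-up class name:

```java
import java.util.ArrayList;
import java.util.List;

public class Cadence {
    /**
     * How many display refreshes each of the first {@code sourceFrames}
     * frames stays on screen, when frame f is shown from the first refresh
     * at or after its timestamp f / sourceFps until frame f + 1 appears.
     */
    public static List<Integer> holdCounts(int sourceFps, int displayHz, int sourceFrames) {
        List<Integer> counts = new ArrayList<>();
        for (int f = 0; f < sourceFrames; f++) {
            int start = ceilDiv(f * displayHz, sourceFps);
            int end = ceilDiv((f + 1) * displayHz, sourceFps);
            counts.add(end - start);
        }
        return counts;
    }

    // Ceiling division for non-negative ints.
    private static int ceilDiv(int a, int b) {
        return (a + b - 1) / b;
    }

    public static void main(String[] args) {
        System.out.println(holdCounts(25, 50, 5)); // [2, 2, 2, 2, 2]: every frame held equally
        System.out.println(holdCounts(24, 60, 4)); // [3, 2, 3, 2]: classic 3:2 pulldown
        System.out.println(holdCounts(25, 60, 5)); // [3, 2, 3, 2, 2]: uneven, visible judder
        System.out.println(holdCounts(60, 50, 6)); // [1, 1, 1, 1, 1, 0]: every 6th frame dropped
    }
}
```

The last case is the nearest-frame 60-to-50 conversion: one frame in six simply never reaches the screen.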

People are so used to totally woeful 'PC graphics' these days that nobody even notices or cares (hurrah: at least there's no bloody tearing!).

Even TV stations no longer seem to care about 'broadcast quality' any more (I guess the old guard engineers who knew the difference are being replaced by kids who spent more time on YouTube than watching broadcast TV). Sport looks like it's been shot with a pocket camera and run through a single pass of ffmpeg encoding at middling settings: severely blocky from lack of bandwidth and just not very good. And we're often getting American versions of movies now, i.e. ones which have been fucked up by being telecined to 60fps to start with, and here they are just blasted out at 50fps using simple nearest-frame or sometimes averaged frames 5 and 6 output, both of which look totally totally shithouse (9, 7, and 10, I'm looking at you). And even when they do have PAL source, sometimes the interlaced frames are flattened to 25fps (AND THEN SCALED!!!) and encoded at 25fps progressive: which is astoundingly bad.

So what did I buy that giant TV for again? Well at least I can read the current score or the news ticker from the couch ...

JJPlayer

Although I'm pretty knackered after a week of hacking on android user interface and REST stuff, a few things fell together near the end of today so I felt like I had a bit more energy to poke around jjmpeg on android.

I converted most of the rest of the binding to use integers as pointers rather than ByteBuffers, and after a bit of debugging managed to read a frame in ok. I'm still holding out on the AVIOContext stuff as it's a bit of a mess.

Using integers and looking up the value as a member variable on the lowest concrete class works pretty well. In some cases it even simplifies the binding as for example I don't need special 'object holder' classes for in/out parameters. I got sick of trying to get the horrid mess of a binding generator to work for all cases and hand-coded these in/out functions as well: it's only 3 calls and a lot less work just doing it by hand than making the generator handle all cases.

Performance doesn't seem terribly hot, but then I'm not au fait with the myriad of ARM compiler options in gcc. And in any event it looks like my build script had a bug and only built using the defaults anyway (ok, so I fixed that whilst typing this and it's a bit faster, but who cares anyway, it's not the goal to beat the hardware decoder at MP4).

I noticed that I branched for 0.10 before I'd finished off some of the API, so I will have to back-port a bunch of stuff to make it more usable.

I'm also considering simplifying the object model significantly: at the moment 4 classes (6, if I want transparent 64-bit/32-bit support) go together to make 2 objects for each C object, which allows for automatic garbage collection to make the API more Java-friendly. It works quite well, at least on the Oracle and OpenJDK JVMs, but adds a bit of extra crap to the binding. I could simplify it down to 2 (4) classes and 1 object if I force the developer to manage memory explicitly. But what I have works, so I am in no rush on that one. One doesn't need to create very many objects to decode a video, so it's hardly a big overhead. And although freeing them explicitly isn't much of an effort either, it's still nice to have it work automatically.
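The simpler "1 object, explicit free" option might look something like this: a single Java object holds the C pointer in an int field and releases it in close(), so try-with-resources can do the freeing. This is only a sketch. The JNI side is replaced with hypothetical pure-Java stand-ins (`nativeAlloc`/`nativeFree`) so it runs anywhere, and none of these names are jjmpeg's real API.

```java
import java.util.HashSet;
import java.util.Set;

public class NativeHandleDemo {
    // Hypothetical stand-in for the C heap: tracks "live" pointers so the
    // sketch runs without JNI. A real binding would call into native code.
    public static final Set<Integer> LIVE = new HashSet<>();
    private static int nextPointer = 0x1000;

    static synchronized int nativeAlloc() {
        int p = nextPointer;
        nextPointer += 16;
        LIVE.add(p);
        return p;
    }

    static synchronized void nativeFree(int p) {
        LIVE.remove(p);
    }

    /** One Java object per C object; the pointer lives in an int field. */
    public static class Frame implements AutoCloseable {
        private int p;

        public Frame() {
            p = nativeAlloc();
        }

        public int pointer() {
            if (p == 0) {
                throw new IllegalStateException("already freed");
            }
            return p;
        }

        @Override
        public void close() {
            // Idempotent, so a second close() can't double-free.
            if (p != 0) {
                nativeFree(p);
                p = 0;
            }
        }
    }
}
```

The trade-off is exactly the one above: forget to close() and the native memory leaks, whereas the current multi-object design lets the garbage collector clean up.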

My local code-base is a bit of a mess. I couldn't get NetBeans to open the Android project as an Android project properly until I created a new directory, created another blank project with the android tool, and then copied the source around till it worked. So it might be a bit of hassle copying this all back to a versioned tree and checking it in.

android hacking, jjmpeg

So winter has sort of started here, although we had a bit of sun today with a bit of heat in it. It tends to settle in from about ANZAC Day (the 25th of April), and we're only a few days early.

Which means: I don't have much to do apart from cook and hack. Still a bit tired from the previous week so I didn't get into much but I had enough time between drinks to have a look.

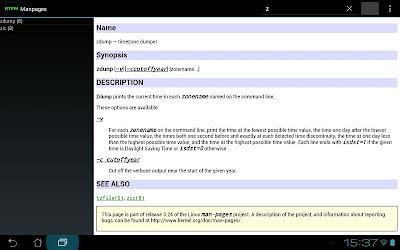

RTFM

Yesterday I spent a few hours playing around with the Manpages source. I seemed to get a lot done but after looking at what I came up with, it didn't seem to amount to much ... just a bit of GUI re-arrangement to make it take advantage of a tablet. I don't need man pages on a tablet, but I am thinking of how to do a nice interface for accessing documentation as it does seem something the tablet form-factor might actually be useful for. Starting with Manpages just saved me a bit of faffing about.

I first started playing around with the search function: making it use a SearchView, and changing it to filter the list of man pages rather than coming up with a redundant 'shortlist' completion list. I also tried voice search, although it really just doesn't work well for unix commands (grep == brett, great, grit, etc). The thing that probably took me the most time was working out how to get the search box to work nicely, such as getting rid of the on-screen keyboard when it isn't needed. But eventually I got that behaving quite nicely.

The filter stuff isn't very well documented, but it wasn't too difficult to work out. The built-in list filtering has a silly stylistic choice of overlaying the list with a very large label of the filtering string, but it was only a couple of lines of code to remove it by doing it manually. I implemented it using a tree, so an interactive prefix search runs in real-time with no trouble at all.
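One way to get a real-time prefix filter out of a tree without writing one is a sorted set's range view. Every name starting with a prefix sorts between the prefix itself and the prefix followed by the largest char value. This is an illustrative sketch using java.util.TreeSet, not necessarily the tree used in the app:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableSet;
import java.util.TreeSet;

public class PrefixFilter {
    private final NavigableSet<String> names = new TreeSet<>();

    public PrefixFilter(Iterable<String> entries) {
        for (String e : entries) {
            names.add(e);
        }
    }

    /** All entries starting with {@code prefix}, in sorted order. */
    public List<String> matching(String prefix) {
        // subSet is a view backed by the tree, so finding the range is
        // O(log n); copying it out is linear in the number of matches.
        return new ArrayList<>(names.subSet(prefix, true, prefix + Character.MAX_VALUE, true));
    }

    public static void main(String[] args) {
        PrefixFilter pages = new PrefixFilter(List.of("grep", "grip", "groff", "gzip", "awk"));
        System.out.println(pages.matching("gr")); // [grep, grip, groff]
    }
}
```

Because the lookup cost doesn't grow with every keystroke, the list can be re-filtered on each character typed with no noticeable lag.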

I'm still only experimenting but if it goes somewhere this might end up another project to divide my time (an android version of 'ReaderZ'?).

jjmpeg

Today I thought I'd instead have a poke at jjmpeg. I'd already had FFmpeg building from source using the NDK, but not gone anywhere with the jjmpeg stuff.

I'm in two minds over this. I don't really need it, and am not particularly interested in writing a video player, although the free ones on the market are pretty crap (and they expect me to pay for ffmpeg? I don't think so tim ...). But it might be useful for work, and it's a good opportunity for some self-education on mobile hardware, OpenGL ES, and so on.

So I've branched jjmpeg, and started work on an Android-specific port: things are different enough that at the moment that branch will only build the Android code. For example, I am linking directly to ffmpeg's libraries, so I've removed all the dynamic loader stuff.

But anyway, don't expect rapid progress.

GPL3

I've also decided the whole library will be GPL3 - for a couple of reasons.

First, since I have to distribute FFmpeg in the binary, I have to distribute the source now too. If I have to deal with that crap I may as well ask for reciprocation.

Secondly, many Android developers seem to be utter leech-tards, and don't mind wrapping anything they can get hold of in a new name and trying to make money off it. I don't really need to contribute to that in any way; after all, I didn't do all the hard work which makes jjmpeg work.

And finally, and related to the previous: the LGPL requires that all the LGPL code be replaceable by the user. But with android this is quite a difficult process which prejudices the user. It's not like GPL will make building with the NDK any easier; but at least it means those capable of doing so end up with more than just a new makefile for ffmpeg which is about all the LGPL requires.

A week of the android sdk

Well, a work-week.

It's a pretty crap API. All that really nasty XML and pre-compiled resources: maybe it makes sense if you're really resource constrained, but I can't see how that's an issue for the future. The whole XML stuff is pretty annoying too: it's a horrible language, it's hard to write and harder to debug. Repeatedly I see claims that it's all about 'good practice to separate view from logic'. Sure it is, but you don't need to learn some fucked up shitty language to do that; you can just do it in a programming language too. And I can't see how XML is any easier to learn than the tiny bit of code you need to do it programmatically. If you dig hard enough you can work out how to avoid it, but it's a constant battle with the documentation.

I think XML is only popular because many people have only learned how to use a rock as a tool and to them every problem looks like a nut that needs smashing.

A lot of the api is similarly infused with short-sighted decisions [such as the pre-compiled resource id stuff].

Which means ... the APIs are constantly changing to cover up earlier mistakes. I'm not sure exactly what the fragment stuff is about, for example; it seems to be important for some reason but I'm not sure what yet (but such things are usually the way when encountering a new toolkit). It also means the documentation is a bit all over the place: old APIs aren't clearly marked as deprecated even when 'the new way is not to use them', and neither are any third party resources. Stack Overflow has been invaluable for sorting the wheat from the chaff.

Still, even with the weird APIs and broken cut-down Java runtime, it's pretty easy to create fairly slick, responsive applications. They've gone some way to hiding the 'complexity' of multi-threaded applications (which is needed to make this a reality) and introduced it to neophyte programmers who probably would have shied away from it, even though all modern toolkits provide similar levels of support. I've always preferred threads over callbacks (it's a lot easier to maintain state in local variables and for loops than in callbacks and callback 'user data'), and the fact it scales cleanly on multi-core hardware is just a bonus.
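To make the threads-versus-callbacks point concrete, here is a toy comparison computing the same running total both ways. On a worker thread the loop index and total are just locals. In callback style the same state has to be packed into an explicit "user data" object that survives between invocations. The recursion below only stands in for an event loop re-invoking the callback, and all names are made up for illustration.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.IntConsumer;

public class ThreadVsCallback {
    // Threaded style: the state is plain local variables in an ordinary loop.
    public static int sumBlocking(List<Integer> chunks) {
        int total = 0;
        for (int chunk : chunks) {
            total += chunk; // imagine a blocking read or decode per chunk here
        }
        return total;
    }

    // Callback style: the same state lives in an explicit "user data" object.
    public static final class SumState {
        int index;
        int total;
    }

    public static void sumWithCallbacks(List<Integer> chunks, SumState state, IntConsumer done) {
        if (state.index == chunks.size()) {
            done.accept(state.total);
            return;
        }
        state.total += chunks.get(state.index++);
        // A real event loop would invoke this again when the next chunk arrives.
        sumWithCallbacks(chunks, state, done);
    }

    public static void main(String[] args) throws Exception {
        List<Integer> chunks = List.of(1, 2, 3, 4);
        ExecutorService worker = Executors.newSingleThreadExecutor();
        Future<Integer> result = worker.submit(() -> sumBlocking(chunks));
        System.out.println(result.get()); // 10
        worker.shutdown();

        sumWithCallbacks(chunks, new SumState(), total -> System.out.println(total)); // 10
    }
}
```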

HTML5

Compare that to the HTML5 version, which looked like arse (we hadn't even thought about styling it yet), had limited user interaction, and had all sorts of platform problems. In Android we had a slicker interface in less time. It took a bit more code, but not much (and it was simple Java), and it already had decent styling and proper user interaction. And well, in HTML5 it was still just a web page ...

One just has to compare YouTube in the browser (any browser) with the Android YouTube application. It's like comparing apples and oranges, it really is. And if that's all Google can come up with, with all their experience and almost infinite resources, what hope does anyone else have?

If I were Mozilla I'd be shaking in my boots. I'm surprised Google haven't come out with a browser plugin or standalone 'ARE' (Android runtime environment) yet. As a TV/flat surface toolkit/runtime, it's strides ahead of HTML5. Strides.

I'm also surprised Mozilla (or MS) hasn't come up with a really solid, high quality JavaScript extension library (which must be cross platform, but which they would bundle) to turn what is a simple low-level scripting language runtime environment, totally inadequate and utterly woeful for desktop application production, into a usable platform. Right now, using half-arsed, poorly designed third party libraries is really a struggle. You have to learn their strange 'flavour of the week' API conventions, and then all you've learnt to use is a crappy toolkit which doesn't work very well.

The only thing programming in HTML5 gives you is ubiquity - or at least the hope that most people on most hardware will be able to run some version of your application. It's still just a hope.

If only ...

The real pity with Android is that it isn't using a proper JVM and a full Java SE environment (minus the toolkit). I guess when it was conceived the hardware was pretty minimal, but that isn't the case now, let alone in the future.

The other pity is that many of the applications are using the same web model for revenue - i.e. spying on users for fun and profit. I wonder how sustainable this revenue model really is globally: developers get a pittance and most of the money goes to a handful of giant multi-national corporations who siphon it away from the country and avoid paying their fair share of taxes.

Sure, a few 'hit the jackpot' and make a good living, but that sounds too much like everyone is playing at a casino. And clearly casinos are really just a tax on stupid people: the house is the only real winner. Always.

What now?

Well, I'm still not sure what I will hack on now I have an Android machine. I might look at porting jjmpeg or something, but probably only do it properly if I will use it for work. The utter shit-house video players available on the Android market (clearly mostly based on FFmpeg, and usually without source) could be a driving factor, but I don't personally need another video player, and doing it alone would only be for the novelty value and to learn about the problems one faces in such environments.

I've also been keeping an eye on the Rhombus Tech/Allwinner A10 stuff, and thinking about getting one of these to play with. I have some other motives for that too. I bought a cheap Kogan dual-tuner DVR, but it's been a pretty crappy experience: the software is shit, the remote stops working if it gets humid, and it keeps rebooting itself (I did find out about getting an RMA but just couldn't be fagged with all the hassles of having to ship it back with the proviso 'you have to pay for it if we can't find a fault'; at the time the faults were intermittent, then the remote didn't work for a couple of months, and now it's working again but it's rebooting with a HDD plugged in). For $100 I may as well just throw it in the bin, or open it up and poke at it for some entertainment. I don't use it myself, but it was a present and I'm a bit pissed off that it's just such junk (what I should have expected, I guess).

But for now it's a wonderfully warm autumn day, I need to mow the lawn, and there's cold beer in the fridge. Hacking can wait.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!