About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Beware the malloc ...

So one of the earliest performance lessons I learnt was to try to avoid allocating and freeing memory during some processing step. From bss sections, to stack pointer manipulation, to memory pools.

This was particularly important with Amiga code with its single-threaded first-fit allocator, but also with SunOS and Solaris. It wasn't until a few versions of glibc in, together with faster hardware, that it became less of an issue on GNU systems. And with the JVM many of the allocation scenarios that were still a problem with libc (e.g. many small or short-lived objects) simply vanished (there are others to worry about, but they are easier to deal with ... usually).

It was something I utilised when I wrote zvt to make it quick. Unfortunately whoever started maintaining it after I started at Ximian (I wasn't allowed time to work on zvt anymore, and Ximian kept me a bit too busy to keep it going as a hobby) didn't understand why the code did that, and it was one of the first things to go ... although by then on a GNU system it wasn't so much of an issue.

But even with a super-computer on the desk it's still a fairly major issue with GPU code. Knowing this, I always pre-allocate buffers for a given pipeline and let OpenCL virtualise them if required (or, more likely, just run out of memory), but today I had a graphic reinforcement of just why this is such a good idea.

After hacking all week I managed to improve the 'kernel time' of a specific high-level algorithm from 50ms to about 6ms. I was pretty damn chuffed, particularly as it also works better at what it's doing.

However, when I finally hooked it up to a working tech demo, the performance improvement plummeted to only about 3x. One expects quite a lot of overhead from a first-cut synchronous implementation going c-java-opencl-java-javafx, but that seemed unreasonable: it looked like every kernel invocation had nearly 1ms of overhead.

Without any way to use the sprofile output at that level (nanosecond timestamps aren't visually rich ...) I added some manual timing and tracked it down to one routine. It turned out that my port of the Apple FFT code was re-allocating its temporary work-space whenever the batch size changed, rather than only when it grew. Those 2 frees and 2 allocations alone were taking 20ms, completely swamping the processing and the other overheads.
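The fix is simply to reallocate only when the required size grows. A minimal sketch of that grow-only policy in plain Java follows; it assumes a direct ByteBuffer as a stand-in for the OpenCL work-space buffers (the real FFT port allocates device memory, which isn't shown here), and the `Workspace` class and its methods are hypothetical.

```java
import java.nio.ByteBuffer;

/**
 * Hypothetical grow-only work-space: the backing buffer is only replaced
 * when a request exceeds its current capacity, so changing the batch size
 * back and forth costs nothing after the largest size has been seen.
 */
class Workspace {
    private ByteBuffer buffer;
    private int allocations;  // counts real allocations, for illustration

    /** Returns a buffer with at least {@code bytes} capacity, limited to {@code bytes}. */
    ByteBuffer require(int bytes) {
        if (buffer == null || buffer.capacity() < bytes) {
            // Only grow: drop the old buffer and allocate a larger one.
            buffer = ByteBuffer.allocateDirect(bytes);
            allocations++;
        }
        buffer.clear();       // position 0, limit = capacity
        buffer.limit(bytes);  // expose only what this batch needs
        return buffer;
    }

    int allocations() {
        return allocations;
    }
}
```

Requesting 1024, 256, 1024 and then 512 bytes performs a single allocation; only a request larger than the current capacity replaces the buffer.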

Whilst at 15ms, with about 60% of the time spent in setup, data transfer, and invocation overheads, it is still pretty poor, it is acceptable for this particular application as a first-cut single-queue synchronous implementation. Actually, apart from running much faster, the new routine barely warms up the GPU and the rest of the system remains more responsive. I must try a newer driver to see if it improves anything; I'm still on 12.x.

Bring on HSA ... I really want to see how OpenCL will work with the better architecture of HSA. Maybe the GCN-equipped APUs will have enough capability to show where it's headed, if not where it's going to end up.

Why is the interesting hardware always 6-12 months away?

industrial fudge

It survives ... nearly 20 years on.

Never did finish and write the 'AGA' version ... but there were some pretty good reasons for that. When it was released some scumbag stole my second floppy drive, the next night I shorted my home-made video cable and blew out the blue signal, and I'd had only 2 hours sleep over a 96 hour stretch ... so I kinda lost interest for a while. I can't remember having slept particularly well since.

Hardware was so much simpler (and more fun) back then.

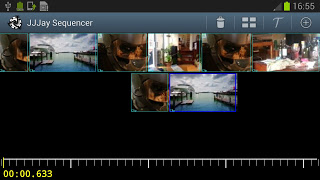

ActionBar, cramped screen-space, etc.

So after a few UI changes I tried jjjay on the tablet ... oh hang on, there's no menu button and no way to bring up the menu.

That's one ui design idea out the window ...

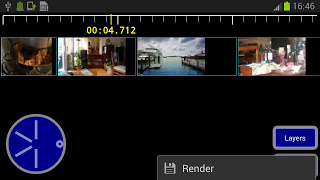

So basically I am forced to use an action bar, and seeing how that is the case I decided to get rid of everything else and just use the action bar for the buttons. Goodbye jog-wheel: it wasn't really doing anything useful on that screen anyway (unless I added a preview window, but that's going to be too slow and take up too much room on a phone). I also moved the scale/scrollbar thing to the bottom of the screen, which is much more usable on a phone, as otherwise it's too easy to hit the action bar or the notification pull-down by accident.

It creates a more uncluttered view so I suppose it was a better idea anyway.

I fixed a few other little things, played with hooking up buttons to focus/action, and experimented with a 'fling' animator, but at this point some bits of the hacked-up prototype code are starting to collapse in upon themselves. It's not really worth spending much more time trying to get it to do other things without a good reorganisation and clean-up of some fairly sizeable sections.

I need a model for the time scale pan/zoom, although I could live without that for the time being. A model for the sequencer is probably more necessary, otherwise adding basic operations such as delete gets messier and messier. There's still a bunch of basic interface required too: setting project and rendering particulars, and basic clip details such as transition time and effects.

So this is basically where the prototype development ends and the application development begins. 90% of the effort left? Yes, probably. 6 days of prototype to 2 months of application development ... hmm, sounds fairly reasonable if a little on the optimistic side; generally it takes 3 writes to get something right.

I guess I'll find out if I keep poking at it ...

threads, memory, database, service.

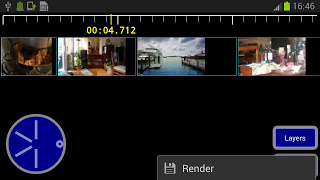

I hooked up the video generator to the jjjay interface last night and did some playing around on a phone form factor.

The video reading and composing were pretty slow, so I moved them into separate threads and had them prepare frames in advance. With those changes frames are generally ready in Bitmap form before they're needed, and 80% of the main thread's time goes to the output codec. This also allowed a better frame selection scheme, which should let me implement some simple frame interpolation for timebase correction. The frame consumer keeps track of two frames at once, bracketing the current timestamp, and currently chooses the lower one that is still in range. The frame producer just spits frames into a blocking queue from another thread. Before, I had some nasty pull logic and a nearby-frame cache, but that is the kind of dumb design decision one makes in design-as-you-go prototype code written at some funny hour of the night.
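A minimal sketch of that producer/consumer arrangement, assuming frames carry a presentation timestamp in microseconds and that output timestamps only move forward. `Frame`, `FrameSelector` and `FrameProducer` are hypothetical names, the String payload stands in for a Bitmap, and the end-of-stream sentinel is just one way to stop the consumer blocking forever:

```java
import java.util.concurrent.BlockingQueue;

/** A decoded frame and its presentation time in microseconds; payload stands in for a Bitmap. */
final class Frame {
    final long pts;
    final String payload;

    Frame(long pts, String payload) {
        this.pts = pts;
        this.payload = payload;
    }
}

/**
 * Consumer side: keeps the two frames bracketing the requested time
 * (prev.pts <= t < next.pts) and currently returns the lower one.
 */
final class FrameSelector {
    /** End-of-stream sentinel: its timestamp is later than any real request. */
    static final Frame END = new Frame(Long.MAX_VALUE, null);

    private final BlockingQueue<Frame> queue;
    private Frame prev;
    private Frame next;

    FrameSelector(BlockingQueue<Frame> queue) {
        this.queue = queue;
    }

    /** Frame to show at time t (t must not decrease between calls); null before the first frame. */
    Frame frameAt(long t) throws InterruptedException {
        if (next == null)
            next = queue.take();
        // take() blocks until the producer has decoded far enough ahead.
        while (next.pts <= t) {
            prev = next;
            next = queue.take();
        }
        return prev;
    }
}

/** Producer side: stands in for the decode thread, filling a bounded queue. */
final class FrameProducer {
    static Thread start(BlockingQueue<Frame> queue, int count, long intervalUs) {
        Thread thread = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++)
                    queue.put(new Frame(i * intervalUs, "frame " + i));  // blocks while the queue is full
                queue.put(FrameSelector.END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "frame-producer");
        thread.setDaemon(true);
        thread.start();
        return thread;
    }
}
```

Backing this with a bounded `ArrayBlockingQueue` also caps how many decoded frames are held at once. Replacing `return prev` with a blend of `prev` and `next`, weighted by where `t` falls between them, would give the simple interpolation mentioned above.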

I guess to be practical at higher resolutions hardware encoding will be necessary, but at SD resolution it isn't too bad: VGA @ 25fps encodes at around 1-2x realtime on a quad-core phone, depending mostly on the source material. I think that's liveable. 1280x720 x264 at 1Mb/s was about 1/4 realtime. I suppose I should investigate adding libx264 to the build too.

I cleaned up some of the frame copying and so on by copying the AVFrame directly to a Bitmap; it still needs some pretty slow software YUV conversion, but that's an issue for another day. Memory use exploded once I started decoding frames in other threads, which was puzzling because it should've been an improvement on the previous iteration, where all codecs were opened for all clips in the whole scene. But maybe I just didn't look at the numbers. I guess when you do the sums, 5x HD RGBA frames add up pretty quickly, so I reduced the buffering.
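Doing those sums, under the assumption that "HD" here means the 1280x720 frames mentioned above, held at 4 bytes per RGBA pixel:

$$
1280 \times 720 \times 4 = 3\,686\,400\ \text{bytes} \approx 3.5\ \text{MiB per frame},
\qquad
5 \times 3\,686\,400 = 18\,432\,000\ \text{bytes} \approx 17.6\ \text{MiB}
$$

At 1920x1080 the same five frames would come to about 39.6 MiB; either way, every extra buffered frame costs megabytes.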

It was fun to finally get some output from the full interface, and as mentioned in the last post it helps expose the usability issues. I had to play a bit with the interface to make it fit the phone better, but I'm still not particularly happy with the sequence editor: it's just hard fitting enough information on the screen at a reasonable size in a usable manner. I changed the database schema so that clips are global rather than per-project, and now create and store clip/video icons along with the data.

So with a bit more consolidation and a couple of essential features it'll approach an alpha state. Things like the rendering need to be moved to a service as well. And I need to work out the build ... ugh.

At least I have worked out a (slightly hacked-up) way to use jjmpeg from another Android project without too much pain. It involves some copying of files, but softlinks would probably work too (jjmpeg only builds on a GNU system, so I don't think that's a big problem). Essentially jjmpeg-core.jar is copied to the libs directory and libjjmpegNNN.so is referenced as a prebuilt library in the project's own jni/Android.mk. If I created an Android library project for jjmpeg-core this could be mostly automatic (apart from the make inside jni).

Incidentally, as part of this I've used the on-phone camera to take some test shots, and I'm really pretty disappointed with the quality. Phones still have a long way to go in that department. I can forgive a $100 Chinese tablet and its front-facing camera for worn-out-VHS quality, but an $800 phone? Using adb logcat on this 'updated, western phone' is also very frustrating: it's full of debug spew from the system and bundled software, which makes it hard to filter out the useful stuff. Cyanogen on the tablet is far quieter.

Rounding to the nearest day jjjay is about 5 days work so far.

Attached Bitmaps

On an unrelated note, I was working on updating a bitmap from an algorithm in a background thread, and this caused lots of crashes. Given the algorithm is 3 KLOC of C and assembly, one naturally suspects the C ... but it turned out to be just the greyscale-to-RGBA conversion code, which I had hacked up in Java for prototype purposes.

Android seems to do OpenGL stuff when you try to load the pixels into an attached Bitmap, and when that is done from another thread in Java, things get screwed up.

However ... it only seems to care if you do it from Java.

By changing the code to update the Bitmap from the JNI code instead, which involves a locking/unlocking step, it all seems good.

Of course, as a side effect, simply moving the greyscale-to-RGBA conversion to a C loop made it run about 10x faster too. Dalvik pretty much sucks for performance.
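For reference, the conversion itself is trivial: each 8-bit grey value is replicated into the R, G and B channels of an opaque ARGB_8888 pixel. A sketch of a plain Java version, assuming an 8-bit greyscale byte array as input; the `Grey` class is hypothetical. The resulting int[] is the packed-ARGB form that `Bitmap.setPixels()` accepts, and going by the experience above it's safest to call that from the UI thread, or to do the whole thing in C via JNI.

```java
/** Hypothetical helper: packs 8-bit greyscale samples into opaque ARGB_8888 ints. */
final class Grey {
    /** Reuses {@code out} when it is large enough, to avoid a per-frame allocation. */
    static int[] toArgb(byte[] grey, int[] out) {
        if (out == null || out.length < grey.length)
            out = new int[grey.length];
        for (int i = 0; i < grey.length; i++) {
            int g = grey[i] & 0xff;  // Java bytes are signed, so mask to 0..255
            out[i] = 0xff000000 | (g << 16) | (g << 8) | g;
        }
        return out;
    }
}
```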

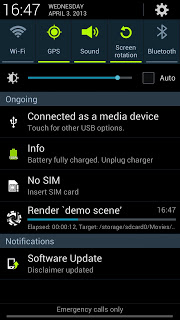

Update: Well, add a couple more hours to the development. I just moved the rendering task to a Service, which overall was easier than I remembered from dealing with Services last time. I guess it helps when you have your own code to look at, and maybe after doing it enough times you learn what is unnecessary fluff. It took me too long to get the Intent-on-finished working (so you can play the resulting video): I wasn't writing the file to a public location.

I cleaned up the interface a bit and moved "render" to a menu item - it's not something you want to press accidentally.

So yeah, it does the rendering in a service, one job at a time via a thread pool, provides a notification with a progress bar, and once it's finished you can click on it to play the video. You know, all the mod-cons we've come to expect.
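The "one job at a time" part needs nothing Android-specific: an executor with a single worker thread runs submitted jobs strictly in order. A minimal sketch, where `RenderQueue`, `RenderJob` and the progress callback are hypothetical stand-ins for the real renderer and the notification progress update:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.BiConsumer;
import java.util.function.IntConsumer;

/** Hypothetical render queue: jobs submitted from the UI run strictly one after another. */
final class RenderQueue {
    /** Stand-in for the real renderer; reports progress in percent as it works. */
    @FunctionalInterface
    interface RenderJob {
        void render(IntConsumer progressPercent) throws Exception;
    }

    // A single worker thread means a second job waits until the first finishes.
    private final ExecutorService executor = Executors.newSingleThreadExecutor();
    // In a Service this would update the notification's progress bar.
    private final BiConsumer<String, Integer> onProgress;

    RenderQueue(BiConsumer<String, Integer> onProgress) {
        this.onProgress = onProgress;
    }

    Future<?> submit(String name, RenderJob job) {
        return executor.submit(() -> {
            job.render(percent -> onProgress.accept(name, percent));
            return null;  // a Callable rather than a Runnable, so render() may throw
        });
    }

    /** Lets queued jobs finish, then stops the worker thread. */
    void shutdown() {
        executor.shutdown();
    }
}
```

In a Service, submit() would presumably be called from onStartCommand(), with the callback posting the notification update; the returned Future is where a finished or failed job could be picked up to fire the "play the video" Intent.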

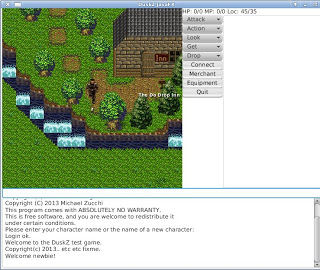

Animated tiles & bigger sprites

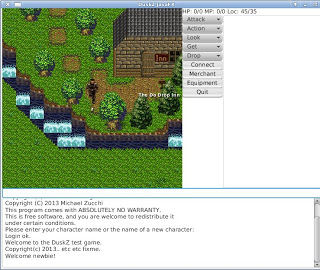

I haven't really had much energy left to play with DuskZ for a while (with work and the Android video editor prototype), but after some prompting from the graphics guy I thought I'd have a go at some low-hanging fruit. One thing I've noticed from my customer at work is that if they casually mention something more than twice, they're more than casually interested in it ...

Mostly based on this code idea I hacked up a very simple prototype of adding animated tiles to Dusk.

One issue I thought I might have was keeping a global sync, so that moving (which currently re-renders all the tiles) doesn't reset the animation. Solution? Well, just leave one animator running and change the set of ImageViews it works with, instead of creating a new animator every time.

i.e. piece of piss.

The graphics artist also thought that scaling the player sprites might be a bit of work, but that was just a 3-line change: delete the code that scaled the ImageView, and adjust the origin to suit the new size.

Obviously one cannot see the animation here, but it's flipping between the base water tile and the 'waterfall' tile, in a rather annoying way reminiscent of Geocities in the days of the flashing tag, although it's reliably keeping regular time. And z suddenly got a lot bigger. To do a better demo I would need to render with a separate water layer and have a better water animation.

This version of the animator class needs to be instantiated for each type of animated tile. e.g. one for water, one for fire, or whatever, but then it updates all the visible tiles at once.

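```java
import java.util.List;

import javafx.animation.Interpolator;
import javafx.animation.Transition;
import javafx.geometry.Rectangle2D;
import javafx.scene.image.ImageView;
import javafx.util.Duration;

/**
 * Cycles a set of ImageViews through a sequence of viewports (sub-rectangles
 * of the tile sheet). One instance per type of animated tile; setNodes() swaps
 * in the currently visible tiles without restarting the animation.
 */
public class TileAnimator extends Transition {

    Rectangle2D[] viewports;

    List<ImageView> nodes;

    public TileAnimator(List<ImageView> nodes, Duration duration, Rectangle2D[] images) {
        setCycleDuration(duration);
        // Transition defaults to EASE_BOTH; linear gives each frame an equal share of the cycle.
        setInterpolator(Interpolator.LINEAR);
        this.viewports = images;
        this.nodes = nodes;
    }

    public void setNodes(List<ImageView> nodes) {
        this.nodes = nodes;
    }

    @Override
    protected void interpolate(double d) {
        // d runs from 0.0 to 1.0 over one cycle; map it to a frame index, clamping d == 1.0.
        int index = Math.min(viewports.length - 1, (int) (d * viewports.length));

        for (ImageView node : nodes)
            node.setViewport(viewports[index]);
    }
}
```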

JavaFX really does most of the work: all the animator does is change the texture coordinates (the ImageView viewport). The hardest part, if you can call it that, will be defining and communicating the animation metadata to the client code.

A bit more on jjj

I spent a few spare hours yesterday and today poking at some of the other basic functions needed for an android video editor. First a list of icons that loads asynchronously and then some database stuff.

The asynchronous loading with caching was more involved than I'd hoped, but I have it (mostly) working. Unfortunately Android calls getView(0) an awful lot, and often on a view which isn't actually used to show the content, so trying to match views to pending requests doesn't always work (for view 0 only).

I've done something like this before using a thread and a request-handling queue, but this time I tried just using a threadpool and futures. I tested it by loading individually offset frames from an mp4 file (i.e. it's pretty slow), and the caching works fairly well: it avoids starting to process frames you've scrolled away from, so the screen refreshes relatively efficiently. The GUI remains smooth and responsive. Apart from the item-0 problem it would be perfectly adequate. And in practice the images would just be loaded from a pre-recorded jpeg, so most of the loading latency would vanish.

After that I came up with a fairly reusable class which lets me add 'async image loading and caching' to any list or gridview, which is a necessity on Android to save on memory requirements. I used it to create a graphical clip selector in only a few lines of code.
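The threadpool-and-futures approach described above might be sketched like this. It's a hypothetical, Android-free reduction, not the actual jjjay class: a "slot" stands for a recycled list item view, the loader function for the slow frame decode, and the LRU capacity and thread count are arbitrary parameters.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Consumer;
import java.util.function.Function;

/** Hypothetical async loader with an LRU cache and per-slot cancellation. */
final class AsyncCache<K, V> {
    private final ExecutorService pool;
    private final Function<K, V> loader;
    private final Map<K, V> cache;                                   // guarded by this
    private final Map<Object, K> bound = new HashMap<>();            // slot -> key it should show
    private final Map<Object, Future<?>> pending = new HashMap<>();  // slot -> in-flight load

    AsyncCache(Function<K, V> loader, int capacity, int threads) {
        this.loader = loader;
        this.pool = Executors.newFixedThreadPool(threads);
        // An access-ordered LinkedHashMap that drops its eldest entry is a simple LRU cache.
        this.cache = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > capacity;
            }
        };
    }

    /**
     * Binds a slot to a key. A cached value is delivered immediately, otherwise
     * it is loaded on the pool. On Android, onLoaded must hop to the UI thread.
     */
    void request(Object slot, K key, Consumer<V> onLoaded) {
        V hit;
        synchronized (this) {
            Future<?> stale = pending.remove(slot);
            if (stale != null)
                stale.cancel(false);  // slot recycled: skip the old load if it hasn't started
            bound.put(slot, key);
            hit = cache.get(key);
            if (hit == null)
                pending.put(slot, pool.submit(() -> load(slot, key, onLoaded)));
        }
        if (hit != null)
            onLoaded.accept(hit);
    }

    private void load(Object slot, K key, Consumer<V> onLoaded) {
        V value = loader.apply(key);
        boolean stillWanted;
        synchronized (this) {
            cache.put(key, value);
            // Only deliver if the slot hasn't since been re-bound to another item.
            stillWanted = key.equals(bound.get(slot));
            if (stillWanted)
                pending.remove(slot);
        }
        if (stillWanted)
            onLoaded.accept(value);
    }

    void shutdown() {
        pool.shutdown();
    }
}
```

`cancel(false)` only stops queued loads from starting; a load already in progress still finishes and populates the cache, which is usually what you want when scrolling back.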

And today I filled out another big chunk: creating a database to store everything. I tried to keep it as simple as possible but it's still fairly involved. I was thinking of using Lucene just as an index, but then I decided I really did want the referential integrity guaranteed by Berkeley DB JE, so I used that instead. It's got a really great API for working with POJOs and it's pretty simple to set up complex relational databases using annotations. The only real drawback is that if you need complex joins and so on you have to code them by hand; but I don't need them here, and besides, the complex joinery in SQL often means you have to write messy code to handle it anyway. It's not something I miss having to deal with.

Then I further hacked up the hackish sequence editor to fit in with the DB backend. Actually I hacked up quite a bit of glue to tie together all the GUI work I have done so far:

- Projects

  The main window is a project window, from which you browse the projects and create another one. Clicking on a project jumps straight into the scene editor. I'm using an AsyncTaskLoader to open the database, which can take a second or so.

  A project will have a collection of scenes and probably an output format, although initially I am only implementing a single scene, as otherwise it seems to add too much complication to the interface.

- Scene

  This is currently the sequence editor as mentioned in the last post. Tracks/layers can be edited using drag and drop. Clicking on "add clip" brings up the Clip manager, and after a clip is selected it is added to the scene. Changes are persisted to the database as they occur.

- Clip manager

  This brings up a list of clips which have been defined for the given project. Clicking on a clip just selects it and returns it to the caller (i.e. the Scene). There is also an "add" menu item which brings up a file requester (using the standard Android "get content" Intent) to let you choose a video file. Once one is selected, it jumps to the Clip editor.

  The database tracks the video files used in a separate table, but I'm not sure adding a separate GUI for that is terribly useful; it would just be clutter really. But the table will be used if I want to transcode videos into a quick-editing format.

- Clip Editor

  This is the first interface I mentioned a couple of posts ago. It lets one mark a single region within a video file and then save it as a clip, which is then saved back into the clip manager.

It's surprising how much junk you have to write to get anything going. It's not like a command-line programme where you can just add another switch in a couple of lines of code; you need a whole new activity, layout, intent management, response handling, blah blah etc etc.

So I guess another two half-days takes the total effort to about 4 days, although I should probably round it up to 5 to be a bit more conservative. A few hours more to hook in the prototype renderer and I would have a basic video editor done: not bad for a weekend hack, even if it was a particularly super-long weekend ;-). There are of course some fairly important features missing, such as transitions and animations and so forth.

Having it at this level of integration also begins to show up all the usability issues and edge cases I've ignored until now. It is also where one can investigate alternatives fairly cheaply: e.g. does it really work having clips per-project, or should they be a globally available resource library? This is what prototypes are for, after all ...

It's also starting to approach the point where the hacking for entertainment starts to turn south; maintaining and fixing vs exploring and creating.

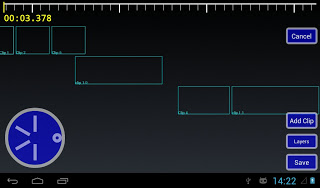

jjj sequence editor

Sometimes I hate it when I have ideas in my head and they just won't go. Last night I was getting bored with TV and kept thinking about a sequence editor (the most complex single interface component I'm after), so I fired up the computer and hacked from 11pm till 3am or so. The less sleep I get the more "anxious to get shit done" I seem to be, until I fall apart.

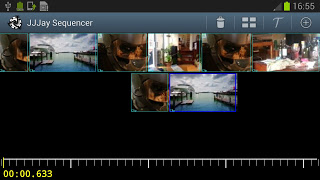

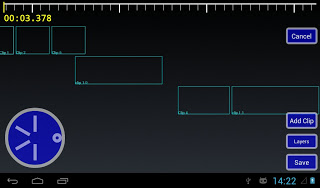

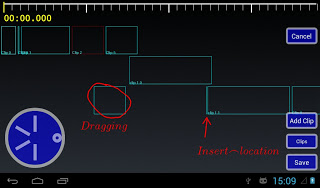

So although it's not much to look at yet - and the code behind it is even worse - last night together with a few hours today I came up with the following interface and all the guts to make it work.

I'm not sure if I need the jog-wheel there, as the functionality is provided by the scale too, but it lets one move the timeline forwards/backwards. I also doubt I need to support any number of layers, but I suppose I may as well: once you have more than one, any number isn't much harder.

The scale at the top can be dragged, in which case it acts like a scroll-bar, or pressed with two fingers to zoom in and out.
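The drag and pinch behaviour reduces to a small model mapping screen position to time. A sketch, assuming times in microseconds and a linear scale; `TimeScale` and its methods are hypothetical, and keeping the time under the fingers' midpoint fixed during a pinch is one common choice rather than necessarily what this editor does.

```java
/**
 * Hypothetical time-scale model for the sequence editor: maps screen x
 * (pixels) to time (microseconds) using a scroll offset and a zoom factor.
 */
final class TimeScale {
    private double startTime;   // time shown at x = 0, in microseconds
    private double usPerPixel;  // zoom level: larger means more time on screen

    TimeScale(double startTime, double usPerPixel) {
        this.startTime = startTime;
        this.usPerPixel = usPerPixel;
    }

    double toTime(double x) {
        return startTime + x * usPerPixel;
    }

    double toX(double time) {
        return (time - startTime) / usPerPixel;
    }

    /** Scrolls the visible window by dx pixels' worth of time (positive = later). */
    void scrollBy(double dx) {
        startTime += dx * usPerPixel;
    }

    /**
     * Two-finger zoom: oldSpan/newSpan are the distances between the fingers
     * before and after the move, focusX their midpoint. The time under the
     * midpoint stays put while the scale changes around it.
     */
    void pinch(double oldSpan, double newSpan, double focusX) {
        double focusTime = toTime(focusX);
        usPerPixel *= oldSpan / newSpan;  // fingers moving apart -> fewer us per pixel -> zoom in
        startTime = focusTime - focusX * usPerPixel;
    }
}
```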

The main sequence interface is modal, in that you are either working with 'layers' (or 'tracks'?) or clips. The "Layers" button is a toggle which switches modes, although I think I need a better interface design for that.

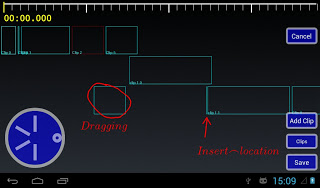

I don't have animations (I'm kind of up in the air on them, as most of the time they just piss me off with their added delay) but the clips can be dragged and dropped around the sequence in fairly obvious ways. For example, dragging a clip creates a layer-locked box which follows your finger, and a | indicator showing where it can be inserted. Letting go moves the clip to the new location, creating new layers as required.

Layers work similarly, except that layers can be re-arranged and the start position of a layer can be altered. All clips within a layer always run one after another. Moving the first clip causes the layer origin to be moved so the rest of the content isn't changed, and so on, so it kind of 'just works' like you'd expect.
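Because clips in a layer always run back-to-back, a layer only needs an origin and the clips' durations; start times are derived. A hypothetical sketch of that model, with clips reduced to durations in microseconds, showing how removing the first clip shifts the origin so the remaining clips stay where they were:

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical layer model: clips in a layer always play back-to-back from
 * the layer's origin, so only durations are stored and start times derived.
 */
final class Layer {
    private long origin;  // start of the first clip, in microseconds
    private final List<Long> durations = new ArrayList<>();

    Layer(long origin) {
        this.origin = origin;
    }

    void add(int index, long duration) {
        durations.add(index, duration);
    }

    /** Start time of clip i: the origin plus the lengths of the clips before it. */
    long startOf(int i) {
        long t = origin;
        for (int j = 0; j < i; j++)
            t += durations.get(j);
        return t;
    }

    /**
     * Removes clip i (e.g. it was dragged elsewhere). Removing the first clip
     * shifts the origin by its length so the remaining clips keep their times.
     */
    long remove(int i) {
        long d = durations.remove(i);
        if (i == 0)
            origin += d;
        return d;
    }

    int size() {
        return durations.size();
    }
}
```

Removing a clip from the middle, by contrast, pulls the later clips earlier, which is what back-to-back layers imply.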

I have a basic single-item selection mechanism too, for deleting or other item-sensitive operations (e.g. where does 'add clip' add?).

So although the code is a complete pig's breakfast, it is quite functional and stable, and with a bit of styling and frame graphics it's almost feature-complete. Probably the last major functionality missing would be some start/end 'snapping', but that's more of a nice-to-have.

Compositor too

Yesterday afternoon, when I was supposed to be relaxing, I worked on a prototype of a compositing and video creation engine. Initially I tried to use the ImageZ approach of compositing in floats, row-by-row, but Android's Java just isn't fast enough to make that practical. So I fell back to just using Android's Canvas interface as the compositor. I guess it makes more sense anyway, as it gives me a lot of functionality for free. I could get more performance by bypassing it, but that would be a great deal of work. Obviously, I didn't attempt audio.

Initially I just wanted to see how the performance was, but I ended up adding video and text "sources", which can be mixed and matched together. Performance is ... well, it's ok; it's just a mobile platform with a purely CPU pipeline. A 10 second 800x600@25fps MP4 medium-quality clip takes about 20 seconds to decode+render+encode on the Ainol Elf 2. For the compositor, about half the time is spent decoding the source material and half compositing, which isn't exactly flash, but it's all ByteBuffers and Bitmaps and copying shit around multiple times, so it's hardly surprising.
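For a rough per-frame figure from those numbers: a 10 second clip at 25fps is 250 frames, so

$$
\frac{20\ \text{s}}{10\ \text{s} \times 25\ \text{fps}} = \frac{20\,000\ \text{ms}}{250\ \text{frames}} = 80\ \text{ms per frame}
$$

i.e. about 12.5 frames per second through the whole decode+render+encode chain, or half real-time.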

As usual the ideas in my head exploded yesterday and I thought about all sorts of things: ways to create an interesting and usable interface, keyframe animation of clips and properties, multiple 'use modes' such as a simple video cutter, potential local client/server operation, using your PC to do the rendering, or using the tablet as an interactive control surface for a PC-based application. But whether I'll have the time and motivation to see any of it through is another matter.

If I remember to, I'm going to try to track how much time I've spent on this project; so far it's about 3 days. I initially thought I could do a quick-and-dirty "weekend hack", see how far I got, and just leave it at that, and then thought better of it. It's a 5-day long weekend for me, so that's too much like hard work!

jj .. jay?

Time to drop another "prototype-bomb" on the unsuspecting world ..

The ultimate goal is some sort of simple-to-use video editor, but to begin with there are a lot of user interface and algorithmic problems to work out. I tried to find some simple editors in the Android store but they pretty much sucked: based on server processing, with questionable advertising, difficult and confusing to use, and usually quite slow.

However, a few hours hacking so far and I have a fully functioning "clip" editor, which is one of the basic components required.

I've actually been meaning to play with something like this for quite a long time, and finally the planets aligned such that it was an opportune time to look into it (nothing to do with any internet goings-on). I will probably also dabble in a JavaFX version if I get anywhere with it, although the interface requirements there are very different.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!