About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Speaker Boxes and Music Players

I finally got around to mostly finishing the speakers I was making

out of recovered drivers. Here's a bit of a photo-diary of the final stages.

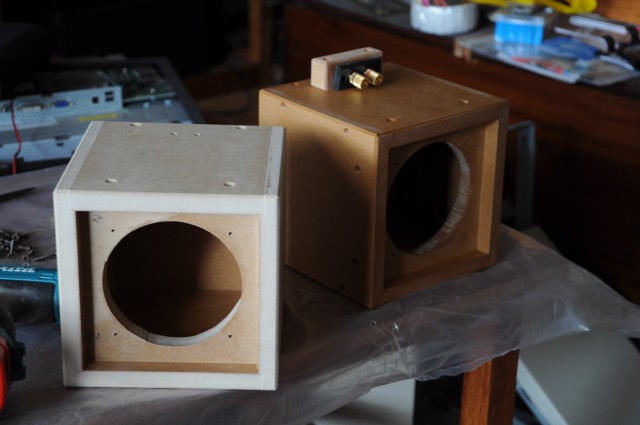

Here we have the completed woodwork. It has been glued and sanded square. I'm mostly happy with how it turned out but I could have done a bit more sanding; I was over it by then. In each case I pretty much practiced on one and got better on the other one, hence a few differences here and there. The one on the left is unpainted and the one on the right has a coat of sanding sealer and also the binding post mounting mechanism.

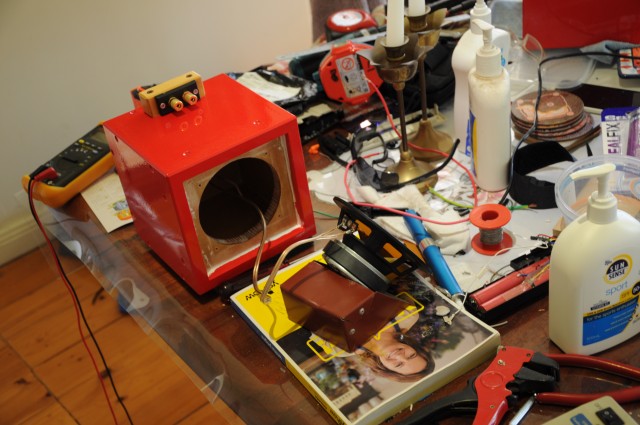

Three undercoats, lots of sanding and two overcoats and it's ready to be put together.

Given the paint was so expensive ($90) I did a bit of a shit job in the end; I just got sick of dealing with everything. First I had a cheap throwaway brush which I should've just thrown away before I even started. Then the paint was too thick for the weather and needed more thinning, then I tried using a small roller - but again i got some cheap junk which left behind piles of dust and shit, and because I hadn't used a roller before I didn't apply it as well as I might have.

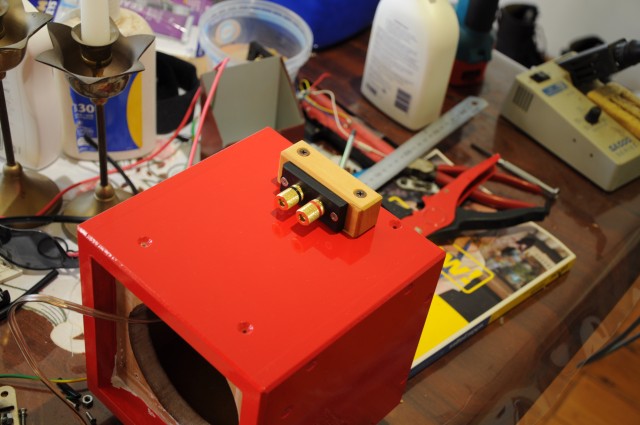

The next shot just shows the mounting point for the binding posts.

Even this I kinda fucked up. I tried using the drill press to drill the screw holes to mount it but to avoid scratching the paint I had it on a small block of foam. It moved when I wasn't looking and I drilled it in the wrong spot. It's hidden but I know it's there.

Soldering the wires.

Completed binding posts.

Connecting the driver.

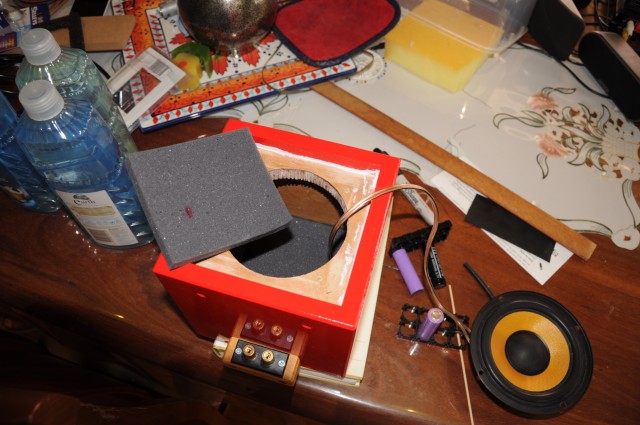

Inserting the acoustic foam. I have 25mm at the read and 12.5mm down the sides - about as much as could fit in the small box.

A completed speaker (so far). The screws (all 24 of them) for the box are really only aesthetic as the box is very well glued. They did help when setting the glue though.

The pair. I've only redone the rubber surround on the right one so far, the one on the left is still a bit scratchy with high volume as a result. The mounting rubber washers/etc is still work in progress.

I'm still yet to get some fabric for the grilles. I did a quick peruse through lincraft but couldn't find any suitable, next time i'll ask and there's a couple of other places to try.

And finally a shot in testing. The plan is to put the electronics

in another box with a battery pack. I've got the bits for the

battery but am still working on the mechanics.

How do they sound? Well ok. They're not going to break any

technical records; there's no engineering behind the boxes other

than being a sealed box with a bit of foam in it. Each only has a

woofer so the top-end is very retarded. The bottom end isn't too

bad but it does have an echoey effect with some mid-tones, then

again so do any other small speakers i've tried (including the

$1000/pair units I just installed in the dining room). Quite ok

for a bit of outside doof though!

It's been a good little project to get away from the screen so

far, although finishing it off will not be.

Electronics and Software

I was looking around for hardware for the player but since I have

the mele doing nothing I thought I may as well use that. Most of

the other prototyping boards around now are either too high

powered or missing some necessary bits; although the nanopi boards

look quite attractive (on a side-note I can't believe how

expensive raspberry pi shit is in australia). As this is

nominally a 'portable' system based on recycled speakers (with no

tweeters!) the audio quality isn't terribly important, the

on-board dac/codec sounds good enough to me. I'm also considering

an old phone or an old 7" tablet I have, although it's far less

attractive to me if they can only run android (fuck that shit;

yeah fuck you too google).

After spending most of a day on it I managed to get debian running

on the mele. I tried

the sunxi wiki

and building my own kernel and bootloader but without any debug

console I couldn't tell if it was working or not. I got

this server image to

work in the end, using the jessie image.

While trying to build using the sunxi instructions I discovered

that the ubuntu

maintainers have

a really broken idea about what a cross-compiler should do.

And they're really quite rude about it, hiding their rudeness

behind the `code of conduct'.

To avoid dragging in gobs of junk and other poettering snot I

built a basic SDL (1.2) and a cut-down ffmpeg (1.0) from source

and have a basic console music player working. With a little more

work I can control it with the mele airmouse although without a

screen it's going to be difficult to do too many interesting

things. Something to poke about with.

Dropper Fire Grill, and Firefox suxors too

So this came about because I was fighting with firefox and needed

to get away from a screen for a while. I wrote a couple of

plugins (oh sorry, 'addons', no hang on, web-extensions, WHATEVER

THE FUCK YOU WANT TO CALL IT MOZILLA) and the experience was sour

(can't you tell?).

The aforementioned BBQ/firepit thing I haven't gotten around to

using yet, one reason is because I wanted to make a fire grate

first because it's a bit too deep.

So a few droppers, a bit of hacksawing and some angle grinding

later here it is. It just slots together with no fastening or

welding.

It's even adjustable! No brick, brick flat, or brick on side!

Actually it might just work better as an esky, but i'll see.

More on the firefox plugins later, they're just for overriding

site fonts and site colours. There do exist such plugins but they

no longer work for some reason. OF course there's not much use

distributing the source becuase you NEED A FUCKING MOZILLA ACCOUNT

JUST TO INSTALL THEM.

Putting things back together

My brother was here a few weeks ago and I took the opportunity of having transport (I don't drive - just never got a license) to get a few things that are a little difficult to transport on the bicycle.

One was to take my old VAF DC-7 Rev 1.0 speakers and get them

reconditioned. It wasn't exactly cheap but they replaced the old

drivers (required a bigger hole) and i'm not sure what else. But

I asked to keep the old drivers just to muck around with, perhaps

build a 'portable speaker' type thing out of them. They are a bit

scratchy from uh, over-use, but I noticed the main problem is the

surrounds had perished. I looked up some info about replacing

them and ended up ordering some cheapies from China - i'm not sure

I can recover them regardless and it's not worth the cost if I

fail.

Part of the probess is removing the old rubber, and about all I

can say about it is you have to be patient. I used a very sharp

chisel and it took a couple of hours just to remove one, although

the final 1/4 went much faster than the first once I got the

technique sorted out.

Tools

Another thing I got was a welder, small drill press and some other

tools. And that lead to a bit of a spending spree that continued

after he left, buying a bunch of other workshop tools. They're

mostly cheap bits and pieces because i'm not sure how much use

i'll get out of them.

It wasn't the reason I got the welder but the first thing I

thought of making was a belt buckle for a very wide kilt belt. I

had asked a small leather work place (shoe repair, belts) shop in

the city whether they could make wide belts but they couldn't and

directed me to a saddlesmith at the other end of town. I dropped

in one day and asked about it - yeah he could make any width belt,

but he didn't have any buckles suitable. So the next weekend I

got the welder out and turned a couple of pieces of wine barrel

ring into a rather large belt buckle.

The welding is pretty shithouse but I haven't welded for years and

the grinding and polishing hides most of it. The buckle has a

loop through which the belt connects and a single pin which

selects the size. The end of the belt comes around out the front

(or can go behind) and a loop holds it in place.

The front finish is a sort of coarse brushed/dented appearance

from using an angle grinder, wire brush and polishing wheel. I'm

still not sure on the finish but I will probably clear coat it.

The belt I got made up is 70mm wide and the loop which connects to

the buckle uses press-studs so I can make more buckles and easily

replace them. 70mm is about the widest that suits the kilts I

have which is just as well because I didn't really know what it

would look like till it was made.

Not particularly cheap either at $99 but at least it's locally

made and very solid leather - it should last forever. My existing

belt was relatively 'cheap' one from utkilts that uses velcro to

adjust the length. But the finish is wearing already (mostly

creases), the velcro is coming off around the back, and the buckle

- while ok - is a bit cheap. The actual bits are made in Pakistan

I believe but the shipping costs from USA are outrageous -

although they seem to have gotten slightly better, but to get the

same belt ($26) and buckle ($17) again would cost $77 when

shipping is added anyway, and they don't handle GST (I have no

idea whether this means you get hit with import hassles above the

$7 GST cost). And that's the absolute cheapest/slowest option, it ranges up to $130!

Actually I have an idea for another buckle mechanism that I might

try out when I get time, if I do that I might get a 60mm belt

made. The other idea would be a lower profile, hide the tail of

the belt (wrap under), and possible be hole-less if I can create a

binding mechanism that wont damage the front surface of the belt.

And today I turned one of the practice pieces of barrel strap into

a bottle opener. I drilled some holes and filed out the opening

shape by hand.

The finish is a little shit because I cut a bit deep using a

sanding disc on an angle grinder and gave up trying to sand it

out, although it is a lot shinier than it appears in the photo.

Partly I was experimenting with finishes and patterns and i'm

happier with the pattern here, or at least the general approach.

I created a round ended punch from an old broken screwdriver and

used a small portable jackhammer (/ hammer drill) to pound in the

dots. Because i'm just cold working it's a bit difficult to do

much in the pattern department.

Gets me away from the computer screen anyway, i'm kinda burnt out

on that. I'm not really doing enough hours lately for the guy who

pays me (for various reasons beyond our control he's got more

money than hours I want to work!), although the customers who pay

him are quite happy with the output!

I'm at the point where I finally need to get some glasses. I had

another eye test last week and while I can still survive without

it it's to the point that i'm not recognising people from afar and

squinting a bit too much reading at times. I need a separate

reading and distance script unfortunately so I got a pair of

sunnies for distance and reading glasses for work. They would've

helped with the fabrication above! It's going to take a while to

get used to them, and/or work out whether I get progressive lenses

or whatnot, for example I can't read games very well on my TV, but

that's farther away than the reading glasses work at, sigh. I

guess i'll find out in a week or so.

Pulling things apart

I pulled an old deskjet printer apart the other day. It wasn't a

particularly expensive machine and it broke years ago; it's just

been sitting in the corner of my room collecting dust for the day

I threw it out or took out the useful bits. I guess what I found

most interesting is despite it being a disposable item just how

well put together it was.

- The main guide bar is a very solid piece of machined stainless steel. I always thought it was just a tube.

- Almost all parts could be removed by hand apart from a handful of pieces held by torx screws.

- All the metal parts could be easily removed from the plastic parts - springs, pins, rails and so on. Similarly

Basically it looks like it was designed to be repairable. Can't

imagine the equivalent today would be.

Flymo

I also have a more recent machine that still runs, a mains powered

electric hover mower. It's one that has the motor on a separate

spindle to the blade which is driven by a belt. For a few years

it's been 'running' rough due to bung bearings, I had looked at it

before but it looked irrepearable. The whole base-plate, clutch

and drive pulley assembly can be bought as a FRU but it must be

ordered from England. The last time I tried I couldn't get the

payment to go through and so i've just been living with a mower on

the edge of self-destruction.

Today it was getting so bad I finally had another look at it. And

lo! I managed to get the bearings out, although it took about 2+

hours with the tools I have at hand and an awful lot of swearing!

Anyway the two bearings should be easy to source and hopefully

it'll be back up and running once I put it back together. It

would be a pity to get a whole new mower just because a couple of

small cheap parts failed, and repairing it would've been

prohibitively expensive. Hate wasting stuff.

Apart from all that I took a few days off and have been doing a

lot of gardening, preparing vegetable gardens and whatnot.

Hopefully a year or so basically fallow will work in my favour, I

need some more exotic chillies and home-grown tomatoes can't be

beat.

Beer for the Win!

So apparently I won one of these things.

Just in time for summer!

I might have a beer to celebrate! I spent the morning pulling out

weeds so I earned it!

Fast incremental Java builds with openjdk 11 and GNU make

This post wont match the article but I think i've solved all the

main problems needed to make it work. The only thing missing is

ancestor scanning - which isn't trivial but should be

straightforward.

Conceptually it's quite simple and it doesn't take much code but

bloody hell it took a lot of mucking about with make and the javac

TaskListener.

I took the approach I outlined yesterday, I did try to get more

out of the AST but couldn't find the info I needed. The module

system is making navigating source-code a pain in Netbeans (it

wont find modules if the source can't). Some of the 'easy' steps

turned out to be a complete headfuck. Anyway some points of interest.

Dependency Tracking

Even though a java file can create any number of classes one

doesn't need to track any of the non top-level .class files that

might be created for dependency purposes. Any time a .java file

is compiled all of it's generated classes are created at the same

time. So if any java file uses for example a nested class it only

need to track the source file.

I didn't realise this at first and it got messy fast.

Modified Class List

I'm using a javac plugin (-Xplugin) to track the compilation via a

TaskListener. This notifies the plugin of various compilation

stages including generating class files. The painful bit here is

that you don't get information on the actual class files

generated, only on the source file and the name of the class being

generated. And you can't get the actual name of the class file

for anonymous inner classes (it's in the implementation but hidden

from public view). In short it's a bit messy getting a simple and

complete list of every class file generated from every java file

compiled.

But for various other reasons this isn't terribly important so I

just track the toplevel class files; but it was a tedious

discovery process on a very poorly documented api.

When the compiler plugin get the COMPILATION finished event it

uses the information it gathered (and more it discovers) to

generate per-class dependency files similar to `gcc -MD'.

Dependency Generation & Consistency

To find all the (immediate) dependencies the .class file is

processed. The ClassInfo records provide a starting point but all

field and method signatures (descriptors) must be parsed as well.

When an inner class is encountered it's container class is used to

determine if the inner class is still extant in the source code -

if not it can be deleted.

And still this isn't quite enough - if you have a package private

additional class embedded inside the .java file there is no

cross-reference between the two apart from the SourceFile

attribute and implied package path. So to determine if this is

stale one needs to check the Modified Class List instead.

The upshot is that you can't just parse the modified class list

and any inner classes that reference them. I scan a whole package

at a time and then look for anomilies.

One-shot compile

Because invoking the compiler is slow - but also because it will

discover and compile classes as necessary - it's highly beneficial

to run it once only. Unfortunately this is not how make works and

so it needs to be manipulated somewhat. After a few false starts

I found a simple way that works:

The per-module rules are required due to the source-tree naming

conventions used by netbeans (src/[module]/classes/[name] to

build/modules/[module]/[name]), a common-stem based approach is

also possible in which case it wouldn't be required. In practice

it isn't particularly onerous as I use metamake facilities to

generate these per-module rules automatically.

I spent an inordinate amount of time trying to get this to work

but kept hitting puzzling (but documented) behaviour with pattern

and implicit rule chaining and various other issues. One big one was

using concrete rules (made files) for tracking stages, suddenly

everything breaks.

I resorted to just individual java invocations as one would do for

gcc, and trying the compiler server idea to mitigate the costs.

It worked well enough particularly since it parallelises properly.

But after I went to bed I realised i'd fucked up and then spent a

few hours working out a better solution.

Example

This is the prototype i've been using to develop the idea.

modules:=notzed.proto notzed.build

SRCS:=$(shell find src -name '*.java')

CLASSES:=$(foreach mod,$(modules),\

$(patsubst src/$(mod)/classes/%.java,classes/$(mod)/%.class,$(filter src/$(mod)/%,$(SRCS))))

all: $(CLASSES)

lists='$(foreach mod,$(modules),$(wildcard status/$(mod).list))' ; \

built='$(patsubst %.list,%.built,$(foreach mod,$(modules),$(wildcard status/$(mod).list)))' ; \

files='$(addprefix @,$(foreach mod,$(modules),$(wildcard status/$(mod).list)))' ; \

if [ -n "$$built" ] ; then \

javac -Xplugin:javadepend --processor-module-path classes --module-source-path 'src/*/classes' -d classes $$files ; \

touch $$built; \

rm $$lists ; \

else \

echo "All classes up to date" ; \

fi

define makemod=

classes/$1/%.class: src/$1/classes/%.java

$$(file >> status/$1.list,$$<)

$1: $2

if [ -f status/$1.list ] ; then \

javac --module-source-path 'src/*/classes' -d classes @status/$1.list ; \

rm status/$1.list ; \

touch status/$1.built ; \

fi

endef

$(foreach mod,$(modules),$(eval $(call makemod,$(mod),\

$(patsubst src/$(mod)/classes/%.java,classes/$(mod)/%.class,$(filter src/$(mod)/%,$(SRCS))))))

-include $(patsubst classes/%,status/%.d,$(CLASSES))

In addition there is a compiler plugin which is about 500 lines of

standalone java code. This creates the dependency files (included

at the end above) and purges any stale .class files.

I still need to work out a few details with ancestor dependencies

and a few other things.

Java 11 Modules, Building

I'm basically done modularising the code at work - at least the

active code. I rather indulgently took the opportunity to do a

massive cleanup - pretty well all the FIXMEs, should be FIXMEs,

TODOs, dead-code and de-duplication that's collected over the last

few years. Even quite a few 'would be a bit nicers'. It's not

perfect but it was nice to be able to afford the time to do it.

I'm still trying to decide if I break the projects up into related

super-projects or just put everything in the single one as

modules. I'm aiming toward the latter because basically i'm sick

of typing "cd ../blah" so often, and Netbeans doesn't recompile

the dependencies properly.

I'm going to reset the repository and try using git. I don't like

it but I don't much like mercurial either.

Building

At the moment I have a build system which uses make and compiles

at the module level - i.e. any source changes and the whole module

is recompiled, and one can add explicit module-module dependencies

to control the build order and ensure a consistent build.

One reason I do this is because there is no 1:1 correspondance

between build sources and build classes. If you add or remove

nested or anonymous (or co-located) classes from a source file

that adds or removes .class files which are generated. So to

ensure there are no stale classes I just reset it on every build.

This isn't too bad and absolutely guarantees a consistent build

(assuming one configures the inter-module dependencies properly)

but the compiler is still invoked multiple times which has

overheads.

Building Faster

Really the speed isn't a problem for these projects but out of

interest i'm having a look at a couple of other things.

One is relatively simple - basically use JSR-199 to create a

compiler server something like the jdk uses to build itself.

The more complicated task is incremental builds using GNU Make. I

think I should be able to hook into JavacTask and with a small bit

of extra code create something akin to the "gcc -MD" option for

auto-generating dependencies. It has the added complication of

having to detect and remove stale .class files, and doing it all

in a way that make understands. I've already done a few little

experiments today while I was procrastinating over some weeding.

Using JavacTask it is possible to find out all the .class files

that are generated for a given source file. This is one big part

of the puzzle and covers the first-level dependencies

(i.e. %.java: %.class plus all the co-resident classes). One can

also get the AST and other information but that isn't necessary

here.

To find the other dependencies I wrote a simple class file decoder

which finds all the classes referenced by the binary. Some

relatively simple pattern matching and name resolution should be

able to turn this into a dependency list.

Actually it may not be necessary to use JavacTask for this because

the .class files contain enough information. There is extra

overhead because they must be parsed, but they are simple to

parse.

Concurrent Hash Tables

So I got curious about whether the GC in NativeZ would cause any

bottlenecks in highly contested situations - one I already faced

with an earlier iteration of the library. The specific case I had

was running out of memory when many threads were creating

short-lived native objects; the single thread consuming values

from the ReferenceQueue wasn't able to keep up.

The out of memory situation was fairly easily addressed by just

running a ReferenceQueue poll whenever a new object is created,

but I was still curious about the locking overheads.

A field of tables

I made a few variations of hash tables which supported the

interface I desired which was to store a value which also provides

the key itself, as a primitive long. So its more of a set but

with the ability to remove items by the key directly. For now

i'll just summarise them.

- SynchronisedHash

This subclasses HashMap and adds the desired interfaces, each of

which is synchronised to the object.

Rather than use the pointer

directly as the key it is shifted by 4 (all malloc are 16-byte

aligned here) to avoid Long.hasCode() pitfalls on pointers.

- ConcurrentHash

This subclasses ConcurrentHashMap and adds the desired

interfaces, but no locking is required.

The same shift trick is

used for the key as above.

- PointerTable

This was the use-weakreference-as-node implementation

mentioned in the last post - a simple single-threaded chained hash

table implementation. I just synchronised all the entry

points.

A load factor of 2.0 is used for further memory

savings.

- CopyHash

This is a novel(?) approach in which all modifications to

the bucket chains is implemented by copying the whole linked list

of nodes and replacing the table entry with the new list

using compare and set (CAS). A couple of special cases can avoid

any copies.

In the event of a failure it means someone else

updated it so it simply retries. Some logic akin to a simplified

version of what ConcurrentHashMap does is used to resize the table

when needed, potentially concurrently.

It uses a load factor of

1.0.

I also tested a fast-path insert which doesn't try to find

an existing value (this occurs frequently with the design) but

that so overloaded the remove() mechanism it wasn't actually a

good fit!

-

- ArrayHash

This is similar to CopyHash but instead of a linked list of

container nodes it either stores the value directly in the table,

or it stores an array of objects. Again any modifications require

rewriting the table entry. Again resize is handled specially and

all threads can contribute.

This also has a small modification

in that the retry loop includes a ficonacci-increasing

Thread.sleep(0,nanos) delay if it fails which may slow down

wall-clock time but can improve the cpu load.

I had some ideas for other approaches but i've had enough

for now.

Tests

I created a native class which allocates and frees memory of a

given size, and a simple 'work' method which just adds up the

octets within. I ran two tests, one which just allocated 16 bytes

whic immediately went out of scope, and another which allocated

1024 bytes, added them up (a small cpu-bound task), then let it

fall out of scope.

Note that lookups are never tested in this scenario apart from the

implicit lookup during get/put. I did implement get() - which are

always non-blocking in the case of the two latter

implementations (even during a resize), but I didn't test or use

them here.

I then created 8 000 000 objects in a tight loop in one

or more threads. Once the threads finish I invoke System.gc(),

then wait for all objects to be freed. The machine I'm running it

on has 4 cores/8 threads so I tried it with 1 and with 8 threads.

Phew, ok that's a bit of a mouthful that probably doesn't make

sense, bit tired. The numbers below are pretty rough and are from

a single run using openjdk 11+28, with -Xmx1G.

8 threads, alloc 8 threads, alloc+sum 1 thread, alloc 1 thread, alloc+sum

x 1 000 000 x 1 000 000 x 8 000 000 x 8 000 000

Elapsed User Elapsed User Elapsed User Elapsed User

SynchronisedHash 8.3 38 11 54 8.4 27 16 40

ConcurrentHash 6.9 35 11 52 8.2 27 17 38

PointerTable 7.2 26 13 44 7.9 17 19 29

CopyHash 6.6 31 8.2 42 8.1 24 18 33

ArrayHash 6.0 28 8.2 39 8.5 23 16 24

ArrayHash* 6.9 23 12 30 8.1 21 17 23

* indicates using a delay in the retry loop for the remove() call

To be honest I think the only real conclusion is that this machine

doesn't have enough threads for this task to cause a bottleneck in

the hash table! Even in the most contested case (alloc only)

simple synchronised methods are just about as good as anything

else. And whilst this is represenative of a real life scenario, it's

just a bloody pain to properly test high concurrency code and i'm just not that

into it for a hobby (the journey not the destination and so forth).

I haven't shown the numbers above but in the case of the Copy and

Array implementations I count how many retries are required for

both put and get calls. In the 8-thread cases where there is no

explicit delay it can be in the order of a million times! And yet

they still run faster and use less memory. Shrug.

All of the non java.util based implementations also benefit in

both time and space from using the primitive key directly without

boxing and storing the key as part of the containee object and not

having to support the full Collections and Streams interfaces.

PointerTable also benefits from fewer gc passes due to not

needing any container nodes and having a higher load factor.

BTW one might note that this is pretty bloody slow compared to C

or pure Java - there are definitely undeniably high overheads of

the JNI back and forths.

The other thing of note is that well hashtables are hashtables -

they're all pretty good at what they're doing here because of the

algorithm that they share. There's not really all that much practical

difference between any of them.

But why?

I'm not sure what posessed me to look into it this deeply but I've

done it now. Maybe i'll post the code a bit later, it may have

enough bugs to invalidate all the results but it was still a

learning experience.

For the lockless algorithms I made use of VarHandles (Java 9's

'safe' interface to Unsafe) to do the CAS and other operations,

and some basic monitor locking for coordinating a resize pass.

The idea of 'read, do work, fail and retry' is something I

originally learnt about using a hardware feature of the CELL SPU

(and Power based PPU). On that you can reserve a memory location

(128 bytes on the SPU, 4 or 8 on the PPU), and if the ticket is

lost by the time you write back to it the write fails and you know

it failed and can retry. So rather than [spin-lock-doing-nothing

WORK unlock], you spin on [reserve WORK write-or-retry]. I guess

it's a speculative reservation. It's not quite as clean when

using READ work CAS (particularly without the 128 byte blocks on

the SPU!) but the net result is (mostly) the same. One significant

difference is that you actually have to write a different value

back and in this instance being able to merely indicate change

could have been useful.

ConcurrentHashMap does something similar but only for the first

insert into an empty hash chain, after that it locks the entry and

only ever appends to the chain.

Some of the trickiest code was getting the resize mechanism to

synchronise across threads, but I really only threw it together

without much thought using object mointors and AtomicInteger.

Occasionally i'm gettting hangs but they don't appear to make

sense: some number of threads will be blocked waiting to enter a

synchronised method, while a few others are already on a wait()

inside it, all the while another thread is calling it at will -

without blocking. If I get keen i'll revisit this part of the

code.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!