Further beyond the ROC - training a better than perfect classifier.

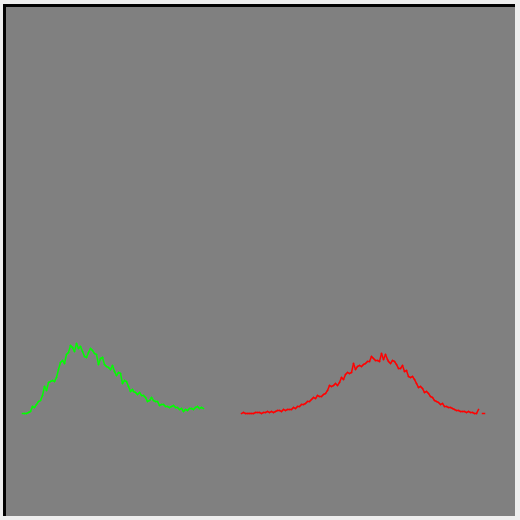

I added a realtime plot of the population distribution to my training code, and noticed something a little odd. Although the two peaks worked themselves apart they always stayed joined at the midpoint. This is not really strange I guess - the optimiser isn't going to waste any effort trying to do something I didn't tell it to.

So with a bit of experimentation I tweaked the fitness sorting to produce a more desirable result. This allows training to keep improving the classifier even though the training data tells it that it is 'perfect'. It was a little tricky to get right because incorrect sorting could lead to the evolution getting stuck in a local minimum, but I have something now that seems to work quite well. I also did a little tuning of the GA parameters to speed things up a bit and added a bit more randomisation to the mutation step.
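As a rough illustration of the idea (not the actual collator, which isn't shown here), one way to keep ranking candidates that are already 'perfect' is to sort on the area under the ROC curve first and break ties on how far apart the two populations are. The names `auc`, `fitness_key` and the sample scores are made up for the sketch, and it assumes a higher score means 'more positive'.

```python
def auc(pos, neg):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def fitness_key(pos, neg):
    """Sort key: AUC first, then the gap between worst positive and best negative."""
    margin = min(pos) - max(neg)
    return (auc(pos, neg), margin)


# Hypothetical scores (positives, negatives) from three candidate classifiers.
candidates = {
    "a": ([0.9, 0.6], [0.5, 0.1]),  # perfect separation, small gap
    "b": ([0.9, 0.8], [0.2, 0.1]),  # perfect separation, large gap
    "c": ([0.9, 0.4], [0.5, 0.1]),  # one pair misordered
}

# Best candidate first: tuples compare AUC, then margin.
ranked = sorted(candidates, key=lambda k: fitness_key(*candidates[k]), reverse=True)
print(ranked)
```

Because the margin only matters once the AUC is equal, it can never trade away a correct ranking for a wider gap, which is one plausible way to avoid the local-minimum problem mentioned above.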

The black line is the ROC curve (i.e. it's perfect), green is the positive training set, and red is the negative. For the population distribution the horizontal range is the full possible range of the classifier score, and vertically it's just scaled to be useful. The score is inverted as part of the ROC curve generation, so a high score is on the left.
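For anyone wanting to reproduce a plot like this, here is a minimal sketch of the two pieces of data behind it: the ROC points and a histogram of each population over the full score range. The function names and the toy scores are invented for the example, and it assumes a higher score means 'more positive' (the plot itself draws the axis flipped).

```python
def roc_points(pos, neg):
    """(false positive rate, true positive rate) at every distinct threshold."""
    thresholds = sorted(set(pos) | set(neg), reverse=True)
    points = [(0.0, 0.0)]
    for t in thresholds:
        tpr = sum(p >= t for p in pos) / len(pos)
        fpr = sum(n >= t for n in neg) / len(neg)
        points.append((fpr, tpr))
    return points


def histogram(scores, lo, hi, bins):
    """Bucket counts over the fixed range [lo, hi]; hi lands in the last bucket."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for s in scores:
        i = min(int((s - lo) / width), bins - 1)
        counts[i] += 1
    return counts


# Toy, fully separated populations.
pos = [0.9, 0.8]
neg = [0.2, 0.1]
curve = roc_points(pos, neg)
pos_hist = histogram(pos, 0.0, 1.0, 4)
neg_hist = histogram(neg, 0.0, 1.0, 4)
```

A 'perfect' curve is one that passes through the point (0, 1), which is all the ROC can say; the histograms are what show how much room is left between the two peaks.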

The new fitness collator helps push the peaks of the bell curves outwards too, moving the score distribution that bit closer to the ideal for a classifier.

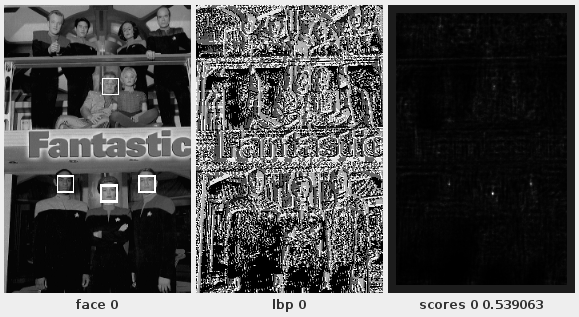

The above is for a face detector - although I had great success with the eyes I wanted to confirm with another type of data. Eyes are actually a harder problem because of scale and distinguishing signal. Late yesterday I experimented with creating a face classifier using the CBCL data-set, but I think either the quality of the images is too low or I broke something, as it was abysmal and had me thinking I had hit a dead-end.

One reason I didn't try using the Color FERET data directly is that I didn't want to try to create a negative training set to match it. But I figured that since the eye detector seemed to work ok with limited negative samples, a face detector should too, so I had a go today. It works amazingly well considering the negative training set contains nothing outside of the Color FERET portraits.

Yes, it is Fantastic.

I suspect the reason the Color FERET data worked better is that, due to the image sizes, they are being downsampled - with the same algorithm as the probe calculation. So both the training data and the test data are being run through the same image scaling algorithms. In effect the scaling is part of the LBP transform on which the processing runs.

This is using a 16x16 classifier with a custom 5-bit LBP code (mostly just LBP 8,1).
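For reference, a minimal sketch of the plain 8-neighbour, radius-1 LBP code that the custom one is based on. This is the common 3x3 square approximation (no interpolation of the diagonal samples), and the bit order in `OFFSETS` is an arbitrary choice for the example. How the 8 bits are reduced to the custom 5-bit code isn't described here, so the sketch doesn't attempt it.

```python
# Neighbour offsets (dy, dx), clockwise from the top-left; bit 0 is top-left.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_8_1(img):
    """8-bit LBP code for each interior pixel of a 2D list of intensities."""
    h, w = len(img), len(img[0])
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                # Set the bit when the neighbour is at least as bright as the centre.
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            out[y - 1][x - 1] = code
    return out


flat = lbp_8_1([[5, 5, 5], [5, 5, 5], [5, 5, 5]])
peak = lbp_8_1([[0, 0, 0], [0, 9, 0], [0, 0, 0]])
corner = lbp_8_1([[9, 0, 0], [0, 5, 0], [0, 0, 0]])
```

The output is two pixels smaller in each dimension than the input because border pixels have no complete neighbourhood.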

The classifier response is strong and location specific, as can be seen here for a single scale. This detector is very size specific but I've had almost as good results from one that synthesized some scaling variation.
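A single-scale response map like this can be produced by sliding a fixed-size window over the image and recording the classifier score at each position. The sketch below assumes a hypothetical `score` callable standing in for the trained classifier; the window sum used in the example is only a placeholder so the code runs.

```python
def response_map(img, window, score):
    """Score every window-by-window patch of a 2D list, one result per position."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h - window + 1):
        row = []
        for x in range(w - window + 1):
            patch = [r[x:x + window] for r in img[y:y + window]]
            row.append(score(patch))
        out.append(row)
    return out


def patch_sum(patch):
    """Placeholder score: a real detector would evaluate the classifier here."""
    return sum(sum(r) for r in patch)


# The real detector uses a 16x16 window; 2x2 keeps the example small.
demo = response_map([[1, 2, 3], [4, 5, 6], [7, 8, 9]], 2, patch_sum)
```

Handling other face sizes would mean repeating this over rescaled copies of the image, which is where the size specificity mentioned above comes in.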

I couldn't get the young klingon chick to detect no matter what I tried - it may just be her pose, but her prosthetics do make her fall outside of the positive training set so perhaps it's just doing its job.