It was the best of times, it was the worst of times. More fft, more bang for your buck. Same old less bang for your buck.

After posting the result I kept experimenting with the code I `live blogged' about yesterday. I did some inlining but was primarily experimenting with multi-threading. I also looked at a decimation in time algorithm (which I kept fucking up until I got it working today), and a couple of other things too, as will become apparent.

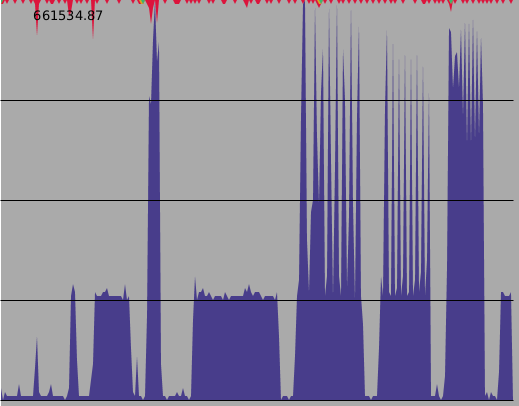

First a picture of a thousand words.

Now the words.

This picture shows the CPU load over time (i'm sure you all know what that is) as I ran a specific set of tests, each against 8x runs of a 2^24 complex forward transform. The lines represent each core available on this computer. I put some 2s sleep calls between steps to make them distinguishable. Additionally each horizontal pixel represents 250ms, which is about the minimum sampling time that gives usable results.

Refer to earlier posts as to what the names mean.

- The first spike is starting the Java application. Oh boy, look at all that time and resources wasted! Java is the total suxors!!

- The next spike (about 10% utilisation) is initialising my "rot0" implementation. It uses 1 set of Wn exponents per log level of the calculation so has very little setup.

- The 1-load skinny spike is initialising jtransforms. I wonder how it's creating its tables because it uses a lot of memory for them. It's clearly not using Math.cos() for it.

- The 1-load long spike is initialising "table2". Lots of calls to Math.sin() and Math.cos().

- The tall skinny spike is initialising the algorithm as discussed yesterday - I made some trivial alterations so it runs multi-threaded.

- There is now a 4 second pause because the next (newer but based on yesterday's code) implementation just uses the tables generated by the previous step and so doesn't register.
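As a rough illustration of why a table setup like "table2" spends so long in `Math.sin()` and `Math.cos()`, here is a minimal sketch of building a Wn table. It is not the code from these posts: the class name `TwiddleTable`, the interleaved float layout and the half-length table are all assumptions made for the example.

```java
// Hypothetical sketch: a table of W_n^k = exp(-2*pi*i*k/n) for k in [0, n/2).
// The layout (interleaved re, im floats) is an assumption, not the blog's actual format.
class TwiddleTable {
    /** Returns n/2 complex exponents as interleaved (re, im) pairs. */
    static float[] build(int n) {
        float[] w = new float[n]; // n/2 complex values, 2 floats each
        for (int k = 0; k < n / 2; k++) {
            double a = -2.0 * Math.PI * k / n;
            // One cos and one sin call per entry: this is where the setup time goes.
            w[2 * k] = (float) Math.cos(a);
            w[2 * k + 1] = (float) Math.sin(a);
        }
        return w;
    }

    public static void main(String[] args) {
        float[] w = build(1 << 16);
        System.out.println("entries: " + w.length / 2);
    }
}
```

A single table like this is small. An implementation that keeps separately expanded tables for each pass trades a lot more memory and setup time for simpler addressing in the inner loop.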

That's the setup out of the way, the first 3rd or so of the plot. It's important but not as important as the next bit.

- The long 1-load box is executing rot0 8 times over the data. As expected it takes the longest but fully occupies the CPU it is on while it's running.

- The next haircomb result is from jtransforms. Visually it's only using about 50% of the available cpu cycles above 1 core. The 8 separate transforms executed as part of this benchmark are clearly visible.

- Then comes my (radix-4 only) implementation from yesterday. Despite its somewhat trivial multi-threading code it's basically the same; but runs the fastest-so-far.

- Then comes the modified code. A nice solid block and a somewhat shorter execution time. In this case at least 4x threads are used for all passes including the first. It's still wasting about a core, at least according to my monitor.

- The last spike is just the "rot0" algorithm starting again.

Well that's it I suppose. It utilises more of the available cpu resource and executes in a shorter time. But how was that achieved?

As Deane would say, ``Well Rob, i'm glad you asked''.

It only required a couple of quite simple steps. Firstly I copied the "radix4" routine into the inner loop of "radix4_pass". The jvm compiler won't do this itself without some options and it makes quite a difference by itself. Then I copied this to another "radix6_pass" which takes additional arguments that define a sub-set of a full transform to calculate. I then just invoke this in parts from 4x separate threads, and keep doing that sort of thing until I hit the "logSplit" point and subsequently proceed as before. It was quick-and-dirty and could be cleaned up, but that probably won't add much performance.

It was a bit of mucking about with the addressing logic but once done it's actually a fairly minor change: yet it results in the best performance by far.
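The idea of a pass that takes extra arguments for a sub-set of the transform, invoked in parts from several threads, can be sketched roughly as below. To keep it short this uses a plain radix-2 decimation-in-frequency butterfly with the exponents calculated on the fly, not the radix-4 code described here, and it threads every pass instead of stopping at a "logSplit" point. The names `ThreadedPass`, `pass` and `fft` are made up for the example. Output is left in bit-reversed order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: radix-2 DIF passes split across threads by butterfly index.
class ThreadedPass {
    /**
     * One pass over butterflies [from, to) of the n/2 butterflies in this pass.
     * d holds interleaved (re, im) doubles; m is the block size in complex elements.
     */
    static void pass(double[] d, int m, int from, int to) {
        int half = m / 2;
        for (int t = from; t < to; t++) {
            int j = t % half;            // position inside the block
            int i0 = (t / half) * m + j; // upper element
            int i1 = i0 + half;          // lower element
            double ar = d[2 * i0], ai = d[2 * i0 + 1];
            double br = d[2 * i1], bi = d[2 * i1 + 1];
            double ang = -2.0 * Math.PI * j / m;
            double wr = Math.cos(ang), wi = Math.sin(ang);
            double dr = ar - br, di = ai - bi;
            d[2 * i0] = ar + br;
            d[2 * i0 + 1] = ai + bi;
            d[2 * i1] = dr * wr - di * wi; // (a - b) * W_m^j
            d[2 * i1 + 1] = dr * wi + di * wr;
        }
    }

    /** Forward transform of a power-of-two length; result is in bit-reversed order. */
    static void fft(double[] d, int threads) {
        int n = d.length / 2;
        int total = n / 2; // butterflies per pass
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            for (int m = n; m >= 2; m >>= 1) {
                final int mm = m;
                List<Callable<Void>> jobs = new ArrayList<>();
                for (int p = 0; p < threads; p++) {
                    final int from = (int) ((long) total * p / threads);
                    final int to = (int) ((long) total * (p + 1) / threads);
                    jobs.add(() -> { pass(d, mm, from, to); return null; });
                }
                // invokeAll waits for every part, so it doubles as the barrier between passes.
                for (Future<Void> f : pool.invokeAll(jobs)) f.get();
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        double[] d = new double[2 * (1 << 10)];
        d[2] = 1.0; // unit impulse at index 1
        fft(d, 4);
        System.out.println("bin 0: " + d[0] + " " + d[1]);
    }
}
```

Every butterfly in a pass touches its own pair of elements, so any split of the butterfly index range is safe without locking. The only synchronisation needed is the wait between passes.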

At this point i've explored all the issues and am working on a complete implementation which ties it all together. I think I will write two implementations: one using fully expanded tables for "ultimate performance" and another which calculates the Wn exponents on the fly for "ultimate size". Today I got a DIT algorithm working so I will fill out the API with forward/inverse, pairs-of-real, real-input, perhaps in-order results, and a couple of other useful things to aid convolution performance. Oh and 2D of all that.

But now for a little rant.

Why is software still so fucking shithouse?

So as part of this effort, I ended up having to write my own cpu load monitor. The only one I had available on slackware only has a tiny graph and is mostly just a GUI version of top. The dark slate blue is user time, the grey area is idle, crimson is cpu load, irq is medium sea green and the io wait is golden rod. The kernel doesn't report particularly accurate values in /proc/stat but it sufficed.
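For the curious, the core of a monitor like that is only a few lines. This is a minimal sketch and not the actual monitor: it assumes the usual Linux /proc/stat layout of `cpuN user nice system idle iowait irq softirq steal ...`, and the names `CpuLoad`, `parse` and `share` are invented for the example. It samples twice, 250ms apart, and reports what share of the elapsed ticks went to each field.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of a per-core load sampler based on /proc/stat.
class CpuLoad {
    /** Parses one "cpuN user nice system idle iowait irq softirq ..." line into tick counters. */
    static long[] parse(String line) {
        String[] f = line.trim().split("\\s+");
        long[] v = new long[f.length - 1];
        for (int i = 1; i < f.length; i++) v[i - 1] = Long.parseLong(f[i]);
        return v;
    }

    /**
     * Share of elapsed ticks per field between two samples of the same line.
     * Only the first 8 fields are used; the guest fields are already counted in user time.
     */
    static double[] share(long[] a, long[] b) {
        int n = Math.min(8, Math.min(a.length, b.length));
        long total = 0;
        for (int i = 0; i < n; i++) total += b[i] - a[i];
        double[] s = new double[n];
        if (total == 0) return s; // no ticks elapsed, report zeros
        for (int i = 0; i < n; i++) s[i] = (double) (b[i] - a[i]) / total;
        return s;
    }

    public static void main(String[] args) throws Exception {
        Path stat = Paths.get("/proc/stat");
        if (!Files.exists(stat)) { System.out.println("no /proc/stat here"); return; }
        List<String> a = Files.readAllLines(stat);
        Thread.sleep(250); // sampling interval
        List<String> b = Files.readAllLines(stat);
        for (int i = 0; i < a.size() && i < b.size(); i++) {
            if (a.get(i).matches("cpu\\d+ .*")) { // per-core lines only
                String name = a.get(i).substring(0, a.get(i).indexOf(' '));
                System.out.println(name + " " + Arrays.toString(share(parse(a.get(i)), parse(b.get(i)))));
            }
        }
    }
}
```

Index 0 of each result is user time, 3 is idle, 4 is io wait and 5 is irq, which is all a stacked plot per core needs.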

But I had intended to annotate this image with some nice 'callouts' and shadowed boxes and whatnot so i wouldn't have to write those 1000 words just to explain what it was showing. However ...

gimp has turned into a "professional photographer editing suite" - i.e. a totally useless piece of junk for most of the planet (and pro photographers won't use it anyway?). So the only other application i had handy was openoffice "dot org" (pretentious twats) draw. I even started to track down the dependencies of inkscape to build that but gtkmm? Yeah ok. But openoffice: Jesus H fucking-A-cunt-of-a-christ, what a load of shit that is. I can't imagine how many millions of dollars that piece of rubbish has cost in terms of developer hours and wasted customer time (aka luser `productivity') but i'm astounded by just how terrible it is. It runs very slow. Has some weird-arse modality/GUI update bullshit going on. Is a total pain to use (in terms of number of mouse clicks required to do the most trivial of operations). And above that it's just buggy as hell. I'd call up the voluminous settings "dialogue" to change a background colour and then it would decide to throw away any changes i'd made if i didn't explicitly set it every time.

But it's in good company and about as shit as any `office' software has ever been since the inane marketroid idea to lock users into fucktastic `software ecosystems' was first conceived of. Fuck micro$oft and the fucking hor$e it fucking rode in on.

I was so pissed off I spent the next 4+ hours (till 5am) working on my own structured graphical editor. Ok maybe that's a bit manic and it'll probably go about as far as the last 4 times I did the same thing, after also trying to use a bit of similar software to accomplish a similar seemingly-simple goal ... but ``like seriously''?

To phrase it in the parlance of our time: what in the actual fuck?