About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Simple infinite canvas

Just for a laff I coded up an 'infinite canvas' thing in JavaFX.

In the back of my mind I have the idea of making a JavaFX version of ImageZ ... but I'm also not sure I could be bothered going the whole hog. Although I could dump much of the code (albeit with no more float support), there's still a lot of crap to deal with implementing basic features like undo.

This runs faster with the CPU renderer than with the GPU one. Drawing is about the same, but if you end up with a scene with many tiles created, the CPU pans faster ... which isn't what you'd expect. It can easily gobble up the whole heap if you create some gigantic image by zooming out a long way.
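To show why zooming out can eat the heap, here is a minimal sketch of a lazily allocated tile store. It is not the code from fxperimentz: the class name `TileStore`, the 256 pixel tile size and the packed `long` key are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;

/** Sketch of a sparse tile store for an 'infinite' canvas (all names hypothetical). */
public class TileStore {
    static final int TILE = 256; // assumed tile edge in pixels

    // Tiles are allocated lazily, keyed by packed tile coordinates.
    private final Map<Long, int[]> tiles = new HashMap<>();

    // Pack two signed 32-bit tile indices into one long key.
    static long key(int tx, int ty) {
        return ((long) tx << 32) | (ty & 0xffffffffL);
    }

    /** Set one ARGB pixel at any (possibly negative) canvas coordinate. */
    public void setPixel(int x, int y, int argb) {
        int tx = Math.floorDiv(x, TILE), ty = Math.floorDiv(y, TILE);
        int[] tile = tiles.computeIfAbsent(key(tx, ty), k -> new int[TILE * TILE]);
        tile[Math.floorMod(y, TILE) * TILE + Math.floorMod(x, TILE)] = argb;
    }

    /** Read a pixel; untouched areas read as 0 and cost no memory. */
    public int getPixel(int x, int y) {
        int[] tile = tiles.get(key(Math.floorDiv(x, TILE), Math.floorDiv(y, TILE)));
        return tile == null ? 0 : tile[Math.floorMod(y, TILE) * TILE + Math.floorMod(x, TILE)];
    }

    /** Number of tiles allocated so far. */
    public int tileCount() {
        return tiles.size();
    }

    /** Approximate heap used by pixel data, in bytes. */
    public long pixelBytes() {
        return (long) tiles.size() * TILE * TILE * 4;
    }
}
```

Every tile touched costs 256KB of pixels under these assumptions, so a long stroke drawn while zoomed far out allocates a lot of tiles very quickly.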

I didn't originally intend to, but since it was there I also copied the applet + webstarter to the site as well. The main reason is I've not yet had any such JavaFX applets work - but since this one did, there it is. One might need the very latest JRE for it to work (1.7.0_07). Update: I couldn't get it to work on my laptop, but that might be an old Firefox - it ran fine from the jar.

I also started a new sub-project `fxperimentz' in MediaZ to chuck these experiments.

Update: Oh, i forgot to check the source in, it's there now.

The #1 Java feature I most desire ...

... is native complex numbers.

And I mean proper high-performance primitive complex numbers using standard operators and syntax. Not that horridly slow immutable object version in the apache maths libraries and being forced to use extremely messy function calls and manually having to de-infix every equation.

I had to write my own class of mutable complex numbers to get any sort of performance (and it is much much faster) - but it's even (a slight bit) uglier than the apache stuff to use. And on writing it yourself: thank you thank you cephes.
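For a taste of what that looks like, here is a minimal sketch of a mutable complex type. It is not my actual class, and the name `MComplex` and its methods are made up for illustration; the point is just that the operations update the receiver in place, so a tight loop allocates nothing.

```java
/** Minimal mutable complex number; an illustrative sketch only. */
public final class MComplex {
    public double re, im;

    public MComplex(double re, double im) {
        this.re = re;
        this.im = im;
    }

    /** this += b, in place; returns this so calls can be chained. */
    public MComplex add(MComplex b) {
        re += b.re;
        im += b.im;
        return this;
    }

    /** this *= b, in place; safe even when b is this. */
    public MComplex mul(MComplex b) {
        double r = re * b.re - im * b.im;
        im = re * b.im + im * b.re;
        re = r;
        return this;
    }

    /** Squared magnitude, avoiding the square root. */
    public double abs2() {
        return re * re + im * im;
    }
}
```

The de-infixing is plain to see: what should be `z = z * z + c` has to be written as `z.mul(z).add(c)`, and anything longer than that quickly turns to mush.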

Right now coding complex arithmetic in C is far and away easier but the JNI required makes it a bit too painful for prototyping.

The only saving grace for me personally is that OpenCL C doesn't have them and if the algorithms make it that far I need to manually code the arithmetic up anyway.

Java and legacy operating systems, graphics drivers, &c.

So I had to jump into the same operating system the client uses to verify some code changes still worked - really just a formality as I have jjmpeg working fine there and I was only changing some Java code.

Pretty weird results though. First I had some unpleasant flashing when the code was painting a bitmap - I thought it might've been from the latest JRE update together with my habit of poking BufferedImage arrays directly, but I checked and this code doesn't do any of that. All it does is replace the bitmap pointer and request an invalidate of the component. Annoying but no show stopper.

But then the whole system crashed, ... graphics driver? Who knows. Probably. The AMD drivers are known to be ... problematic.

I rebooted, updated the AMD driver and rebooted again and in the short period of verification which followed I didn't see it happen again.

Except then I noticed something else - part of the application runs slower. Based on user input I do a bunch of deconvolutions on frequency-domain data, convert it back to spatial and merge the results to an image. It runs in another thread and on GNU/Linux it pretty much keeps up with as fast as the sliders move. Under the other system I noticed it was a bit laggy - nothing great but noticeable. The result of all this goes to a view which one can zoom using the scroll-wheel. Doesn't make any difference to the performance on GNU/Linux - it's just scaled during the paint() call, but it drops to a crawl on that other system if one zooms in a few times ... like down to 2-3 fps or something shocking.

As I was there I also tried the slideshow thing I wrote for JavaFX too. Apart from not traversing directories as I thought I was (bug), it was definitely slower there as well. Noticeable lags just as it started to transition in the next image. Possibly from GC, or from the blur effect.

I previously noticed other weirdness when I was playing with a Glassfish-based application a few months ago (in hindsight I should've just used the embedded webserver in SE, but I digress). I had few troubles getting it going on my system - just installed into ${HOME} and pointed netbeans at it - and just let it manage it. But there were all sorts of issues on the other system. In the end I had to use the command line tools to get anything to work (it was being used for development and running it, so having netbeans function would have been beneficial), and a few other bits of system pokery jiggery I shouldn't have needed.

Maybe it's just a Java thing, but shit just doesn't seem to work too well on that legacy system ... and that's before you count the unusual disk activity and long pauses opening applications.

JavaFX Media, or not, and a Video Cube.

I got a bit stuck at work today (yesterday) so I took the arvo off. It was the warmest day post-winter (25 degrees, but windy) which was a good excuse, but I also had a lazy look at the JavaFX media stuff.

Actually as per the updates on the previous post I also discovered how to enable GL acceleration on AMD hardware, and it gave the appropriate speed-up - given the previous iteration was using Java2D, it shows just how decent Java2D is (Java2D is rather nice).

But as to the media player - nice api - pretty weird results. Despite using ffmpeg, it only supports a limited number of formats and one of the transcoded tv shows I put through it played back oddly. Sound was ok, cpu usage was very low, but it skipped most frames, and not in a particularly regular way either - making it uncomfortable to watch. Who knows, maybe it's a non-standard encoding or just a bug with the current implementation.

So I guess I will port over jjmpeg at some point - it's not like it should be much work. ... although sound looks as painful as ever ...

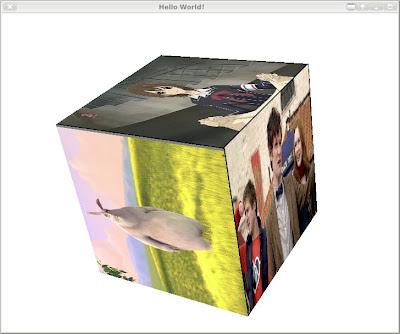

Video Cube

Ok I had another play with JavaFX this evening. It might not be as technically impressive as 9 Fingers, but this one is actually decoding the 6 videos concurrently (2xPAL MPEG TS, 4xlesser MP4), and runs smooth with only about 1.5 cpu cores busy (I7 6x core blah blah).

It looks a lot better in motion (actually I locked this orientation to get a screen capture - otherwise it updated too fast and mucked it up) ... seems to run 'full frame rate', with no tearing I can see. I'm not buffering any frames ahead of time or doing anything complex - but it seems to be keeping up with all the video decodes as well. I get the occasional jump if the system is busy - mostly netbeans displaying the debug logs.

Rather than using MediaPlayer I'm using jjmpeg to do the video decode as it supports more formats and plays back every frame properly on GNU/Linux. I just use a simple JJMediaReader loop, it runs libswscale to create PIX_FMT_BGRA at the original video resolution, and I set the pixels into the WritableImage using PixelFormat.getIntArgbPreInstance(). I'm using JavaFX to do the image scaling and all the rest. I do not decode (nor, obviously, play) the sound.

Hah. It's Java.

JavaFX Slideshow

Just to play with JavaFX and to put it through its paces I wrote a simple slide-show thing.

For a change of pace, i'll just paste the whole source code here.

Update: I noticed Stack Overflow was linking here, ... and that the code was broken: it had a bug of its own, and the final release of Java 8 also stopped ignoring null pointers in animations, which broke it again. I've fixed the code. Note that it still has the source location hard-coded to my home directory, so that needs editing (see ScannerLoader).

package au.notzed.slidez;

// this code is public domain

// This is not meant to be wonderful exemplary code, it was just
// my first experiment with JavaFX animations.

import java.io.IOException;
import java.nio.file.FileVisitResult;
import java.nio.file.FileVisitor;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.logging.Level;
import java.util.logging.Logger;
import javafx.animation.FadeTransition;
import javafx.animation.Interpolator;
import javafx.animation.ParallelTransition;
import javafx.animation.PauseTransition;
import javafx.animation.ScaleTransition;
import javafx.animation.SequentialTransition;
import javafx.application.Application;
import javafx.collections.ObservableList;
import javafx.event.ActionEvent;
import javafx.event.EventHandler;
import javafx.scene.Node;
import javafx.scene.Scene;
import javafx.scene.image.Image;
import javafx.scene.image.ImageView;
import javafx.scene.layout.StackPane;
import javafx.stage.Stage;
import javafx.util.Duration;

/**
 * Simple slide show with transition effects.
 */
public class SlideZ extends Application {

    StackPane root;
    ImageView current;
    ImageView next;
    int width = 720;
    int height = 580;

    @Override
    public void start(Stage primaryStage) {
        root = new StackPane();
        root.setStyle("-fx-background-color: #000000;");

        Scene scene = new Scene(root, width, height);

        primaryStage.setTitle("Photos");
        primaryStage.setScene(scene);
        primaryStage.show();

        // Start worker thread, and kick off first fade in.
        loader = new ScannerLoader();
        loader.start();

        Image image = getNextImage();

        if (image != null)
            startImage(image);
    }

    ScannerLoader loader;

    public void startImage(Image image) {
        ObservableList<Node> c = root.getChildren();

        // Drop the image that finished shrinking last time around.
        if (current != null)
            c.remove(current);

        current = next;
        next = null;

        // Create fade-in for new image.
        next = new ImageView(image);

        // Fit inside the window in both dimensions.
        next.setFitHeight(height);
        next.setFitWidth(width);
        next.setPreserveRatio(true);
        next.setOpacity(0);

        c.add(next);

        FadeTransition fadein = new FadeTransition(Duration.seconds(1), next);

        fadein.setFromValue(0);
        fadein.setToValue(1);

        PauseTransition delay = new PauseTransition(Duration.seconds(1));
        SequentialTransition st;

        if (current != null) {
            // Shrink the outgoing image while the new one fades in.
            ScaleTransition dropout;

            dropout = new ScaleTransition(Duration.seconds(1), current);
            dropout.setInterpolator(Interpolator.EASE_OUT);
            dropout.setFromX(1);
            dropout.setFromY(1);
            dropout.setToX(0.75);
            dropout.setToY(0.75);

            st = new SequentialTransition(
                new ParallelTransition(fadein, dropout), delay);
        } else {
            st = new SequentialTransition(
                fadein, delay);
        }

        // When the transition and pause are done, move on to the next image.
        st.setOnFinished(new EventHandler<ActionEvent>() {
            @Override
            public void handle(ActionEvent t) {
                Image image = getNextImage();

                if (image != null)
                    startImage(image);
            }
        });

        st.playFromStart();
    }

    @Override
    public void stop() throws Exception {
        loader.interrupt();
        loader.join();
        super.stop();
    }

    public static void main(String[] args) {
        launch(args);
    }

    // Bounded queue so the loader only ever runs a few images ahead.
    BlockingQueue<Image> images = new ArrayBlockingQueue<>(5);

    Image getNextImage() {
        if (loader.complete) {
            return images.poll();
        }
        try {
            return images.take();
        } catch (InterruptedException ex) {
            Logger.getLogger(SlideZ.class.getName()).log(Level.SEVERE, null, ex);
        }
        return null;
    }

    /**
     * Scans directories and loads images one at a time.
     */
    class ScannerLoader extends Thread implements FileVisitor<Path> {

        // Directory to start scanning for pics
        String root = "/home/notzed/Pictures";
        // Written by this thread, read by the GUI thread.
        volatile boolean complete;

        @Override
        public void run() {
            System.out.println("scanning");
            try {
                Files.walkFileTree(Paths.get(root), this);
                System.out.println("complete");
            } catch (IOException ex) {
                Logger.getLogger(SlideZ.class.getName())
                    .log(Level.SEVERE, null, ex);
            } finally {
                complete = true;
            }
        }

        @Override
        public FileVisitResult preVisitDirectory(Path t, BasicFileAttributes bfa)
            throws IOException {
            return FileVisitResult.CONTINUE;
        }

        @Override
        public FileVisitResult visitFile(Path t, BasicFileAttributes bfa)
            throws IOException {
            try {
                // Load synchronously, pre-scaled to the window size.
                Image image = new Image(t.toUri().toString(),
                    width, height, true, true, false);

                if (!image.isError()) {
                    images.put(image);
                }
            } catch (InterruptedException ex) {
                Logger.getLogger(SlideZ.class.getName())
                    .log(Level.SEVERE, null, ex);
                return FileVisitResult.TERMINATE;
            }
            return FileVisitResult.CONTINUE;
        }

        @Override
        public FileVisitResult visitFileFailed(Path t, IOException ioe)
            throws IOException {
            return FileVisitResult.CONTINUE;
        }

        @Override
        public FileVisitResult postVisitDirectory(Path t, IOException ioe)
            throws IOException {
            return FileVisitResult.CONTINUE;
        }
    }
}

The only 'tricky bit', if you could call it that, is the use of the Java 7 FileVisitor and a thread to load the files incrementally and asynchronously. Rather than try to make assumptions about the filename I just attempt to load every file I find and let the Image object tell me if it was valid or not.
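The same scan-on-a-thread idea can be sketched without any JavaFX at all. This is a variation rather than the code above: it extends SimpleFileVisitor so only the interesting callbacks need overriding, queues the paths rather than loaded images, and marks the end of the scan with a sentinel entry instead of a flag. The names `PathScanner`, `END` and `next()` are made up for the sketch.

```java
import java.io.IOException;
import java.nio.file.FileVisitResult;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.SimpleFileVisitor;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Sketch: walk a tree on a worker thread and hand files over a bounded queue. */
public class PathScanner extends Thread {
    /** Sentinel queued after the last file so a consumer is not left waiting. */
    public static final Path END = Paths.get("");

    private final Path root;
    private final BlockingQueue<Path> queue = new ArrayBlockingQueue<>(5);

    public PathScanner(Path root) {
        this.root = root;
        setDaemon(true);
    }

    @Override
    public void run() {
        try {
            Files.walkFileTree(root, new SimpleFileVisitor<Path>() {
                @Override
                public FileVisitResult visitFile(Path file, BasicFileAttributes attrs) {
                    try {
                        queue.put(file); // blocks while the consumer is behind
                        return FileVisitResult.CONTINUE;
                    } catch (InterruptedException ex) {
                        Thread.currentThread().interrupt();
                        return FileVisitResult.TERMINATE;
                    }
                }

                @Override
                public FileVisitResult visitFileFailed(Path file, IOException ex) {
                    return FileVisitResult.CONTINUE; // skip unreadable entries
                }
            });
        } catch (IOException ex) {
            // Scan failed part-way; fall through and still signal the end.
        } finally {
            try {
                queue.put(END);
            } catch (InterruptedException ex) {
                // Interrupted means we are shutting down; no sentinel is sent.
                Thread.currentThread().interrupt();
            }
        }
    }

    /** Next file, or null once the scan has finished (single consumer assumed). */
    public Path next() throws InterruptedException {
        Path p = queue.take();
        if (p == END) {
            queue.put(END); // keep the sentinel for later calls
            return null;
        }
        return p;
    }
}
```

The sentinel means the consumer cannot get stuck in `take()` if the scan finishes just after it checked whether the scan was complete, which the flag-based version can in principle do.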

But the JavaFX bit is pretty simple and straightforward, and i'm looking forward to playing with it further. It's given me a few ideas to try when I have some time and the weather isn't so nice as it is this weekend.

I'm not sure if the rendering pipeline is completely `GPU accelerated' yet on AMD hardware - the docs only mention that '3D' is only on NVidia so far.

If it isn't then the performance is ok enough - the CPU on this box is certainly capable of better mind you.

If it is, then it needs a bit more work. It can keep up ok with the simple fade and scale transitions I'm using at 580p, but adding a blur drops it right down, and trying to run it at 1920x1200 results in a pretty slow frame-rate and lots of tearing.

Every JavaFX application also crashes with a hot-spot backtrace when it finishes.

But the "main" problem with learning more JavaFX at this point for me is that it's going to make it more painful to maintain any Swing code I have, and it will make Android feel even more funky than it does already.

Update: So i've confirmed the GPU pipeline is not being used on my system. Bummer I guess, but at least the performance i'm getting is ok then.

If one sets -Dprism.verbose=true as a VM argument, it will print out which pipeline it uses.

Update 2: I found another option -Dprism.forceGPU=true which enables the GPU pipeline on the AMD proprietary drivers I'm using. Oh, that is much better. Added a gaussian blur-in and ran it full-screen and it keeps up fine (and so it should!). There's a jira bug to enable it, so I presume it isn't too far off in the main release.

Update 3: I've done another one with a more sophisticated set of animated-tile transitions as JavaFX Slideshow 2.

JavaFX + webcam, Android n shit.

For Friday Follies I thought i'd have a look at setting up a test harness for mucking about with object detection and so on on a webcam outside of OpenCL - and for a change of pace I thought i'd try JavaFX at last - now it's included in release Java runtime for all platforms it's time I had a look.

Well, i'm not sure i'm a fan of the squashed round-cornered buttons - but it does seem nice so far. No more need to write an 'OverlayView' to draw boxes on the screen. After fighting with some arse-pain from Android for the last couple of weeks it's nice to go back to Java proper again too.

One of the big oversights in the earlier incarnations - being able to load in-memory pixmaps into the scene - has now been fixed, and the solution is simple and elegant to boot.

For example, I hooked up video 4 linux for java to display webcam output with only 1 line of code (outside the rest of the v4l4j stuff).

writableImage.getPixelWriter().setPixels(0, 0, width, height,
        PixelFormat.getByteRgbInstance(), videoFrame.getBytes(),
        0, width * 3); // scanline stride is in bytes: 3 per RGB pixel

And all I need to do is add the writableImage to the scene. (occasionally when i start the application the window doesn't refresh properly, so i'm probably missing something - but it's quite fine for testing). It would be nice to have some YUV formats, but that should be possible with the api design.

Of course, I could do something similar with Swing, but that involved poking around some internals and making some assumptions - not to mention breaking any possible acceleration - and I still had to invoke a refresh manually.

There's a new set of concurrency stuff to deal with, but at least they seem fairly straightforward.

Let Swing ... swing?

I actually quite liked Swing. It did have a lot of baggage from the awt days but it was a nice enough toolkit to write to, and almost every component was re-usable and/or sub-classable which saved a heap of development effort (not that I'm claiming it was perfect either). The first time I used it in anger I'd just come from a few years fighting with that microsoft shit (jesus, I can't even remember what it was called now, it had fx in the name/predated silverlight) and coming to a toolkit with decent documentation, fewer performance issues, fewer bugs, the ability to subclass rather than forcing you to compose, and the friggan source-code was a big relief. (Not to mention: no visual studio).

But from what little I know about it so far, JavaFX sounds pretty good and I don't think I'll be missing Swing much either. At least now they've made it a proper Java toolkit, and fixed some shortcomings. The main benefit I see is that it eschews all the nasty X and native toolkit shit and just goes straight to the close-to-hardware apis rather than having to try to hack them in after the fact (although I found Swing ok to use from a developer standpoint, I would not have enjoyed working on its guts too much).

I'll definitely have to have more of a play with it, but so far it looks nice.

Android ...

Or rather, the shit lifecycle of an Android window ...

Until recently I just hadn't had time to investigate and implement orientation-aware applications - so all my prototypes so far were just working with a fixed orientation. Seems like an ok toolkit when used this way - sure it has some quirks but you get decent results for little effort.

But then I looked into supporting screen rotation ... oh hang on, that window object on which you were forced to write all your application code, suddenly goes away just because the user turned the phone?

Of course, the engineers at Google have come up with other solutions to work around this, but they all just add weird amounts of complication to an already complicated situation.

AsyncTask

So when I previously thought AsyncTask was a neat solution to a simple problem, I now realise it just makes things more complicated because of the lifecycle issue. Now there's some AsyncTaskLoader mess which can be used to replace what you might've used an AsyncTask for - but with about 5x as much boiler-plate and lots of 'I don't really get what's going on here' crap to deal with as well. Most of that 'not knowing' goes away with time, but the learning curve is unnecessarily steep.

AsyncTask is simple because it's just a bit more around Runnable, saving you having to call runOnUiThread explicitly. AsyncTaskLoader on the other hand is a whole 'lets pretend static resources are bad unless it's part of the toolkit' framework designed to separate your application from its source code. i.e. it adds hidden magic. Hidden magic isn't abstraction, it's dumb.
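To show how little AsyncTask really is, here is the same idea sketched in plain Java with no Android classes at all. `MiniAsync` is a made-up name, and the `ui` executor is only a stand-in for whatever posts work back to the GUI thread; nothing here is the real Android implementation.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

/** Sketch of the AsyncTask idea in plain Java; not the Android class. */
public class MiniAsync {
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final Executor ui; // stand-in for posting to the GUI thread

    public MiniAsync(Executor ui) {
        this.ui = ui;
    }

    /** Run 'background' off-thread, then deliver its result via the ui executor. */
    public <T> void execute(Callable<T> background, Consumer<T> onResult) {
        worker.execute(() -> {
            try {
                T result = background.call();
                ui.execute(() -> onResult.accept(result));
            } catch (Exception ex) {
                // A real version would report the failure to the caller.
            }
        });
    }

    /** Stop accepting work; queued tasks still run. */
    public void shutdown() {
        worker.shutdown();
    }
}
```

Nothing in it knows about windows or their lifecycle, which is exactly why it is simple, and also why it falls over the moment the window it wants to report back to has been thrown away.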

You've been fragged!

Then take fragments. Go on ... please? So as if tying application logic to user windows wasn't enough, now one does the same for individual components of windows. A large part of why they exist just seems to be to be able to support orientation changes whilst preserving live objects - but it seems a pretty round-about way of doing it (they are also purportedly for supporting layout changes, but you can already do that ...).

But one of the biggest problems is the documentation. For the few times there is any, it is way out of date - when you find an official article explaining how to do something you soon find out that way was deprecated when you go to the class doco ... Obviously google are penny pinching on this one, you'd think with their resources they could do a better job to keep everything in sync - even Sun did a much better job and Oracle are probably better again.

To be honest, I think the main issue I have personally with Fragments is the name itself - I kind of get what they're for, building activities from parts - but obviously all the meaningful names were taken (hell, even 'widget' means more). But the name 'fragment' just has no useful meaning in this context - fragments are what you have left over after you've blown shit up. Fragments are what a plate becomes after dropping one on a cement floor. Or what was left of the longneck I left too long in the freezer the other night (well, fragments and beery ice).

It is not a word of which things are constructed, it's just the mess left after some catastrophic mechanical failure.

(and the fact there are UI+logic, and logic-only 'fragments' surely does not help either)

The GoogleWay(tm)

Unfortunately, this all just forces you to code exactly the way google wants you to - which is fine when the problem suits, but it's a bit of a pain to learn just what that `way' actually is in the first place ...

In the end I just had to put everything in a database, and pass around id's to keep track of the state. Although it works there are still some complications. For example if you have a fragment which maintains its state during an orientation change, you then have to presumably code your activity to only load the state if it doesn't already have one, or fuck around with some other crap in the fragment to deal with it. I think my code just ends up reloading everything anyway, but at least it's on another thread and the user doesn't notice. If you set your object id's properly in your list adapter (now there's another 'lets mix different types of code' mess) then it mostly just works.

Once you've finally done all that ... then at least it does work out more or less, and trying to implement the 'proper' lifecycle stuff (whatever that is) becomes a whole lot easier too.

Internode Streaming Radio App

So last night I threw together a simple application for accessing my ISP's streaming radio proxies from android. Now I have a box plugged into my stereo, suddenly I find a use for such a programme.

It can be found over on a page on my Internode account. I don't have time to up the source, but if i work on other additions (which personally I don't need), i will probably create a project somewhere for it.

You'd really think playing a remote playlist was a pretty basic function - but it took less time to write this (I just made some very small changes to the RandomMusicPlayer from the samples) than to try to find one in the 'play' store that bloody worked. It's not that they were just clumsy, ugly, really slow (for no apparent reason) - I didn't get a single beep out of them either.

I actually first mucked about with JJPlayer from jjmpeg and did get it working playing music from a selected .m3u file in a browser, but I decided that was a bit too hacky and clumsy. And besides if MediaPlayer worked with the internode streams, I would just use that. They did so that's what I went with. The RandomMusicPlayer sample I started with pretty much does all the hard work - it runs as a service, works in the background (even with the Mele 'off'!!!), and so on.

I put a link to it on my internode home page - which has been sitting idle for a few years.

Note:

These streams are only available to Internode customers, a local ISP. There's no point looking at this if you're not one.

Also, I am not affiliated with Internode in any way.

But I am off to listen to some retro electronica ...

Update: I updated the app to be a bit more properer, and wrote another post about it.

Update: Moved the home page.

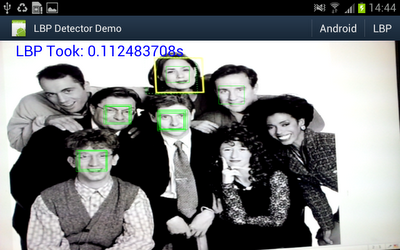

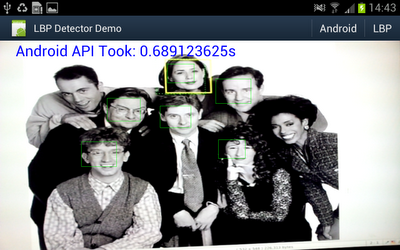

Object detector in action

Well I really wanted to see if the object detector I came up with actually works in practice, and whether all that NEONifying was worth it. Up until now i've just been looking at heat-maps from running the detector on a single still image.

So I hacked up an android demo (I did start on the beagleboard but decided it was too much work even for a cold wintry day), and after fixing some bugs and summation mathematics errors (must fix the other post) I managed to get some nice results.

A bit under 10fps. And for comparison I hooked up some code to switch between my code or the android supplied one.

Well under 2fps.

I was just holding the "phone" up to a picture on my workstation monitor.

Some information on what's going on:

- Device is a Samsung Galaxy Note GT-N7000 running ICS. This one has a dual core ARM Cortex-A9 CPU @ 1.4GHz.

- Input image is 640x480 from the live preview data from the Camera in NV12 format (planar y, packed uv).

- Both detectors are executing on another thread. I'm timing all the code from 'here's the pixel array' to 'thanks for the results'.

- The LBP detector is using very conservative search parameters: from 2x window (window is 17x17 pixels) to 8x window, in scale steps of 1.1x. This seems to detect a similar lower-sized limit as the android one - and the small size limit is a major factor in processing time.

- At each scale, every location is tested.

- Android requires a 565 RGB bitmap, which i create directly in Java from the Y data.

- The LBP detector is just using the Y data directly, although needs to copy it to access it from C/ASM.

- The LBP detector pipeline is mostly written in NEON ASM apart from the threshold code - but that should optimise ok from C.

- A simple C implementation of the LBP detector is around 0.8s for the same search parameters (scaling and lbp code building still uses assembly).

- The LBP detector is showing the raw thresholded hits, without grouping/post-processing/non-maximum suppression.

- The LBP code is only using a single core (as the android one also appears to)

- The LBP classifier is very specifically trained for front-on images, and the threshold chosen was somewhat arbitrary.

- The LBP classifier is barely tuned at all - it is merely trained using just the training images from the CBCL data set (no synthesised variations), plus some additional non-natural images in the negative set.

- Training the LBP classifier takes about a second on my workstation, depending on i/o.

- Despite this, the false positive rate is very good (in anecdotal testing), and can be tuned using a free threshold parameter at run-time.

- The trained classifier definition itself is just over 2KB.

- As the LBP detector is brute-force and deterministic this is the fixed-case, worst-case performance for the algorithm.

- With the aggressive NEON optimisations outlined over recent posts - the classifier itself only takes about 1 instruction per pixel.
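The 565 conversion mentioned in the list is about as simple as image conversion gets. A minimal sketch, assuming the luma plane is the first width*height bytes of the preview buffer; `GreyTo565` is an illustrative name and this is not the code from the demo:

```java
/** Sketch: expand 8-bit luma into RGB565 grey pixels (names are illustrative). */
public class GreyTo565 {
    /** Convert the first width*height bytes of a Y plane to RGB565. */
    public static short[] convert(byte[] y, int width, int height) {
        short[] out = new short[width * height];
        for (int i = 0; i < out.length; i++) {
            int v = y[i] & 0xff;  // bytes are signed in Java
            int r = v >> 3;       // 5 bits of red
            int g = v >> 2;       // 6 bits of green
            int b = v >> 3;       // 5 bits of blue
            out[i] = (short) ((r << 11) | (g << 5) | b);
        }
        return out;
    }
}
```

The chroma bytes that follow the Y plane are simply ignored, which is all a greyscale detector input needs.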

A good 6x performance improvement is ok by me using these conservative search parameters. For example if I simply change the scale step to 1.2x, it ends up 10x faster (with still usable results). Or if I increase the minimum search size to 3x17 instead of 2x17, execution time halves. Doing both results in over 25fps. And there's still an unused core sitting idle ...
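A quick way to see why those two parameters matter so much is to count the work. This is a rough sketch, not the detector: it assumes the image is shrunk by each scale factor and the 17x17 window is then tested at every location, which is my reading of the list above. `ScaleSearch` and its methods are made-up names.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: enumerate detector scales and count windows for a brute-force search. */
public class ScaleSearch {
    static final int WINDOW = 17; // classifier window edge, from the post

    /** Scales from min to max (multiples of the window) in geometric steps. */
    public static List<Double> scales(double min, double max, double step) {
        List<Double> out = new ArrayList<>();
        for (double s = min; s <= max; s *= step)
            out.add(s);
        return out;
    }

    /**
     * Windows tested if the image is shrunk by each scale and every location
     * is probed (an assumption about how the search is arranged).
     */
    public static long windows(int width, int height, double min, double max, double step) {
        long total = 0;
        for (double s : scales(min, max, step)) {
            int w = (int) (width / s), h = (int) (height / s);
            if (w >= WINDOW && h >= WINDOW)
                total += (long) (w - WINDOW + 1) * (h - WINDOW + 1);
        }
        return total;
    }

    public static void main(String[] args) {
        // Baseline, coarser step, and larger minimum size, all on a 640x480 frame.
        double[][] configs = { { 2, 8, 1.1 }, { 2, 8, 1.2 }, { 3, 8, 1.1 } };
        for (double[] c : configs)
            System.out.println(c[0] + "x.." + c[1] + "x step " + c[2] + ": "
                + scales(c[0], c[1], c[2]).size() + " scales, "
                + windows(640, 480, c[0], c[1], c[2]) + " windows");
    }
}
```

With the parameters from the post, `scales(2, 8, 1.1)` gives 15 scales against 8 for a 1.2x step, and the smallest scales carry most of the windows because that is where the shrunken image is largest.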

As listed above - this represents the worst-case performance, unlike Viola & Jones, whose execution time is dynamic and depends on the average depth of cascade executed.

But other more dynamic algorithms could be used to trim the execution time further - possibly significantly - such as a coarser search step, or a smaller subset/smaller scale classifier used as a 'cascaded' pruning stage. Or they could be used to improve the results - e.g. more accurate centring.

Update: Out of curiosity I tried an 8x8 detector - this executes much faster, not only because it's doing under 25% of the work at the same scale, but more to the point because it runs at smaller image sizes for the same detected object scale. I created a pretty dumb classifier using scaled versions of the CBCL face set. It isn't quite reliable enough for a detector on its own, but it does run a lot faster.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!