About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

Java, C, SSE, poor man's lambdas

So after being able to avoid them for decades I got sucked into having to do some matrix maths this week. I'm mostly just using a library, but there were some memory and performance problems so I had to investigate my own matrix multiply routine.

Java vs gcc

Mostly out of curiosity I tried comparing C to Java ... and the performance difference was negligible; sometimes the Java CPU time was actually negligibly less. And I'm timing the programme's execution from the command line, so that includes JVM startup and just-in-time compilation. I expected more of a difference in the obvious direction given the JVM overheads, so that was a nice surprise. I suppose I shouldn't really be surprised by the performance anymore ...

And then further curiosity led me to attempt a vector-based implementation, just using the gcc vector types. This was only about 2.4x faster; it would be worth it, but I just used threads and that works fast enough anyway.

The vector implementation in gcc is simple: one just defines a vector type and then it's much the same as OpenCL, although one must ensure the data is aligned properly, otherwise performance is pants.
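A minimal sketch of the gcc vector-type approach, assuming an SSE-class target and arrays whose length is a multiple of 4. `v4sf` and `madd4()` are illustrative names, not the code from the benchmark above; the aligned buffers can come from C11 `_Alignas(16)` or `aligned_alloc(16, ...)`.

```c
#include <assert.h>

/* gcc vector extension: four floats in one 16-byte value. may_alias lets a
 * v4sf pointer legally view a plain float array (the same trick <xmmintrin.h>
 * uses for __m128). */
typedef float v4sf __attribute__ ((vector_size (16), may_alias));

/*
 * dst[i] = a[i] * b[i] + c[i], four lanes per iteration.
 * Assumes n is a multiple of 4 and every pointer is 16-byte aligned: gcc may
 * emit aligned load/store instructions here, so misaligned data can fault
 * rather than merely run slowly.
 */
static void madd4(float *dst, const float *a, const float *b, const float *c, int n)
{
    assert(n % 4 == 0);
    for (int i = 0; i < n; i += 4) {
        v4sf va = *(const v4sf *)(a + i);
        v4sf vb = *(const v4sf *)(b + i);
        v4sf vc = *(const v4sf *)(c + i);
        /* Ordinary operators work element-wise, much like OpenCL's float4. */
        *(v4sf *)(dst + i) = va * vb + vc;
    }
}
```

The code reads like scalar C; the only real obligation on the caller is the alignment.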

TBH I'm a little disappointed hotspot isn't doing any SSE optimisations here automatically (or maybe it is, but the execution time compared to C is too close for it to be a coincidence).

WorkPool

To implement the multi-threaded code I started using a poor man's implementation of lambdas, or at least the parallel foreach part of it, which is IMHO the main point of interest. I'm waiting for the JDK 8 GA release before pushing any Java 8 requirement onto my customer. It doesn't work as well as the lambda code in JDK 8, but it isn't too far off and the syntax is fine as far as I'm concerned.

For simplicity I just have a static class which manages one thread per cpu with a simple static foreach call:

```java
public class WorkPool {

    // ... threads stuff ...

    public interface WorkItem {
        public void accept(int i);
    }

    public static synchronized void foreach(int start, int end, WorkItem job) {
        // ... statically partition work across threads ...
        // ... pass job to threads ...
        // ... await completion ...
    }
}
```

With obvious usage:

```java
WorkPool.foreach(0, N, new WorkItem() {
    public void accept(int i) {
        array[i] = blah ...;
    }
});
```

It's a bit clumsy without the 'effectively final' of Java 8, and arguably clumsy due to the syntax, but if each WorkItem does a sizeable amount of work the overheads are acceptable. More importantly it gets me thinking about how to solve problems in ways that will map immediately to Java 8 when I start using it.

There's some other interesting stuff about the parallel execution model that I want to talk about, but I'll leave that for another post: mapping non-rectilinear workloads to a linear index, and job execution order.

On another note, I keep running into problems with thread job management in Java and keep ending up writing custom solutions. Executors seem like a great idea ... and they probably are for enterprise workloads, but they basically suck for interactive desktop programmes, where you may be getting many, many more update requests than you have time to process but you only need to keep (and must keep) the last one. Managing this with Future handles gets clumsy very fast. JavaFX comes with a new set of 'worker thread' primitives which I haven't really looked into much; they seemed to be light wrappers over existing functionality anyway and only seemed to muddy the waters last time I had a look.

My current implementation of WorkPool uses a thread-pool executor, but I will look at custom thread code too (curiosity again). Currently I'm also only implementing static scheduling, but it will be worth investigating something more dynamic.
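For comparison, the static-scheduling idea behind `foreach()` can be sketched in C with POSIX threads. This is a hypothetical illustration (`foreach_static`, `work_fn` and `square_into` are made-up names): unlike WorkPool it starts fresh threads on every call instead of keeping one per CPU, and it skips error handling on `pthread_create()`.

```c
#include <assert.h>
#include <pthread.h>

/* Called once per index, like WorkItem.accept(int i). */
typedef void (*work_fn)(int i, void *arg);

struct chunk {
    int start, end;     /* half-open range [start, end) handled by one thread */
    work_fn job;
    void *arg;
};

static void *run_chunk(void *p)
{
    struct chunk *c = p;
    for (int i = c->start; i < c->end; i++)
        c->job(i, c->arg);
    return NULL;
}

#define MAX_THREADS 64

/* Statically partition [start, end) into nthreads near-equal contiguous chunks. */
static void foreach_static(int start, int end, int nthreads, work_fn job, void *arg)
{
    pthread_t tid[MAX_THREADS];
    struct chunk chunks[MAX_THREADS];
    long long n = end - start;

    assert(nthreads > 0 && nthreads <= MAX_THREADS && end >= start);
    for (int t = 0; t < nthreads; t++) {
        chunks[t].start = start + (int)(n * t / nthreads);
        chunks[t].end = start + (int)(n * (t + 1) / nthreads);
        chunks[t].job = job;
        chunks[t].arg = arg;
        pthread_create(&tid[t], NULL, run_chunk, &chunks[t]);
    }
    /* Await completion before returning, as foreach() does. */
    for (int t = 0; t < nthreads; t++)
        pthread_join(tid[t], NULL);
}

/* Example job: out[i] = i * i. */
static void square_into(int i, void *arg)
{
    ((int *)arg)[i] = i * i;
}
```

Build with `-pthread`. A dynamic scheduler would instead have each thread repeatedly claim the next index (or block of indices) from a shared atomic counter, which copes better when items take uneven amounts of time.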

fpu mode, compiler options

Poked a bit more at the 2D scaler on the parallella yesterday. I started by just working out all the edge cases for the X scaler, but then I ended up delving into compiler options and assembler optimisations.

Because the floating point unit has some behaviour defined by the CONFIG register, the compiler needs to twiddle bits quite a bit, and by default it seems to do it more often than you'd expect. And because it supports writing interrupt handlers in C, it also requires any of these bit twiddles to occur within an interrupt-disable block. Fun.

To cut a long story short I found that fiddling with the compiler flags makes a pretty big difference to performance.

The flags which seemed to produce the best result here were:

```
-std=gnu99 -O2 -ffast-math -mfp-mode=truncate -funroll-loops
```

Actually the option that had the biggest effect was -mfp-mode=truncate, as that removes many of the (redundant) mode switches.

What I didn't expect, though, is that the CONFIG register bits also seem to have a big effect on the assembly code. By adding this to the preamble of the linear interpolator function I got a significant performance boost: without it, it takes about 5.5Mcycles per core, but with it it's about 4.8Mcycles!?

```asm
mov r17,#0xfff0
movt r17,#0xfff1
mov r16,#1
movfs r12,CONFIG
and r12,r12,r17 ; set fpumode = float, turn off exceptions
orr r12,r12,r16 ; truncate rounding
movts CONFIG,r12
```

It didn't make any difference to the results whether I did this or not.

Not sure what's going on here.
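The two constants in that preamble are easier to read written out in C. This only models the arithmetic on the register value (reading and writing CONFIG still needs `movfs`/`movts`); the bit meanings are taken from the asm comments above rather than checked against the manual, and `force_config()` is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* r17 = 0xfff1fff0: keeps everything except bits 0-3 and bits 17-19. */
#define CONFIG_KEEP_MASK 0xfff1fff0u
/* r16 = 1: the "truncate rounding" bit per the asm comment. */
#define CONFIG_TRUNCATE  0x00000001u

/* New CONFIG value: float mode, exceptions off, truncate rounding. */
static uint32_t force_config(uint32_t config)
{
    return (config & CONFIG_KEEP_MASK) | CONFIG_TRUNCATE;
}
```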

I have a very simple routine that resamples a single line of float data using linear interpolation. I was trying to determine whether such a simple routine would compile OK or would need me to resort to assembly language for decent performance. At first it looked like assembly was needed, until I used the compiler flags above (although I later noticed I'd left in an option to disable inlining of functions, which I was using to investigate compiler output, and which may have contributed).

The sampler I'm using is just (see here for a nice overview):

```c
static inline float sample_linear(float * __restrict__ src, float sxf) {
    int sx = (int)sxf;      // integer sample position (truncates, so assumes sxf >= 0)
    float r = sxf - sx;     // fractional part
    float y1 = src[sx];
    float y2 = src[sx+1];

    return (y1*(1-r)+y2*r);
}
```

Called from:

```c
static void scale_linex(float * __restrict__ src, float sxf, float * __restrict__ dst, int dlen, float factor) {
    int x;

    // Advance the source position by 'factor' for each output pixel.
    for (x=0;x<dlen;x++) {
        dst[x] = sample_linear(src, sxf);
        sxf += factor;
    }
}
```

A straight asm implementation is reasonably simple, but there are a lot of dependency stalls.

```asm
mov r19,#0 ; 1.0f
movt r19,#0x3f80

;; linear interpolation
fix r16,r1 ; sx = (int)sxf
lsl r18,r16,#2
float r17,r16 ; (float)sx
add r18,r18,r0
fsub r17,r1,r17 ; r = sxf - sx
ldr r21,[r18,#1] ; y2 = src[sx+1]
ldr r20,[r18,#0] ; y1 = src[sx]
fsub r22,r19,r17 ; 1-r
fmul r21,r21,r17 ; y2 = y2 * r
fmadd r21,r20,r22 ; res = y2 * r + y1 * (1-r)
```

(I actually implement the whole resample-row routine, not just the sampler).

This simple loop is much faster than the default -O2 build, but slower than the C version with better optimisation flags. I can beat the C compiler with an implementation which processes 4 output pixels per loop, allowing for better scheduling with fewer stalls, and dword writes to the next core in the pipeline. But the gain is only modest for the amount of effort required.
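A rough C rendering of the 4-outputs-per-iteration idea (not the asm version that was benchmarked): four independent interpolations per trip give the compiler room to interleave loads and FPU work and hide latencies. The source position is still accumulated one `factor` at a time, so the sample positions match `scale_linex()`. `scale_linex4()` is a hypothetical name; it assumes `sxf` stays non-negative and that `src` has one extra sample past the last position read.

```c
#include <assert.h>

static inline float sample_linear(const float *restrict src, float sxf)
{
    int sx = (int)sxf;          /* truncation == floor for sxf >= 0 */
    float r = sxf - sx;         /* fractional part */
    return src[sx] * (1 - r) + src[sx + 1] * r;
}

static void scale_linex4(const float *restrict src, float sxf,
                         float *restrict dst, int dlen, float factor)
{
    int x = 0;

    assert(sxf >= 0.0f);
    /* Main loop: four independent samples per iteration. */
    for (; x + 4 <= dlen; x += 4) {
        float s0 = sxf;
        float s1 = s0 + factor;   /* same sequence of additions as the */
        float s2 = s1 + factor;   /* scalar loop, so positions are identical */
        float s3 = s2 + factor;
        dst[x]     = sample_linear(src, s0);
        dst[x + 1] = sample_linear(src, s1);
        dst[x + 2] = sample_linear(src, s2);
        dst[x + 3] = sample_linear(src, s3);
        sxf = s3 + factor;
    }
    /* Tail: the remaining dlen % 4 outputs. */
    for (; x < dlen; x++) {
        dst[x] = sample_linear(src, sxf);
        sxf += factor;
    }
}
```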

Overview of

| Routine | Mcycles per core |
|---|---|
| C -O2 | 10.3 |
| C, flags as above | 4.2 |
| asm 1x | 5.3 |
| asm 1x, force CONFIG | 4.7 |
| asm 4x | 3.9 |
| asm 4x, force CONFIG | 3.8 |

I'm timing the total instruction cycles on the core, which includes the synchronisation work. The image is 512x512, scaled by 1.7x in X and 1.0x in Y.

On a semi-related note, I was playing with the VJ detector code and noticed the performance scalability isn't quite so good on PAL-res images, because in practice the image being searched is very small, i.e. parallelism isn't so hot. I hit this problem with the OpenCL version too, and the solution is probably the same as I used there: go wider. Basically, generate all probe scales at once and then process them all at once.
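One way to "go wider" is sketched below, purely as an illustration: compute every probe scale up front and lay all of their rows out in a single flat index space, so one parallel foreach over `[0, total_rows)` keeps every core busy instead of running a separate, too-small pass per scale. The names, the fixed `MAX_LEVELS`, and the use of image rows as the unit of work are assumptions; rows at smaller scales are narrower, so the load only balances roughly.

```c
#include <assert.h>

#define MAX_LEVELS 32

typedef struct {
    int nlevels;
    int width[MAX_LEVELS], height[MAX_LEVELS];
    int first_row[MAX_LEVELS];   /* flat index of each level's row 0 */
    int total_rows;              /* size of the flat index space */
} scale_plan;

/* Enumerate scales 1, step, step^2, ... until the image is smaller than the detector window. */
static void plan_scales(scale_plan *p, int w, int h, float step, int min_size)
{
    float s = 1.0f;

    assert(step > 1.0f);
    p->nlevels = 0;
    p->total_rows = 0;
    while (p->nlevels < MAX_LEVELS) {
        int lw = (int)(w / s), lh = (int)(h / s);
        if (lw < min_size || lh < min_size)
            break;
        p->width[p->nlevels] = lw;
        p->height[p->nlevels] = lh;
        p->first_row[p->nlevels] = p->total_rows;
        p->total_rows += lh;
        p->nlevels++;
        s *= step;
    }
}

/* Map a flat work index back to (level, row within that level). */
static void flat_to_level(const scale_plan *p, int flat, int *level, int *row)
{
    int l = p->nlevels - 1;

    assert(p->nlevels > 0);
    while (l > 0 && p->first_row[l] > flat)
        l--;
    *level = l;
    *row = flat - p->first_row[l];
}
```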

Sharing DRAM on parallella - the easier way.

Currently parallella reserves a block of memory at a fixed physical address which the epiphany accesses directly, and which can be mapped into a Linux process via e_alloc(). But as e_alloc() just calls mmap(NULL, ...), the Linux-side address is different, and in fact it will create a new alias for every e_alloc() invocation with the same parameters.

This makes it a bit of a pain to use as memory locations must be calculated manually via offsets on at least one end.

With the current parallella-16 the shared block is accessible on the epiphany via 0x8e000000, and I was wondering if it could just be mapped to the same location on the ARM side. It's been a while since I looked at the memory map of Linux and I wasn't sure if there was anything there, and it turns out there isn't: using cat /proc/pid/maps I found that it's somewhere between the heap and the stack, and a long way away from both.

So ... instead of calling e_alloc() you can just use mmap directly to create a shared area that can be used to pass complex linked data structures without having to perform any address remapping by hand, which is error-prone and simply a big PITA.

e.g. to map 8K at the start of this block on the parallella-16 I have:

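```c
#include <fcntl.h>
#include <stdio.h>
#include <sys/mman.h>

// parallella-16: physical 3e000000 on arm == physical 8e000000 on epiphany
int fd = open("/dev/mem", O_RDWR | O_SYNC);
void *pmem = mmap((void *)0x8e000000, 8192, PROT_READ|PROT_WRITE, MAP_SHARED,
                  fd, 0x3e000000);

// Without MAP_FIXED the address is only a hint, so check it was honoured.
if (pmem == MAP_FAILED)
    perror("mmap");
else if (pmem != (void *)0x8e000000)
    fprintf(stderr, "shared block mapped at %p, not 0x8e000000\n", pmem);
```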

The specific numbers may vary on a given platform or configuration and can be determined at runtime.

This makes using the shared DRAM a lot easier including features like dynamic memory management (aka malloc).
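As a minimal sketch of the kind of allocator this enables, here is a hypothetical bump allocator over the shared block (`shared_arena` and its functions are made-up names). It assumes the mapping landed at the epiphany's address, so pointers it hands out can be stored in shared structures and followed from either side. It never frees, and real cross-core use would still need a lock or a single allocating side.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t *base;   /* e.g. the pmem returned by mmap() above */
    size_t size;
    size_t used;
} shared_arena;

static void arena_init(shared_arena *a, void *base, size_t size)
{
    assert(base != NULL);
    a->base = base;
    a->size = size;
    a->used = 0;
}

/* 8-byte aligned (given an aligned base); returns NULL once the block is exhausted. */
static void *arena_alloc(shared_arena *a, size_t n)
{
    size_t off = (a->used + 7) & ~(size_t)7;

    if (off > a->size || n > a->size - off)
        return NULL;
    a->used = off + n;
    return a->base + off;
}
```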

microsoft's ex-box one more WTF?

So microsoft's xbox-one-again is out and a few weird things have come to light.

Batshit Insanity

Firstly, it's obvious that they still just don't get it. The general populace, the 'mass market', do not want a PC under their TV; they want an appliance. But they've gone and dropped in a full-blown 'metro' interface, which looks confusing as hell to start with, and looks like a total headfuck on a TV with a controller. That's completely apart from the decidedly non-mass-market price.

Nobody's PAL

And then there's the whole tv integration ... no 50hz mode? What?

I'm pretty down on the 'tech press' already, but their claim that this is somehow 'impossible to fix' is pretty laughable. Every PS2 or PS3 game in PAL regions also supports direct 50Hz output, because they supported composite out. Given that most of a game is rendered in real time, it's a trivial run-time alteration (literally a couple of numbers) to change either the rendering resolution or the output framerate. A bonus here is that you have considerably more frame time as well, so games always run smoother at 50/25 compared to 60/30. The only stuff that can't be easily fixed for 50Hz output is pre-rendered video (aka 'FMV'), and that CAN be fixed by just recording two versions at the different frame rates, which is what the high-budget games have usually done. And even then it isn't really that important for lower-budget games; a few judders during non-interactive game play is no big deal.

Apart from that, 50Hz is better for ALL video content apart from native NTSC recordings anyway, which are a legacy from electronic pre-history. So for a so-called 'all in one media solution' to force a shitty PC-compatible 60Hz is utterly nonsensical. If you're used to watching YouTube or videos on your PC you probably wouldn't notice, but it just totally shits up the picture.

Is it gaming, or is it gaming?

The other big thing to come to light is the attempt to push the revenue model up significantly higher than the selling-disks model will ever be able to provide.

Here in Australia 'gaming' generally refers not to computer games but to the computerised gambling industry. An awful lot about the intended revenue models (and mobile/tablet 'free to play' games in general) is shared with this despicable industry, which preys on people psychologically to fleece them of their cash. Even words being thrown around like 'whales' come directly from that industry.

And gaming is big money compared to the computer game industry.

Unfortunately it seems 'computer games' are headed, at least in part, toward this gambling revenue model; anywhere there is this much money to be had, it will be actively sought out. Companies that don't embrace it will be fighting for the scraps, but hopefully they'll be able to survive, and hopefully this is just a passing fad (or gets regulated out of the market).

If one looks at a graph of revenue from microsoft's entertainment division vs the other business units (I think there's a plot from semiaccurate or somewhere that shows this), something jumps right out at you: one has been bumbling along at insignificant profits or losses for a decade, whereas the other units generate obscene profits. microsoft will not be in this business much longer if they cannot find a way to bridge that gap, and this gambling-based user-fleecing revenue model is just one of the despicable anti-customer ways they will attempt it. It will be interesting to see if they can manage it ...

Bundling with Video Services

This is another strange idea coming out of some of the analyst houses and tech press: some weird notion that entertainment-providing companies will 'partner' with microsoft to deliver content through its hardware.

Now there's an idea which is bat-shit insane.

The thought that any company would willingly GIVE AWAY its entire family jewels to a DIRECT COMPETITOR is just embarrassing. The only way it might happen is if entryism takes over the board, as it did with nokia, and destroys the company from the inside.

And it's a pretty obvious conclusion from applying a bit of common sense: if this was going to happen they'd never have bothered with the dumb, out-dated idea of a video pass-through in the first place. That just seems a decade late ... at best.

Automagic cross-core symbol linking.

So one of the problems with the loader/linker code I've been working on is that you still need to manually link symbols on other cores ... which is rather error-prone and frankly a bit of a pain. I was looking at how to wrap some of that pain in macros and had another thought.

It relies on the fact that, as is usually the case, relocs can have an additional fixed pointer-sized offset (the addend) added to them.

Basically the idea is that when you reference an external core, you define a work-group relative address by providing an addend which defines the group-relative core address in the upper 12 bits as normal. At load time this external-core link is detected and offset by the workgroup base (which can be dynamic). It should still work cleanly for all the normal cases like referencing a member of a struct and so on which is what these relative addends are for.

A few simple macros should make it trivial to use but in raw code to define a reference to 'bufferx' in a core which is in column 1:

```c
extern void *bufferx __attribute__ ((weak));

void *refx = (1<<20) + (void *)&bufferx;
```

Each case would need special handling in the link-loader for the core-address bits (row=31-26, col=25-20, iirc, or vice-versa; it isn't important here):

- row == 0, col == 0
  - Left alone: remains a local address. Allows for programmatic resolution as I'm currently using via elib.
- row == 0, col != 0
  - Resolves to this.row, group.col + col (alternatively this.col +- col).
- row != 0, col == 0
  - Resolves to group.row + row (alternatively this.row +- row), this.col.
- row != 0, col != 0
  - Resolves to group.row + row, group.col + col (alternatively this.row +- row, this.col +- col).
- Outside of workgroup or chip?
  - Undefined behaviour? Leave it as is? Clamp? Let it resolve as above?
- Matches dram "window" address.
  - Leave it alone.

Where group is the group root, and this is the core on which the code resides. I thought of using the non-zero values as 'this relative', but there aren't enough bits for a signed offset (actually, there are if I use wrap-around ... hmm, interesting thought; the more I think about it the more I think it's the better solution, as otherwise you can't reference 0,x or x,0). Given that a 64x64-core chip with 1MB of LDS per core might be some time away, I could always abuse some of the addressing bits for extra information anyway, but that's probably not a wise idea for the little benefit it might provide.
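The group-relative cases can be sketched as a small link-loader helper. This is a hypothetical illustration, not the loader's actual code: it assumes the row is in bits 31-26 and the column in bits 25-20 (the text notes these may be the other way round), expects the caller to have already filtered out DRAM-window addresses, and simply asserts on anything that would land off-chip.

```c
#include <assert.h>
#include <stdint.h>

#define ROW_SHIFT  26           /* core row in bits 31-26 (assumed) */
#define COL_SHIFT  20           /* core column in bits 25-20 (assumed) */
#define FIELD_MASK 0x3fu        /* 6 bits each */
#define LOCAL_MASK 0x000fffffu  /* 1MB local address within a core */

static uint32_t resolve_core_address(uint32_t addr,
                                     unsigned group_row, unsigned group_col,
                                     unsigned this_row, unsigned this_col)
{
    unsigned row = (addr >> ROW_SHIFT) & FIELD_MASK;
    unsigned col = (addr >> COL_SHIFT) & FIELD_MASK;

    if (row == 0 && col == 0)
        return addr;   /* plain local address: left alone */

    /* Zero field: same as this core. Non-zero field: offset from the group root. */
    unsigned r = row ? group_row + row : this_row;
    unsigned c = col ? group_col + col : this_col;

    assert(r <= FIELD_MASK && c <= FIELD_MASK);   /* off-chip: not handled here */
    return (r << ROW_SHIFT) | (c << COL_SHIFT) | (addr & LOCAL_MASK);
}
```

The wrap-around 'this relative' variant would replace the two ternaries with something like `(this_row + row) & FIELD_MASK`, which is what makes references to row or column 0 of the group expressible.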

This will cover most of the common and useful cases and one can always just fall back to using e_get_global_address() for more complex data-flow topologies.

Unfortunately it requires more processing because each programme must be (re)linked for each target core rather than being able to broadcast the code to all common cores and these extra overheads might make it less attractive. OTOH it allows for load-time initialisation of data structures and less on-core code.

Hmm, how did it get to midnight. Blah.

A bit of S-FX fun

Last couple of days I've been poking around a bit with audio, trying to learn a bit more about how to process it digitally.

To start with I just wanted to get some visualisation up to see what sort of stuff you get out of a fourier transform.

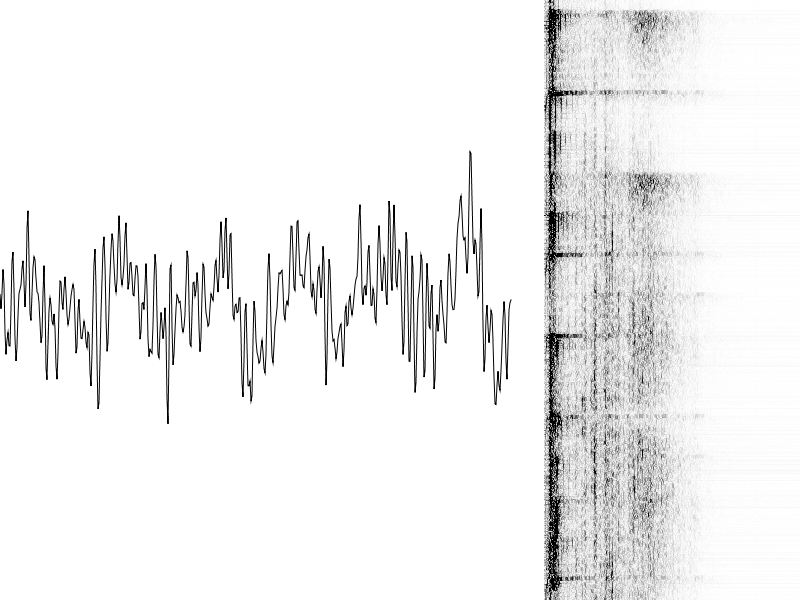

I started in Swing but then re-started in JavaFX and came up with this little tool. A signal plot on the left and a scrolling spectrogram on the right.

I use an old trick from Amiga days to simplify the spectrogram display updates. I create a WritableImage which is twice the visible size. When a new row of spectrogram data arrives I write it twice, at the current output row and at the current output row + visible size. This just keeps repeating forever, rolling back to the start when it overflows. Then I adjust the viewport on the ImageView to show the correct section, which starts at the total output count modulo the visible row count. This creates a smooth scroll without having to write the whole display every time (let the GPU do that, since it will anyway) or needing an extra block of processing once every n frames.

So e.g. for a 256-wide spectrogram on a 600-high display:

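```java
import java.nio.IntBuffer;
import javafx.geometry.Rectangle2D;
import javafx.scene.image.ImageView;
import javafx.scene.image.PixelFormat;
import javafx.scene.image.PixelWriter;
import javafx.scene.image.WritableImage;
import javafx.scene.image.WritablePixelFormat;

// 256 columns, 600 visible rows, stored twice over
WritableImage spectrogram = new WritableImage(256, 1200);
ImageView spec = new ImageView(spectrogram);
int spectrogramRow = 0;

void addRows(int[] srow, int rows) {
    WritablePixelFormat<IntBuffer> fmt = PixelFormat.getIntArgbPreInstance();
    PixelWriter pw = spectrogram.getPixelWriter();

    // Write one row at a time so a batch that straddles the wrap point stays in bounds.
    for (int i = 0; i < rows; i++) {
        pw.setPixels(0, spectrogramRow, 256, 1, fmt, srow, i * 256, 256);
        pw.setPixels(0, spectrogramRow + 600, 256, 1, fmt, srow, i * 256, 256);
        spectrogramRow = (spectrogramRow + 1) % 600;
    }
    // Show the 600 rows starting at the oldest one; the newest is at the bottom.
    spec.setViewport(new Rectangle2D(0, spectrogramRow, 256, 600));
}
```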
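The same double-write trick, reduced to plain C arrays so the invariant is easy to check: every row is stored twice, `VISIBLE` rows apart, so the last `VISIBLE` rows are always contiguous starting at `next`, which plays the role of the viewport offset. The sizes and names here are hypothetical stand-ins for the 256x600 JavaFX version.

```c
#include <assert.h>
#include <string.h>

#define VISIBLE 4   /* visible rows (600 in the JavaFX version) */
#define WIDTH   2   /* pixels per row (256 in the JavaFX version) */

typedef struct {
    int pixels[2 * VISIBLE][WIDTH];   /* twice the visible height */
    int next;                         /* next row to write, also the top of the view */
} scroll_buffer;

/* Write the row twice, VISIBLE rows apart, then advance with wrap-around. */
static void scroll_add_row(scroll_buffer *b, const int row[WIDTH])
{
    memcpy(b->pixels[b->next], row, sizeof b->pixels[0]);
    memcpy(b->pixels[b->next + VISIBLE], row, sizeof b->pixels[0]);
    b->next = (b->next + 1) % VISIBLE;
}

/* Rows top .. top + VISIBLE - 1 hold the last VISIBLE rows, oldest first. */
static int scroll_top(const scroll_buffer *b)
{
    assert(b->next >= 0 && b->next < VISIBLE);
    return b->next;
}
```

Until `VISIBLE` rows have arrived, the top of the view simply shows the initial (empty) rows.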

Unfortunately it's just running on the CPU (some ancient piece of shit Intel graphics on this laptop), so the output isn't really very smooth at all, nor can I push the spectrogram rate very high. Not that it's fast enough anyway, but I don't think it helps that my laptop outputs 50Hz to its screen and the external second screen is 60Hz; I think JavaFX uses 60Hz NTSC timing too, bletch.

Actually I dunno, it's only using 30% cpu. Must try it on my workstation.

Anyway, nothing special but it's still hypnotic enough I forgot why I started writing it.

The joy of segfaults

I had another look at some parallella code today but I didn't get as far as I'd hoped. Being a bit tired and not really into it didn't really help, I guess.

The first major problem I hit was that the linker doesn't allocate bss blocks for relocatable files by default, which I only discovered after a lot of faffing about. This and a few other issues made me decide to create a simpler linker script, which I was trying to avoid. Since I have it now, I'm using it to merge some of the C runtime support sections and epiphany sections with the base sections, and to rename some of the epiphany sections to something I can use more readily in the loader (e.g. IVT_RESET to .ivt0).

It still didn't work, which took a lot of tracking down ... and turned out to be an annoying bug in the way I was resolving the address of a remote-core array. I had defined the weak external reference as a pointer type and was just passing it (i.e. its value) to e_get_global_address(), when I should have passed the address of the variable instead. Live and learn I suppose, or maybe not. This is the second time I've wasted a good chunk of time on something like this, so it's probably something I need to macro/functionise if I can.

But once I worked that out it suddenly started working.

Single-pass resampler

I'm working on a single-pass image resampler. It's something I need for the FD code, and a nice parallel problem which should fit a grid of EPUs nicely to boot. It's also a good test case for the relocating elf loader code I have.

```
                      input rows
                           |
      +-------------+------+------+-------------+
      |             |             |             |
+-----------+ +-----------+ +-----------+ +-----------+
| scale x 0 | | scale x 1 | | scale x 2 | | scale x 3 |
+-----------+ +-----------+ +-----------+ +-----------+
      |             |             |             |
+-----------+ +-----------+ +-----------+ +-----------+
| scale y 0 | | scale y 1 | | scale y 2 | | scale y 3 |
+-----------+ +-----------+ +-----------+ +-----------+
      |             |             |             |
      +-------------+------+------+-------------+
                           |
                      output rows
```

Workgroup topology (transposed)

The input stage comprises 4 cores in a column, which load 1/4 of a row of the input stream at a time and scale it in X; the results are written directly to the next stage in the pipeline.

The y scalers then perform y scaling on the input rows, and output directly to the target.

Because there are a lot of fiddly edge cases, I just started with the data-flow code and an X-only, nearest-neighbour scaling case (which simplifies the Y-scaling logic), but the intention is to end up with (at least) bi-cubic resampling. For this reason the Y scalers hold 'some' number of rows (more than one) organised in a cyclic buffer, so they can double-buffer with the X scaler and support higher-order resampling. I'm only using 4+4 cores, mostly for simplicity, but I may also have a use for the other 8. I don't know yet if the workload will balance well with a 1:1 mapping like this; in any event it will vary with the problem (e.g. the X scaler always runs on each input row, but the Y scaler only needs to run on each output row), and even if it isn't 100% efficient it should be goodly-efficient[sic].
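A minimal sketch of such a cyclic row buffer, with hypothetical names and sizes: the X scaler fills rows in order, and row y lives in slot `y % RING_ROWS` until it is overwritten. It leaves out the throttling that stops the producer overwriting a row the consumer still needs. Note that a bi-cubic consumer needs rows y-1 to y+2, so with only 4 slots there would be no room left for double-buffering.

```c
#include <assert.h>
#include <stddef.h>

#define RING_ROWS 4   /* rows held by one Y scaler (memory permitting) */
#define ROW_WIDTH 8   /* floats per row; illustrative size */

typedef struct {
    float rows[RING_ROWS][ROW_WIDTH];
    int count;   /* number of rows committed so far */
} row_ring;

/* Slot the producer (X scaler) should fill next; call ring_commit() once written. */
static float *ring_next_slot(row_ring *r)
{
    return r->rows[r->count % RING_ROWS];
}

static void ring_commit(row_ring *r)
{
    r->count++;
}

/* Row y, or NULL if it has not arrived yet or has already been overwritten. */
static const float *ring_row(const row_ring *r, int y)
{
    if (y < 0 || y >= r->count || y < r->count - RING_ROWS)
        return NULL;
    return r->rows[y % RING_ROWS];
}
```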

So as of now I have the basic data-flow working. I'm using an 'eport' for the throttling/arbitration of the Y buffers and by organising the input stage in a column the DMA reads are fair without further work. This also gives me a simple platform to determine how important write DMA arbitration is, although I haven't included it yet.

As the Y stage can have multiple rows of storage (memory permitting), the same structure can be used for separable convolution, wavelets, etc. It can also be extended to high-quality rotation and even to general-purpose affine resampling, which I may look at eventually.

PicFX out

I did a bit of a clean-up, wrote a README, made some (not very good) tweaks to the layout files for a high-dpi phone vs a 7" tablet, and just checked the code into:

I haven't cross-checked by building from a clean checkout from scratch so there could be some missing bits: i'll check when I can. I've also only built it on Fedora with some slightly older-than-current version(s) of the android ndk/sdk.

My previous post has some screenshots which are representative of the state of the code.

I did have grander plans, but between my main work and it being a bit of a weird-arsed year, they didn't make it. However, apart from some minor layout issues on small screens and the lack of capabilities, I'm fairly pleased with the basic operation and backend design for a couple of weeks' work spread over 5 months.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!