About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

on JNI callback reference handling

After the previous post detailing some issues with callback reference handling I had another look at it this evening.

First for the clBuildProgram() function I just deleted the global reference in the callback. I tried to identify reference leaks using the netbeans memory profiler but it was a little difficult to interpret the results. For starters running the demo routine in a loop didn't result in loop-number reference leaks as one would expect (or even loop-number of reference creations oddly enough; may be related to hotspot and/or it being a static method) ... anyway I think it should work regardless except in the specific case where OpenCL doesn't actually call the notify callback for whatever reason: it is unclear from the specification if it MUST always call it for example. I'm just going to have to assume that if that ever happens the system is in such a state that adding a leak is of no practical importance.

Then I took a look at the clCreateContext() issue which seemed a bit trickier. On a hunch I looked up how weak references work from JNI and at first I didn't see anything useful but whilst poking around a tidy solution became apparent.

All I have to do is save the original reference to any notify function in the CLContext on the Java side. This lets Java handle the reference as it normally would and any notify object should automatically have the same lifetime as the reference to CLContext.

From the (rather badly formatted) JNI document:

Weak Global References

Weak global references are a special kind of global reference. Unlike normal global references, a weak global reference allows the underlying Java object to be garbage collected. Weak global references may be used in any situation where global or local references are used. When the garbage collector runs, it frees the underlying object if the object is only referred to by weak references. A weak global reference pointing to a freed object is functionally equivalent to NULL. Programmers can detect whether a weak global reference points to a freed object by using IsSameObject to compare the weak reference against NULL. Weak global references in JNI are a simplified version of the Java Weak References, available as part of the Java 2 Platform API ( java.lang.ref package and its classes).

Clarification (added June 2001)

Since garbage collection may occur while native methods are running, objects referred to by weak global references can be freed at any time. While weak global references can be used where global references are used, it is generally inappropriate to do so, as they may become functionally equivalent to NULL without notice.

While IsSameObject can be used to determine whether a weak global reference refers to a freed object, it does not prevent the object from being freed immediately thereafter. Consequently, programmers may not rely on this check to determine whether a weak global reference may used (as a non-NULL reference) in any future JNI function call.

To overcome this inherent limitation, it is recommended that a standard (strong) local or global reference to the same object be acquired using the JNI functions NewLocalRef or NewGlobalRef, and that this strong reference be used to access the intended object. These functions will return NULL if the object has been freed, and otherwise will return a strong reference (which will prevent the object from being freed). The new reference should be explicitly deleted when immediate access to the object is no longer required, allowing the object to be freed.

So all the native callback function has to do is call NewLocalRef() on the passed in handle, and if that is non-null it is still live and can be called; otherwise it can print some warning and continue on its merry way. The reference can either be saved by creating a different constructor or by adding a wrapper to the native method which does the saving.

If I don't find some short-coming in this implementation then this is a nice clean solution without having to try to create my own mirror of either the opencl or java reference trees - which would be very undesirable.
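The lifetime rule can be illustrated in plain Java, with java.lang.ref.WeakReference standing in for the JNI weak global reference. This is only a sketch: the names WeakNotifyDemo, Notify, Context and NativeSide are made up for the illustration, and the real hook would of course be C code calling NewLocalRef().

```java
import java.lang.ref.WeakReference;

class WeakNotifyDemo {
    /** Hypothetical stand-in for CLContextNotify. */
    interface Notify {
        void notify(String what);
    }

    /** Plays the role of CLContext: the only strong reference lives here. */
    static final class Context {
        final Notify notify;

        Context(Notify notify) {
            this.notify = notify;
        }
    }

    /** Plays the role of the native hook, which only holds a weak reference. */
    static final class NativeSide {
        private final WeakReference<Notify> weak;

        NativeSide(Notify notify) {
            this.weak = new WeakReference<>(notify);
        }

        /** Returns true if the callback was still alive and was invoked. */
        boolean fire(String what) {
            // Take a strong reference first, like NewLocalRef() on the weak handle.
            Notify strong = weak.get();
            if (strong == null) {
                System.err.println("notify called after object death");
                return false;
            }
            strong.notify(what);
            return true;
        }
    }

    public static void main(String[] args) {
        StringBuilder log = new StringBuilder();
        Context ctx = new Context(msg -> log.append(msg));
        NativeSide hook = new NativeSide(ctx.notify);

        System.gc(); // only a hint, but ctx still holds the callback strongly
        System.out.println("delivered: " + hook.fire("device error"));
        System.out.println("log: " + log);
        System.out.println("context still holds notify: " + (ctx.notify != null));
    }
}
```

As long as the Context object is reachable the callback is too, so the weak side can always obtain a strong reference; once the Context is dropped the callback becomes collectable with no explicit cleanup call.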

For the buildProgram notify I decided to pass a reference to the actual CLProgram rather than create a new instance, not particularly important but a bit tidier. Other than that it just deletes the references and frees the callback block after invoking the notify interface.

For createContext I went with a new constructor mechanism and it only needed some minor changes in the JNI code.

public class CLContext extends CLObject {

final CLContextNotify notify;

CLContext(long p, CLContextNotify notify) {

super(p);

this.notify = notify;

}

}

And some changes to the JNI init code:

- data = (*env)->NewGlobalRef(env, jnotify);

+ data = (*env)->NewWeakGlobalRef(env, jnotify);

And JNI callback code:

+ jnotify = (*env)->NewLocalRef(env, jnotify);

+ if (!jnotify) {

+ fprintf(stderr, "cl_context notify called after object death\n");

+ return;

+ }

I'm still not sure how i'm going to manage native kernels yet, hopefully it is like CLBuild and just runs once per invocation.

I guess over the next few hacking sessions i'll fill it out a bit and look at dumping the source somewhere. I'm not sure if i'm even going to use it for anything or just use it as a learning exercise.

OpenCL binding - a bit more tweaking

Had a bit of another look at the OpenCL binding I was working on. I wasn't happy that some of the public interfaces still use long[] arrays to represent intptr_t arrays - especially for property lists. So I made it a bit more java-ish. It's still a bit clumsy but it's about as good as it's going to get.

public static native CLContext createContext(long[] properties,

CLDevice[] devices,

CLContextNotify notify) throws CLRuntimeException;

Becomes:

public static native CLContext createContext(CLContextProperty[] properties,

CLDevice[] devices,

CLContextNotify notify) throws CLRuntimeException;

Properties all inherit from a base class:

public class CLProperty {

protected final long tag;

protected final long value;

protected CLProperty(long tag, long value) {

this.tag = tag;

this.value = value;

}

}

This is so the JNI code only needs to deal with one type of object. Then I have factory methods for the various property types.

public class CLContextProperty extends CLProperty {

...

public static CLContextProperty CL_CONTEXT_PLATFORM(CLPlatform platform) {

return new CLContextProperty(CL.CL_CONTEXT_PLATFORM, platform.p);

}

...

}

Although I might make the names more java-friendly.

So based on the createContext interfaces above, one changes:

cl = createContext(new long[] { CL.CL_CONTEXT_PLATFORM, platform.p, 0 },

new CLDevice[] { dev },

null);

to:

cl = createContext(new CLContextProperty[] { CLContextProperty.CL_CONTEXT_PLATFORM(platform) },

new CLDevice[] { dev },

null);

It's not like it saves typing but it is type-safe, and you don't have to remember to put the closing 0 tag on the end of the list. Perhaps the factory methods should sit on CLContext for that matter.
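To make the "closing 0 tag" point concrete, here is a rough sketch of the flattening a binding has to do somewhere to turn the typed property objects back into the zero-terminated list OpenCL expects. In the binding described here that work belongs to the JNI code; the Java version below, with its made-up names PropertyListDemo, Property and flatten, is only an illustration of the idea.

```java
import java.util.Arrays;

class PropertyListDemo {
    /** Same shape as the CLProperty base class: a (tag, value) pair. */
    static class Property {
        final long tag;
        final long value;

        Property(long tag, long value) {
            this.tag = tag;
            this.value = value;
        }
    }

    /** Flattens to { tag0, value0, ..., 0 }; null or empty input gives { 0 }. */
    static long[] flatten(Property[] properties) {
        int n = properties == null ? 0 : properties.length;
        long[] out = new long[n * 2 + 1]; // last slot stays 0: the terminator
        for (int i = 0; i < n; i++) {
            out[i * 2] = properties[i].tag;
            out[i * 2 + 1] = properties[i].value;
        }
        return out;
    }

    public static void main(String[] args) {
        final long CL_CONTEXT_PLATFORM = 0x1084; // tag value as defined in cl.h
        final long fakePlatformPointer = 0x1234; // placeholder, not a real handle
        Property[] props = { new Property(CL_CONTEXT_PLATFORM, fakePlatformPointer) };
        System.out.println(Arrays.toString(flatten(props)));
    }
}
```

The caller never sees the terminator, which is the whole point of the typed interface.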

Callbacks, Leaks, Lambdas

Another part I looked into implementing was the callback methods from C to Java, such as the one passed to createContext or buildProgram.

This is mostly straightforward - just pass a hook function to the OpenCL call which locates an environment and invokes the callback function on an interface. There is no need to support a 'user data' field for the java side, so that is just used to pass a global reference to the interface itself.

If one considers the generic interface used for build callbacks:

public interface CLNotify<T> {

public void notify(T source);

}

The C hook is relatively straightforward ...

static void build_notify_hook(cl_program prog, void *data) {

jobject jnotify = data;

jobject source;

JNIEnv *env;

jlong lprog = (jlong)prog;

if ((*vm)->GetEnv(vm, (void *)&env, JNI_VERSION_1_4) != 0

&& (*vm)->AttachCurrentThread(vm, (void *)&env, NULL) != 0) {

fprintf(stderr, "Unable to attach java environment\n");

return;

}

source = (*env)->NewObjectA(env, classid[PROGRAM], new_p[PROGRAM], (void *)&lprog);

if (!source)

return;

(*env)->CallVoidMethodA(env, jnotify, CLNotify_notify, (void *)&source);

}

(FIXME: this may need to detach the thread also). (FIXME: this may need to de-ref jnotify)

One notices that the callback simply creates a new CLProgram object instance to pass the pointer to Java. This means that OpenCL handles may map to more than one Java object: this goes some way to validating my decision to stick with simple holder objects rather than trying to keep some data copied to the Java side. Although it wouldn't be that difficult to track object instances if necessary: instead of calling NewObject() invoke a factory method which handles the object instances. Albeit at the cost of duplicating the reference tree in Java.

Another bonus i didn't realise is that the way lambdas are implemented allows these to be used from the Java side without the JNI needing to know anything about it. I think I did read about this at some point but it's been a while and I forgot about it. I had a look at a disassembly of the class file and it's just using invokedynamic to create an interface object which is just a function pointer rather than having to create an instance of an abstract class.

So e.g. this works:

prog.buildProgram(new CLDevice[]{dev}, null,

(CLProgram source) -> {

System.out.printf("Build notify, status = %d\nlog:\n",

source.getBuildInfoInt(dev, CL_PROGRAM_BUILD_STATUS));

System.out.println(source.getBuildInfoString(dev, CL_PROGRAM_BUILD_LOG));

});
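The reason the JNI side needs no changes can be shown without any native code: whatever invokes the callback only ever sees the interface, so an anonymous class and a lambda are interchangeable. A small sketch, where LambdaNotifyDemo and fire are hypothetical stand-ins for the binding and its C hook:

```java
import java.util.ArrayList;
import java.util.List;

class LambdaNotifyDemo {
    /** Same shape as the CLNotify interface. */
    interface CLNotify<T> {
        void notify(T source);
    }

    /** Stand-in for the native hook: it just calls the interface method. */
    static <T> void fire(CLNotify<T> callback, T source) {
        callback.notify(source);
    }

    public static void main(String[] args) {
        List<String> seen = new ArrayList<>();

        // Pre-lambda style: an anonymous inner class.
        fire(new CLNotify<String>() {
            @Override
            public void notify(String source) {
                seen.add("anonymous:" + source);
            }
        }, "prog");

        // Lambda style: same interface, same call path on the invoking side.
        fire((String source) -> seen.add("lambda:" + source), "prog");

        System.out.println(seen);
    }
}
```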

The one very big caveat for all of the above ... is that I haven't worked out a clean way to avoid leaking the notify instance object. This is because the OpenCL api specifies that these callback functions may be invoked asynchronously and/or from other threads.

Thinking aloud:

For the specific case of clBuildProgram and friends it looks like the notify function is only ever (and always) called once and I can thus deref the interface in the hook routine. If I pass both the CLProgram object and CLNotify interface to the hook routine I can keep the CLProgram instance unique anyway ... (And to be honest i'm not sure how useful this mechanism is to start with since it's easier just to compile synchronously and check the return code / exception). But CLContext has its own notify function too which needs to live as long as the CLContext so I can't use the same trick there. At first I thought of creating a set/remove listener interface that just keyed everything off the pointer value and tracking the listeners in Java. But that doesn't work because presumably it's possible to get a callback call without ever getting a context. I guess I could use the listener itself as a key and provide a static native clearNotify() method which must be called explicitly but it gets a bit messy for a few reasons.

struct notify_info {

int id;

jobject jnotify;

};

clCreateContext(..., jobject jnotify) {

...

lock {

info = malloc();

info.id = getsequence();

info.jnotify = NewGlobalRef(jnotify);

listeners.add(info);

}

...

clCreateContext(..., create_context_hook, (void *)id);

...

}

create_context_hook(..., void *data) {

int id = (int)data;

lock {

info = listeners.find(id);

if (info) {

... invoke info.jnotify;

}

}

}

clear_context_notify(..., jobject jnotify) {

lock {

info = listeners.find(jnotify);

if (info) {

deleteGlobalRef(info.jnotify);

listeners.remove(info);

}

}

}

Yeah, messy. A bunch of it could be (synchronous) static Java methods, but it just isn't particularly elegant either way. Again i'm not sure how useful implementing this precise interface is anyway: it may just as well do to implement a completely separate system which funnels all events through a global event handler mechanism.
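For comparison, this is roughly what the bookkeeping might look like if it were moved into static Java methods as suggested. It is a sketch only: NotifyRegistry, ContextNotify, add, dispatch and remove are all invented names, and unlike the pseudocode above it invokes the listener outside the lock so a slow callback cannot block registration.

```java
import java.util.HashMap;
import java.util.Map;

class NotifyRegistry {
    /** Hypothetical callback type for context errors. */
    interface ContextNotify {
        void notify(String errinfo);
    }

    private static final Map<Integer, ContextNotify> listeners = new HashMap<>();
    private static int sequence = 0;

    /** Registers a listener; the returned id is what would travel as user data. */
    static synchronized int add(ContextNotify notify) {
        int id = ++sequence;
        listeners.put(id, notify);
        return id;
    }

    /** Conceptually called from the native hook. Returns false for a stale id. */
    static boolean dispatch(int id, String errinfo) {
        ContextNotify notify;
        synchronized (NotifyRegistry.class) {
            notify = listeners.get(id);
        }
        if (notify == null) {
            return false;
        }
        notify.notify(errinfo); // invoked outside the lock
        return true;
    }

    /** Explicit removal keyed off the listener itself, as in clear_context_notify. */
    static synchronized boolean remove(ContextNotify notify) {
        return listeners.values().removeIf(l -> l == notify);
    }

    public static void main(String[] args) {
        ContextNotify listener = msg -> System.out.println("context error: " + msg);
        int id = add(listener);
        System.out.println("delivered: " + dispatch(id, "something broke"));
        remove(listener);
        System.out.println("delivered after remove: " + dispatch(id, "ignored"));
    }
}
```

It still needs the explicit remove call, which is exactly the inelegant part.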

javafx + internet radio = partial success?

After a long and pointless goose-chase with different versions of ffmpeg and libav ... I got my internet radio thing to work from JavaFX.

It turned out to be at least partly a problem with my proxy code. The ice-cast stream doesn't include a Content-Length header (because you know, it's a stream), so this was causing libfxplugins to crash as in my previous post on the subject. Shouldn't really cause a crash - at most it should just throw a protocol error exception? Not sure if it has javafx security implications (e.g. if media on a web page through the web component goes through the same mechanism it certainly does - Update: I filed a bug in jira, and it seems to have been escalated - even at this late stage of java 8).

If I add a made-up content-length value at least I get the music playing, .. but the 'user experience' isn't very good because it seems to change how much it pre-buffers depending on the reported length (rather strange i think). So if i report 1-10M it starts after a few seconds but if i report some gigantic number it buffers for minutes (or maybe it never starts, i lost patience). The problem is that the data length is honoured (as it should be) which means that too small a number causes the stream to finish quickly. And it seems to read the same stream twice at the same time which is also odd.

To be blunt I find it pretty perplexing 'in this day and age' that javafx doesn't support streaming media to start with. Or that g-"so-called-streamer" seems to be the reason for this. If I could work out how to compile libfxplugins.so from the openjfx dist I would have a poke, but alas ...

I was going to go ahead with the application anyway but I think because of this streaming issue I won't - or if I do I won't be using javafx to do the decoding which is a bit of a pain.

Update 2: The bug has just been patched. 4 working days from reporting and if the openjdk schedule is still correct, just 2 weeks from release. So yeah I guess it was reasonably important and even just mucking about has value.

Simple Java binding for OpenCL 1.2

Well I have it to the point of working - it still needs some functions filled out plus helper functions and a bit of tweaking but it's mostly there. So far under 2KLOC of C and less of Java. I went with the 'every pointer is 64-bits' implementation, using non-static methods, and passing objects around to the JNI rather than the pointers (except for a couple of apis). This allows me to implement the raw interface fully in C with just an 'interface' in Java - and thus write a lot less code.

Currently i'm mapping a bit closer to the C api than JOCL does. I'm only using ByteBuffers to transfer memory asynchronously, for any other array arguments i'm just using arrays.

This example is with the raw api with no helpers coming in to play - there are some obvious simple ones to add which will make it a bit more comfortable to use.

// For all the CL_* constants

import static au.notzed.zcl.CL.*;

...

CLPlatform[] platforms = CLPlatform.getPlatforms();

CLPlatform plat = platforms[0];

CLDevice dev = plat.getDevices(CL_DEVICE_TYPE_CPU)[0];

CLContext cl = plat.createContext(dev);

CLCommandQueue q = cl.createCommandQueue(dev, 0);

CLBuffer mem = cl.createBuffer(0, 1024 * 4, null);

CLProgram prog = cl.createProgramWithSource(

new String[] {

"kernel void testa(global int *buffer, int4 n, float f) {" +

" buffer[get_global_id(0)] = n.s1 + get_global_id(0);" +

"}"

});

prog.buildProgram(new CLDevice[]{dev}, null, null);

CLKernel k = prog.createKernel("testa");

ByteBuffer buffer = ByteBuffer.allocateDirect(1024 * 4).order(ByteOrder.nativeOrder());

k.set(0, mem);

k.set(1, 12, 13, 14, 15);

k.set(2, 1.3f);

q.enqueueWriteBuffer(mem, CL_FALSE, 0, 1024 * 4, buffer, 0, null, null);

q.enqueueNDRangeKernel(k, 1, new long[] { 0 }, new long[] { 16 }, new long[] { 1 }, null, null);

q.enqueueReadBuffer(mem, CL_TRUE, 0, 1024 * 4, buffer, 0, null, null);

q.finish();

IntBuffer ib = buffer.asIntBuffer();

for (int i=0;i<32;i++) {

System.out.printf(" %3d = %3d\n", i, ib.get());

}

Currently CLBuffer (and CLImage) is just a handle to the cl_mem - it has no local meta-data or a fixed Buffer backing. The way JOCL handles this is reasonably convenient but i'm still yet to decide whether I will do something similar. Whilst it may be handy to have local copies of data like 'width' and 'format', I'm inclined to just have accessors which invoke the GetImageInfo call instead - it might be a bit more expensive but redundant copies of data aren't free either.

I'm not really all that fond of the way JOCL honours the position() of Buffers - it kind of seems useful but usually it's just a pita. And manipulating that from C is also a pain. So at the moment I treat them as one would treat malloc() although I allow an offset to be used where appropriate.

Such as ...

public class CLCommandQueue {

...

native public void enqueueWriteBuffer(CLBuffer mem, boolean blocking,

long mem_offset, long size,

Buffer buffer, long buf_offset,

CLEventList wait,

CLEventList event) throws CLException;

...

}

Compare to the C api:

extern CL_API_ENTRY cl_int CL_API_CALL

clEnqueueWriteBuffer(cl_command_queue /* command_queue */,

cl_mem /* buffer */,

cl_bool /* blocking_write */,

size_t /* offset */,

size_t /* size */,

const void * /* ptr */,

cl_uint /* num_events_in_wait_list */,

const cl_event * /* event_wait_list */,

cl_event * /* event */) CL_API_SUFFIX__VERSION_1_0;

In C "ptr" can just be adjusted before you use it but in Java I need to pass buf_offset to allow the same flexibility. It would have been nice to be able to pass array types here too ... but then I realised that these can run asynchronously which doesn't work from jni (or doesn't work well).
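The malloc()-style treatment of buffers can be mimicked in pure Java to show the difference from honouring position(). This is a sketch under my own assumptions: BufferOffsetDemo and readAt are invented for the illustration, and the real binding would do the pointer arithmetic in C on the direct buffer's address.

```java
import java.nio.ByteBuffer;

class BufferOffsetDemo {
    /**
     * Copies size bytes starting at absolute byte offset bufOffset, ignoring
     * (and not disturbing) the buffer's own position and limit.
     */
    static byte[] readAt(ByteBuffer buffer, int bufOffset, int size) {
        if (bufOffset < 0 || size < 0 || bufOffset + (long) size > buffer.capacity()) {
            throw new IndexOutOfBoundsException("range outside buffer");
        }
        ByteBuffer view = buffer.duplicate(); // shares content, own position/limit
        view.clear();                         // position = 0, limit = capacity
        view.position(bufOffset);
        byte[] out = new byte[size];
        view.get(out);
        return out;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.allocateDirect(16);
        for (int i = 0; i < 16; i++) {
            buffer.put(i, (byte) i); // absolute put, position untouched
        }
        buffer.position(10); // whatever the caller left it at

        byte[] slice = readAt(buffer, 4, 4);
        System.out.println(java.util.Arrays.toString(slice));
        System.out.println("position still " + buffer.position());
    }
}
```

The caller's position is neither consulted nor changed, which is the behaviour one gets from a raw pointer plus offset in C.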

I'm still not sure if the query interface is based only on the type-specific queries implemented in C or whether I have helpers for every value on the objects themselves. The latter makes the code size and maintenance burden a lot bigger for questionable benefit. Maybe just do it for the more useful types.

Haven't yet done the callback stuff or native kernels (i don't quite understand those yet) but most of that is fairly easy apart from some resource tracking issues that come in to play.

Of course now i've done 90% of the work i'm not sure i can be fagged to do the last 10% ...

more on JNI overheads

I wrote most of the OpenCL binding yesterday but now i'm mucking about with simplifying it.

I've experimented with a couple of binding mechanisms but they have various drawbacks. They all work in basically the same way in that there is an abstract base class of each type then a concrete platform-specific implementation that defines the pointer holder.

The difference is how the jni C code gets hold of that pointer:

- Passed directly

- The abstract base class defines all the methods, which are implemented in the concrete class, which just invokes the native methods. The native methods may be static or non-static.

This requires a lot of boilerplate in the java code, but the C code can just use a simple cast to access the CL resources.

- C code performs a field lookup

- The base class can define the methods directly as native. The concrete class primarily is just a holder for the pointer value.

This requires only minimal boiler-plate but the resources must be looked up via a field reference. The field reference is dependent on the type though.

- C code performs a virtual method invocation.

- The base class can define the methods directly as native. The concrete class primarily is just a holder for the pointer value.

This requires only minimal boiler-plate but the resources must be looked up via a method invocation. But here the lookup is independent of the type.

The last is kind of the nicest - in the C code it's the same amount of effort (coding wise) as the second but allows for some polymorphism. The first is the least attractive as it requires a lot of boilerplate - 3 simple functions rather than just one empty one.

But, a big chunk of the OpenCL API is dealing with mundane things like 'get*Info()' lookups and to simplify its use I came up with a number of type-specific calls. However rather than write these for every possible type I pass a type-id to the JNI code so a single function works. This works fine except that I would like to have a separate CLBuffer and CLImage object - and in this case the second implementation falls down.

To gain more information on the trade-off involved I did some timing on a basic function:

public CLDevice[] getDevices(long type) throws CLException;

This invokes clGetDeviceIDs twice (first to get the list size) and then returns an array of instantiated wrappers for the pointers. I invoked this 10M times for various binding mechanisms.

| Method | Time |
|---|---|
| pass long | 13.777s |
| pass long static | 14.212s |
| field lookup | 14.060s |
| method lookup | 16.252s |

So interesting points here. First is that static method invocations appear to be slower than non-static even when the pointer isn't being used. This is somewhat surprising as 'static' methods seem to be quite popular as a mechanism for JNI binding.

Second is that a field lookup from C doesn't cost much more than a field lookup in Java.

Lastly, as expected the method lookup is more expensive and if one considers that the task does somewhat more than the pointer resolution then it is quite significantly more expensive. So much so that it probably isn't the ideal solution.

So ... it looks like I may end up going with the same solution I've used before. That is, just use the simple field lookup from C. Although it's slightly slower than the first mechanism it is just a lot less work for me without a code generator and produces much smaller classes either way. I'll just have to work out a way to implement the polymorphic getInfo methods some other way: using IsInstanceOf() or just using CLMemory for all memory types. In general performance is not an issue here anyway.

I suppose to do it properly I would need to profile the same stuff on 32-bit platforms and/or android as well. But right now i don't particularly care and don't have any capable hardware anyway (apart from the parallella). I wasn't even bothering to implement the 32-bit backend so far anyway.

Examples

This is just more detail on how the bindings work. In each case objects are instantiated from the C code - so the java doesn't need to know anything about the platform (and is thus, automatically platform agnostic).

First is passing the pointer directly. Drawback is all the bulky boilerplate - it looks less severe here as there is only a single method.

public abstract class CLPlatform extends CLObject {

abstract public CLDevice[] getDevices(long type) throws CLException;

class CLPlatform64 extends CLPlatform {

final long p;

CLPlatform64(long p) {

this.p = p;

}

public CLDevice[] getDevices(long type) throws CLException {

return getDevices(p, type);

}

native CLDevice[] getDevices(long p, long type) throws CLException;

}

class CLPlatform32 extends CLPlatform {

final int p;

CLPlatform32(int p) {

this.p = p;

}

public CLDevice[] getDevices(long type) throws CLException {

return getDevices(p, type);

}

native CLDevice[] getDevices(int p, long type) throws CLException;

}

}

Then having the C lookup the field. Drawback is each concrete class must be handled separately.

public abstract class CLPlatform extends CLObject {

native public CLDevice[] getDevices(long type) throws CLException;

class CLPlatform64 extends CLPlatform {

final long p;

CLPlatform64(long p) {

this.p = p;

}

}

class CLPlatform32 extends CLPlatform {

final int p;

CLPlatform32(int p) {

this.p = p;

}

}

}

And lastly having a pointer retrieval method. This has lots of nice coding benefits ... but too much in the way of overheads.

public abstract class CLPlatform extends CLObject {

native public CLDevice[] getDevices(long type) throws CLException;

class CLPlatform64 extends CLPlatform implements CLNative64 {

final long p;

CLPlatform64(long p) {

this.p = p;

}

long getPointer() {

return p;

}

}

class CLPlatform32 extends CLPlatform implements CLNative32 {

final int p;

CLPlatform32(int p) {

this.p = p;

}

int getPointer() {

return p;

}

}

}

Or ... I could of course just use a long for storage on 32-bit platforms and be done with it - the extra memory overhead is pretty much insignificant in the grand scheme of things. It might require some extra work on the C side when dealing with a couple of the interfaces but it is pretty minor.

With that mechanism the worst-case becomes:

public abstract class CLPlatform extends CLObject {

final long p;

CLPlatform(long p) {

this.p = p;

}

public CLDevice[] getDevices(long type) throws CLException {

return getDevices(p, type);

}

native CLDevice[] getDevices(long p, long type) throws CLException;

}

Actually I can move 'p' to the base class then which simplifies any polymorphism too.
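A rough sketch of what the single-long layout buys: with 'p' in the base class, one routine can accept any handle type without caring which subclass it is. The class names below reuse the ones mentioned in these posts, but PointerHolderDemo, widen and describe are invented, and zero-extending a 32-bit handle is my assumption about how the C side would widen it.

```java
class PointerHolderDemo {
    /** Base holder: every handle is stored as a 64-bit value. */
    static abstract class CLObject {
        final long p;

        CLObject(long p) {
            this.p = p;
        }
    }

    static class CLMemory extends CLObject {
        CLMemory(long p) {
            super(p);
        }
    }

    static class CLBuffer extends CLMemory {
        CLBuffer(long p) {
            super(p);
        }
    }

    static class CLImage extends CLMemory {
        CLImage(long p) {
            super(p);
        }
    }

    /** Widens a 32-bit handle without sign extension (assumed convention). */
    static long widen(int p32) {
        return p32 & 0xffffffffL;
    }

    /** Stand-in for a getInfo-style call that works on any memory object. */
    static String describe(CLMemory mem) {
        return mem.getClass().getSimpleName() + "@" + Long.toHexString(mem.p);
    }

    public static void main(String[] args) {
        System.out.println(describe(new CLBuffer(widen(0x1000))));
        System.out.println(describe(new CLImage(widen(0xfffff000))));
    }
}
```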

I still like the second approach somewhat for a hand-coded binding since it keeps the type information and allows all the details to be hidden in the C code where it is easier to hide using macros and so on. And the java becomes very simple:

public abstract class CLPlatform extends CLObject {

CLPlatform(long p) {

super(p);

}

public native CLDevice[] getDevices(long type) throws CLException;

}

CLEventList

Another problematic part of the OpenCL api is cl_event. It's actually a bit of a pain to work with even in C but the idea doesn't really map well to java at all.

I think I came up with a workable solution that hides all the details without too much overhead. My initial solution was to have a growable list of items (the same as JOCL) that was managed on the Java side. It's a bit messy on the C side but really messy on the Java side:

public class CLEventList {

static class CLEventList64 extends CLEventList {

int index;

long[] events;

}

}

...

enqueueSomething(..., CLEventList wait, CLEventList event) {

CLEventList64 wait64 = (CLEventList64)wait;

CLEventList64 event64 = (CLEventList64)event;

enqueueSomething(...,

wait64 == null ? 0 : wait64.index, wait64 == null ? null : wait64.events,

event64 == null ? 0 : event64.index, event64 == null ? null : event64.events);

if (event64 != null) {

event64.index+=1;

}

}

Yeah, maybe not - for the 20 odd enqueue functions in the API.

So I moved most of the logic to the C code - actually the logic isn't really any different on the C side it just has to do a couple of field lookups rather than take arguments, and I added a method to record the output event.

public class CLEventList {

static class CLEventList64 extends CLEventList {

int index;

long[] events;

void addEvent(long e) {

events[index++] = e;

}

}

}

...

enqueueSomething(..., CLEventList wait, CLEventList event) {

enqueueSomething(...,

wait,

event);

}

UserEvents are still a bit of a pain to fit in with this but I think I can work those out. The difficulty is with the reference counting.
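A minimal sketch of the Java half of such a growable list, assuming the C code records each output event by calling addEvent(). EventListDemo and reset() are invented for the illustration, and the doubling growth policy is my own choice rather than anything decided above. Reference counting (releasing the events) is deliberately left out.

```java
import java.util.Arrays;

class EventListDemo {
    static class CLEventList {
        int index;      // number of valid entries in events
        long[] events;  // raw cl_event handles stored as 64-bit values

        CLEventList(int capacity) {
            events = new long[Math.max(1, capacity)];
        }

        /** Records an output event, doubling the array when it is full. */
        void addEvent(long e) {
            if (index == events.length) {
                events = Arrays.copyOf(events, events.length * 2);
            }
            events[index++] = e;
        }

        /** Forgets all entries; a real binding would release the events first. */
        void reset() {
            index = 0;
        }
    }

    public static void main(String[] args) {
        CLEventList list = new CLEventList(2);
        for (long e = 100; e < 105; e++) {
            list.addEvent(e); // placeholder values, not real event handles
        }
        System.out.println(list.index + " events, capacity " + list.events.length);
    }
}
```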

Distraction

As a 'distraction' last night I started coding up a custom OpenCL binding for Java. This was after sitting / staring at my pc for a few hours wondering if i'd simply given up and 'lost the knack'. Maybe I still have. Actually in hindsight i'm not sure why i'm doing it other than as some relatively 'simple' distraction to keep me busy. It's quite simple because it's mostly a lot of boilerplate mapping the relatively concise OpenCL api to a small number of classes and there isn't too much to think about.

Not sure i'll finish it actually. Like I said, distraction.

But FWIW I took a different approach to the binding this time - all custom code, trying to use / support native java types where possible (rather than forcing ByteBuffer for every interaction), etc. Also a different approach to the 32/64 bit problem compared to previous JNI bindings - spreading the logic between the C and Java code by having the C make the decisions about constructors but having the Java define the behaviour via abstract methods (it's more obvious than i'm able to describe right now). Well I got as far as some of CLContext but there's still a day or two's work to get it 'feature complete' so we'll see if I get that far.

Was a nice day today so after a shit sleep (i think the dentist hit some nerves with the injections and/or bruised the roots - the original heat-sensitive pain is gone but now i have worse to deal with) I decided to try to do some socialising. I dropped by some mates' houses unannounced but I guess they were out doing the same thing but I did catch up with a cousin I haven't seen properly for years. Pretty good for just off 70, wish I had genes from his part of the family tree. Then had a few beers in town (and caught up with him and his son by coincidence) - really busy for a Sunday no doubt due to the Fringe.

Plenty of food-for-the-eyes at least. Hooley dooley.

Small poke

Started moving some of my code and whatnot over to the new pc and had a bit of a poke around DuskZ after fixing a bug in the FX slide-show code exposed by java 8.

I'm just working on putting the backend into berkeley db. Took a while to remember where I was at, then I made some changes to add a level of indirection between item (types) where they exist (on-map or in-inventory). And then I realised I need to do something similar for active objects but hit a small snag on how to do it ...

I'm trying to resolve the relationship between persistent storage and active instances whilst maintaining the class hierarchy and trying to leverage indices and referential integrity from the DB. And trying not to rewrite huge chunks of code. I think i'm probably just over-thinking it a bit.

Also now have too many importers/exporters for dead formats which can probably start to get culled.

So yeah, quick visit but (still) need to think a bit more.

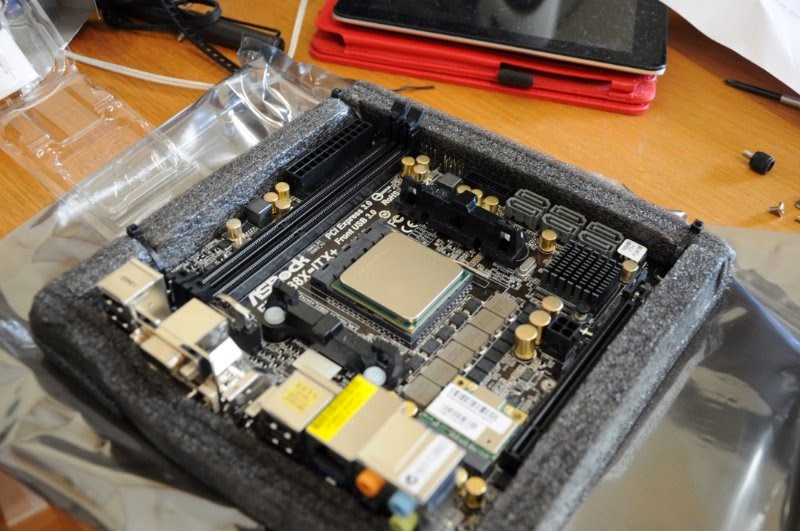

Kaveri 'mini' pc

Yesterday I had to go somewhere and it was near a PC shop I go to so I dropped in and ordered the bits for a new computer with an A10-7850K APU. I got one of the small antec cases (ISK300-150) - for some reason I thought it had an external PSU so I wasn't considering it. I'm really over 'mini' tower cases these days which don't seem too mini. Ordered a 256GB SSD - not really sure why now i think about it, my laptop only has a 100G drive and unless I have movies or lots of DVD iso's on the disk that is more than enough. 8G DDR3-2133 ram, ASRock ITX board. Hopefully the 150W PSU will suffice even if i need to run the APU in a lower-power mode, and hopefully everything fits anyway. Going to see how it goes without an optical drive too.

I guess from being new, using some expensive bits like the case, being in australia, and not being the cheapest place to get the parts ... it still added up pretty fast for only 5 things: to about $850 just for the computer with no screens or input peripherals. *shrug* it is what it is. As I suspected the guy said nobody around here really buys AMD of late. Hopefully the HSA driver isn't too far away either, i'm interested in looking into HSA enabled Java, plain old OpenCL, and probably the most interesting to me; other ways to access HSA more directly (assuming linux will support all that too ...). Well, when I get back into it anyway.

Should hopefully get the bits tomorrow arvo - if i'm not too rooted after the "root canal stage 1" in the morning (pun intended). I'll update this post with the build and os install - going to try slackware on this one. I'm interested to see EFI for the first time and/or if there will be problems because of it; i'm no fan of the old PC-BIOS and it's more than about time it died (asrock's efi gui looks pretty garish from pics mind you). Although if M$ and intel were involved i'm sure they managed to fuck it up some how (beyond the obvious mess with the take-over-your-computer encrypted boot stuff. I'm pretty much convinced this is all for one purpose: to embed DRM into the system. I have a hunch that systemd will also be the enabler for this to happen to GNU/Linux. Making life difficult for non-M$ os's was just a bonus.)

PS This will be my primary day-to-day desktop computer; mostly web browsing + email, but also a bit of hobby hacking, console for parallella, etc.

Dentist was ... a little disturbing. He wasn't at all confident he was even going to be able to save the tooth until the last 10 minutes after poking around for an hour and a half. He was just about to give up. It was for resorption - and amounted to a very deep and very fiddly filling that went all the way through the top and out the side below the gum-line. Apart from being pretty boring it wasn't really too bad except for a couple of stabs of pain when he went into the nerves before blasting them with more drugs ... until the injections wore off that is. Ouch - I think mainly just bruising from the injections. Well I hope it was worth it anyway and it doesn't just rot away after all that, even with a microscope I don't know how he could see what was going on. Have to go back in 3 months for the root canal job :-( That's the expensive one too.

Anyway, had a few beers then went and got the computer bits.

Case is an antec ISK300-150. The in-built PSU is about 1/4 the size of a standard ATX PSU.

Motherboard is ASRock FM2A88X-ITX - haven't bought one for a while so it seems to have an awful lot of shit on it. Not sure what use hdmi in is ...

And everything fits fairly well. The main pain was the USB3 header connector which is 2 fat cables and a tall connector. This is the first SSD i've installed and it's interesting to see how small/light they are. The guy in the computer shop was originally going to sell me some Crucial ram but i went with a lower-profile g.skill one - and just as well, I don't think the other would have fit.

Apart from that everything fits in pretty easy (I might cable-tie some of the cables to the frame though). I updated the firmware using the network bios update thing - which was nice.

Then I booted the slackware64 usb image, created the partitions using gdisk, and started installing directly from my ISP's slackware mirror. A bit slower than doing it locally but I'm in no rush.

So far it's so boringly straightforward there's nothing really to report. I presume the catalyst driver will be straightforward too.

I have an old keyboard I intend to use and i was surprised the mobo comes with a PS/2 socket (I was going to use a usb converter). I got it at a mysterious pawn shop one saturday afternoon - mysterious because i've never been able to find it again despite a few attempts looking for it. I must've wildly mis-remembered where it was. It's got a steel base and no m$ windoze keys.

Time passes ... (installs via ftp) ...

Ok, so looks like I did make a mistake: one must boot in EFI mode from the USB stick for it to install the EFI loader properly. Initially it must've booted using BIOS mode automagically so it didn't prompt for the ELILO install. I just rebooted from the stick and ran setup again. It set up EFI and the bios boot menu fine.
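For what it's worth, a quick way to tell which mode the installer actually booted in is to look for the `firmware/efi` directory in sysfs, which Linux only creates when it was started via EFI. A minimal sketch in Python; the function name and the `sysfs_root` parameter are just for illustration (the parameter is there so it can be pointed at a test directory), and this isn't something the slackware installer itself provides.

```python
import os

def booted_in_efi_mode(sysfs_root="/sys"):
    """Return True if the running Linux kernel was started via EFI firmware."""
    # The kernel exposes <sysfs>/firmware/efi only on an EFI boot.
    return os.path.isdir(os.path.join(sysfs_root, "firmware", "efi"))

if __name__ == "__main__":
    mode = "EFI" if booted_in_efi_mode() else "legacy BIOS"
    print("booted in", mode, "mode")
```

Running that before starting setup would have shown the stick had come up in BIOS mode.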

And it took me a little while on X - the APU requires a different driver from the normal ones (search apu catalyst driver). And ... well my test monitor with an HDMI to DVI cable had slipped out a bit and caused some strange behaviour. It worked fine in text mode and for the EFI interface, but turned off when X started (how bizarre). Once I seated it properly it worked as expected. Now hopefully that HSA driver isn't too far away.

Now i've got it that far I don't feel like shuffling screens and cables around to set it up, maybe tomorrow.

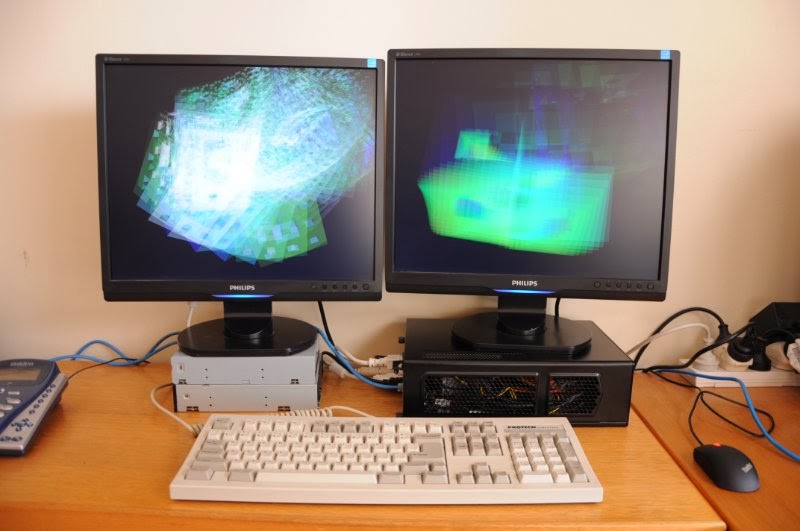

Must've had too many coffees yesterday at the pub, I ended up installing the box in a 'temporary' setup and playing around till past midnight.

I've got another workstation on the main part of the desk so i'm just using the return which is only 450mm deep - it's a bit cramped but I think this will be ok - not sure on the ergonomics yet. This is where I had my laptop before anyway. There may be other options too but this will do for now.

And yeah, I really did buy some 4:3 monitors although I got them a few years ago (at a slight premium over wide-screen models). For web or writing or pretty much anything other than playing games or watching movies, it's a much better screen size. These 19" models have about as much usable space as a 24" monitor in much less physical area and even a higher resolution at 1600x1200.
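The screen-size comparison is easy enough to check with a bit of Pythagoras. This is only a rough sketch: it assumes the 24" widescreen is 16:9 (a 16:10 panel would be a little taller), ignores bezels, and the function name is made up for the example.

```python
import math

def screen_size(diagonal, aspect_w, aspect_h):
    """Return (width, height, area) of a flat panel in the diagonal's units."""
    # Scale the aspect-ratio triangle so its hypotenuse equals the diagonal.
    scale = diagonal / math.hypot(aspect_w, aspect_h)
    width, height = aspect_w * scale, aspect_h * scale
    return width, height, width * height

if __name__ == "__main__":
    for label, diag, aw, ah in [("19in 4:3", 19, 4, 3), ("24in 16:9", 24, 16, 9)]:
        w, h, area = screen_size(diag, aw, ah)
        print(f"{label}: {w:.1f} x {h:.1f} in, {area:.0f} sq in")
```

Under those assumptions the two panels come out at nearly the same height, with the 4:3 one taking up a good deal less area, which is what matters for reading and writing.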

I also had a bit of a play with the thermal throttling and so on. With no throttling it gets hot pretty fast - the AMD heatsink is a funny vertical design that doesn't allow cross-flow from the case fan so it doesn't work very well. And its radial design also seems to cause extra fan noise when it ramps up. The case fan is a bit noisy too. If i turn it up to flat out it will cause the cpu fan to slow down to a reasonable level - so I guess I could operate it that way if I really wanted the speed.

Throttling at 65W via the bios seems a good compromise, I can set the case fan to middle-speed (or low if i'm not doing much) and the machine is only about 10-15% slower (compiling linux).

I knew it was going to be a compromise when going for such a small case so this is ok by me.

Hmm, maybe I spoke too soon - although the X driver is working, GL definitely isn't. For whatever reason GL seemed to point to the wrong version (libGL.so.1.2 points to fglrx but libGL.so.1.2.0 was the old one).
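A mismatch like this is easier to spot by listing where every `libGL.so*` name actually ends up once the symlinks are followed. A small sketch in Python; the `/usr/lib64` default is an assumption about where the 64-bit libraries live on this install, and `libgl_targets` is just a name picked for the example.

```python
import glob
import os

def libgl_targets(libdir="/usr/lib64"):
    """Map each libGL.so* entry in libdir to the real file it resolves to."""
    targets = {}
    for path in sorted(glob.glob(os.path.join(libdir, "libGL.so*"))):
        # realpath follows the whole symlink chain to the final file.
        targets[os.path.basename(path)] = os.path.realpath(path)
    return targets

if __name__ == "__main__":
    for name, real in libgl_targets().items():
        print(name, "->", real)
```

If some names resolve into the fglrx directory and others to the stock library, the links are out of step in the way described above.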

But fixing that ... and nothing GL works at all. Just running glxinfo causes artifacts to show up and anything that outputs graphics == instant (X) crash.

Trying newer kernels.

Initially I had no luck - i built a 3.12.12 kernel using the huge config from testing/; the driver build fails due to the use of a GPL symbol. It turns out that was because kernel debugging was turned on in that config. Removing that let me build the driver.

While I was building 3.12.12 I also tried 3.13.4 ... But the driver interface won't build with this one and it looks like it needs a patch for that. Or I missed some kernel config option in the byzantine xconfig (there's something that definitely hasn't improved over the years).

So with 3.12.12 and a running driver GL still didn't seem to work and crashed as soon as I started any GL app. I was about to give up. Then as one last thing I tried turning on the iommu again; and voila ... so far so good. Or maybe not - that lasted till the next reboot. Tried an ATX PSU as well. No difference.

Blah. I have no idea now?

Then I saw a new bios came out between when i updated it yesterday and today so I tried that.

Hmm, seems to be working so far. I reset the bios to defaults (oops, bad idea, it wiped out the efi boot entry), fixed the boot entry, fixed the ram speed (it uses 1600 instead of 2133). Doesn't need the iommu on. And doesn't seem to need thermal throttling to keep it running ok. So maybe it was a bung bios.

Bloody PeeCees!

I decided to do a little cleanup of the cables to help with airflow and tidy up the main volume. It had been getting a bit warm on one of the support chips (the heatsink on left corner of mobo). The whole drive frame is a bit of a pain for that tiny SSD drive.

Since the BIOS update it's been running a lot cooler anyway. In general use I might be able to get away with the case fan on its lowest setting with all the default BIOS settings.

Update: Been running solid for the 3 days since I put it back together. During 'normal use' the slowest fan setting is more than enough and it runs quiet and cool (normal use == browsing 20+ tabs, pdf viewers, netbeans, a pile of xterms). And quite novel having a 'suspend to ram' option that works reliably on a desktop machine (like i said: been a long time since i built a pc, and back then that just didn't work properly). Yay for slackware!

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!