About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

note to self ...

Remember you need to save the status register before you execute an add.

I went about coding up that atomic counter idea from the previous post but had a hard time getting it to work. After giving up for a bit I had another go (couldn't let it rest as it was so close) and I discovered the register-saving preamble i'd copied from the async dma isr was simply broken.

_some_isr:

sub sp,sp,#24

strd r0,[sp]

strd r2,[sp,#1]

strd r4,[sp,#2]

movfs r5,status

... routine

movts status,r5

ldrd r4,[sp,#2]

ldrd r2,[sp,#1]

ldrd r0,[sp],#3

rti

Well it certainly looked the part, but the sub in the very first instruction updates the flags, so the status register is already clobbered by the time movfs gets around to saving it. Oops.

strd r4,[sp,#-1]

movfs r5,status

sub sp,sp,#24

strd r0,[sp]

strd r2,[sp,#1]

There goes the symmetry. Actually it could probably just leave the stack pointer where it is because the routine isn't allowing other interrupts to run at the same time.

Fixed, the atomic counter worked ok. It shows some ... interesting ... behaviour when things get busy. It's quite good at being fair to all the cores except the core on which the atomic counter resides. Actually it gets so busy that the core has no time left to run any other code. The interrupt routine is around 200 cycles for 16 possible clients.

So ... I might have to try a mutex implementation and do some profiling. Under a lot of contention the interrupt-driven counter may still be better due to its fixed bandwidth requirements but it's probably worth finding out where the crossover point is and how each scales. A mutex implementation won't support host access either ... always trade-offs. Definitely a candidate for FPGA I guess.

ipc primitive ideas

Had a couple of ideas for parallella IPC mechanisms whilst in the shower this morning. I guess i'm not out of ideas yet then.

atomic counter

These are really useful for writing non-blocking algorithms ... and would best be implemented in hardware. But since they aren't (yet - it could go into fpga too) here's one idea for a possible solution.

The idea is to implement it as an interrupt routine on each core - each core provides a single atomic counter. This avoids the problem of having special code on some cores and could be integrated into the runtime since it would need so little code.

Each core maintains a list of atomic counter requests and the counter itself.

unsigned int *atomic_counter_requests[MAX_CORES];

unsigned int atomic_counter;

To request an atomic number:

unsigned int local_counter;

local_counter = ~0;

remote atomic_counter_requests[my_index] = &local_counter;

remote ILATST = set interrupt bit;

while local_counter == ~0

// nop

wend

The ISR is straightforward:

atomic_isr:

counter = atomic_counter;

for i [get_group_size()]

if (atomic_counter_requests[i])

*atomic_counter_requests[i] = counter++;

atomic_counter_requests[i] = NULL;

fi

done

atomic_counter = counter;
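The request/ISR pair above can be modelled on a single host thread as a sanity check. This is only a sketch: the value of MAX_CORES, the names atomic_request() and atomic_isr_run(), and calling the routine directly instead of poking ILATST are all assumptions for illustration, not the ezecore code.

```c
#include <stddef.h>

#define MAX_CORES 16            /* assumed workgroup size */
#define PENDING (~0u)           /* marker: no result delivered yet */

/* State owned by the core which hosts the counter. */
static unsigned int *atomic_counter_requests[MAX_CORES];
static unsigned int atomic_counter;

/* Client side: publish where the result should be written.
   On hardware this would be a remote write followed by a write to ILATST. */
static void atomic_request(int client, unsigned int *result)
{
    *result = PENDING;
    atomic_counter_requests[client] = result;
}

/* Owner side: what the interrupt routine would do.  Every pending
   request gets a distinct value; returns how many were served. */
static int atomic_isr_run(void)
{
    unsigned int counter = atomic_counter;
    int served = 0;

    for (int i = 0; i < MAX_CORES; i++) {
        if (atomic_counter_requests[i] != NULL) {
            *atomic_counter_requests[i] = counter++;
            atomic_counter_requests[i] = NULL;
            served++;
        }
    }
    atomic_counter = counter;
    return served;
}
```

One consequence of using ~0 as the pending marker is that the counter itself must never be allowed to reach that value, otherwise the requester would keep spinning.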

Why not just use a mutex? Under high contention - which is possible with many cores - they cause a lot of blockage and flood the mesh with useless traffic of retries. Looking at the mesh traffic required:

do {

-> mutex write request

<- mutex read request return

} while (!locked)

-> counter read request

<- counter read request return

-> counter write request

-> mutex write request

Even in the best-case scenario of no contention there are two round trips and then two writes which don't block the callee.

In comparison the mesh traffic for the interrupt based routine is:

-> counter location write request

-> ILATST write request

<- counter result write request

So even if the interrupt takes a while to scan the list of requests the mesh traffic is much lower and more importantly - fixed and bounded. There is also only a single data round-trip to offset the loop iteration time.
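To put rough numbers on the comparison, the two listings can be turned into a simple count. This is just a counting model which treats every arrow above as one mesh transaction; the function names are made up for the illustration and nothing here was measured.

```c
/* Mesh transactions needed to take one value from a mutex-protected
   counter, given how many times the lock had to be tried (at least 1). */
static unsigned int mutex_mesh_transactions(unsigned int attempts)
{
    /* each attempt: mutex write request + returned result          = 2 */
    /* then: counter read, read return, counter write, mutex write  = 4 */
    return 2 * attempts + 4;
}

/* The interrupt-driven counter: request location write, ILATST write,
   and the result written back.  Independent of contention. */
static unsigned int interrupt_mesh_transactions(void)
{
    return 3;
}
```

So the uncontended mutex costs 6 transactions against 3, and every failed lock attempt adds another 2.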

By having just one counter on each core the overhead is minimal on a given core but it still allows for lots of counters and for load balancing ideas (e.g. on a 4x4 grid, use one of the central cores if they all need to use it). Atomic counters let you implement a myriad of (basically) non-blocking multi-core primitives such as multi reader/multi-writer queues.

This is something i'll definitely look at adding to the ezecore runtime. Actually if it's embedded into the runtime it can size the request array to suit the workgroup or hardware size and it can include one or more slots for external requests (e.g. allow the host to participate).

Broadcast port

Another idea was for a broadcast 'port'. The current port implementation only works with a single reader / single writer at a time. Whilst this can be used to implement all sorts of variations by polling, one idea might be to combine them into another structure to allow broadcasts - i.e. a single producer and multiple readers which all read the same entries.

If the assumption is that every receiver is at the same memory location then the port only needs to track the offset into the LDS for the port. The sender then has to write the head updates to every receiver and the receivers have to have their own tail slots. The sender still has to poll multiple results but they could for example be stored as bytes for greater efficiency.
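A minimal sketch of the sender-side bookkeeping this implies, assuming free-running byte-sized head and tail counters. The struct, the names and the sizes are all hypothetical; no such port exists in the library yet.

```c
#include <stdint.h>

#define BCAST_RECEIVERS 4   /* assumed number of readers */
#define BCAST_SIZE 8        /* assumed queue slots; a power of two below 256 */

/* Sender-side view: one head, and one byte-sized tail per receiver. */
struct bcast_port {
    uint8_t head;                   /* free-running count of entries written */
    uint8_t tail[BCAST_RECEIVERS];  /* each receiver writes back its own byte */
};

/* Slots the sender may still fill: limited by the slowest receiver. */
static unsigned int bcast_free(const struct bcast_port *p)
{
    unsigned int most_used = 0;

    for (int i = 0; i < BCAST_RECEIVERS; i++) {
        /* byte counters wrap, so take the difference modulo 256 */
        unsigned int used = (uint8_t)(p->head - p->tail[i]);

        if (used > most_used)
            most_used = used;
    }
    return BCAST_SIZE - most_used;
}
```

Packing the tails as bytes means the sender can poll several receivers with a single word read, which is where the hoped-for efficiency would come from.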

This idea might need some more cooking - is it really useful enough, or sufficiently more efficient, compared to just having a port-pair for each receiver?

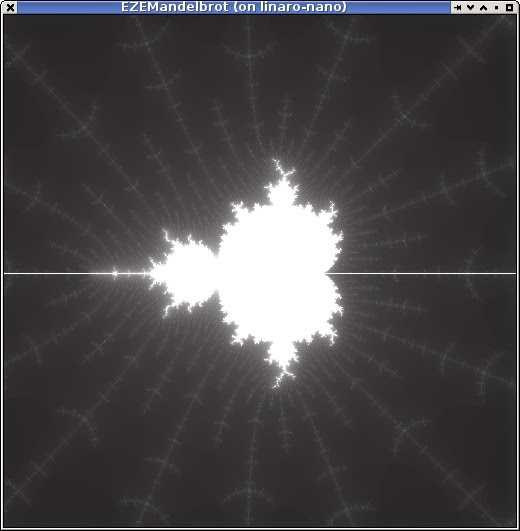

that 'graphical' demo

Ended up coding that 'graphical' demo. Just another mandelbrot thingo ... not big on the imagination right now and I suppose it demonstrates flops performance.

This is using JavaFX as the frontend - using the monocle driver as mentioned on a previous post. I'm still running it via remote X11 over ssh.

I have each EPU core calculating interleaved lines which works quite well at balancing the scheduling - at first i tried breaking the output into bands but that worked very poorly. The start position (not shown, but is (-1.5,-1.0) to (0.5,+1.0) and 256 maximum iterations) with 16 cores takes 0.100 seconds. A single-threaded implementation in Java using Oracle JDK 1.8 on the ARM takes 1.500 seconds (i'm using the performance mode). A single EPU core takes a little longer at 1.589s - obviously showing near-perfect scaling of the trivially parallelisable problem here even with the simple static scheduling i'm using.

For comparison my kaveri workstation using the single-core Java takes 0.120 seconds. Using Java8 parallel foreach takes that down to 0.036 seconds (didn't have this available when I timed the ARM version).

Details

The IPC mechanism i'm using is an 'ez_port' with an on-core queue. The host uses the port to calculate an index into the queue and writes to its reserved slot directly, and so then the on-core code can just use it when the job is posted to the port.

The main loop on the core-code is about as simple as one can expect considering the runtime isn't doing anything here.

struct job {

float sx, sy, dx, dy;

int *dst;

int dstride, w, h;

};

ez_port_t local;

struct job queue[QSIZE];

int main(void) {

while (1) {

unsigned int wi = ez_port_await(&local, 1);

if (!queue[wi].dst) {

ez_port_complete(&local, 1);

break;

}

calculate(&queue[wi]);

ez_port_complete(&local, 1);

}

return 0;

}

This is the totality of code which communicates with the host. calculate() does the work according to the received job details. Placing the ez_port_complete() call after the work is done, rather than after the job has been copied locally, allows it to be used as an implicit completion flag as well.
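For illustration, calculate() might look something like the following. This is a guess at the shape of it rather than the real code: NCORES is assumed to be known on-core, the job fields are interpreted the way the host fills them in (dy and dstride already scaled by the number of cores, h counting the lines from this core's first line to the bottom of the image), and it stores raw iteration counts rather than colours.

```c
struct job {
    float sx, sy, dx, dy;
    int *dst;
    int dstride, w, h;
};

#define MAX_ITER 256    /* maximum iterations, as quoted for the demo */
#define NCORES 16       /* assumption: core count is known on-core */

/* Escape-time iteration count for the point c = (cr, ci). */
static int mandel_iterations(float cr, float ci)
{
    float zr = 0.0f, zi = 0.0f;
    int n = 0;

    while (n < MAX_ITER && zr * zr + zi * zi <= 4.0f) {
        float t = zr * zr - zi * zi + cr;

        zi = 2.0f * zr * zi + ci;
        zr = t;
        n++;
    }
    return n;
}

/* Fill this core's interleaved lines as described by the job. */
static void calculate(const struct job *j)
{
    /* stepping y by NCORES visits only the lines owned by this core */
    for (int y = 0, n = 0; y < j->h; y += NCORES, n++) {
        int *row = j->dst + n * j->dstride;  /* dstride is in ints */
        float ci = j->sy + n * j->dy;

        for (int x = 0; x < j->w; x++)
            row[x] = mandel_iterations(j->sx + x * j->dx, ci);
    }
}
```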

The Java side is a bit more involved but that's just because the host code has to be. After the cores are loaded but before they are started the communication values need to be resolved in the host code. This is done symbolically:

for (int r = 0; r < rows; r++) {

for (int c = 0; c < cols; c++) {

int i = r * cols + c;

cores[i] = wg.createPort(r, c, 2, "_local");

queues[i] = wg.mapSymbol(r, c, "_queue");

}

}

Then once the cores are started the calculation code just has to poke the job into the processing queue on each core. It has to duplicate the struct layout using a ByteBuffer - it's a bit clumsy but it's just what you do in Java (well, unless you do some much more complicated things).

int N = rows * cols;

// Send job to queue on each core directly

for (int i = 0; i < N; i++) {

int qi = cores[i].reserve(1);

// struct job {

// float sx, sy, dx, dy;

// int *dst;

// int dstride, w, h;

//};

ByteBuffer bb = queues[i];

bb.position(qi * 32);

// Each core calculates interleaved rows

bb.putFloat(sx);

bb.putFloat(sy + dy * i);

bb.putFloat(dx);

bb.putFloat(dy * N);

// dst (in bytes), dstride (in ints)

bb.putInt(dst.getEPUAddress() + i * w * 4);

bb.putInt(w * N);

// w,h

bb.putInt(w);

bb.putInt(h - i);

bb.rewind();

cores[i].post(1);

}

The post() call will trigger the calculation in the target core from the loop above.
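The magic 32 in bb.position(qi * 32) is just the size of struct job on the 32-bit epiphany target. As a sketch of how that could be sanity-checked from C on a (possibly 64-bit) host, here is a hypothetical mirror struct with a fixed-width integer standing in for the pointer; job_wire and job_slot_offset() are names invented for this example.

```c
#include <stddef.h>
#include <stdint.h>

/* Host-side mirror of the on-core struct job.  On the 32-bit target the
   int *dst field is 4 bytes, so a uint32_t keeps the layout identical
   when this is compiled on a 64-bit host. */
struct job_wire {
    float sx, sy, dx, dy;   /* byte offsets 0, 4, 8, 12 */
    uint32_t dst;           /* byte offset 16: EPU address of the output */
    int32_t dstride, w, h;  /* byte offsets 20, 24, 28 */
};

/* Byte offset of queue slot qi, matching bb.position(qi * 32). */
static size_t job_slot_offset(unsigned int qi)
{
    return (size_t)qi * sizeof(struct job_wire);
}
```

The put calls in the Java loop then simply walk these offsets in order. Note that a ByteBuffer is big-endian by default whereas the cores are little-endian, so this presumably relies on the buffer returned from mapSymbol() already having its byte order set appropriately.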

Then it can just wait for it to finish by checking when the work queue is empty. A current hardware limitation requires busy wait loops.

// Wait for them all to finish

for (int i = 0; i < N; i++) {

while (cores[i].depth() != 0) {

try {

Thread.sleep(1);

} catch (InterruptedException ex) {

}

}

}

These two loops can then be called repeatedly to calculate new locations.

The pixels as RGBA are then copied to the WritableImage and JavaFX displays that at some point. It's a little slow via remote X but it works and is just a bit better than writing to a pnm file from C ;-)

I've exhausted my current ideas for the moment so I might drop out another release. At some point. There are still quite a lot of issues to resolve in the library but it's more than enough for experimentation, although i'm not sure if anyone but me is remotely interested in that.

minimal memory allocator n stuff

Over the last couple of days i've been hacking on the ezesdk code - original aim was to get a graphical Java app up which uses epiphany compute. But as i'm just about there i'm done for a bit.

Along the way I realised I pretty much needed a memory allocator for the shared memory block because I can't use static allocations or fixed addresses from Java. So I implemented a very minimal memory allocator for this purpose. It's small enough that it could potentially be used on-core for on-core memory allocations but i'm not looking at using it there at this point.

It uses first-fit which, somewhat surprisingly, I found (from some profiling a few years ago) was one of the better algorithms in terms of utilisation when memory is tight. The lowest level part of the allocator requires no overheads for memory allocations apart from those required for maintaining alignment. So for example two 32-byte allocations will consume exactly 64 bytes of memory. Since this leaves nowhere to store the size of the allocation it must be passed in to the free function. I also have a higher level malloc-like entry point which has an 8-byte overhead for each allocation and saves the size for the free call.
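The general technique can be sketched in a few lines of C: an address-ordered free list, first-fit allocation, and a free call which takes the size and merges neighbours. This is a generic illustration and not the ezesdk allocator; the pool_* names and the choice of rounding unit are assumptions made for the example.

```c
#include <stddef.h>

struct free_node {
    struct free_node *next;   /* next free block, in address order */
    size_t size;              /* bytes in this free block */
};

struct pool {
    struct free_node *free;   /* head of the address-ordered free list */
};

/* Sizes are rounded to this so any free block can hold its own node. */
#define UNIT (sizeof(struct free_node))

static size_t round_up(size_t n) { return (n + UNIT - 1) / UNIT * UNIT; }

/* base must be aligned for struct free_node, size a multiple of UNIT. */
static void pool_init(struct pool *p, void *base, size_t size)
{
    p->free = base;
    p->free->next = NULL;
    p->free->size = size;
}

/* First-fit: take the lowest-addressed block which is large enough. */
static void *pool_alloc(struct pool *p, size_t size)
{
    size = round_up(size);
    if (size == 0)
        return NULL;
    for (struct free_node **link = &p->free; *link; link = &(*link)->next) {
        struct free_node *n = *link;

        if (n->size < size)
            continue;
        if (n->size == size) {
            *link = n->next;            /* exact fit: unlink it */
        } else {                        /* split: keep the remainder free */
            struct free_node *rest = (struct free_node *)((char *)n + size);

            rest->next = n->next;
            rest->size = n->size - size;
            *link = rest;
        }
        return n;
    }
    return NULL;
}

/* No header is stored, so the caller has to pass the size back in. */
static void pool_free(struct pool *p, void *mem, size_t size)
{
    struct free_node *n = mem, *prev = NULL, **link = &p->free;

    /* memory-order scan for the insertion point */
    while (*link && *link < n) {
        prev = *link;
        link = &(*link)->next;
    }
    n->size = round_up(size);
    n->next = *link;
    *link = n;
    /* merge with the following block if adjacent */
    if (n->next && (char *)n + n->size == (char *)n->next) {
        n->size += n->next->size;
        n->next = n->next->next;
    }
    /* merge with the preceding block if adjacent */
    if (prev && (char *)prev + prev->size == (char *)n) {
        prev->size += n->size;
        prev->next = n->next;
    }
}
```

Both calls walk the list from the lowest address, which is the memory-order scan that makes this approach compact but not especially fast.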

The only real drawback is that it isn't really very fast - it does a memory-order scan for both allocations and de-allocations. But as it is basically the algorithm AmigaOS used for years for its system allocator it has been proven to work well enough.

So once I had that working I moved all the loader code to use it and implemented some higher level primitives which make communicating with cores a bit easier from host code.

I just ran some trivial tests with the JNI code and I have enough working to load some code and communicate with it - but i'll come up with that graphical demo another day. A rather crappy cold/wet weekend though so it might be sooner rather than later.

parallella ezesdk 0.1

As seems to be the habit lately I had a bunch of code sitting around on the hard drive for too long doing nothing so i packaged it up in case someone else finds it interesting. I should have some free time throughout June so doing this might also be a kick to restart development but I wouldn't be holding my breath on that one.

It's over on the ezesdk homepage I just whipped up.

It's all pretty much still subject to change but I think i've nailed down a pretty nice and compact core-side library api and the low-level host library as well. I had intended to include proper documentation and Java bindings by this point but ... well I didn't get to it.

Features of note include a functional printf, an on-core barrier, async (interrupt-driven) dma routines, and 2d dma entry points; some of which have been discussed in more detail on a hacker's craic.

Chocolate cake with hazelnuts

So I just finished my last bit of work and the office/team has a thing about people bringing cakes for various events so I baked a cake to take along as a good-bye and partly just to say that the people weren't the reason I was refusing any potential continuation of the work.

I was going to go with an apple cake i've made a couple of times which usually turns out pretty well but there was an apple cake the week before for someone's birthday and since i've been trying to get a good chocolate cake under my belt for a while I thought i'd risk it - if it failed i'd just leave it at home. I have been hoeing through some mixed raw nuts lately and the pack included hazelnuts - which are pretty foul raw - so i'd been saving them up wondering what to do with them and it seemed like an obvious match. I couldn't find quite the type of recipe I was after (either they were all about gluten free-ness, making super-heavy cakes or some kids-birthday Nutella-based thing) so I tried adjusting one I had for another chocolate cake and it worked rather well ...

- 125g butter

- 155g brown sugar

- 3 eggs

- 125g self-raising flour

- 1/4 teaspoon bicarb soda

- 45g cocoa powder

- 125g roasted and blended hazelnuts (aka meal)

- 190ml milk

- 125g dark chocolate (I used 40% solids)

- about 1 short black of strong espresso coffee (I use a 4-cup stove-top espresso maker and half-filled the water with a full load of grounds)

- Pre-heat oven to 160C.

- Melt the chocolate with the coffee using a steam bath and while it's cooling ...

- Cream butter and sugar.

- Blend in eggs one at a time until fully mixed.

- Mix in flour, bicarb, cocoa powder, hazelnuts, milk, and melted chocolate until just combined.

- Pour into greased/lined 22-24cm spring-form cake tin and level off.

- Cook for 1 hour and 15 minutes 'or until done'.

From the original recipe I replaced 30g of plain flour with all the hazelnuts I had, added the coffee when melting the chocolate to make it easier to mix in (doesn't just go hard/curdle, and it just tastes better), and adjusted a couple of other things slightly to suit what I had on hand. Although my oven works really well for everything else i've had a lot of trouble with cakes not cooking enough but this time I tried turning off the fan-forced fan and it was right on the money (perhaps slightly over, but not enough to matter).

It managed to survive the ride in my pannier bags and generally impressed. Probably the best cake i've ever made actually, although the bar has been, until this point, rather low.

short hsa on kaveri note

I finally got around to upgrading to the latest hsa kernel and so far it seems as though hsa now continues to work after a suspend. Well i've suspended and resumed the machine once and one of the aparapi-lambda tests continues to function with hsail.

Although this machine reboots rather fast it was just an inconvenience that pretty much put a stop to me playing around with hsa. I think there were other reasons too actually but I guess it's one less at least.

It's the 'v0.5' tag of git://people.freedesktop.org/~gabbayo/linux which I built from source rather than use the ubuntu images in Linux-HSA-Drivers-And-Images-AMD (although i grabbed the firmwares from there).

...

I've got 1 day left on my current bit of work, then another break, and then i'm not sure what i'm going to do. There is pretty much perpetually on-going 'bits of work' if I want it but i'm considering not continuing for various reasons (and have been for a while). Have to weigh that against trying to find some interesting work though. Ho hum.

Selfie

Had nothing better to do on my RDO but sit at the pub drinking jugs (translation for americans: rdo=rostered day off, jugs=pitchers). Took the pic with my cheap tablet on the front-facing camera so it's a bit ordinary but the subject matter hardly requires better ...

A very unseasonably warm 25C+ late autumn day, bloody wonderful. Bit of eye candy but I was well occupied reminiscing about happier times and better company. Many people I knew/know and miss due to trans-state movements, emigration, and other. All the rest were busy today - work, pah.

Everyone moved on but I just got old and fat and grey. Yeah it's the same shirt I wore out in Bangalore but i've been working on the chops (may as well look the part if i'm going to be eccentric). Must be a bit of the Celtic blood showing itself there.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!