Viola & Jones Detector OpenCL

After being a bit distracted yesterday evening by my co-dwellers, I got stuck into a problem I've been wanting to look at for a while - running a Viola & Jones cascade detector on the GPU using OpenCL. I'd just got an integral image calculator done so was eager to use it. I've had a few goes in the past but always seemed to mess up some of the weighting calculations, so I started with the code from OpenClooVision, which is a bit easier to follow than the OpenCV implementation, although it could certainly use some work.

So, by about 6am I had a working implementation ... but it was really very slow. Far too slow to even consider for what I need, and worse, it doesn't scale at all well - running it on smaller problem sizes just makes it even less efficient.

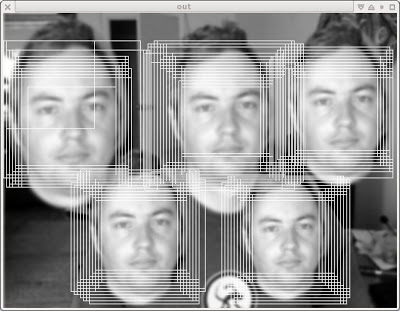

Who is that fat bastard?

I went to bed shivering cold but mostly wondering just what I could do to speed it up. I have previously done some work with integral images, and I found they do not work particularly well on GPUs - even setting aside the cost of calculating them (which I managed to solve acceptably, although it took many, many dead-ends and grey hairs). Although on paper they look like an efficient solution - a handful of array lookups to calculate an area sum - in use they seem to interact poorly with the texture cache.
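For reference, here is a minimal CPU-side sketch in Python of what "a handful of array lookups" means. It is only an illustration, not the OpenCL code: the function names `integral_image` and `area_sum` are made up for this example, and the table is padded with a zero row and column to keep the indexing simple.

```python
def integral_image(img):
    """Build a (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    sat = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Everything above (previous table row) plus this row so far.
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum
    return sat


def area_sum(sat, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four lookups."""
    return sat[y + h][x + w] - sat[y][x + w] - sat[y + h][x] + sat[y][x]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
sat = integral_image(image)
total = area_sum(sat, 0, 0, 3, 3)         # whole image
lower_right = area_sum(sat, 1, 1, 2, 2)   # 5 + 6 + 8 + 9
```

The four reads per rectangle land at the rectangle's corners, so for large rectangles they are far apart in memory, which is at least consistent with the poor cache behaviour described above.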

It was taking in excess of 30 000 µs to perform 14 passes on a 640x480 test image in steps of 5 with a scale factor of 1.25.

The OpenCV and faint implementations both pre-scale the features and feature-weights. I never quite understood why until I had a working implementation of my own; the OpenClooVision version calculates them on the fly. So the first stop was to try pre-scaling. Pre-calculating the weights is extremely cheap, and this led to around a 50% performance boost.
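A rough Python sketch of the pre-scaling idea follows. The data layout (a feature as a list of `(x, y, w, h, weight)` rectangles), the function name `prescale_features`, and the choice to normalise weights by the scaled window area are all assumptions for illustration, not the layout used by OpenCV, faint or OpenClooVision.

```python
def prescale_features(features, scale, base_window):
    """Scale every rectangle once per pass instead of once per probe.

    features: list of features, each a list of (x, y, w, h, weight) tuples
    given in base-window coordinates (hypothetical layout).
    """
    window = int(round(base_window * scale))
    # Assumption: weights are normalised by the area of the scaled window.
    inv_area = 1.0 / (window * window)
    scaled = []
    for rects in features:
        scaled.append([
            (int(round(x * scale)), int(round(y * scale)),
             int(round(w * scale)), int(round(h * scale)),
             weight * inv_area)
            for (x, y, w, h, weight) in rects
        ])
    return window, scaled


# A made-up two-rectangle edge feature in a 24x24 base window.
features = [[(4, 4, 12, 8, -1.0), (4, 8, 12, 4, 2.0)]]
window, scaled = prescale_features(features, 1.25, 24)
```

The table only needs rebuilding once per scale, so the per-probe work shrinks to lookups and multiply-adds. Rounding the rectangles can unbalance the weights slightly; a real implementation may need to compensate for that, which this sketch skips.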

I still had a problem with the GPU hardly being utilised, particularly at the larger scales (fewer tasks/call) or with smaller images. And because each thread was working on a separate probe, there was very little coherency in processing or data.
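To get a feel for how quickly the task count drops, here is a small Python sketch that counts probe locations per pass. The 24-pixel base window, the fixed 5-pixel step at every scale, and truncating the window size are assumptions for this example; the real settings may differ.

```python
def probes_per_pass(width, height, base_window, scale_factor, step):
    """Return a list of (window_size, probe_count), one entry per scale."""
    passes = []
    scale = 1.0
    while True:
        window = int(base_window * scale)
        if window > width or window > height:
            break
        # Number of window positions along each axis at this scale.
        nx = (width - window) // step + 1
        ny = (height - window) // step + 1
        passes.append((window, nx * ny))
        scale *= scale_factor
    return passes


passes = probes_per_pass(640, 480, 24, 1.25, 5)
for window, count in passes:
    print(f"window {window:4d}: {count:6d} probes")
```

Under these assumptions the first pass has over eleven thousand probes and the last only a few hundred, so with one thread per probe the largest scales cannot come close to filling the GPU.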

However, I noticed that for the cascade I was using (the default one from OpenCV) it was running many feature tests for each stage - 25-200; and that calculating the feature value was unconditional - something ripe for parallelisation.

So I tried launching 64 threads for each probe location, which work together on the list of features in blocks of 64 and then tally the results at the end using thread 0. This was the biggest improvement and I managed to get it down to around 12 000 µs.
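The work split can be simulated serially in Python as below. This is only a sketch of the indexing pattern, not the kernel: `stage_sum_cooperative` is a made-up name, the feature values are passed in already computed, and on the GPU the outer loop over lanes would be the 64 work-items running together with a barrier before the tally.

```python
GROUP_SIZE = 64


def stage_sum_cooperative(feature_values, group_size=GROUP_SIZE):
    """Simulate one work-group summing a stage's feature values."""
    # One accumulator per lane (local memory on the GPU).
    partial = [0.0] * group_size
    for lane in range(group_size):
        # Lane k handles features k, k + 64, k + 128, ...
        for i in range(lane, len(feature_values), group_size):
            partial[lane] += feature_values[i]
    # (A barrier would go here on the GPU.)
    # "Thread 0" tallies all 64 partial sums serially.
    total = 0.0
    for lane in range(group_size):
        total += partial[lane]
    return total


values = [float(i) for i in range(200)]   # stand-in for 200 feature values
stage_total = stage_sum_cooperative(values)
```

With 25-200 features per stage, each lane handles at most four features, but the final tally is still 64 serial additions on a single thread.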

I then tried a parallel prefix sum, which got it down to about 11 000 µs. Then I tried a sqrt(N) sum (split the summation into 2 passes: first 16 threads produce 16 partial sums, then 1 thread adds those up) and got it down to about 10 500 µs. Parallel prefix sum loses again.
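The two-pass tally can be sketched the same way. Again this is a serial Python simulation with a made-up function name, assuming the 64 per-lane partial sums already exist and that their count divides evenly by the number of first-pass lanes.

```python
def two_pass_sum(partial, first_pass_lanes=16):
    """Sum 64 partial values in two passes: 16 lanes, then 1 lane."""
    chunk = len(partial) // first_pass_lanes   # 64 / 16 = 4 values per lane
    assert chunk * first_pass_lanes == len(partial)
    # Pass 1: each of the 16 lanes adds up its own 4 consecutive values.
    pass1 = [sum(partial[lane * chunk:(lane + 1) * chunk])
             for lane in range(first_pass_lanes)]
    # (A barrier would go here on the GPU.)
    # Pass 2: a single lane adds the 16 intermediate sums.
    return sum(pass1)


partial_sums = list(range(64))
total = two_pass_sum(partial_sums)
```

The longest serial chain is 4 + 16 = 20 additions with one barrier in between, compared with 64 additions when thread 0 does the whole tally.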

And then to cut a long story short I tried a whole bunch of variations, such as storing the regions in integer format - this made it faster, but only because it wasn't calculating the same results. And different work-sizes - 64 worked the best. And different packing formats for the feature descriptors. But no matter what I tried, about 10 500 µs was the best I could manage with the test image.

I also tried a slightly modified version (no thread dependencies) running on the AMD CPU driver, on the 6 core+HT machine I have. That managed 90 ms. So the GPU is only about 9x faster (90 ms vs 10.5 ms), which although nothing to sneeze at is still a bit disappointing.

To me this is still a little on the slow side, but I'm pretty much out of ideas for now. It might just be a problem that defies any particularly efficient mapping to GPU hardware.

Actually one thing I haven't tried is scaling the images instead of the features ... but I think that's something that can wait for another day.