About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

jjmpeg updates

I finally checked in some jjmpeg changes I had lying around, added seeking to JJMediaReader and an icon creation helper (hmmm, maybe that was api bloat ...) and a few other odds and sods.

I've been playing with some interactive video stuff for work and when the video file is in good condition (i.e. seeking works properly) it's quite amazing to me just how zippy it all is - coming from the days of the C=64 I still can't get over just how fast modern machines are. And Java of course makes the multi-threading required to make the GUI very responsive an absolute doddle.

Absorbing rapid events

One idea I borrowed from my experiments on ReaderZ was a fairly simple mechanism to collapse rapidly incoming events. e.g. for a slider bar calibrated in ms, one can get many many updates as the slider moves; more than can be accommodated whilst panning an e-ink display or seeking around HD video. In the past i've either used a timeout, or some other throttling mechanism on the caller end such as a 'i'm busy' flag. This usually needs some other logic to handle completion cases, perhaps cancelling of jobs and other quite complex synchronisation tasks to ensure valid programme state when it's all done and dusted.

In ReaderZ I tried a different approach in order to simplify serial processing:

1. Incoming tasks are queued as they arrive into a blocking queue.

2. A consumer thread waits until something arrives on the queue.

3. The consumer thread then polls the queue for any other tasks waiting to be processed.

4. Based on the class of the request, jobs are discarded explicitly. For example, if you have a seek followed by an open or another seek, the first seek can be thrown away. i.e. the command is either kept, changed, or nullified.

5. Then at most one job of each class is executed, in the correct order.

6. Repeat: go back to 1.

So basically a simplified broken-apart state-machine with explicit state reduction. If a given job is indivisible/can't be ignored (say, 'save current image'), then the collapse processing is cut short, and it jumps to step 5.

This way there's no need for any locking (apart from the task queue): the code is always called from a single thread, with guaranteed execution order and with simple state management. And even when something does take a while to run, it eventually catches up and never does more than one lot of extra work. It does need to ferry ALL sequentially oriented tasks through the command queue, but usually one has a fairly limited number of operations required.
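The steps above can be sketched roughly as follows. This is only an illustration and not the ReaderZ code: the class name `CommandCollapser`, the job classes `OPEN`, `SEEK` and `SAVE`, and the exact reduction rules are all assumptions made up for the example. `SAVE` stands in for an indivisible job that cuts the collapsing short.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

/** Sketch only: names and reduction rules are assumptions for illustration. */
final class CommandCollapser {
    /** Hypothetical job classes. SAVE stands in for an indivisible job. */
    public enum Kind { OPEN, SEEK, SAVE }

    public static final class Command {
        final Kind kind;
        final String arg;
        public Command(Kind kind, String arg) { this.kind = kind; this.arg = arg; }
        @Override public String toString() { return kind + ":" + arg; }
    }

    private final BlockingQueue<Command> queue = new LinkedBlockingQueue<>();

    /** Step 1: producers (e.g. the GUI thread) just enqueue and return. */
    public void submit(Command c) { queue.add(c); }

    /** Steps 2-5: wait for one command, drain the rest, keep at most one per class. */
    public List<Command> nextBatch() {
        Command open = null, seek = null, barrier = null;
        Command c;
        try {
            c = queue.take();                 // step 2: block until something arrives
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return new ArrayList<>();         // interrupted: nothing to run
        }
        while (c != null) {
            switch (c.kind) {                 // step 4: explicit state reduction
                case OPEN: open = c; seek = null; break; // an open voids any earlier seek
                case SEEK: seek = c; break;               // a later seek replaces an earlier one
                case SAVE: barrier = c; break;            // indivisible: stop collapsing here
            }
            // step 3: non-blocking poll for anything else already waiting
            c = (barrier == null) ? queue.poll() : null;
        }
        List<Command> batch = new ArrayList<>();  // step 5: fixed execution order
        if (open != null) batch.add(open);
        if (seek != null) batch.add(seek);
        if (barrier != null) batch.add(barrier);
        return batch;
    }

    public static void main(String[] args) {
        CommandCollapser q = new CommandCollapser();
        q.submit(new Command(Kind.SEEK, "100"));
        q.submit(new Command(Kind.SEEK, "200"));
        q.submit(new Command(Kind.OPEN, "b.avi"));
        q.submit(new Command(Kind.SEEK, "300"));
        q.submit(new Command(Kind.SAVE, "frame"));
        q.submit(new Command(Kind.SEEK, "400"));
        System.out.println(q.nextBatch());  // everything up to and including the save
        System.out.println(q.nextBatch());  // whatever was queued after the save
    }
}
```

In a real consumer thread step 6 is just a loop around `nextBatch()` that executes whatever comes back. Commands queued behind the indivisible job stay on the queue and are picked up by the next pass.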

Because the same thread is used to decode and play the video for my video player, if it is in 'play' mode, this just polls the command queue after each frame is displayed rather than waiting for it to contain something; but the overall logic is the same.

Also because it's done in one place I can more easily add a timeout if I really want to make sure the system is idle: rather than a separate timeout callback which needs resetting and gets called once things are done, I can just add a timeout to the Queue.poll() invocation. Or just as easily not, for example if it's been faffing around collapsing too many commands and hasn't updated the output for a while.
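A small sketch of the two waiting modes, assuming a `java.util.concurrent.BlockingQueue` as the command queue. The helper name `QueueWait.next` and its parameters are made up for the example; only `poll()` and `poll(timeout, unit)` are the real queue calls.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Sketch only: a hypothetical helper showing play-mode vs idle-mode waiting. */
final class QueueWait {
    /**
     * Returns the next command, or null if none is available.
     * In play mode this never blocks, so it can be called after every frame.
     * Otherwise it waits up to idleMillis; a null result then means "idle".
     */
    static <T> T next(BlockingQueue<T> queue, boolean playing, long idleMillis) {
        try {
            if (playing) {
                return queue.poll();                                // non-blocking
            }
            return queue.poll(idleMillis, TimeUnit.MILLISECONDS);   // timed wait
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        queue.add("seek");
        System.out.println(next(queue, true, 0));    // command was already waiting
        System.out.println(next(queue, false, 50));  // nothing arrives: timeout, null
    }
}
```

The point is that the idle timeout lives in the same call that fetches the next command, so there is no separate timer to reset or cancel.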

Speaking of ReaderZ, although I don't have any other plans for it at the moment, I am waiting for the next version of mupdf to be released at which point I will update PDFZ to match that. It should be quite soon.

Although I wasn't going to look into JavaFX too closely, every time I hit a problem in Swing I keep thinking the solution is a dead-end and the time spent on it is wasted. Unfortunately the one thing I need the GUI toolkit to do, i.e. display an array of pixels generated elsewhere, is one of the major things it cannot do yet! It can only read images from a url/disk, and that feature is targeted for 3.0 - about 18 months away. I suppose i'll just have to wait ...

Damn I wish I wasn't so tired. Must be the weather ... Autumn started very suddenly on the 29th.

It's scary joining a free software project?

I started writing a comment on this post about contributing to free software, and it got so long I thought I'd move it here.

Overall I agree: it is quite scary, but the comment I was writing follows, somewhat expanded.

For the types of people for whom meeting people is difficult, software projects cannot be any different because the same notions apply: you don't really know how someone will react to you and whether you will be accepted or respected. I've been writing free software for about 15 years, and before that I gave away 'freeware' as well, and i'm probably more anxious about contributing to a new project than i've ever been ...

It is also unfortunate you use the term 'open source', because clearly merely having access to the source code makes no representation on whether a project is even interested in contributions. There are many reasons people write software and publish it freely, and for many projects, success or popularity is simply not a concern: the developers don't really care what anyone thinks because they have something they use themselves and the sharing is already an end in itself.

However ignoring the specific terminology used, trying to brush a wide audience with their sole unique characteristic is generally a pointless exercise. e.g. that all Greens voters are smelly hippy vegetarians, conservatives are all gun tot'n 4WD drivers, etc. People with only a few things in common are still very different from each other.

And just because the source is available and has a project page and a mailing list, it doesn't mean the project is interested in contributions from the general public. But clearly Layfield's experience is pretty poor - if a project purports to desire contributors and has a work wanted list, then at the bare minimum civility and politeness should be present. If such a project intends to survive by using external contributions, then it won't live too long.

Some of my experiences:

a) 'my first elisp' code, which turned into a handy script to add java-doc like comments to C functions. I submitted this to emacs, but RMS wanted it integrated into CC mode and a bunch of other stuff which was well beyond the time I wanted to spend on it or the features I needed (and I wasn't particularly interested in the kudos of contributing to a high-profile project). So I just added it to the project repository and my .emacs and left it at that.

(needless to say, I never wrote any elisp subsequently, but that's because I just wasn't interested in lisp as a language and that was the sum-total of the lisp I ever wrote).

b) AROS - these guys were very easy, commit access was easy to get, and then it was pretty much commit what you liked - obviously avoiding stepping on any toes. Even for a project with a lot of politics, there were plenty of small holes to fill.

c) I submitted a patch to mplayer, which was accepted without too much fuss. Just a bit of formatting changes iirc. In hindsight this was smoother than i'd have thought: certainly at the time they gave an abrasive impression of themselves on their web-site.

d) I think the first free software i contributed to was a patch to amanda circa 1995 - amanda is a distributed backup system. It was a horrible patch in hindsight but they accepted it easily. Of course this was back in the day when the internet was only accessible to academics, students, engineers, and sysops and overall was a much nicer place (yes, despite the flame-wars).

e) Working on Evolution. This was a commercial product with a (reasonably) defined direction and design. It was also complex enough and with enough of a user-base that any changes needed a lot of checking to make sure they were going to work technically and be up to scratch in terms of quality. Although the whole team spent quite a bit of effort trying to increase the community involvement: In the end I didn't really like being offered all but the most trivial of patches because it was always much faster just to write the code myself. Or I felt like a real heel telling some young lad that we couldn't use his patch because it didn't fit with the PM driven agenda. The one time we did accept a sizeable patch (and I was on holidays so was overridden), I spent weeks replacing a poor implementation which caused a lot of problems with a decent one. Nobody ever became a long-term contributor so we were left to learn and maintain any patches they gave us as well. I thought the 'bounty' system was an unmitigated disaster and would never consider such an approach again. People who are desperate for the paltry money on offer are probably not the cream to start with, and it is also very unlikely to lead to long term unpaid commitment.

Developers ...

Developer scalability was a huge issue in evolution: with thousands of reported bugs/feature requests and 2-3 coders there's just nowhere to even start making a dent. People wanted stuff we could never deliver (either too costly, unfit for the application, etc), and some people were nasty and insistent arseholes who wouldn't take no for an answer, or wouldn't take any time to try a patch or other work-around suggestions (which obviously took non-trivial effort to suggest). Crash reports were rarely followed up, and without being able to re-create them were basically useless. Not to mention distributions (esp debian) re-packaging the code in ways we only had to guess, and maintaining their own separate patch-sets. Placing bugs into 'wishlist' limbo was just as bad as saying 'wontfix', since they were never going to happen.

This latter point about scalability can't be ignored even for projects which do actively seek contributors. Every contributor comes along with a clean slate and thinks they're the first to be in their position. Yet for developers they might be one of hundreds, and even after giving out the same information only 10 times one gets pretty sick of it. This is actually one reason I find it more difficult to contribute to projects now: I don't want to piss someone off because I couldn't find their FAQ or didn't search the email archives enough, or they're still anal about 'top posting' (I really can't believe anyone still gives a rats-arse about that anymore ...).

Submitters ...

The 'problem' isn't just with the developers either: for example, what is the motivation behind the people submitting the patch? Why should a developer be particularly interested in a patch from someone who is just after the experience of submitting a patch? Or hoping for the fame of having a bit of their code included in a popular application?

I would certainly be much more interested in a patch from an active user who has found a deficiency in their day-to-day active use of the project versus someone who is just looking for something to do or something to add to their CV.

And if you're not a direct or close peer to the developer: the relationship is in quite a different space and now the developer has become a mentor. It takes far more effort and resources to be a mentor and in the vast majority of cases that effort is never returned to the project. The goal of most projects is to provide a solution to a problem, not to train people how to code or interact with a public project. It's quite arrogant and rude to assume that just because its code and mailing list are available to the public that it gives the public a free rein on developer time ...

Me ...

Now, i'm definitely not interested in authoring applications for the general public. I get paid to work on a research project with a single individual as the sole customer I deal with. And for my free software projects the only ones of worth are only useful for other developers. And even then most of those are just stuff i'm playing with for my own entertainment; it is therefore costing me nothing to share it with the world and i'm more interested in helping people learn than solving their problems (that's not to say I don't get a buzz out of knowing my stuff is used - I check the stats all the time - but it isn't the motivation at all).

I don't think it will happen any time soon (not the least reason being that I'm miles away from building anything useful to the average user): but having a project of mine picked up by a distribution would be quite unappealing.

As for patches, I still submit the odd small patch here and there. But what turns me off is:

- Anal retentiveness about specific code style, mailing list etiquette and so on.

- I used to do this way way too much in evolution: If the patch was basically ok I should've just taken it and fixed it up to match my preferences and fixed minor errors. Once one has submitter access things are different, but for a random patch it's just not worth the to-fro and agro. Previous to ximian I'd had a pretty unpleasant experience working with an Indian sub-contractor (TATA Infotech) and we were being anal about their (really bad) code because we were paying a lot for it for no reason - so I was a bit thingy with code reviews.

Worrying about 'top posting'? How 90s, get-the-fuck over it.

- Using git (or some obscure scm)

- I just hate git to start with. And being asked to create a public fork of a project for a one-off small patch is low on my list of `things to do before i die'.

The first patch I ever created I used 'diff -ruN' to create, and that's still a reliable way to do it without having to learn obscure commands for half a dozen popular systems.

- Too many pre-requisites.

- e.g. joining a mailing list and a bug tracker in which you must create a bug and attach the patch, copyright assignments, and so on. It's ok for a couple of projects or if you become an active long-term participant, but it quickly gets far too unwieldy if you're just submitting a rare patch to some product you use occasionally. Another thing we (totally!) fucked up in evolution.

Obviously for a team project a mailing list (or forum) is pretty much essential, and legalities might require a copyright assignment or other agreement, but the bug systems tend to give me the willies.

- Build complexity.

- Some projects are just too complex to build or require too many pre-requisites: rubbish like ant, cmake, and all the other weird arsed build systems (jam, bitbake, custom python, rake ...) become insurmountable barriers that stop you even getting started.

I was quite astonished the other day that I even got a cmake based project to compile at all. If it wasn't for netbeans I wouldn't be using ant, and even then it sometimes fucks it up.

- Python.

- Which reminds me ... it's just a personal thing but I detest python in any form. tcl isn't far behind.

- Arrogance.

- Using a project leader's celebrity or the project's popularity to make one feel like it is an absolute privilege to be doing some of their work for free. I don't really encounter this because I'm just not attracted to such projects, but there needs to be some sort of recognition that work is being done for free (assuming it's at an adequate level for the complexity of the patch).

Concluding ...

To respond to the final question of what can be done to improve first impressions, I think i'd just say 'not much'.

Unless your specific goal is to maximise user contributions and popularity amongst potential contributors you're probably not too concerned about what they think. And if you are, you're probably already doing all you need to do.

And importantly beyond some fairly basic civility, there should be absolutely no obligation on you, as a free software developer, to provide any sort of expected level of support or accept patches in any form from anyone whatsoever.

If one wishes to be popular and extra-friendly then all the better for you and your users, but it is certainly no pre-requisite to calling your software free (or the related but somewhat meaningless term 'open source').

socles updated for jogamp 2

Just to show that it's not all angry rants around here ...

Continuing my mirror of the news items on my projects, I just updated socles to include jogamp 2.0 - fortunately the API was the same so it was just changing the included libraries in the build.

I still have a JOAL patch outstanding but just haven't been in the right mood to work on it for a while. Getting back to work last week was a bit of a shock to the system; but i'm slowly getting back into the groove and will eventually have time and energy left over for hacking.

I've been doing a bit of video stuff which is helping to harden and clean up jjmpeg a bit more, and I have a few minor patches pending for that. I've also been poking very tentatively at a slideshow creator/video compositor: but there's a lot of crap I don't really want to have to write (ugh, timeline anyone) so i'm not exactly making any headway yet.

WTF Google?

I noticed google search becoming less and less useful of late: it can be quite hard to track down meaningful results, and I started to suspect I was getting my own private view of the internets ... (which is precisely the thing I do not want from a global internet search!!).

So with that in mind check these two results out, one from my signed in google account - with verbatim enabled, and one from another machine in which I'm not signed explicitly into google's world-encompassing spy network.

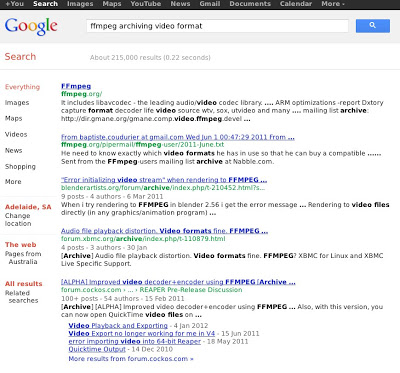

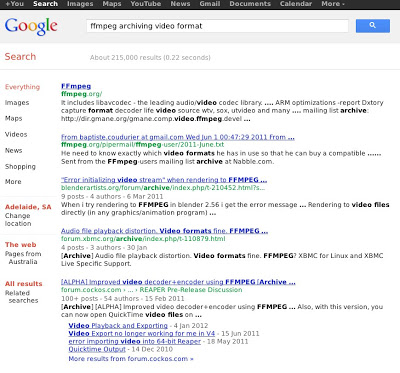

Standard results:

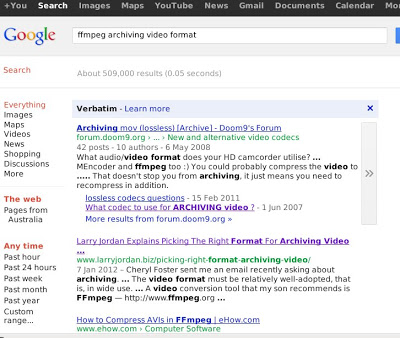

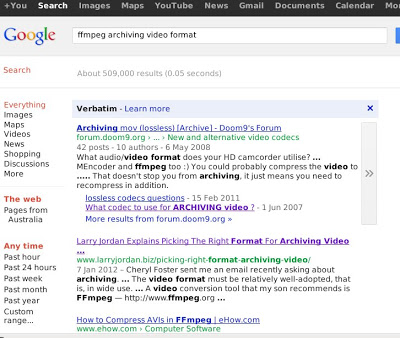

Verbatim results:

(I also checked the non-verbatim results on my logged in version, which are at least in this case thankfully the same as the standard result; although i'm sure i've seen differences in the past).

One might note that the verbatim result is the only one that has anything related directly to the search terms, particularly for the most important keyword of high specificity - there is in fact no relevant result AT ALL on the first page of the non-verbatim search (as suggested by the title and/or preview content). I really don't think a generic '[Archive]' header applied to old mailing list views should rank highly.

The new search algorithm is quite good at finding home pages for projects and products; but for specific information it's starting to suck major arse.

At least verbatim taught me this isn't quite the search term i'm after anyway.

Cantarell Sucks

Why any designer with any mote of sense would choose Cantarell as a font is beyond me, let alone a system-wide default one. I know it was trendy - nearly 30 fucking years ago - to create a distinctively unique system font; but that was mostly about cost anyway and now there are plenty of decent free fonts available using a number of common formats.

When I re-installed my workstation with a minimal fedora 15, Cantarell was about the only font that came along for the ride by the time I had XFCE up. Which made for a particularly unpleasant experience in Terminal and emacs - until I installed xterm and 'fixed'. Apart from being a disastrously ugly and unreadable font; it decided to use the proportional one as well; so it didn't even work.

Apart from many of the letter forms being simply ugly and out of balance, the kerning and hinting is abysmal (although TBH I think hinting has more than had its day, and we're better off with blurry aa text, even on screens 1024 pixels wide). It is just one fugly font and the only reason I can see that anyone would like it is that their favourite hero endorsed it and the group-think around the hero's heroish aura is suppressing their mind's own ability to reason. I suppose having someone working on free software as a hero is better than worshipping some money grubbing greedy pig-fucker like the late Jobs, or Gates (however, being a brainless sheep is nothing to be proud of); although those money grubbing greedy pig-fuckers are often the inspiration in the first place. Not a fan of the ubuntu font either; which again seems to gain its popularity solely from celebrity endorsement.

(As an aside: people seem to be trained more and more these days not to think. Not to make a stir. To go along with the crowd. Even the wild frontier of the internets has been tamed, flame-wars seem to be banned from most forums and minority views are blatantly suppressed as a matter of open forum policing. As if suppressing and censoring dissenting views somehow makes them go away or is a valid long-term solution ...).

So ... although i've resolved not to go whine on other people's personal blogs (it's like going into someone's house and abusing them), i let my guard slip a bit this morning and posted a long dissenting (but mostly polite) view on a post about GNOME. I really wanted to sleep in but was awake before 6, so I wasn't in the best mood.

Anyway, it ties in with my last rant about 'tabbed desktop'; some of the suggestions are just stupid. Most of the suggestions are just a straight-up rip-off of an iphone, and then the group-think fanbois have the audacity to turn around and accuse people of stifling experimentation if they don't like it or don't think they'll work on a desktop computer? Nothing innovative or experimental about copying an apple interface (which seems to have been the sole `raison d'être' for GNOME ever since Apple's Mac OSX came out). The thought that one application can cater to every possible device is as inane as it is nonsensical. Even less so is the suggestion that one stern style guide can cope with every possible application and user class ...

I'm not sure why I even care; I haven't even used GNOME for years, I didn't even start using GNOME for years even when I was working on GNOME software (iirc until Novell bought ximian and I dropped RedHat 9 and amiwm for development ...); so I never really liked it. I thought gnome2 was bloated, slow, ugly and too limited so my less-than-gold standard of GNOME goodness pre-dates even that.

However, now I think about it, I know why I care: I know how this shit works. It becomes trendy and then everyone starts doing it and suddenly there's nowhere else to go and you've got some fugly font installed by default. Or systemd, or networkmanager, or pulseaudio. Thankfully the font is easy to fix and pulse audio is easy to blacklist (although yum seems to ignore that sometimes), and networkmanager easy enough to bin as well. But it still makes it more difficult to get a working system set up every time and there's always a chance some snot like systemd (whose whole purpose seems to be to enable gui tools to poke into areas they shouldn't be involved with in the first place) weaves itself so tightly into the system it simply cannot be removed.

(Aside again: It would help if systemd wasn't written by an author who clearly doesn't have a clue about system software, and who put such a complex and horribly nasty implementation behind it. And it would help if fedora didn't let pricks like this bully their way into such a core system service as init.)

The counter-argument is that it must be a good idea if it's popular and people are using it ... which of course is crap. We all saw how microsoft illegally forced its crapware onto everyone; popularity is not a technical metric, it's a political one. And people are easily manipulated, particularly if they're proud of their inability to think independently.

Although there's one thing that the GNOME developers and I agree on, even if they might not admit it: hacking is fun, and users suck. I wouldn't want to listen to whiney know-it-alls either. Then again, i'm not working on anything that the public relies on for their day to day computing experience ...

I just wish i'd had a good night's sleep now. Headed for an unpleasantly hot (39) and unpleasantly windy (30km/hr+) day today, i'm too tired to think about hacking, bored with tv, movies, and games, and my eyes are really tired from reading the screen too much this week; best hope is to water the garden a bit and maybe have a nap later followed by a couple of cold beers.

Scene Graphs

So, today is my weekly RDO and rather than spending it down the beach like I should have, I poked around ...

I was thinking of experimenting with a scene-graph model for GadgetZ (used in ReaderZ); not for any particularly practical reason, just to see how it would work. But I kind of got stuck on how to manage the layout mechanism and then lost interest ... still a bit burnt out from my hacking spree a few weeks ago, and I need a good sleep-in one day to catch up on sleep as well (they're still building next door).

I did however end up spending quite a bit of time playing with the JavaFX stuff. The 32-bit-only build wasn't too much hassle to get up and running on Fedora. It has some (pretty big) issues with focus, and the performance ranges from awesome to barely ok, but it is only a developer preview after-all.

Some observations:

- The rendering model looks very interesting. Obviously the aim is to provide a fully accelerated zoomable interface via the scene-graph. This is obviously absolutely the right thing to do.

- And for the most part this works well: it's very snappy when it's snappy.

- It tries to sync the rendering to the display and double-buffer rendering.

- The WebView thing looks pretty decent - it's a whole webkit binding, with dom access and the plan is to move to a JVM based javascript eventually.

Some issues though:

- No printing yet.

- Performance degrades fairly significantly when a lot of data is present. e.g. in the JavaFX Ensemble demo opening view - trying to scroll the list of sample icons.

- The vsync doesn't work very well, lots of glitches (but i blame this mostly on both pc hardware and linux: even a commodore 64 had hardware well beyond a pc video card for smooth animation).

- Things like scrolling the WebView are flicker-free, but not as high a frame-rate as i'd expect for hardware rendering. But I don't know if it's delegating rendering to webkit as I do with PDFZ.

- The documentation needs work.

I'm not about to use it for anything; i'm not even going to bother to try for that matter, but I'll be keeping an eye on it. It seems to finally be heading in the direction it initially promised after JavaFX 2 was announced: a high performance modern toolkit with a clean simple(ish) design, media support, and so on. Simple enough to use as a RAD tool, but complete enough to write real applications as well.

It seems as though Java8 will have something to look forward to for once ...

I'm also curious to see how the JVM based JavaScript engine will work; the JVM is fast, but I suspect the language will have some bearing on achievable performance as well.

(i've nothing to comment on regards the hope to use it as a flash replacement for internet deployment, i'm just considering it as a desktop application development platform).

Flash: good bye and good riddance

Hmmm, so the news of the day is that Adobe are dropping flash support for GNU/linux. Oh praise the day it finally goes away for everyone ...

One can speculate on why, and why they're only `supporting Google':

- Adobe are in the creation-tool business, why are they wasting so many resources on maintaining a crappy plugin they give away for free? i.e. there are compelling business reasons to kill it entirely.

- More and more internet-enabled devices are unable or unwilling to support it; without the ubiquity of client access there is little reason to use it. This is also why silverlight will never be more than a niche. I don't see the point of JavaFX either unless it is available for free on all devices fast enough to run it (it going GPL shows clearly that Oracle know this too; they can't afford to maintain it for all platforms, and nobody would ever pay to license it - and even then in the end it'll probably just be a swing replacement for desktop development).

- Paying clients are willing to prop up the legacy technology for the time being, so Adobe don't want to kill the whole project off. Pissing off paying customers isn't a long-term bread-winner.

- Most of these paying clients do not care about GNU/Linux enough to keep it around there.

- Google are willing to fund a partial solution: as a marketing exercise in order to move people to their application platform (otherwise known as a 'browser'). It's just a cynical exercise to gain market share.

- It's basically 'the world' vs firefox. Proprietary companies want more control of the web pie, and will work together against firefox any way they can. e.g. see the HTML5 video drm proposal, the fuck-up with H.264 HTML5 vs OGG video, and so on.

Overall this isn't such a bad thing: even if it is just at the periphery, fewer projects will consider using this legacy technology. Already with Apple not allowing flash on their web-enabled mobile devices they have destined it to become history. Without its cross-platform ability it loses its main feature. It's just shit tech anyway; flash struggles doing much on my dual-core low-res thinkpad; it will be a while before ARM chips match that (ok, maybe 12 months in consumer devices), but the machine is fast enough to run other software quite well.

However, everyone seems to think HTML5 will be the answer. I think it will be a horrible mess harking back to the bad old days of netscape and microsoft extensions. Already most of the HTML5 demos only work on one browser, or even a specific browser version. Most HTML5 video is in H.264 format: i.e. inaccessible from firefox by default. And you can't simply 'flash-block' a javascript animation: leading to an annoying browsing experience and a lot of wasted power animating web pages you're not even looking at.

I find it odd that HTML5 is being pushed so heavily: on the apple iphone you can't really do much more with it than simple games or animations. You can't even upload a file from a web-page (as far as i can tell), and device access is right out. To me the impression is that all the marketing hype is just FUD to keep people from spending time on competing technology.

Some of the tech demos are very nice and all: but web software is still much shittier than a local application for interactivity and control, not to mention security. It's like using AMOS: it might be a lot easier to write a game, but you still end up with a shitty game. Down the track it's obviously aiming to be a 'be-all-end-all' RAD application development environment that makes writing simple applications easy; thing is, simple applications are already quite simple to write, and complex applications are always going to be complex to write. Adding the web tier adds a lot of complexity in itself.

Complexity is not your friend

The complexity of standards like HTML5 isn't there to benefit users or developers. It is added to benefit proprietary vendors and stifle competition by raising the barrier of entry to new competitors. And of course lawyers and other parasites end up getting a cut as well: the complexity is so great vendors must cross-license software in order to be able to get something working. That's even before you add issues like patents into the mix, which is just insanity.

HTML is already so complex that creating a fully compliant implementation is only possible for large multi-national corporations; and opera and mozilla. HTML5 only raises the bar higher and that's before you add all the proprietary extensions to the standard which are already proliferating.

Goodbye Freedoms

HTML5 applications will also be much harder to modify and control; even if the source is available, it may be impossible to create a local copy that works without the infrastructure on the server-side used to support it.

Thus forget about distributing and sharing your changes with other users, or hiring a third party to make a customisation tailored to your business.

Welcome to the Tabbed Desktop

So we're all moving toward a tabbed desktop. i.e. who needs multitasking when you can just swap applications at the press of a key ... Well, microsoft windows and the apple macintosh pretty much forced this from the start because their systems were so poor at multitasking; but real systems have been able to utilise multiple overlapping windows in a way which improves user productivity for decades.

But it seems we're going back to the full-screen application model. Except now the applications are running in a crappy single-threaded virtual machine and being loaded remotely.

Hang on, I think that's the 80s calling ... they're asking how that WIMP thing worked out ...

Early morning drowsy mistakes

Oops, so yesterday morning I went to replace a dead HDD and re-install the OS on it. I thought I was being careful and unplugged the other, working HDD with a different OS on it so when I came to partition the disks I just deleted everything (the replacement disk had previously had CentOS on it).

Only i'd unplugged the wrong one, and ended up deleting the working OS and not the spare drive i'd just installed! Unfortunately that OS's installation partitioning tool writes every change immediately, so it was all gone even though I realised before i'd moved to the next screen. The installation disk for that OS is pretty crap too - it kept refusing to install on the disk I just formatted using the installer: it took a couple of resets before it was happy with its own formatting ...

I tried recovering the partitions using testdisk, but either because of the filesystem types or the partition layout I had no luck with that. Just an hour down the drain waiting for it to scan the 1TB drive.

Fortunately I had made a full backup, so I lost nothing apart from half a day re-installing everything.

This time I installed Fedora 15 using the 'minimal install' option; and apart from some strange package selections (e.g. ssh client isn't installed, but server is), it actually took me a lot less time getting a comfortable and working system than it did when I just let it install GNOME and then had to remove all the crap (pussaudio, notworkmanager, and similar crud). What is also strange? It boots, logs in, and runs much faster than it did the day before. And now Thunar opens immediately the first time rather than pausing for a few seconds.

I had some weirdness with systemd and it not starting X after I installed it - which seems to be much more fucked and complex than I could possibly have imagined - but that just magically fixed itself after enough reboots. Also with thunar's auto-mounting stuff, which I think I fixed by installing gvfs, but it might've just been enough reboots too ...

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!