About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

resampling, again

Been having a little re-visit of resampling ... again. By tweaking the parameters of the data extraction code for the eye and face detectors i've come up with a ratio which lets me utilise multiple classifiers at different scales to increase performance and accuracy. I can run a face detection at one scale then check for eyes at 2x the scale, or simply check / improve accuracy with a 2x face classifier. This is a little trickier than it sounds because you can't just take 1/4 of the face and treat it as an eye - the choice of image normalisation for training has a big impact on performance (i.e. how big it is and where it sits relative to the bounding box); I have some numbers which look like they should work but I haven't tried them yet.

With all these powers of two I think i've come up with a simple way to create all the scales necessary for multi-scale detection; scale the input image a small number of times between [0.5, 1.0] so that the scale adjustment is linear. Then create all other scales using simple 2x2 averaging.

This produces good results quickly, and gives me all the octave-pairs I want to any scale; so I don't need to create any special scales for different classifiers.
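The 2x2 averaging step is small enough to sketch in full. This is a minimal version assuming 8-bit greyscale rows with an explicit stride; the function name `halve_2x2` and the rounding choice are mine, not taken from the original code.

```c
#include <assert.h>
#include <stddef.h>

// Halve an 8-bit greyscale image in each dimension by averaging 2x2 blocks.
// src is sw x sh with row stride sstride; dst is (sw/2) x (sh/2) with
// row stride dstride. An odd trailing row or column is dropped.
static void halve_2x2(const unsigned char *src, int sw, int sh, size_t sstride,
                      unsigned char *dst, size_t dstride)
{
    assert(sw >= 2 && sh >= 2);
    int dw = sw / 2, dh = sh / 2;

    for (int y = 0; y < dh; y++) {
        const unsigned char *r0 = src + (size_t)(2 * y) * sstride;
        const unsigned char *r1 = r0 + sstride;
        unsigned char *o = dst + (size_t)y * dstride;

        for (int x = 0; x < dw; x++) {
            int sum = r0[2 * x] + r0[2 * x + 1] + r1[2 * x] + r1[2 * x + 1];
            // +2 rounds to nearest rather than truncating
            o[x] = (unsigned char)((sum + 2) >> 2);
        }
    }
}
```

Applying it repeatedly to each of the base scales gives every smaller octave of that scale.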

The tricky bit is coming up with the initial scalers. Cubic resampling would probably work ok because of the limited range of the scale but I wanted to try to do a bit better. I came up with 3 intermediate scales above 0.5 and below 1.0 and spaced evenly on a logarithmic scale (i.e. 2^(-k/4) for k = 1, 2, 3) and then approximated them with single-digit ratios which can be implemented directly using upsample/filter/downsample filters. Even with very simple ratios they are quite close to the targets - within about 1% (relative). I then used octave to create a 5-tap filter for each phase of the upscaling and worked out (again) how to write a polyphase filter using it all.

(too lazy for images today)

This gives 4 scales including the original, and from there all the smaller scales are created by scaling each corresponding image by 1/2 in each dimension.

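| target scale | approx ratio (u/d) | approx value |
|--------------|--------------------|--------------|
| 1.0          | -                  | -            |
| 0.840896     | 5/6                | 0.83333      |
| 0.707107     | 5/7                | 0.71429      |
| 0.594604     | 3/5                | 0.60000      |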

Actually because of the way the algorithm works having single digit ratios isn't critical - it just reduces the size of the filter banks needed. But even as a lower limit to size and upper limit to error, these ratios should be good enough for a practical implementation.
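The numbers in the table can be checked with a few lines of C. This is only a sketch: the target values are copied from the table rather than computed, the helper names are made up for illustration, and the fourth-power check is just a way to confirm that each target is 2^(-k/4) without pulling in libm.

```c
#include <assert.h>
#include <stdio.h>

// Relative error of the ratio u/d against a target scale.
static double ratio_error(int u, int d, double target)
{
    assert(d > 0 && target > 0);
    double diff = (double)u / d - target;
    return (diff < 0 ? -diff : diff) / target;
}

// target^4 * 2^k should be 1 if target really is 2^(-k/4).
static double fourth_power_check(double target, int k)
{
    double t2 = target * target;
    return t2 * t2 * (double)(1 << k);
}

// Print target, ratio, ratio value and relative error for the three scales.
static void print_scale_table(void)
{
    static const double target[3] = { 0.840896, 0.707107, 0.594604 };
    static const int ratio[3][2] = { { 5, 6 }, { 5, 7 }, { 3, 5 } };

    for (int k = 1; k <= 3; k++) {
        int u = ratio[k - 1][0], d = ratio[k - 1][1];
        printf("%.6f  %d/%d  %.5f  err %.2f%%\n",
               target[k - 1], u, d, (double)u / d,
               100.0 * ratio_error(u, d, target[k - 1]));
    }
}
```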

A full upfirdn implementation uses division but that can be changed to a single branch/conditional code because of the limited range of scales i.e. simple to put on epiphany. In a more general case it could just use fixed-point arithmetic and one multiplication (for the modulo), which would have enough accuracy for video image scaling.
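For the general case, one way the fixed-point idea could look is sketched below. None of this comes from the original code: the 16.16 format, the `fx_*` names and the choice to round the step up are all assumptions. Rounding up keeps the accumulated position from landing just below an exact phase boundary; the error grows by less than 2^-16 per output, so the source location and phase stay exact as long as the output index is below 65536 / u.

```c
#include <assert.h>
#include <stdint.h>

// Position stepper for a u/d resampler using 16.16 fixed point.
typedef struct {
    uint32_t acc;   // source position, 16.16 fixed point
    uint32_t step;  // d / u in 16.16 fixed point, rounded up
    uint32_t u;     // number of filter phases
} fx_stepper;

// One division at setup time only; none per output sample.
static void fx_init(fx_stepper *s, uint32_t u, uint32_t d)
{
    assert(u > 0 && d > 0 && d < 65536);
    s->acc = 0;
    s->step = ((d << 16) + u - 1) / u;
    s->u = u;
}

// Integer source location for the current output sample.
static uint32_t fx_sx(const fx_stepper *s)
{
    return s->acc >> 16;
}

// Filter phase: the single multiplication stands in for the modulo.
static uint32_t fx_phase(const fx_stepper *s)
{
    return ((s->acc & 0xffff) * s->u) >> 16;
}

// Advance to the next output sample.
static void fx_next(fx_stepper *s)
{
    s->acc += s->step;
}
```

For rows wider than that limit a wider accumulator (or re-seeding the position every so often) would be needed.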

This is a simple upfirdn filter implementation for this problem. Basically just for my own reference again.

    // scale ratio is u / d (up / down)
    int u = 5;
    int d = 6;
    // filter parameters: taps per phase
    int kn = 5;
    // filter coefficients: arranged per-phase, pre-reversed, pre-normalised
    float kern[u * kn];
    // source x location
    int sx = 0;
    // filter phase
    int p = 0;

    // resample one row (swidth is the source row width, dwidth the destination)
    for (int dx = 0; dx < dwidth; dx++) {
        // convolve with filter for this phase
        float v = 0;
        for (int i = 0; i < kn; i++)
            v += src[clamp(i + sx - kn / 2, 0, swidth - 1)] * kern[i + p * kn];
        dst[dx] = v;

        // increment src location by scale ratio using incremental div/mod
        // (valid because 1 <= d/u < 2, so sx advances by 1 or 2)
        p += d;
        sx += (p >= u * 2) ? 2 : 1;
        p -= (p >= u * 2) ? u * 2 : u;
        // or general case using integer division:
        //  sx += p / u;
        //  p %= u;
    }
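The loop above is a fragment. A self-contained version using the general-case division step might look like the following; the function signature, the `clampi` helper and the `swidth` parameter are assumptions added to make it compile on its own.

```c
#include <assert.h>

static int clampi(int v, int lo, int hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

// Resample one row by the ratio u/d with a polyphase FIR filter.
// kern holds u phases of kn taps each, laid out as kern[p * kn + i],
// already reversed and normalised so each phase sums to 1.
static void resample_row(const float *src, int swidth,
                         float *dst, int dwidth,
                         const float *kern, int u, int d, int kn)
{
    assert(u > 0 && d > 0 && kn > 0 && swidth > 0);
    int sx = 0; // source x location
    int p = 0;  // filter phase

    for (int dx = 0; dx < dwidth; dx++) {
        // convolve with the taps for this phase, clamping at the row edges
        float v = 0;
        for (int i = 0; i < kn; i++)
            v += src[clampi(i + sx - kn / 2, 0, swidth - 1)] * kern[i + p * kn];
        dst[dx] = v;

        // advance the source position by d/u using integer div/mod
        p += d;
        sx += p / u;
        p %= u;
    }
}
```

A quick sanity check is to feed it a constant row: with normalised phases the output should be the same constant regardless of the ratio.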

See also: https://code.google.com/p/upfirdn/.

Filters can be created using octave via fir1(), e.g. I used fir1(u * kn - 1, 1/d). This creates a FIR filter which can be broken into 'u' phases, each 'kn' taps long.
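Breaking the prototype filter into phases is mostly index shuffling. The sketch below shows one plausible reading of "per-phase, pre-reversed, pre-normalised": phase p takes every u-th tap starting at h[p], stores them in reverse order so they line up with ascending source samples, and scales them to sum to 1. The function name and the exact centring are assumptions and may not match the original layout.

```c
#include <assert.h>

// Split a prototype low-pass filter h (u * kn taps, e.g. from fir1) into
// u phases of kn taps each, written to kern[p * kn + i].
static void split_phases(const float *h, int u, int kn, float *kern)
{
    assert(u > 0 && kn > 0);

    for (int p = 0; p < u; p++) {
        // sum of this phase's taps, used to normalise its gain to 1
        float sum = 0;
        for (int i = 0; i < kn; i++)
            sum += h[p + i * u];
        assert(sum != 0);

        // store reversed: the last tap of the phase comes first
        for (int i = 0; i < kn; i++)
            kern[p * kn + i] = h[p + (kn - 1 - i) * u] / sum;
    }
}
```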

This stuff would fit with the scaling thing I was working on for epiphany and allow for high quality one-pass scaling, although i haven't tried putting it in yet. I've been a bit distracted with other stuff lately. It would also work with the NEON code I described in an earlier post for horizontal SIMD resampling and of course for vertical it just happens.

AFAIK this is pretty much the type of algorithm that is in all hardware video scalers e.g. xbone/ps4/mobile phones/tablets etc. They might have some limitations on the ratios or the number of taps but the filter coefficients will be fully programmable. So basically all that talk of the xbone having some magic 'advanced' scaler was simply utter bullsnot. It also makes m$'s choice of some scaling parameters that cause severe over-sharpening all the more baffling. The above filter can also be broken in the same way: but it's something you always try to minimise, not enhance.

The algorithm above can create scalers of arbitrarily good quality - the scaler can never add any more signal than is originally present so a large zoom will become blurry. But a good quality scaler shouldn't add signal that wasn't there to start with or lose any signal that was. The xbone seems to be doing both but that's simply a poor choice of numbers and not due to the hardware.

Having said that, there are other more advanced techniques for resampling to higher resolutions that can achieve super-resolution such as those based on statistical inference, but they are not practical to fit on the small bit of silicon available on a current gpu even if they ran fast enough.

Looks like it won't reach 45 today after some clouds rolled in, but it's still a bit hot to be doing much of anything ... like hacking code or writing blogs.

A great idea or capitalism gone awry?

I've been pondering crowd-funding lately and i'm not really sure it's a good idea.

It seems good on paper - democratic / meritocratic small-scale funding by interested public. Thing is we already have something like that: the stock market.

But unlike the stock market it is a complete free-for-all unregulated mess full of fraud and failures (ok so is the stock market, but even if it is also no better than a slot-machine, they do pay out sometimes).

In some ways crowd funding could be seen as a clever ploy by capital to finally remove all the risk from their side of the equation - most of it is already gone anyway. Rather than having lawyer backed due diligence being used to take a calculated risk on an investment with some expected return, the public are taking uneducated risks based on emotion and group-think for no real return at all.

I don't regret helping to fund the parallella, but i'm not sure I would do it again.

It is an industry sorely in need of regulation which will surely come before long. It should have a long-term place for small projects but once you get into the millions it seems far too skewed in favour of the fundees.

dead miele washing machine

Blah, washing machine blew up this morning. Novotronic W310, cost $1 900, bought Feb 2004.

During a spin cycle it started making very loud grinding noise and after turning it off and opening it up the drum had a really hot spot near the rim and a bit of a burnt rubber smell. Lucky I was home and it didn't catch fire. I was only washing a few t-shirts, shorts, undies, and my cycling shit.

Despite stating that the drum won't turn freely and that it rotates off-centre the service centre claims they can't tell if it will require major repair (it could only be a bearing, and that is a major repair) and still wants $200 for someone to come and have a look at it. Redeemable if i buy another one. I guess I got 5 weeks shy of 10 years out of it so I can't complain too much - then again being a male living alone for most of that time it hardly got much of a work-out either, so i'm not terribly inclined to buy another miele at the premium they charge here.

I guess i'll think about it over the weekend.

Washing machines aren't exactly a high priority item for a single male, but I don't want to have to deal with replacing broken shit either.

Road Kill

Finally got out on the roadie today and went for a 30km blat down to the beach and back. I haven't gone for a recreational cycle in nearly a year - had to give it a good wipe down to remove the dust and reinflate the tyres after taking it off the wall. I wasn't going to lycra it up but the shy-shorts I have these days come down to my fucking knees (longs?) and it was way too hot to wear those. Ran like new though, it's an awesome bike to ride.

Overall I can't say the experience was particularly enjoyable however - several cars cut me off and a pair of fuckwits (having a race?) nearly took me out through a roundabout on seaview road next to the grange hotel. I always hate that stretch and the fuckwit grange council obviously just hates bikes - they haven't changed it in years so I think i'll just avoid going down that way ever again - grange and henley are nice beaches but all the facilities and vendors are completely anti-cyclist so they can all just go and get fucked. I had to cut it short anyway due to a "natural break" required from a bit too much home-made hot-sauce on my dinner last night which was getting a bit painful. And somehow the racing saddle manages to find the only bony bits of my arse as well so 1.5 hours in 34 degree heat was enough of a re-intro trip after such a long break from it.

At least as a bonus I chanced on a homebrew shop that had a capping bell for champagne bottles - something i've been looking for for a while (not that i really need it, i have a ton of glass longnecks, but champagne bottles are much stronger). Yesterday I finally bottled off the last brew (nearly 2 weeks late - but it was still good, i ended up drinking over 4 litres straight out of the wort - it's quite decent but too warm) and started another one. Unfortunately all I have left from last year is 1 super-hot chilli beer (actually i had a half-bottle of one of those last night, maybe that was the cause of the natural break requirement) and a few stout/porter-like things which are a bit heavy for this weather - so after i finish this lime cordial and soda I'm going to have to find something else to drink today.

I really need to get back into regular cycling but it's not going to happen unless I can find some route that is safe and enjoyable and not too boring to do it on. That's a big part of why I've been so slack at it since coming back from Perth (and all my cycling mates moved interstate or os). Chances are this is just another last ride for another 6 months, but time will tell ...

Update: Sunday I went to see a friend at Taperoo and he took his young family down to the beach. Apart from a couple of spots that isn't such a bad ride so maybe I can do the Outer Harbour loop - it's about 90 minutes on a good day. Even though one road is a bit truck laden there's plenty of room. Given the weather this week I had thought of hitting the beach a couple of times but today it was already 41 by 10:30 - and a burning northerly wind - so I might not be going anywhere after the washing machine is delivered. Monday I went for a loop through the city and around about to buy a washing machine and do a bit of shopping and that was pretty much the limit - I seemed to catch every red light and waiting in the full sun on newly laid asphalt on a still day really takes it out of you. The LCD panel on my speedo even started to turn black so hell only knows how hot it was out on the road. And it's warming up tomorrow ;-)

Update: Well this has now become very strange weather. 40+ in summer is as common as sheep shit around here but it ended up hitting 45.1 at 2pm which is a bit on the extreme side even for here (i believe it may be a record for Kent Town). And now thunderstorms are coming? They are looking to miss me, but if they hit it'll turn the place into a sauna. Just saw a nice fork of lightning about 18 seconds away (~6km). Time for beer and a light-show?

Update:

Only 4th hottest day on record after all.

Fast Face Detection in One Line of Code

**** **** ****

* * * * * *

**** **** ****

**** **** ****

* * * * * *

**** **** ****

**** **** ****

* * * * * *

**** **** ****

(blogger is broken: this is supposed to be a pic)

Based on the work of the last couple of weeks I've written up a short article / paper about the algorithm and created a really basic android demo. I think it's something novel and potentially useful but i'm not sure if i'm just suffering from an island effect and it's already been tried and failed. I tried to find similar research but once outside of the software engineering realm the language changes too much to even know if you're looking at the same thing when you are. Statistics gives me the willies.

Since I did this at home and am not acquainted with the academic process I didn't know what else to do. None of my peers, acquaintances or contacts do similar work for a hobby.

I have created a basic 1990s style `home' page on my ISP's web server to store the paper and application and anything else I may want to put there. This may move in the future (and perhaps this blog will too).

And yes, it really does detect faces in a single line of code (well, significant code) - and we're talking C here, not apl - and on SIMD or GPU hardware it is super duper fast. I haven't even looked at optimising the OpenCL code properly yet.

I wasn't going to but I ended up creating an optimised NEON implementation; I kinda needed something to fill the timing table out a bit in the article (at the time; it filled out afterwards) but I guess it was worth it. I also wrote up a NEON implementation of the LBP code i'm using this afternoon and because it is only 4 bits it is quite a bit faster than the LBP8,1u2 code I used last time, although in total it's a pretty insignificant part of the processing pie.

Now perhaps it is time for summer holidays.

And just to help searchers: This is an impossibly simple algorithm for a very fast image classifier and face detector which uses local binary patterns (LBP) and can be implemented directly using single instruction multiple data (SIMD) processors such as ARM/NEON and scales very well to massively multi-core parallel processors including graphics processing units (GPU) and application processing units (APU). OpenCL, CUDA, AMD, NVidia.

Further beyond the ROC - training a better than perfect classifier.

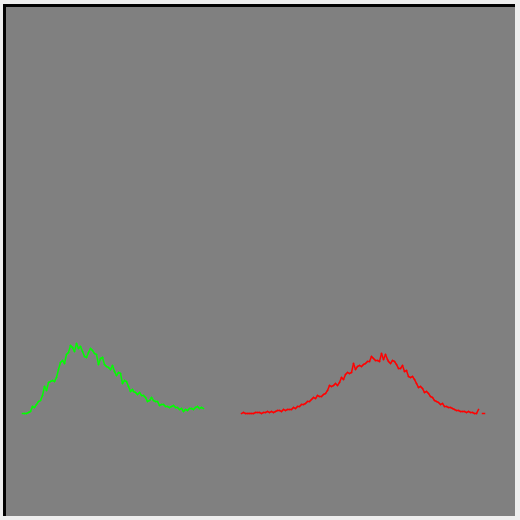

I added a realtime plot of the population distribution to my training code, and noticed something a little odd. Although the two peaks worked themselves apart they always stayed joined at the midpoint. This is not really strange I guess - the optimiser isn't going to waste any effort trying to do something I didn't tell it to.

So with a bit of experimentation I tweaked the fitness sorting to produce a more desirable result. This allows training to keep improving the classifier even though the training data tells it it is 'perfect'. It was a little tricky to get right because incorrect sorting could lead to the evolution getting stuck in a local minimum but I have something now that seems to work quite well. I did a little tuning of the GA parameters to speed things up a bit and added a bit more randomisation to the mutation step.

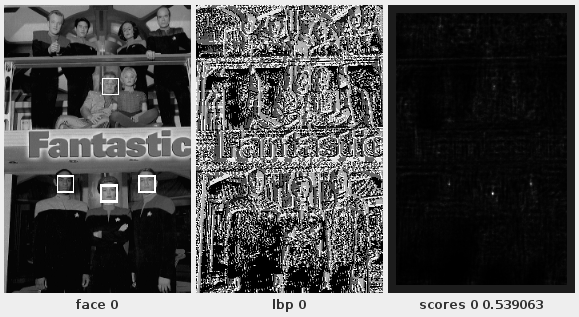

The black line is the ROC curve (i.e. it's perfect), green is the positive training set, red is the negative. For the population distribution the horizontal range is the full possible range of the classifier score, and vertically it's just scaled to be useful. The score is inverted as part of the ROC curve generation so a high score is on the left.

The new fitness collator helps push the peaks of the bell curves outwards too moving the score distribution that bit closer to the ideal for a classifier.

The above is for a face detector - although I had great success with the eyes I wanted to confirm with another type of data. Eyes are actually a harder problem because of scale and distinguishing signal. Late yesterday I experimented with creating a face classifier using the CBCL data-set but I think either the quality of the images is too low or I broke something as it was abysmal and had me thinking I had hit a dead-end.

One reason I didn't try using the Color FERET data directly is I didn't want to try to create a negative training set to match it. But I figured that since the eye detector seemed to work ok with limited negative samples, so should a face detector so I had a go today. It works amazingly well considering the negative training set contains nothing outside of the Color FERET portraits.

Yes, it is Fantastic.

I suspect the reason the Color FERET data worked better is that due to the image sizes they are being downsampled - with the same algorithm as the probe calculation. So both the training data and test data are being run through the same image scaling algorithms. In effect the scaling is part of the LBP transform on which the processing runs.

This is using a 16x16 classifier with a custom 5-bit LBP code (mostly just LBP 8,1).
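For reference, this is roughly what a plain LBP code looks like. It is the basic 3x3 variant (8 neighbours, no interpolation of the diagonal samples), not the custom 5-bit code used here, and the bit ordering is an arbitrary choice for the sketch.

```c
#include <assert.h>
#include <stddef.h>

// Basic 3x3 local binary pattern at pixel (x, y): each of the 8 neighbours
// contributes one bit, set when the neighbour is >= the centre pixel.
// Bits run clockwise starting from the top-left neighbour.
// The caller must keep (x, y) at least one pixel inside the image.
static unsigned lbp8(const unsigned char *img, size_t stride, int x, int y)
{
    static const int ox[8] = { -1, 0, 1, 1, 1, 0, -1, -1 };
    static const int oy[8] = { -1, -1, -1, 0, 1, 1, 1, 0 };

    assert(x >= 1 && y >= 1);
    const unsigned char c = img[(size_t)y * stride + (size_t)x];
    unsigned code = 0;

    for (int i = 0; i < 8; i++) {
        unsigned char n = img[(size_t)(y + oy[i]) * stride + (size_t)(x + ox[i])];
        code |= (unsigned)(n >= c) << i;
    }
    return code;
}
```

A shorter code (4 or 5 bits) would use fewer comparisons per pixel, which is presumably part of why it is faster.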

The classifier response is strong and location specific as can be seen here for a single scale. This detector here is very size specific but i've had almost as good results from one that synthesized some scaling variation.

I couldn't get the young klingon chick to detect no matter what I tried - it may just be her pose but her prosthetics do make her fall outside of the positive training set so perhaps it's just doing its job.

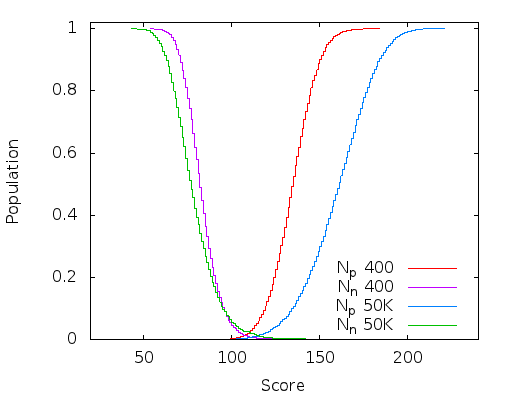

Beyond the ROC

I mentioned a couple of posts ago that i was hitting a wall trying to improve the classifier using a genetic algorithm because the fitness measure i'm using reached 'perfect' ... well I just worked out how to go further.

Here is a plot of the integral of the population density curve (it's just the way it comes out of the code, the reader will have to differentiate this in their head) after 400 and 50K generations of a 16x16 classifier. I now have the full-window classifier working mostly in OpenCL so this only took about 20 minutes.

Although a perfect classifier just has a dividing line separating the two populations, it is clear that these two (near) perfect classifiers are not equal (the above plot was generated from a super-set of the training data, so are not perfect - there should be no overlap at the base of the curves). The wider and deeper the chasm between the positive and negative population, the more robust the classifier is to noise and harder to classify images.

400 generations is the first time it reached a perfect ROC curve on the training data. I just let it run to 50K generations to see how far it would get and although most of the improvement had been reached by about 10K generations it didn't appear to encounter an upper bound by the time I stopped it. Progress is quite slow though and there is probably cause to revisit the genetic algorithm i'm using (might be time to read some books).

This is a very significant improvement and creates much more robust detectors.

Because the genetic algorithm is doing the heavy lifting all I had to do was change the sorting criteria for ranking the population. If the area under the ROC curve is the same for each individual then the distance between the mean positive and mean negative score is used as the sort key instead.
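The ranking change can be sketched as a two-level sort key. The struct and function names below are illustrative rather than taken from the training code, and the sign convention for the separation (positive mean minus negative mean) is an assumption.

```c
#include <assert.h>
#include <stdlib.h>

// Fitness of one individual in the population.
typedef struct {
    double auc;        // area under the ROC curve on the training set
    double separation; // mean positive score minus mean negative score
} fitness;

// Mean of n classifier scores.
static double mean_score(const double *s, int n)
{
    assert(n > 0);
    double sum = 0;
    for (int i = 0; i < n; i++)
        sum += s[i];
    return sum / n;
}

// qsort comparator, best individual first. Higher AUC wins; when the AUC
// is equal the larger distance between the class means wins.
static int fitness_cmp(const void *a, const void *b)
{
    const fitness *fa = a, *fb = b;

    if (fa->auc != fb->auc)
        return fa->auc > fb->auc ? -1 : 1;
    if (fa->separation != fb->separation)
        return fa->separation > fb->separation ? -1 : 1;
    return 0;
}
```

Once every individual reaches an AUC of 1 the first test never fires, so the population keeps being ranked by how far apart the two score distributions sit.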

The Work

So i'm kind of not sure where to go with this work. A short search didn't turn up anything on the internets and recent papers are still mucking about with MP-LBP and integral images on GPUs which I found 2 years ago are definitely not a marriage made in heaven. The eye detector result seems remarkable but quite a bit of work is required to create another detector to cross-check the results.

The code is so simple that its effectiveness defies explanation - until the hidden maths is exposed.

I started writing it up and I've worked out where most of the mathematics behind it come from and it does have a sound basis. Actually I realised the algorithm is just an existing common algorithm but with a single specific decision causing almost all of the mathematics to vanish through simplification. I first looked at this about 18 months ago but despite showing some promise it's just been pretty much sitting idle since.

tablet firmware

I had my occasional look for updated firmware for my tablet yesterday - an Onda V712 Quad - and was pleased to find one came out late November. All firmwares, I guess.

For whatever reason this particular tablet seems to be remarkably uncommon on the internet. Apart from the piss-poor battery life it's pretty nice for its price - although that is a fairly big issue I guess.

I apparently bricked it running the firmware updater via microsoft. Nothing seemed to be happening/it said the device was unplugged, so after a few minutes I unplugged it. Don't really know what happened but the list of instructions that then popped up in a requester managed to get it back on track. Not that I needed it this time, but every time I go into the recovery boot menu I forget which button is 'next' - the machine only has power and home - and always seem to press the wrong one, but luckily I didn't really need it. I know from previous readings that the allwinner SOCs are completely unbrickable anyway, so i wasn't terribly concerned about it.

After a bit of confusion with the - i presume - ipad like launcher they decided to change to, I got it back to where it was before. I didn't even need to reinstall any apps.

So although it's still android 4.2 (4.4 is out for some of their tablets but not this one, not sure if it will get it, Update 11/1/14: 4.4 is now up for my tablet but i haven't tried it yet) they fixed a few things.

The main one for me is that media player service plays streaming mp3 properly now: previously my internoderadioplayer app wouldn't play anything on it. I might be motivated to fix a couple of things and do another release sometime soonish.

Other than that it just feels a bit snappier - although I really wouldn't mind if you could set the display to update at some low frame-rate like 12-15fps to save power. That full-screen render can't be cheap on battery and most of the animations just give me the shits to start with.

I'm still a bit annoyed how they changed the order of the software buttons along the bottom - having back in the corner followed by the rest made much more physical sense with all the varying screen sizes out there. Having them centred is a pain in the arse, and I keep accidentally activating that 'google' thing on that bizarre circular menu thing off the home button when trying to scroll scroll scroll through long web pages because there's no fucking scrollbar on anything anymore. I don't even know what that google thing is for (read: i have no interest in finding out) but I sure wish I could disable it from ever showing up.

Pretty much only use it as a web browser for the couch anyway - the screen is too shiny to use outside (despite a very high brightness). Typing on a touch screen is utterly deplorable, and playing games on one isn't much better. It's just passable as a PDF reader, although I wish mupdf handled off-set zoom with page-flipping better (it's a hard thing to get right though). I'm finding the over-bright black-on-white becoming somewhat irritating to read for very long so i might have to patch it for a grey background anyway. Actually it's quite useful for finding papers - for some reason Google search from my desktop has decided to keep returning stuff it thinks I want to read, rather than what I'm searching for. So that just means I keep finding the same fucking papers and articles i've already read - which isn't much use to me. Fortunately for whatever reason the tablet doesn't have this problem, ... yet.

I had to turn off javascript in firefox because it kept sucking battery (and generally just kept sucking full stop), any websites or features that rely on it just won't work - if that breaks a web site then I just don't bother returning. I've no interest in commenting on blogs or forums from it so it doesn't break much for me. Amazing how much smoother the internet is without all that crap. Everything has layout problems too because I force a typeface and font size that's big enough to read; but I have that problem on every browser. Dickhead web designers.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!