About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

java 9

Yesterday I had a quick look at java 9 - i hadn't installed it earlier as I was waiting for GA and I didn't really have a need. After a long silent spell I don't get many hits these days to this site so I don't know if anyone will read this but whatevers.

I guess the main new thing is the module system. It probably has some warts but overall it looks quite decent. maven and `aficionados' of other modularisation systems seem to be upset about some things with it but I mean, maven?

I did a bit of playing with zcl to see how it could be modularised. At least on paper it's a very good fit for this project due to the native code used. In practice it seems a little clumsy, at least at my first attempt.

I decided to separate it into two parts - the main reusable library and the tools package. This required adding another top-level directory for each module (as the module name is used by the compiler), and a couple of simple module-info.java files.

au.notzed.zcl/module-info.java

    module au.notzed.zcl { // module name
        exports au.notzed.zcl; // package name
        requires java.logging; // module name
    }

au.notzed.zcl.tools/module-info.java

    module au.notzed.zcl.tools { // module name
        exports au.notzed.zcl.tools; // package name
        requires au.notzed.zcl; // module name
    }

With the source moved from src/au to au.notzed.zcl/au or au.notzed.zcl.tools/au as appropriate (sigh, yuck). Note that the name is enforced and must match the module name although the rest of the structure and where the module name exists in the path is quite flexible; here i obviously chose the simplest/shortest possible because I much prefer it that way.

The filenames in the Makefile were updated and a tiny change is all that was needed to create both java modules at once.

Makefile

zcl_JAVAC_FLAGS=-h build/include/zcl --module-source-path .

Yes I also moved to using javac -h to create the jni header files as I noticed javah is now deprecated.

Ok that was easy. Now what?

The next part is to create a jmod file. This can be platform specific and include native libraries (and other resources - although i'm not sure how flexible that is).

The manual commands are fairly simple. After i've had a bit of play with it I will incorporate it into java.make with a new _jmods target mechanism.

    build/jmods/zcl.jmod: build/zcl_built
    	-rm $@
    	mkdir -p build/jmods
    	jmod create \
    	  --class-path build/zcl/au.notzed.zcl \
    	  --libs jni/bin/gnu-amd64/lib \
    	  --target-platform linux-amd64 \
    	  --module-version $(zcl_VERSION) \
    	  $@

As an aside it's nice to see them finally moving to gnu-style command switches.

Now this is where things kind of get weird and I had a little misreading of the documentation at first (as a further aside I must say the documentation for jdk 9 is not up to its normal standards at all, it doesn't even come with man pages). While a modularised jar can be used like any other at runtime whilst adding the benefits of encapsulation and dependency checking a jmod really only has one purpose - to create a custom JRE instance. As such I initially went the jmod route for both zcl and zcl.tools and found it was a bit clumsy to use (generating a whole jre for a test app? at least it was "only" 45MB!). The whole idea seems to somewhat fight against the 'write once run anywhere' aspect of java, even though i've had to create the same functionality separately for delivering desktop applications myself. For example it would be nice if jlink could be used to create a multi-platform distribution package for your components without including the jre as well (i.e. lib/linux-amd64, lib/windows-amd64 for native libraries and package up all the jars etc), but I guess that isn't the purpose of the tool and it will be useful for me nevertheless. There is definitely some merit to precise versioning of validated software (aka configuration management) although these days with regular security updates it spreads the task of keeping up to date a bit further out.

One nice thing is that the module path is actually a path of directories and not modules. No need to add every single jar file to the classpath, you just dump them in a directory. This wasn't possible with the classpath because the classpath was what defined the dependencies (albeit in a pretty loose way).
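To see the "directory of modules" idea from inside Java, here is a minimal sketch using the standard `java.lang.module.ModuleFinder` API, which scans directories in much the same way. The class name `ListModules` and the `build/jars` default directory are just placeholders for illustration, not anything from zcl.

```java
import java.lang.module.ModuleFinder;
import java.lang.module.ModuleReference;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Set;
import java.util.TreeSet;

final class ListModules {
    // Return the names of every module found in the given module-path entries.
    // Each entry may be a directory of modular jars, much like --module-path.
    static Set<String> moduleNames(Path... entries) {
        Set<String> names = new TreeSet<>();
        for (ModuleReference ref : ModuleFinder.of(entries).findAll())
            names.add(ref.descriptor().name());
        return names;
    }

    public static void main(String[] args) {
        // "build/jars" is a hypothetical directory, pass your own as an argument.
        Path dir = Paths.get(args.length > 0 ? args[0] : "build/jars");
        moduleNames(dir).forEach(System.out::println);
    }
}
```

Dump a few modular jars into one directory and point it at that; no per-jar path entries are needed.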

X-platform?

As I only cross-compile my software for toy platforms I was also curious how this was supposed to work ...

I found a question/answer on stack overflow that stated unequivocally that jlink was platform specific and that was that. This is incorrect. jlink is platform agnostic and you must supply the location of the system JDK jmods. The only problem is right now the only microsoft windows jdk available is an executable installer and no tar is available, one only hopes this is a temporary situation. So the binary must first be run inside some microsoft windows instance and then I believe I can just copy the files around. Maybe it will work in wine, but either way I haven't checked this yet.

GNU GPL?

One issue I do see as a free software developer is that once you jlink your modules, they are no longer editable (or cross platform). By design the modules are stored in an undocumented and platform-specific binary format. So here's the question ... how does this affect the GNU General Public License, and particularly the GNU Lesser General Public License? The former perhaps isn't much different from any statically linked binary - because GPL means all source must be GPL compatible. But in the case of the LGPL it is possible to link with non-GPL components - only in the case that the LGPL components may be replaced by the receiver of the software. In dynamic linking this can be achieved by ensuring all LGPL components are isolated in their own library and simply changing the load path or library file, but for static linking this requires that all object files are available for re-linking (hah lol, like anyone gives a shit, but that's the contract). So anyone distributing a binary will have to distribute all the modules that were used to build it together with the source of the LGPL modules. Yeah I can see that happening. Or perhaps the module path might be enough, and there is a mechanism for patching (albeit intended for development purposes).

Still, I think an article may be required from The Free Software Foundation to clarify the new java situation.

It would also be nice if jlink has support for bundling source which addresses much of the GPL distribution issue. Obviously it does because the jdk itself includes its own source-code but it gets put into lib/ which seems an odd place to put it (again i don't know how flexible this structure is although it appears to be quite limited).

Update: Ahh covered by the classpath exception I guess, at least for the JRE. If one builds a custom JRE though any third party modules would also require the classpath exception? Or would including the source and all modules used to create the JRE suffice? Hmmm.

JDK 9

I would guess that the modularisation will have slow uptake because it's quite a big change and locks your code into java 9+, and it may evolve a little over the next jdk or two. I'm in two minds about using it myself just yet because of this reason and also because NetBeans has failed to deliver any support for it so far that i can tell (I was disappointed to see Oracle abandon NetBeans to apache which is most probably part of it, and they're too busy changing license headers to get any real work done). There will also likely be blowback from those invested in existing systems, merits or not. And then there's dealing with the fuckup of a situation that android/"java" is in.

I myself will poke around with it for a while and merge the functionality into java.make, it's actually a pretty close fit to everything i've done (which is a nice validation that my solution wasn't far off) and will simplify it even if i might have to make a few minor changes like platform names.

It's a pretty good fit for my work but will require a bit of setup so I won't rush into it (a couple dozen lines of shell is doing a good enough job for me). Besides it's probably worth getting some experience with it before committing to a particular design. The NetBeans situation will also be a bit of a blocker and i'll probably wait for 9 to be released first.

zcl 0.6

Yes it still lives. I've just uploaded an update to zcl.

A bunch of bugfixes, new build system, more robustness, and OpenCL 2.1 support.

There are still some things i'm experimenting with - primarily the functional/task stuff as it's just not flexible enough - but it's stable and robust and easy to work with so i'm no longer using JOCL for anything at work.

On a personal note I still haven't really gotten back into hacking and i had a short sojourn into facebookland so i haven't had much to write about. It's mostly been work, very poor sleep, and drinking! Oh and I started wearing kilts ...

Zed's not dead

I just haven't been coding or doing really anything terribly interesting lately!

And so it goes.

Update: 29.11.16

And so it goes ...

Using GNU make to build Java software

I finally finished writing an article about Java make i started some time ago, multiple times. I was going through cleaning up a new release of dez (still pending) and decided to fill it out with the junit stuff and then write up what I actually ended up with.

The following few lines are now the complete makefile for dez. This supports `jar' (normal build target), `sources' (ide source jar), `javadoc' (ide javadoc jar), `dist' (complete rebuildable source), and now even `test' or `check' (unit and integration tests via JUnit 4) targets. The stuff included from java.make is reusable and is under 200 lines once you exclude voluminous comments and documentation.

    java_PROGRAMS = dez

    dez_VERSION=-1
    dez_JAVA_SOURCES_DIRS=src
    dez_TEST_JAVA_SOURCES_DIRS=test

    DIST_NAME=dez
    DIST_VERSION=-1.3
    DIST_EXTRA=COPYING.AGPL3 README Makefile

    include java.make

The article is over on my home page at Using GNU Make for java under my software articles section.

Images, Pixels, Java Streams

This morning I wrote and published an article about writing an image container class for Java which supports efficient use of Streams. It is on my local home page under Pixels - Java Images, Streams.

Although there is much said of it, there is still quite a bit unsaid about how many wrong-footed experiments it took to accomplish the seemingly obvious final result. The code itself is now (or will be) part of an unpublished library I apparently started writing just over 12 months ago for reasons I can no longer recall. It doesn't have enough guts to make publishing it worthwhile as yet.

I'm also still playing with fft code and toying with some human-computer-interaction ideas.

It was the best of times, it was the worst of times. More fft, more bang for your buck. Same old less bang for your buck.

After posting the result I kept experimenting with the code I `live blogged' about yesterday. I did some inlining but was primarily experimenting with multi-threading. I also looked at a decimation in time algorithm (which i kept fucking up until I got it working today), and a couple of other things too, as will become apparent.

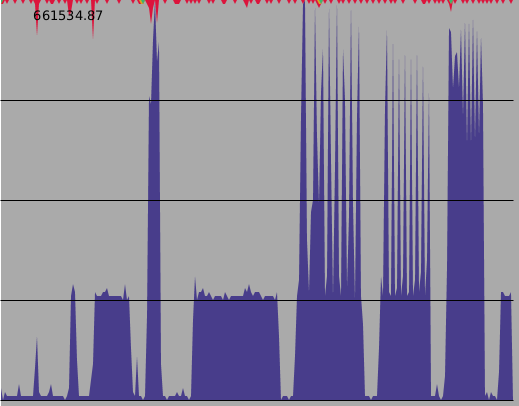

First a picture of a thousand words.

Now the words.

This picture shows the CPU load over time (i'm sure you all know what that is) as I ran a specific set of tests against 8x runs of a 2^24 complex forward transform. The lines represent each core available on this computer. I put some 2s sleep calls between steps to make them distinguishable. Additionally each horizontal pixel represents 250ms which is about the minimum sampling time which gives usable results.

Refer to earlier posts as to what the names mean.

- The first spike is starting the Java application. Oh boy, look at all that time and resources wasted! Java is the total suxors!!

- The next spike (about 10% utilisation) is initialising my "rot0" implementation. It uses 1 set of Wn exponents per log level of the calculation so has very little setup.

- The 1-load skinny spike is initialising jtransforms. I wonder how it's creating its tables because it uses a lot of memory for them. It's clearly not using "[sic]Math".cos() for it.

- The 1-load long spike is initialising "table2". Lots of calls to "[sic]Math".sin(), and "[sic]Math".cos().

- The tall skinny spike is initialising the algorithm as discussed yesterday - I made some trivial alterations so it runs multi-threaded.

- There is now a 4 second pause because the next (newer but based on yesterday's code) implementation just uses the tables generated by the previous step and so doesn't register.

That's the setup out of the way, the first 3rd or so of the plot. It's important but not as important as the next bit.

- The long 1-load box is executing rot0 8 times over the data. As expected it takes the longest but fully occupies the CPU it is on while it's running.

- The next haircomb result is from jtransforms. Visually it's only using about 50% of the available cpu cycles above 1 core. The 8 separate transforms executed as part of this benchmark are clearly visible.

- Then comes my (radix-4 only) implementation from yesterday. Despite its somewhat trivial multi-threading code it's basically the same; but runs the fastest-so-far.

- Then comes the modified code. A nice solid block and a somewhat shorter execution time. In this case at least 4xthreads are used for all passes including the first. It's still wasting about a core, at least according to my monitor.

- The last spike is just the "rot0" algorithm starting again.

Well that's it I suppose. It utilises more of the available cpu resource and executes in a shorter time. But how was that achieved?

As Deane would say, ``Well Rob, i'm glad you asked''.

It only required a couple of quite simple steps. Firstly I copied the "radix4" routine into the inner loop of "radix4_pass". The jvm compiler won't do this itself without some options and it makes quite a difference of itself. Then I copied this to another "radix6_pass" which takes additional arguments that define a sub-set of a full transform to calculate. I then just invoke this in parts from 4x separate threads, and keep doing that sort of thing until I hit the "logSplit" point and subsequently proceed as before. It was a quick-and-dirty and could be cleaned up but that probably won't add much performance.

It was a bit of mucking about with the addressing logic but once done it's actually a fairly minor change: yet it results in the best performance by far.
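As a rough illustration of the "invoke a pass in parts from separate threads" idea, here is a minimal sketch. It is not the code discussed above: to keep it short it uses a plain radix-2 DIF pass with twiddles computed on the fly instead of the inlined radix-4 pass, and the names `SplitPass`, `pass` and `passThreaded` are made up for the example. The only point is that every butterfly in a pass is independent, so a pass can take a sub-range to work on.

```java
import java.util.Arrays;

final class SplitPass {
    // One radix-2 DIF pass over butterflies [from, to) of an interleaved
    // (re, im) array. 'half' is the distance between the two inputs of a
    // butterfly, in complex elements.
    static void pass(float[] x, int half, int from, int to) {
        for (int t = from; t < to; t++) {
            int j = t % half;                          // position inside the block
            int a = 2 * ((t / half) * 2 * half + j);   // top input (re index)
            int b = a + 2 * half;                      // bottom input (re index)
            double w = -Math.PI * j / half;            // angle of W^j for this block size
            float wr = (float) Math.cos(w), wi = (float) Math.sin(w);
            float sr = x[a] + x[b], si = x[a + 1] + x[b + 1];
            float dr = x[a] - x[b], di = x[a + 1] - x[b + 1];
            x[a] = sr;
            x[a + 1] = si;
            x[b] = dr * wr - di * wi;
            x[b + 1] = dr * wi + di * wr;
        }
    }

    // Run the same pass split into 'parts' butterfly ranges, one thread each.
    static void passThreaded(float[] x, int half, int parts) {
        int total = x.length / 4;                      // n/2 butterflies for n complex values
        Thread[] workers = new Thread[parts];
        for (int p = 0; p < parts; p++) {
            final int from = (int) ((long) total * p / parts);
            final int to = (int) ((long) total * (p + 1) / parts);
            workers[p] = new Thread(() -> pass(x, half, from, to));
            workers[p].start();
        }
        try {
            for (Thread w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
    }

    public static void main(String[] args) {
        int n = 1 << 10;
        float[] serial = new float[2 * n];
        for (int i = 0; i < serial.length; i++) serial[i] = (i * 37 % 101) / 101f;
        float[] threaded = serial.clone();
        pass(serial, n / 2, 0, n / 2);
        passThreaded(threaded, n / 2, 4);
        System.out.println("identical: " + Arrays.equals(serial, threaded));
    }
}
```

Starting fresh threads per pass is the quick-and-dirty way; a real implementation would more likely keep a pool around.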

At this point i've explored all the issues and am working on a complete implementation which ties it all together. I think I will write two implementations: one using fully expanded tables for "ultimate performance" and another which calculates the Wn exponents on the fly for "ultimate size". Today I got a DIT algorithm working so I will fill out the API with forward/inverse, pairs-of-real, real-input, perhaps in-order results, and a couple of other useful things to aid convolution performance. Oh and 2D of all that.

But now for a little rant.

Why is software still so fucking shithouse?

So as part of this effort, I ended up having to write my own cpu load monitor. The only one I had available on slackware only has a tiny graph and is mostly just a GUI version of top. The dark slate blue is user time, the grey area is idle, crimson is cpu load, irq is medium sea green and the io wait is golden rod. The kernel doesn't report particularly accurate values in /proc/stat but it sufficed.
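For the curious, the core of such a monitor is tiny. The following is only a sketch of the idea and not the monitor used for the plot: it samples the per-cpu lines of /proc/stat twice and turns the counter deltas into a load figure. The class name `CpuLoad` is made up, and treating io wait as idle time is an assumption of this sketch (the plot above shows it as its own colour).

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;

final class CpuLoad {
    // Parse one "cpuN user nice system idle iowait irq softirq steal ..." line
    // from /proc/stat into its counters (the leading label is dropped).
    static long[] parse(String line) {
        String[] f = line.trim().split("\\s+");
        long[] v = new long[f.length - 1];
        for (int i = 1; i < f.length; i++) v[i - 1] = Long.parseLong(f[i]);
        return v;
    }

    // Fraction of time spent busy between two samples of the same cpu line.
    // Only the first 8 fields are summed since guest time is already part of user.
    // Assumption: idle (field 3) and iowait (field 4) both count as not busy.
    static double load(long[] prev, long[] cur) {
        long total = 0;
        for (int i = 0; i < cur.length && i < 8; i++) total += cur[i] - prev[i];
        long idle = (cur[3] - prev[3]) + (cur[4] - prev[4]);
        return total == 0 ? 0.0 : (double) (total - idle) / total;
    }

    // Read the per-core lines ("cpu0 ...", "cpu1 ...") from /proc/stat.
    static List<String> sample() throws IOException {
        return Files.readAllLines(Paths.get("/proc/stat")).stream()
            .filter(l -> l.matches("cpu\\d+ .*"))
            .collect(Collectors.toList());
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        List<String> before = sample();
        Thread.sleep(250);   // the 250ms sampling period mentioned earlier
        List<String> after = sample();
        for (int i = 0; i < after.size(); i++)
            System.out.printf("cpu%d %.2f%n", i, load(parse(before.get(i)), parse(after.get(i))));
    }
}
```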

But I had intended to annotate this image with some nice 'callouts' and shadowed boxes and whatnot so i wouldn't have to write those 1000 words just to explain what it was showing. However ...

gimp has turned into a "professional photographer editing suite" - i.e. a totally useless piece of junk for most of the planet (and pro photographers won't use it anyway?). So the only other application i had handy was openoffice "dot org" (pretentious twats) draw. I even started to track down the dependencies of inkscape to build that but gtkmm? Yeah ok. But openoffice: Jesus H fucking-A-cunt-of-a-christ, what a load of shit that is. I can't imagine how many millions of dollars that piece of rubbish has cost in terms of developer hours and wasted customer time (aka luser `productivity') but i'm astounded by just how terrible it is. It runs very slow. Has some weird-arse modality/GUI update bullshit going on. Is a total pain to use (in terms of number of mouse clicks required to do the most trivial of operations). And above that it's just buggy as hell. I'd call up the voluminous settings "dialogue" to change a background colour and then it would decide to throw any changes i'd made if i didn't explicitly set it every time.

But it's in good company and about as shit as any `office' software has ever been since the inane marketroid idea to lock users into fucktastic `software ecosystems' was first conceived of. Fuck micro$oft and the fucking hor$e it fucking rode in on.

I was so pissed off I spent the next 4+ hours (till 5am) working on my own structured graphical editor. Ok maybe that's a bit manic and it'll probably go about as far as the last 4 times I did the same thing, each after I tried using a bit of similar software to accomplish a similar seemingly-simple goal ... but ``like seriously''?

To phrase it in the parlance of our time: what in the actual fuck?

Writing a FFT implementation for Java, in real-time

Just for something a bit different this morning I had an idea to do a record of developing software from the point of view of a "live blog". I was somewhat inspired by a recent video I saw of Media Molecule where they were editing shader routines for their outstandingly impressive new game "Dreams" on a live video stream.

Obviously I didn't quite do that but I did have a hypothesis to test and ended up with a working implementation to test that hypothesis, and recorded the details of the ups and downs as I went.

Did I get a positive or negative answer to my question?

To get the answer to that question and to get an insight into the daily life of one cranky developer, go have a read yourself.

twiddle dweeb twiddle dumb

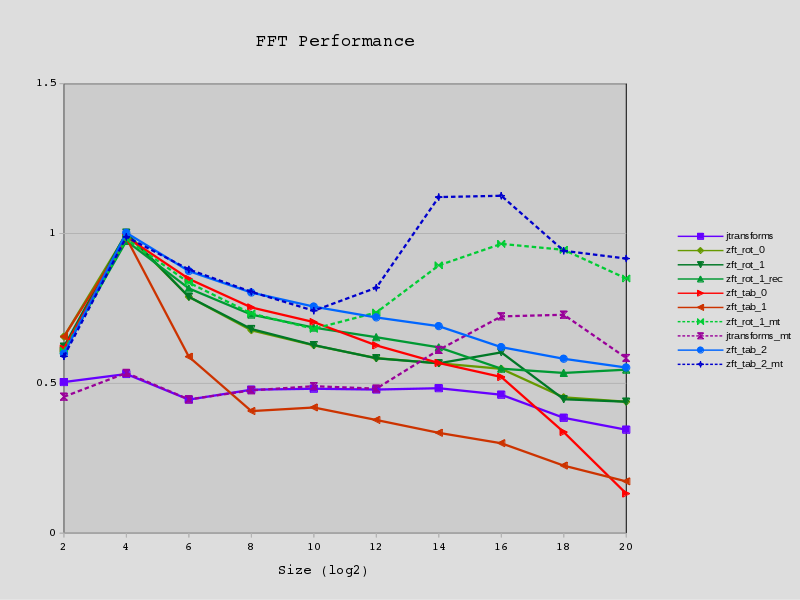

I started writing a decent post with some detail but i'll just post this plot for now.

Ok, some explanation.

I tried to scale the performance by dividing the execution time per transform by N log2 N. I then normalised using a fudge factor so the fastest N=16 is about 1.0.
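In code the scaling amounts to something like the sketch below. The helper names and the timings in `main` are invented placeholders just to show the arithmetic; they are not measurements from the plot.

```java
final class ScaledTime {
    // Execution time per transform divided by N log2 N, times a fudge factor.
    static double scaled(double secondsPerTransform, int logN, double fudge) {
        double n = (double) (1L << logN);
        return fudge * secondsPerTransform / (n * logN);
    }

    // Choose the fudge factor so that a reference measurement maps to 1.0,
    // e.g. the fastest N=16 result.
    static double fudgeFor(double refSeconds, int refLogN) {
        return 1.0 / scaled(refSeconds, refLogN, 1.0);
    }

    public static void main(String[] args) {
        // Placeholder timings only, not real results.
        double fudge = fudgeFor(64e-9, 4);
        System.out.println("N=2^4  -> " + scaled(64e-9, 4, fudge));
        System.out.println("N=2^10 -> " + scaled(30e-6, 10, fudge));
    }
}
```

A transform that scales perfectly with N log2 N would sit on a flat line at 1.0; anything above that is time lost to something else, such as cache misses.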

My first attempt just plotted the relative ratio to jtransforms but that wasn't very useful. It did look a whole lot better though because I used gnuplot, but this time i was lazy and just used openoffice. Pretty terrible tool though, it feels as clumsy as using microsoft junk on microsoft windows 95. Although I had enough pain getting good output from gnuplot via postscript too, but nothing a few calls to netpbm couldn't fix (albeit with its completely pointless and useless "manual" pages which just redirect you to a web page).

Well, some more on the actual information in the picture:

- As stated above, the execution time of a single whole transform is scaled by N log_2 N and scaled to look about right.

- I run lots of iterations (2^(25 - log N)) in a tight loop and take the best of 3 runs.

- All of mine use the same last two passes, so N=2^2 and N=2^4 are "identical". They are all DIF in-place, out-of-order.

- The '_mt' variants with dashed lines are for multi-threaded transforms; which are obviously at a distinct advantage when measuring execution time.

- My multi-threading code only kicks in at N > 2^10 ('tab_2' above has a bug and kicks in too early).

- The 'rot' variants don't use any twiddle factor tables - they calculate them on the fly using rotations.

- 'rot_0' is just of academic interest as repeated rotations cause error accumulation for large N. Wrong again! I haven't done exhaustive tests but an impulse response and linearity test puts it equivalent or very close to every other implementation up to 2^24.

- 'rot_1*' compensates with regular resynchronisation with no measurable runtime cost. ^^^ But why bother eh?

- The 'tab' variants use a more 'traditional' lookup table.

- The 'rec' variant (and 'tab_2', 'tab_2_mt', and 'rot_1_mt') use a combined breadth-first/depth-first approach. It's a lot simpler than it sounds.

- I only just wrote 'tab_2' a couple of hours ago so it includes all the knowledge i've gained but hasn't been tuned much.
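To make the 'rot' idea from the list above a bit more concrete, here is a minimal sketch of generating twiddle factors by repeated rotation, with optional resynchronisation from Math.cos()/Math.sin(). It is an assumption about the general technique rather than the actual 'rot_0' or 'rot_1' code: it works in double precision, uses the forward sign convention W = exp(-2*pi*i/n), and the name `RotTwiddle` and the resync interval are made up.

```java
final class RotTwiddle {
    // Generate W^k = exp(-2*pi*i*k/n) for k = 0..n-1 by multiplying by W^1
    // each step, and return the largest distance from the directly computed
    // value. If resync > 0 the running value is recomputed exactly every
    // 'resync' steps; resync == 0 means never.
    static double maxError(int n, int resync) {
        double step = -2 * Math.PI / n;
        double cr = Math.cos(step), ci = Math.sin(step);   // W^1
        double wr = 1, wi = 0;                             // W^0
        double worst = 0;
        for (int k = 0; k < n; k++) {
            if (resync > 0 && k % resync == 0) {
                wr = Math.cos(step * k);
                wi = Math.sin(step * k);
            }
            double er = wr - Math.cos(step * k);
            double ei = wi - Math.sin(step * k);
            worst = Math.max(worst, Math.hypot(er, ei));
            // rotate: (wr + i wi) * (cr + i ci)
            double t = wr * cr - wi * ci;
            wi = wr * ci + wi * cr;
            wr = t;
        }
        return worst;
    }

    public static void main(String[] args) {
        int n = 1 << 16;
        System.out.println("no resync:       " + maxError(n, 0));
        System.out.println("resync every 64: " + maxError(n, 64));
    }
}
```

The rotation costs a complex multiply per step but needs no table at all, which is the trade being made.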

So it turned out and turns out that the twiddle factors are the primary performance problem and not the data cache. At least up to N=2^20. I should have known this as this was what ffts was addressing (if i recall correctly).

Whilst a single table allows for quick lookup "on paper", in reality it quickly becomes a wildly sparse lookup which murders the data cache. Even attempting to reduce its size has little benefit and too much cost; however 'tab_1' does beat 'tab_0' at the end. While fully pre-calculating the tables looks rather poor "on paper" in practice it leads to the fastest implementation and although it uses more memory it's only about twice a simple table, and around the same size as the data it is processing.

In contrast, the semi-recursive implementation only has a relatively weak bearing on the execution time. This could be due to poor tuning of course.

The rotation implementation adds an extra 18 flops to a calculation of 34 but only has a modest impact on performance so it is presumably offset by a combination of reduced address arithmetic, fewer loads, and otherwise unused flop cycles.

The code is surprisingly simple, I think? There is one very ugly routine for the 2nd to last pass but even that is merely mandraulic-inlining and not complicated.

Well that's forward, I suppose I have to do inverse now. It's mostly just the same in reverse so the same architecture should work. I already wrote a bunch of DIT code anyway.

And i have some 2D stuff. It runs quite a bit faster than 1D for the same number of numbers (all else being equal) - in contrast to jtransforms. It's not a small amount either, it's like 30% faster. I even tried using it to implement a 1D transform - actually got it working - but even with the same memory access pattern as the 2D code it wasn't as fast as the 1D. Big bummer for a lot of effort.

It was those bloody twiddle factors again.

Update: I just realised that i made a bit of a mistake with the way i've encoded the tables for 'tab_0' which has propagated from my first early attempts at writing an fft routine.

Because i started with a simple direct sine+cosine table I just appended extra items to cover the required range when i moved from radix-2 to radix-4. But all this has meant is i have a table which is 3x longer than it needs to be for W^1 and that W^2 and W^3 are sparsely located through it. So apart from adding complexity to the address calculation it leads to poor locality of reference in the inner loop.
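One way to avoid the sparse lookups is to pack the three factors a radix-4 butterfly needs next to each other, so the inner loop just walks the table in order. The sketch below is only an assumption about what such a layout could look like (the name `PackedTwiddles`, the 6-floats-per-entry layout and the forward sign convention are mine), not the layout used by any of the 'tab' variants.

```java
final class PackedTwiddles {
    // Table for one radix-4 pass over n points. Entry j holds
    // (cos, sin) of W^j, W^2j and W^3j back to back, with W = exp(-2*pi*i/n),
    // so a butterfly reads 6 consecutive floats.
    static float[] build(int n) {
        int quarter = n / 4;
        float[] t = new float[quarter * 6];
        for (int j = 0; j < quarter; j++) {
            for (int m = 1; m <= 3; m++) {
                double a = -2 * Math.PI * m * j / n;
                t[j * 6 + (m - 1) * 2] = (float) Math.cos(a);
                t[j * 6 + (m - 1) * 2 + 1] = (float) Math.sin(a);
            }
        }
        return t;
    }

    public static void main(String[] args) {
        float[] t = build(16);
        // Print the three factors used by butterfly j=1.
        for (int m = 0; m < 3; m++)
            System.out.printf("W^%d = (%f, %f)%n", m + 1, t[6 + m * 2], t[6 + m * 2 + 1]);
    }
}
```

The table is n/4 entries of 3 complex values, so no longer than the data being processed, and the address calculation in the inner loop reduces to a running index.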

It still drops off quite a bit after 2^16 though to just under jtransforms at 2^20.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!