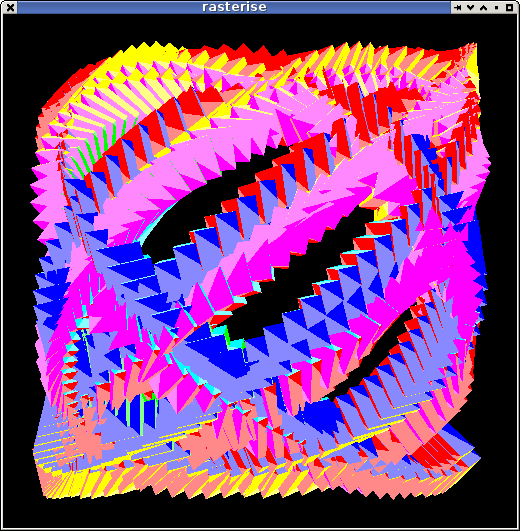

lost in dots

Continued to play with software rendering. Ported the code to C and got it running on the framebuffer - still only on the workstation so I don't have any idea how it will run on the parallella.

The Java seems to be faster than the C TBH but that is probably down to rendering to X versus the framebuffer, the latter being very slow. gcc isn't having the best time with the critical inner loop either. Currently i'm not running any fragment shaders and I have half an idea to just try creating a small soft-3d engine all in java since the jvm can handle some of that. Maybe not.

I thought i'd look at GLES2+ to see how it handles certain things, ... and decided to base some of the API on that because it dictates to a good degree the backend implementation and is based on 20+ years of industry experience. Apart from the shader compilers (which i'm going to side-step entirely) the core components are quite simple. The biggest one from my perspective is the one i've been focusing on so far - the rasteriser and varying interpolator.

I hadn't really thought about how many varyings there need to be, and 8x4 is a good amount. I hadn't got to thinking about uniforms yet either.

I played a bit with code to implement glDrawArrays() which is mostly just data conversion and flattening. My first obvious thought was to select elements from the arrays and turn them into rows (i.e. array of structures) and then batch-process them using the vertex shader. Locality of reference and all that. But after thinking about the inner loop of the rasteriser it will be more efficient to use a structure of arrays approach. It also makes it easier to SIMDise anything that needs to run on the ARM - quite likely the vertex shaders and primitive setup. It makes reading the vertex array data itself much more efficient anyway since each array type is an outer-loop decision rather than an inner-loop one, although it could have some poor cache behaviour if that isn't taken into consideration.
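
Roughly, the flattening step could look like the following. This is only a sketch under a few assumptions: the source is an interleaved array of floats (other GL types would each get their own copy loop, chosen once outside), and the name `flatten_attrib` and its parameters are made up for illustration rather than taken from the actual code.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: copy `count` vertices of one attribute out of an
 * interleaved (array-of-structures) buffer into per-component planes
 * (structure of arrays).
 *   src        - first component of the first vertex
 *   stride     - number of floats between consecutive vertices in src
 *   components - 1..4 components per vertex
 *   dst        - `components` planes, each `plane` floats long
 */
static void flatten_attrib(const float *src, size_t stride, int components,
                           size_t count, float *dst, size_t plane)
{
    assert(components >= 1 && components <= 4);
    assert(stride >= (size_t)components && count <= plane);

    /* The component is the outer loop, so the inner loop is a plain
     * strided copy with no per-element decisions. */
    for (int c = 0; c < components; c++) {
        float *out = dst + (size_t)c * plane;

        for (size_t i = 0; i < count; i++)
            out[i] = src[i * stride + c];
    }
}
```

Note that this walks the source once per component, which is exactly the sort of cache behaviour that would need watching for large batches.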

The potential size of the various data-structures has made me rethink the approach I was going to take. I was going to load as many triangles into the rasteriser as would fit in LDS and process them all at once. But that just won't be enough to be any use so it will have to cycle through them multiple times for different rendering bands. If I drop it down to just a pair of buffers it simplifies the i/o stuff and doesn't make any real difference to the number of times things are read from the external memory.

Due to memory constraints i'm going to have to split the rasteriser+zbuffer test to one or more cores and the fragment rendering to other cores. Each varying interpolator requires 3 floats per element, or 12 floats per 4-element vector. A simple Gouraud-shaded polygon will require at least 128 bytes to store everything needed (+some control stuff). I was going to pass the uniforms around with the triangle but they are too big so instead I will pass incremental updates to uniform elements in a processing stream, and the rasteriser will make sure the fragment shaders have the updates when necessary as well.
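
To put some numbers on that, here is a small sketch of the storage arithmetic. It reads "3 floats per element" as one plane equation (a, b, c) per interpolated scalar, which is an assumption, and the `gouraud_tri_t` layout is only a guess at what a minimal triangle record contains; the breakdown behind the 128-byte figure isn't spelled out above.

```c
#include <stddef.h>

/* One interpolated scalar as a plane equation: value(x,y) = a*x + b*y + c.
 * This is the assumed meaning of "3 floats per element". */
typedef struct { float a, b, c; } plane_t;

enum {
    MAX_VARYINGS = 8,   /* 8 vec4 varyings, as mentioned earlier */
    VEC_ELEMENTS = 4
};

/* Bytes of interpolator state needed for n vec4 varyings. */
static size_t varying_bytes(int n)
{
    return (size_t)n * VEC_ELEMENTS * sizeof(plane_t);
}

/* Guessed minimal payload for a Gouraud-shaded triangle: three edge
 * equations, z/w, 1/w and a single vec4 colour. Control words, bounds
 * and any padding would come on top of this. */
typedef struct {
    plane_t edge[3];
    plane_t zw;
    plane_t inv_w;
    plane_t colour[VEC_ELEMENTS];
} gouraud_tri_t;
```

With 4-byte floats, `varying_bytes(1)` is 48 and `varying_bytes(MAX_VARYINGS)` is 384, while `sizeof(gouraud_tri_t)` comes to 108 before any control data. A triangle using every varying clearly gets large quickly, which is the reason uniforms can't ride along with it.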

The queueing will be a bit of a pain to get right, but i'm ignoring it for now.

Rasteriser inner loop

Because of the number of varying elements I had to change the way I was thinking of writing the inner loop. I can't keep everything in registers and will need to load the interpolation addends as I go - this is why the data has to be stored as a structure of arrays because it is always getting element 0 of a 3-element vector and that's more efficient to load using dword loads.

Also, since fadd is just as costly as fmadd I can remove the incremental update of the coefficients from always having to be executed - i.e. when a z-buffer test fails. It would also allow me to split the work into a couple of passes - one that generates live pixels, and another which turns them into interpolated fragments which could run branchless. I'm not sure if that is worth it yet though since it doesn't remove the branch.

So a single horizontal scan will be something like the following. This is the biggest case.

    (v0,v1,v2) = edge equation results for start location
    (zw, 1/w) = interpolants for z/w, 1/w
    (p0-p31) = interpolants for varying values
    (e0,e1,e2) = update values for edges
    (ez, ew) = update values for z/w, 1/w

    x = start;
    lastx = start
    start:
      in = v0.sign | v1.sign | v2.sign;
      if in
        zt = load zw-buffer[x]
        if (zw > zt) // i think some of my maths is flipped
          store zw-buffer[x] = zw
          // use delta of X so that 3-instruction fmadd can be used!
          xdiff = x - lastx;
          lastx = x;
          ; imagine this is all magically scheduled properly ...
          1/w += xdiff * ew
          dload ep0-ep1
          p0 += xdiff * ep0
          p1 += xdiff * ep1
          dload ep2-ep3
          p2 += xdiff * ep2
          p3 += xdiff * ep3
          .. etc
          write target fragment record
            write 1/w
            write z/w
            // (i don't think i need v0-v3)
            write p0-p31
        fi
      fi
      v0 += e0;
      v1 += e1;
      v2 += e2;
      zw += ez;
    while !done
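
For reference, the same scan written out as plain scalar C might look something like this. It is a sketch only, with several assumptions: non-negative edge values are taken to mean "inside" (the sign convention above may well be flipped), a larger z/w wins the depth test as in the pseudo-code, the caller supplies the `[start, end)` bounds, and `scan_t`, `fragment_t` and `scan_row` are hypothetical names. `NVARY` is kept small here; the worst case discussed above is 32.

```c
#include <stddef.h>

#define NVARY 4     /* illustrative; the worst case is 32 (p0-p31) */

typedef struct {
    int   x;
    float inv_w, zw;
    float p[NVARY];
} fragment_t;

typedef struct {
    float v[3], e[3];            /* edge values at x = start, per-pixel steps */
    float zw, ez;                /* z/w and its per-pixel step */
    float inv_w, ew;             /* 1/w and its per-pixel step */
    float p[NVARY], ep[NVARY];   /* varyings and their per-pixel steps */
} scan_t;

/* Scan one row; returns the number of fragments written to out[]. */
static size_t scan_row(scan_t *s, int start, int end,
                       float *zbuf, fragment_t *out)
{
    size_t n = 0;
    int lastx = start;

    for (int x = start; x < end; x++) {
        /* Assumed convention: all edge values >= 0 means inside. */
        int in = s->v[0] >= 0.0f && s->v[1] >= 0.0f && s->v[2] >= 0.0f;

        if (in && s->zw > zbuf[x]) {
            zbuf[x] = s->zw;

            /* Catch up by the number of pixels skipped since the last
             * live one, so each interpolant costs a single multiply-add. */
            float xdiff = (float)(x - lastx);
            lastx = x;

            s->inv_w += xdiff * s->ew;
            for (int i = 0; i < NVARY; i++)
                s->p[i] += xdiff * s->ep[i];

            out[n].x = x;
            out[n].inv_w = s->inv_w;
            out[n].zw = s->zw;
            for (int i = 0; i < NVARY; i++)
                out[n].p[i] = s->p[i];
            n++;
        }

        /* Only the edges and z/w are stepped on every pixel. */
        for (int k = 0; k < 3; k++)
            s->v[k] += s->e[k];
        s->zw += s->ez;
    }
    return n;
}
```

A pixel that fails the inside or depth test costs only the four adds at the bottom of the loop; the varyings are not touched until the next live pixel.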

I'm still experimenting with ways to work out how 'start' and '!done' are implemented. I've got a bunch of different algorithms although I need to get it on-hardware to get more accurate timing information. The stuff in ATTILA uses a sort of flood-fill algorithm but for various reasons I don't want to use that. I have a pretty reliable way to get both the start and end point of a line using a binary search, ... but it's costly if run every line (it's still not perfect either but I think that's down to float being a shit :-/).

My current winner uses an 8x8 search every 8 lines and then uses that as the bounds. And that's even with a broken test which often over-runs (i've subsequently found the maths that work but haven't included it yet).

For small triangles just using the bounding box is pretty much as good as it gets, so may suffice.
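
The bounding-box fallback is simple enough to sketch. The version below is a conservative box clamped to the render target, with exclusive upper bounds; `bbox_t`, `tri_bbox` and the helper names are made up for the example, and it ignores any pixel-centre offset.

```c
typedef struct { int x0, y0, x1, y1; } bbox_t;   /* x1, y1 are exclusive */

static float min3(float a, float b, float c)
{
    float m = a < b ? a : b;
    return m < c ? m : c;
}

static float max3(float a, float b, float c)
{
    float m = a > b ? a : b;
    return m > c ? m : c;
}

/* floor(v) clamped to [0, limit]; truncation equals floor once v >= 0. */
static int clamp_floor(float v, int limit)
{
    if (v < 0.0f) return 0;
    if (v > (float)limit) return limit;
    return (int)v;
}

/* ceil(v) clamped to [0, limit]. */
static int clamp_ceil(float v, int limit)
{
    if (v < 0.0f) return 0;
    if (v > (float)limit) return limit;
    int i = (int)v;
    return i + (v > (float)i);
}

/* Conservative screen-space bounds of a triangle. */
static bbox_t tri_bbox(const float x[3], const float y[3],
                       int width, int height)
{
    bbox_t b;

    b.x0 = clamp_floor(min3(x[0], x[1], x[2]), width);
    b.y0 = clamp_floor(min3(y[0], y[1], y[2]), height);
    b.x1 = clamp_ceil(max3(x[0], x[1], x[2]), width);
    b.y1 = clamp_ceil(max3(y[0], y[1], y[2]), height);
    return b;
}
```

The row scan then just runs from `x0` to `x1` on each line between `y0` and `y1` and relies on the edge tests to reject everything outside the triangle.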

Removing the loop branches

Actually it might be possible to have 2 branchless loops ...

    (v0,v1,v2) = edge equation results for start location
    (zw) = interpolant for z/w,
    tmp = room for hit locations

    loop1 (over bounds):
      in = v0.sign | v1.sign | v2.sign;
      zt = read zwbuffer[x]
      in &= (zt - zw).sign
      zt = cmove(in, zw)
      write zwbuffer[x] = zt
      tmp[0] = x
      tmp = cmove(in, tmp+1)

All branch-free. At the end, tmp points to the end of an array which includes the X values of all visible pixels.

The second loop just interpolates everything for the x locations stored in the tmp array.

    lastx = 0
    loop2 (over tmp-tmp.start):
      load x
      increment everything by (x-lastx)
      output
      lastx = x
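
In C the two passes might be sketched as below. Same caveats as before: non-negative edge values are assumed to mean inside, a larger z/w passes the depth test, and `find_live` and `interpolate` are hypothetical names. Whether the `?:` really turns into a conditional move is up to the compiler; the point is only that the loop bodies contain no data-dependent control flow.

```c
#include <stddef.h>

/* Pass 1: depth-test every pixel in [start, end) and append the x of each
 * visible one to hits[]. hits needs room for (end - start) entries because
 * the store happens unconditionally. Returns the number of hits. */
static size_t find_live(const float v_start[3], const float e[3],
                        float zw, float ez, int start, int end,
                        float *zbuf, int *hits)
{
    float v0 = v_start[0], v1 = v_start[1], v2 = v_start[2];
    int *tmp = hits;

    for (int x = start; x < end; x++) {
        /* Assumed convention: all edge values >= 0 means inside. */
        int in = (v0 >= 0.0f) & (v1 >= 0.0f) & (v2 >= 0.0f);
        float zt = zbuf[x];

        in &= (zw > zt);
        zbuf[x] = in ? zw : zt;   /* cmove: keep the old depth on a miss */
        *tmp = x;                 /* always store ... */
        tmp += in;                /* ... but only keep it on a hit */

        v0 += e[0];
        v1 += e[1];
        v2 += e[2];
        zw += ez;
    }
    return (size_t)(tmp - hits);
}

/* Pass 2: step every varying straight to each recorded x and emit it.
 * p[] holds the values at x = start; out receives n * nvary floats. */
static void interpolate(const int *hits, size_t n, int start,
                        float *p, const float *ep, int nvary, float *out)
{
    int lastx = start;

    for (size_t i = 0; i < n; i++) {
        int x = hits[i];
        float d = (float)(x - lastx);

        for (int k = 0; k < nvary; k++) {
            p[k] += d * ep[k];
            out[i * (size_t)nvary + (size_t)k] = p[k];
        }
        lastx = x;
    }
}
```

The working memory is one `int` per pixel of the scanned span, plus whatever the output fragments take up.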

As with a previous post about getting good fmadd performance, it should be just about possible to dual-issue every fmadd and remove all stalls. All writes are written directly to the fragment shader core for that row?

Requires working memory for the X coordinates though; but that should be doable since this is all the core will be running.

However, having said all that; the fragment output from a single row can more than exceed the fragment processor memory so they need to be throttled. Back to loops and branches I guess but it was an interesting puzzle.

As one might tell i'm not really that concerned with getting a working solution, more in investigating the ways to fit the peculiarities of the hardware. There's just a lot to write even if i've got a fairly good idea of a workable solution and had all the components necessary :-/

Or maybe ...

Just do everything in a single-loop on the same core?

Yes maybe, hmm, dunno now. Really depends on if there's enough memory for it to fit and whether loading the triangle Nx times vs 1x times is slower than all the comms overheads. Unless the mesh is saturated; I would say no chance.

It's much easier and probably the first step to performance analysis anyway.

I guess I was more lost in dots than I realised when I made the subject (it was for the pic).