termz

Every now and then I think about the sad state of affairs regarding terminal emulators for X11. It's been a bit of a thing for a while - it's how I ended up working at Ximian.

I stopped using gnome-terminal when I stopped working on it and went back to xterm. I never liked rxvt or its ilk, and all of the 'desktop environment' terminal emulators are pretty naff for whatever reason.

xterm works and is reliable, but with recent (meaning the last 10 years) X Window System servers the text rendering performance plummeted, and even installing the only usable typefaces (misc-fixed 6x13 and 10x20), and sometimes xterm itself, became a manual job. Whilst performance isn't bad on this kaveri box, I also use an uber-intel machine with a HD7970 where both emacs and xterm run like an absolute pig whenever any GL applications are running, and it isn't even very fast otherwise (I'm talking whole desktop grinding to a halt as it redraws exposes at about 1 character column per SECOND). It's an "older" distribution so that may have something to do with it, but there is no direct indication why it's so horrible (well, apart from the AMD driver, but I have no choice for that since it's used for OpenCL dev). I might upgrade it to slackware next year.

Ho hum.

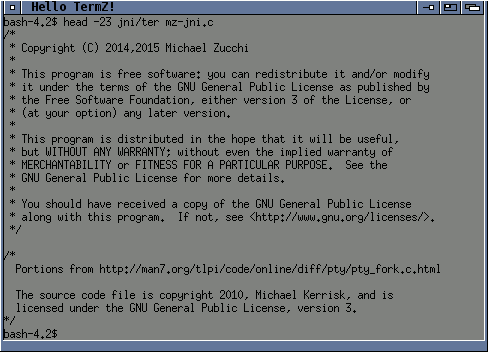

Anyway I started poking last night at a basic xterm knockoff and got to the point of less sort of running inside it, and now I'm thinking about ways I might be able to implement something a bit more complete. I'm working in Java and have a tiny bit of JNI to get the process going and handle some ioctl stuff (which seems somewhat easier now than it was in zvt, but portability is not on the agenda here).

zvt

When I wrote ZVT the primary goal was performance, and to that end considerable effort was expended on making a terminal state machine which implemented zero-copy and zero-garbage algorithms. Zero-copy is always a good thing, but the zero-garbage was driven by the very slow malloc on Solaris at the time and my experience with Amiga memory management.

Another part of the puzzle was display, and the main mechanism was inspired by some Amiga terminal emulators that used COPPER lists to re-render rows to the screen in arbitrary order without requiring them to be re-ordered in memory (memory bandwidth was a massive bottleneck when using pre 1985-spec hardware in 199x). I used a cyclic doubly-linked (exec) list of rows, and to implement a scroll I just moved a row from the start to the end of the list, which takes 8 pointer updates and a memset to clear it (and it also works for partial screen scrolls). By tracking the screen row at which each row was last actually displayed I could -at-any-point-later- attempt to create an optimal screen-update sequence, including using blits for scrolling and minimising glyph redraws to only those that had changed. The algorithm for this was cheap and reliable, if a little fiddly to get correct.
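To make the row-list trick concrete, here is a rough Java sketch of the idea. It is not the ZVT code (that was C on top of exec-style lists); the names `RowList`, `Row`, `lastDrawnAt` and `markDrawn` are made up for illustration, and the update planner that would consume `lastDrawnAt` is left out.

```java
import java.util.Arrays;

// Hypothetical sketch: screen rows kept in a circular doubly-linked list
// with a sentinel node, so a scroll is a relink rather than a copy.
final class RowList {
    static final class Row {
        final char[] cells;
        int lastDrawnAt = -1; // screen line this row was last painted at, -1 = needs a full redraw
        Row prev, next;
        Row(int cols) { cells = new char[cols]; Arrays.fill(cells, ' '); }
    }

    private final Row head = new Row(0); // sentinel, carries no text

    RowList(int rows, int cols) {
        head.prev = head.next = head;
        for (int i = 0; i < rows; i++) insertBefore(head, new Row(cols));
    }

    private static void unlink(Row r) {
        r.prev.next = r.next;
        r.next.prev = r.prev;
    }

    private static void insertBefore(Row at, Row r) {
        r.prev = at.prev;
        r.next = at;
        at.prev.next = r;
        at.prev = r;
    }

    Row get(int line) {
        Row r = head.next;
        for (int i = 0; i < line; i++) r = r.next;
        return r;
    }

    // Record that every row is now on screen at its current line.
    void markDrawn() {
        int line = 0;
        for (Row r = head.next; r != head; r = r.next) r.lastDrawnAt = line++;
    }

    // Scroll lines [top, bottom] up by one: the top row is unlinked,
    // re-linked after the bottom row and cleared. No text is copied.
    void scrollUp(int top, int bottom) {
        Row first = get(top);
        Row after = get(bottom).next;
        unlink(first);
        insertBefore(after, first);
        Arrays.fill(first.cells, ' ');
        first.lastDrawnAt = -1;
    }
}
```

After a scroll, a row whose `lastDrawnAt` differs from its current line is a candidate for a blit, and a row at -1 has to be drawn from scratch.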

This last point is important as it allows the state machine to outpace the screen refresh rate, which always becomes the largest bottleneck for terminal emulators in 'toolkit' environments. This is where it got all its performance from.

new hardware, new approach

Thinking about the problem with current hardware my initial ideas are a little bit different.

I still quite like the linked list storage for the state machine and may go back to it, but my current idea is instead to store a full cell-grid for the displayable area. I can still make full-screen scrolling just as cheap using a simple cyclic row trick (in fact, even cheaper) but sub-region scrolling would require memory copies - but at the resolution of 4-bytes-per-glyph these are insanely cheap nowadays.
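Something like the following is what I have in mind for the grid, as a minimal sketch only. `CellGrid` and its methods are hypothetical names, and the packing of each 4-byte cell (here just the character code in an `int`) is an assumption.

```java
import java.util.Arrays;

// Hypothetical sketch: a flat cell grid where a full-screen scroll only
// rotates a row offset, and a sub-region scroll copies rows.
final class CellGrid {
    final int rows, cols;
    final int[] cells;   // one 4-byte cell per glyph (assumed packing)
    private int first;   // physical row that currently holds logical line 0

    CellGrid(int rows, int cols) {
        this.rows = rows;
        this.cols = cols;
        this.cells = new int[rows * cols];
        Arrays.fill(cells, ' ');
    }

    // Offset of the start of a logical line in the flat array.
    private int base(int line) { return ((first + line) % rows) * cols; }

    int get(int line, int col) { return cells[base(line) + col]; }

    void set(int line, int col, int value) { cells[base(line) + col] = value; }

    // Full-screen scroll: clear the old top row and rotate the offset so
    // that row becomes the new bottom line. Nothing else moves.
    void scrollUp() {
        Arrays.fill(cells, first * cols, first * cols + cols, ' ');
        first = (first + 1) % rows;
    }

    // Sub-region scroll of lines [top, bottom]: copy each row up by one,
    // then clear the bottom row of the region.
    void scrollRegionUp(int top, int bottom) {
        for (int line = top; line < bottom; line++)
            System.arraycopy(cells, base(line + 1), cells, base(line), cols);
        Arrays.fill(cells, base(bottom), base(bottom) + cols, ' ');
    }
}
```

For an 80x24 screen a worst-case region scroll here copies under 8KB, which is why I'm not too worried about it.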

This is the most complex part of the emulator since it needs to implement all the control codes and whatnot - but for the most part that's just a mechanical process of implementing enough of them to have something functional.

I would also approach rendering from an entirely different angle. Rather than go smart I'm going wide and brute-forcing a simpler problem. At any given time - which can be throttled based on arbitrary metrics - I can take a snapshot of the current emulator screen and then asynchronously convert that to a screen display while the emulator continues to run on its own thread.
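The snapshot step could be as simple as the sketch below. This is one possible arrangement, not something I've settled on: `SnapshotScreen`, `put` and `snapshotInto` are hypothetical names, and a plain lock plus a dirty flag stands in for whatever throttling ends up being used.

```java
// Hypothetical sketch: the emulator thread writes cells under a lock, and
// the render thread copies the whole grid out under the same lock, then
// does the slow drawing on its private copy with the lock released.
final class SnapshotScreen {
    private final int[] live;
    private final Object lock = new Object();
    private boolean dirty;

    SnapshotScreen(int cellCount) { live = new int[cellCount]; }

    // Called from the emulator thread for every cell change.
    void put(int index, int value) {
        synchronized (lock) {
            live[index] = value;
            dirty = true;
        }
    }

    // Called from the render thread. Returns false, leaving dst untouched,
    // when nothing has changed since the previous snapshot.
    boolean snapshotInto(int[] dst) {
        synchronized (lock) {
            if (!dirty) return false;
            System.arraycopy(live, 0, dst, 0, live.length);
            dirty = false;
            return true;
        }
    }
}
```

The render side would call `snapshotInto` from a timer at whatever rate it likes; however much output the emulator chews through between calls, only the latest state ever gets drawn.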

For a basic CPU renderer it may still require some update optimisation, but given it will just be trivial cell fonts to copy it probably won't be appreciably cheaper to scroll compared to just pasting new characters every time. And obviously this is utterly trivial code to implement.

The ultimate goal (and why the fixed-array grid backing is desirable) would be to use OpenCL or OpenGL (or more likely Vulkan if it ever gets here) to implement the rendering as a single pass operation which touches each output pixel only once. This would just take the raw cell-sized rectangle of the terminal state machine as its only variable input and produce a fully rendered and styled framebuffer as the result. Basically just render the cells as a low-res nearest-neighbour texture lookup into a texture holding the glyphs. The cell grid is a tiny tiny texture in GPU terms and rendering a single full-screen NN textured quad is absolutely nothing for any GPU. And compared to the gunk that is required to render a full-screen of arbitrary text through any gui toolkit ever it's many orders of magnitude less effort.
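The per-pixel logic is small enough to write out. Below is a CPU version in Java of what the shader or kernel would do, purely as an illustration: `CellRenderer`, the atlas layout (glyphs stored one after another, non-zero byte means ink) and the cell packing (low byte is the glyph index) are all assumptions, and per-cell colours and styles are left out.

```java
// Hypothetical sketch: each output pixel is computed exactly once from the
// cell grid and a glyph atlas, the same way a GPU fragment would be.
final class CellRenderer {
    static int[] render(int[] cells, int rows, int cols,
                        byte[] atlas, int gw, int gh, int fg, int bg) {
        int width = cols * gw;
        int height = rows * gh;
        int[] out = new int[width * height];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Nearest-neighbour lookup into the low-res cell "texture".
                int cell = cells[(y / gh) * cols + (x / gw)];
                int glyph = cell & 0xff; // assumed packing: low byte = atlas index
                // Lookup of the matching texel inside that glyph.
                int texel = atlas[(glyph * gh + (y % gh)) * gw + (x % gw)];
                out[y * width + x] = (texel != 0) ? fg : bg;
            }
        }
        return out;
    }
}
```

Every iteration of the inner loop is independent of every other, which is what makes this map so directly onto one work-item or fragment per pixel.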

Ideally this would only ever exist at full-resolution in the on-screen framebuffer memory which would also make it extremely cheap memory wise.

But at least initially I would be going through JavaFX, so it will instead have to have multiple copies and so on. The reason to use JavaFX is for all the auxiliary but absolutely necessary fluff like clipboard and dnd operations. I don't really like tabbed terminals (I mean I want to use windows as windows to multitask, not as a stack to task switch) but tabs are one way to ameliorate the memory use multiplication this would otherwise create.

So to begin with it would be extremely fat but that's just an implementation detail and not a limitation of the design.

worth bothering?

Still mulling that over. It's still a lot of work even if conceptually it's almost trivial.