About Me

Michael Zucchi

B.E. (Comp. Sys. Eng.)

also known as Zed

to his mates & enemies!

< notzed at gmail >

< fosstodon.org/@notzed >

GNU make and java

Today I had another go at using meta-rules with GNU make to create an 'automatic makefile' system for building java applications. Again I just "had a look" and now the day is all gone.

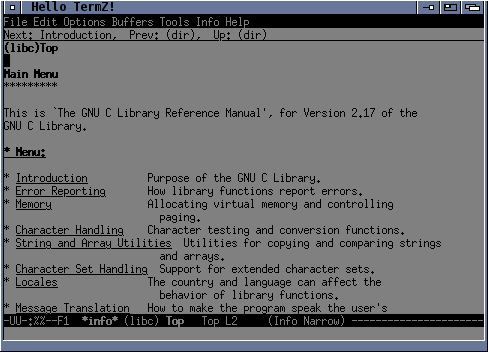

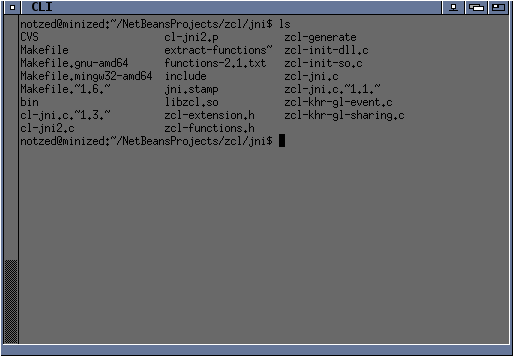

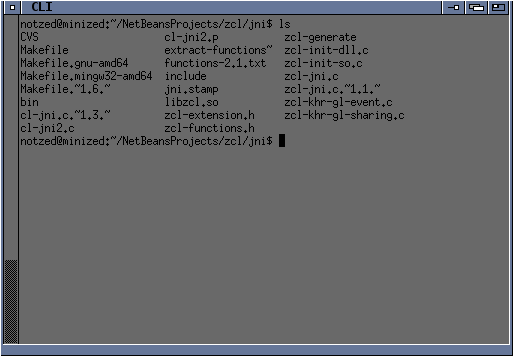

This is the current input for termz.

java_PROGRAMS=termz

termz_VERSION=0.0
termz_DISTADD=Makefile java.make \
	jni/Makefile \
	jni/termz-jni.c

# compiling
termz_SOURCES_DIRS=src
termz_RESOURCES_DIRS=src
termz_LIBADD=../zcl/dist/zcl.jar

# packaging (runtime)
termz_RT_MAIN=au.notzed.termz.TermZ
termz_RT_LIBADD=../zcl/dist/zcl.jar

# native targets
termz_PLATFORMS=gnu-amd64

# native libs, internal or external. path:libname
termz_RT_JNIADD=../zcl/jni/bin:zcl jni/bin:termz

# Manually hook in the jni build
jni/bin/gnu-amd64/libtermz.so: build/termz_built
	make -C jni TARGET=gnu-amd64

clean::
	make -C jni clean

include java.make

- make (jar)

This builds the class files in build/termz, then the jni libraries via the manual hook. In another staging area (build/termz_dist) it merges the classes and the resource files (stripping the leading paths properly). It uses javapackager to create an executable jar file from this staged tree which includes references to the RT_LIBADD libs (moved to lib/). Finally it copies the RT_LIBADD jar files into bin/lib/ and the native libraries into bin/lib/{platform} so that the jar can be executed directly - only java.library.path must be set.

Not shown, but an alternative target type is to use java_JARS instead of java_PROGRAMS. In this case jar is used to package up the class and resource files directly and no staging is needed.

- make tar

This tars up all the sources, resources, and DISTADD into a tar file. No staging is required and all files are taken 'in-place'. The tar file extracts to "termz-0.0/".

- make clean

Blows away build and bin and (indirectly) jni/bin. All 'noise' is stored in these directories.

I'm using metaprogramming so that each base makefile can define multiple targets of different types. This is all using straight gnu make - there is no preprocessing or other tools required.

java.make does all the "magic" of course. While it's fairly straightforward ... it can also be a little obtuse and hairy at times. But the biggest difficulty is just deciding what to implement and the conventions to use for each of them. Even the variable names themselves.

There were a couple of messier problems that needed solving, although i'd solved the first one last time I looked at this some time ago.

class-relative names

For makefile operation the filenames need to be specified absolutely or relative to the Makefile itself. But for other operations such as building source jars one needs the class-relative name. The easiest approach is just to hard-code this to "src/" but I decided I wanted to add more flexibility than this allows.

The way I solved this problem was to have a separate variable which defines the possible roots of any sources or resources. Depending on what sort of representation I need I can then match and manipulate on these roots to form the various outputs. The only names specified by the user are the filenames themselves.

For example when forming a jar file in-place I need to be able to convert a resource name such as "src/au/notzed/termz/fonts/misc-fixed-semicondensed-6x13.png" into the sequence for calling jar as "-C" "src" "au/notzed/termz/fonts/..." so that it appears in the correct location in the jar file.

I use this macro:

# Call with $1=root list, $2=file list
define JAR_deroot=
$$(foreach root,$1,\
	$$(patsubst $$(root)/%,-C $$(root) %,\
		$$(filter $$(root)/%,$2))) \
$$(filter-out $$(addsuffix /%,$1),$2)
endef

I'll list the relevant bits of the template which lead up to this being used.

# Default if not set
$1_RESOURCES_ROOTS ?= $$($1_RESOURCES_DIRS)

# Searches for any files which aren't .java
$1_RESOURCES_SCAN := $$(if $$($1_RESOURCES_DIRS),$$(shell find $$($1_RESOURCES_DIRS) \
	-type d -name CVS -o -name '.*' -prune \
	-o -type f -a \! -name '*java' -a \! -name '*~' -a \! -name '.*' -print))

# Merge with any supplied explicitly
$1_RES:=$$($1_RESOURCES_SCAN) $$($1_RESOURCES)

# Build the jar
$$($1_JAR): $(stage)/$1_built
	...
	jar cf ... \
		$(call JAR_deroot,$$($1_RESOURCES_ROOTS),$$($1_RES)) \
	...

At this point I don't care about portability with the use of things like find. Perhaps I will look into guilified make in the future (i'm a firm believer in using make as the portability layer as the current maintainer is).

And some example output. The last 2 lines are the result of this JAR_deroot macro.

jar cf bin/termz-0.0.jar -C build/termz . \
	-C src au/notzed/termz/fonts/misc-fixed-6x13-iso-8859-1.png \
	-C src au/notzed/termz/cl/render-terminal.cl

A lot of this stuff is there to make using it easier. For example if you just want to find all the non-java files in a given directory root and that contains the package names already you can just specify name_RESOURCES_DIRS and that's it. But you could also list each file individually (there are good reasons to do this for "real" projects), put the resources in other locations or scatter them about and it all "just works".

How?

Just looking at the JAR_deroot macro ... what is it doing? It's more or less doing the following pseudo-java, but in an implicit/functional/macro sort of way and using make's functions. It's not my favourite type of programming it has to be said, so i'm sure experts may scoff.

StringBuilder sb = new StringBuilder();

// convert to relative paths
for (String root: resourcerootslist) {
    for (String path: resourcelist) {
        if (path.startsWith(root + "/")) {
            // strip the leading "root/" (String.replace() does not take a regex)
            String relative = path.substring(root.length() + 1);

            sb.append("-C ").append(root)
                .append(' ').append(relative).append(' ');
        }
    }
}

// include any with no specified roots
resource:
for (String path: resourcelist) {
    for (String root: resourcerootslist) {
        if (path.startsWith(root + "/")) {
            continue resource;
        }
    }
    // path is already relative to pwd
    sb.append(path).append(' ');
}

return sb.toString().trim();

This is obviously just a literal translation for illustrative purposes. Although one might notice the brevity of the make solution despite the apparent verbosity of each part.

Phew.

going native

Native libraries posed a similar but slightly different problem. I still need to know the physical file location but I also need to know the architecture - so I can properly form a multi-architecture runtime tree. I ummed and aahed over whether to create some basic system for storing native libraries inside jar files and resolving them at runtime, but i decided that it just isn't a good idea for a lot of significant reasons, so instead I will always store the libraries on disk and let loadLibrary() resolve the names via java.library.path. As it is still convenient to support multi-architecture installs, or at least distribution and testing, I decided on a simple naming scheme that places the architecture under lib/ and places any architecture specific files there. This then only requires a simple java.library.path setup and prevents name clashes.

Ok, so the problem is then how to define both the architecture set and the library set in a way that make can synthesise all the file-names, extensions (dll, vs so), relative paths, manifest entries in a practical yet relatively flexible manner?

Lookup tables of course ... and some really messy and hard to read macro use. Oh well can't have everything.

Libraries are specified by a pair of values, the location of the directory containing the architecture name(s), and the base name of the library - in terms of System.loadLibrary(). These are encoded in the strings by joining them with a colon so that they can be specified in a single variable. The final piece is the list of platforms supported by the build, and each library must be present for all platforms - which is probably an unnecessary and inconvenient restriction in hindsight.

This is the bit of code which converts the list of libraries + platform names into platform-specific names in the correct locations. I'm not going to bother to explain this one in detail. It's pretty simple just yuck to read.

#
# lookup tables for platform native extensions
#
# - is remapped to _, so usage is:
#
#  $(_$(subst -,_,$(platform))_prefix) = library prefix
#  $(_$(subst -,_,$(platform))_suffix) = library suffix
#
_gnu_amd64_prefix=lib
_gnu_amd64_suffix=.so
_gnu_amd32_prefix=lib
_gnu_amd32_suffix=.so
_mingw32_amd64_prefix=
_mingw32_amd64_suffix=.dll
_mingw32_amd32_prefix=
_mingw32_amd32_suffix=.dll

# Actual jni libraries for dependencies
$1_JAR_JNI=$$(foreach p,$$($1_PLATFORMS), \
	$$(foreach l,$$($1_RT_JNIADD), \
		$$(firstword $$(subst :, ,$$l))/$$(p)/$$(_$$(subst -,_,$$p)_prefix)$$(lastword $$(subst :, ,$$l))$$(_$$(subst -,_,$$p)_suffix)))
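For anyone who finds the nested foreach hard to follow, here is roughly the same expansion written as plain Java. It is only an illustrative sketch and not part of java.make: the class and method names are made up, and the table simply copies the prefix/suffix values listed above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class JniPaths {
    // Copy of the _<platform>_prefix / _<platform>_suffix lookup tables: {prefix, suffix}.
    static final Map<String, String[]> NATIVE_EXT = Map.of(
        "gnu-amd64", new String[] {"lib", ".so"},
        "gnu-amd32", new String[] {"lib", ".so"},
        "mingw32-amd64", new String[] {"", ".dll"},
        "mingw32-amd32", new String[] {"", ".dll"});

    /** Expands "dir:name" entries into dir/platform/prefix+name+suffix, one per platform. */
    static List<String> expand(List<String> platforms, List<String> jniAdd) {
        List<String> out = new ArrayList<>();
        for (String p : platforms) {
            String[] ext = NATIVE_EXT.get(p);
            if (ext == null) {
                throw new IllegalArgumentException("unknown platform: " + p);
            }
            for (String l : jniAdd) {
                // split "path:libname" at the colon
                int colon = l.lastIndexOf(':');
                String dir = l.substring(0, colon);
                String name = l.substring(colon + 1);
                out.add(dir + "/" + p + "/" + ext[0] + name + ext[1]);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        expand(List.of("gnu-amd64"), List.of("../zcl/jni/bin:zcl", "jni/bin:termz"))
            .forEach(System.out::println);
    }
}
```

The outer loop is over platforms and the inner one over libraries, which matches the order the make version generates its words in.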

Thinking about it now as i'm typing it in, a simpler solution is possibly in order even if it means slightly more typing in the calling Makefile. But such is the way of the metamake neophyte and why it takes so long to get anywhere. This is already the 2nd approach I tried, you can get lost in this stuff all too easily. I was thinking I would need some of this extra information to automatically invoke the jni makefile as required but I probably don't or can synthesise it from path-names if they are restricted in a similar fashion and I can just get away with listing the physical library paths themselves.

Simple but restricted and messy to implement:

termz_PLATFORMS=gnu-amd64

termz_RT_JNIADD=../zcl/jni/bin:zcl jni/bin:termz

vs more typing, more flexibility, more consistency with other file paths, and a simpler implementation:

termz_RT_JNIADD=../zcl/jni/bin/gnu-amd64/libzcl.so \
	../zcl/jni/bin/mingw32-amd64/zcl.dll \
	jni/bin/gnu-amd64/libtermz.so

Ahh, what's better? Does it matter? But nothing matters. Nothing matters.

Ok the second one is objectively better here isn't it?

After another look I came up with this to extract the platform directory name component:

$(lastword $(subst /, ,$(dir $(path))))
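The same idea in Java terms, purely as an illustration (the class and method names are invented for this sketch): the platform is taken to be the name of the directory that directly contains the library file.

```java
import java.nio.file.Path;

class PlatformDir {
    /** Returns the name of the directory directly containing the library, e.g. "gnu-amd64". */
    static String platformOf(String libraryPath) {
        Path parent = Path.of(libraryPath).getParent();
        if (parent == null || parent.getFileName() == null) {
            throw new IllegalArgumentException("no platform directory in: " + libraryPath);
        }
        return parent.getFileName().toString();
    }

    public static void main(String[] args) {
        System.out.println(platformOf("../zcl/jni/bin/gnu-amd64/libzcl.so"));
        System.out.println(platformOf("../zcl/jni/bin/mingw32-amd64/zcl.dll"));
    }
}
```

One difference from the make expression: for a bare file name with no directory, make would yield "." whereas this sketch throws, which seemed the less surprising choice for an example.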

make'n it work

One other fairly large drawback of programming make this way is the abysmal error reporting. If you're lucky you get a reference to the line which expands the macro. So it's a lot of hit and miss debugging but that's something i've been doing since my commodore days as a kid and how I usually work if i can get away with it (i.e. building must be fast, i.e. why I find it so important in the first place).

And if you think all of that looks pretty shit try looking at any of the dumb tools created in the java world in the last 20 years. Jesus.

damn work

It seems i hadn't had enough of the terminal after the post last night - i was up till 3am poking at it - basically another whole full-time day. I created a custom terminfo/termcap - basically just started with xterm and deleted shit I didn't think I cared for (like anything mouse or old bandwidth savers that looked like too much effort). But I looked up each obtuse entry and did some basic testing to make sure each function I left in worked as well as I could tell. Despite the documentation again there are a lot of details missing and I had to repeatedly cross-check with xterm behaviour. Things like the way limiting the scroll region works. And I just had a lot of bugs anyway from shoddy maths I needed to fix. I then went to look at some changes and netbeans had been too fat so I went down the rabbit-hole of writing meta makefiles so I could do some small tests in emacs ... and never got that far.

I really needed a proper break from work-like-activities this weekend too, and a lot more sleep. At least I did water the garden, mow the lawn, wash my undies, and run the dishwasher.

opencl termz

I was just going to "try something out" while I waited for the washing to finish ...

... so after a long and full day of hacking ...

The screenshot is from an OpenCL renderer. Each work item processes one output pixel and adds any attributes on the fly, somewhat similar in effect to how hardcoded hardware might have done it. I implemented a 'fancy' underline that leaves a space around descenders. The font is a 16x16 glyph texture of iso-8859-1 characters. I haven't implemented colour but there's room for 16.
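As a rough CPU-side illustration of the "one work item per output pixel" idea, here is a Java sketch. It is not the OpenCL kernel: the cell layout (glyph code in the low 8 bits), the ATTR_INVERSE bit and all names are assumptions made up for the example, and it handles only inverse, not the underline or colour. The atlas is assumed to be a 16x16 grid of 8-bit glyph images.

```java
class CellRenderer {
    static final int ATTR_INVERSE = 1 << 8; // hypothetical attribute bit

    /** Computes one output pixel, i.e. the work a single work item would do. */
    static byte pixel(int x, int y, int[] cells, int cols,
                      byte[] atlas, int gw, int gh) {
        // which character cell does this pixel fall in?
        int cell = cells[(y / gh) * cols + (x / gw)];
        int glyph = cell & 0xff;
        // the atlas is a 16x16 grid of glyphs, so it is 16*gw pixels wide
        int ax = (glyph % 16) * gw + (x % gw);
        int ay = (glyph / 16) * gh + (y % gh);
        int v = atlas[ay * 16 * gw + ax] & 0xff;
        if ((cell & ATTR_INVERSE) != 0) {
            v = 255 - v; // attributes are applied on the fly
        }
        return (byte) v;
    }

    /** Renders the whole cell grid into an 8-bit raster, touching each pixel once. */
    static byte[] render(int[] cells, int cols, int rows,
                         byte[] atlas, int gw, int gh) {
        int width = cols * gw;
        int height = rows * gh;
        byte[] out = new byte[width * height];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                out[y * width + x] = pixel(x, y, cells, cols, atlas, gw, gh);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int gw = 6, gh = 13;
        byte[] atlas = new byte[16 * gw * 16 * gh]; // blank glyphs, just for the demo
        int[] cells = new int[80 * 24];
        byte[] raster = render(cells, 80, 24, atlas, gw, gh);
        System.out.println(raster.length + " pixels rendered");
    }
}
```

Every output pixel depends only on the cell table and the glyph atlas, which is why the loop body maps so directly onto a parallel kernel.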

On this kaveri machine with only one DIMM (== miserable memory bandwidth) the OpenCL routine renders this buffer in about 35-40uS. This doesn't sound too bad but it takes 3uS to "upload" the cell table input, and 60uS to "download" the raster output (and this is an indexed-mode 8-bit rather than RGBA which is ~2x slower again), but somehow by the time it's all gone through OpenCL that's grown to 300-500uS from first enqueue to final dequeue. Then add on writing to JavaFX (which converts it to BGRA) and it ends up ~1200uS.

I'm using some synchronous transfers and just using buffer read/write so there could be some improvements but the vast majority of the overheads are due to the toolkit.

So I guess that's "a bit crap" but it would still be "fast enough". For comparison a basic java renderer that only implements inverse is about 1.5x slower overall.

But for whatever reason the app still uses ~8% cpu even when not doing anything; and that definitely isn't ok at all. I couldn't identify the cause. Another toolkit looks like a necessity if it ever went beyond play-thing-toy.

I got bored doing the escape codes around "^[ [ ? Ps p" so it's broken aplenty beyond the bits I simply implemented incorrectly. But it's only a couple days' poking and just 1K3LOC. While there is ample documentation on the codes some of the important detail is lacking and since i'm not looking at any other implementation (not even zvt) i have to try/test/compare bits to xterm and/or remember the fiddly bits from 15 years ago (like the way the cursor wrapping is handled). I also have most of the slave process setup sorted beyond just the pty i/o - session leaders, controlling terminals, signal masks and signal actions, the environment. It might not be correct but I think all the scaffolding is now in place (albeit only for Linux).

FWIW a test i've been using is "time find ~/src" to measure elapsed time on my system - after a couple of runs to load everything into the buffer cache this is a consistent test with a lot of spew. If I run it in an xterm of the same size this takes ~25s to execute and grinds big parts of the desktop to a near halt while it's active. It really is abysmal behaviour given the modern hardware it's on (however "underpowered" it's supposed to be; and it's considerably worse on a much much faster machine). The same test in 'termz' takes about 4.5s and you'd barely know it was running. Adding a scrollback buffer would increase this (well probably, and not by much) however this goes through a fairly complete UTF-8 code-path otherwise.

The renderer has no effect on the runtime as it is polled on another thread (in this instance via the animation pulse on javafx). I don't use locks but rely on 'eventual consistency'. Some details must be taken atomically as part of the snapshot and these are handled appropriately.

Right now I feel like i've had my fill for now with this. I'm kinda interested, but i'm not sure if i'm interested enough to finish it sufficiently to use it - like pretty much all my hacking hacked up hacks. Time will be the teller.

termz

Every now and then I think about the sad state of affairs regarding terminal emulators for X11. It's been a bit of a thing for a while - it's how i ended up working at Ximian.

I stopped using gnome-terminal when i stopped working on it and went back to xterm. I never liked rxvt or their ilk and all of the 'desktop environment' terminal emulators are pretty naff for whatever reason.

xterm works and is reliable but with recent (being last 10 years) X Window System servers the text rendering performance plummeted and even installing the only usable typefaces (misc-fixed 6x13, and 10x20, and sometimes xterm itself) became a manual job. Whilst performance isn't bad on this kaveri box I also use an uber-intel machine with a HD7970 where both emacs and xterm run like an absolute pig whenever any GL applications are running, and it isn't even very fast otherwise (i'm talking whole desktop grinding to a halt as it redraws exposes at about 1 character column per SECOND). It's an "older" distribution so that may have something to do with it but there is no direct indication why it's so horrible (well apart from the AMD driver but i have no choice for that since it's used for OpenCL dev). I might upgrade it to slackware next year.

Ho hum.

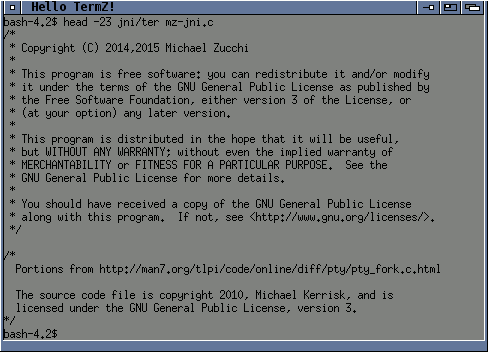

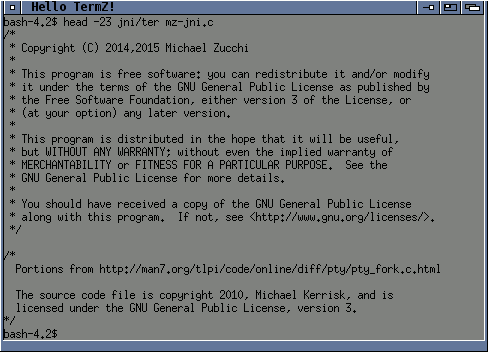

Anyway I started poking last night at a basic xterm knockoff and got to the point of less sort of running inside it and now i'm thinking about ways I might be able to implement something a bit more complete. I'm working in Java and have a tiny bit of JNI to get the process going and handle some ioctl stuff (which seems somewhat easier now than it was in zvt, but portability is not on the agenda here).

TermZ? Glyphs are greymaps extracted directly from the PCF font.

zvt

When I wrote ZVT the primary goal was performance and to that end considerable effort was expended on making a terminal state machine which implemented zero-copy and zero-garbage algorithms. zero-copy is always a good thing but the zero-garbage was driven by the very slow malloc on Solaris at the time and my experience with Amiga memory management.

Another part of the puzzle was display and the main mechanism was inspired by some Amiga terminal emulators that used COPPER lists to re-render rows to the screen in arbitrary order without requiring them to be re-ordered in memory (memory bandwidth was a massive bottleneck when using pre 1985-spec hardware in 199x). I used a cyclic double-linked (exec) list of rows and to implement a scroll I just moved a row from the start to the end of the list which takes 8 pointer updates and a memset to clear it (and it also works for partial screen scrolls). By tracking the last row a given one was actually displayed at I could -at-any-point-later- attempt to create an optimal screen-update sequence including using blits for scrolling and minimising glyph redraws to only those that had changed. The algorithm for this was cheap and reliable if a little fiddly to get correct.

This last point is important as it allows the state machine to outpace the screen refresh rate which always becomes the largest bottleneck for terminal emulators in 'toolkit' environments. This is where it got all its performance from.

new hardware, new approach

Thinking about the problem with current hardware my initial ideas are a little bit different.

I still quite like the linked list storage for the state machine and may go back to it but my current idea is instead to store a full cell-grid for the displayable area. I can still make full-screen scrolling just as cheap using a simple cyclic row trick (in fact, even cheaper) but sub-region scrolling would require memory copies - but at the resolution of 4-bytes-per-glyph these are insanely cheap nowadays.

This is the most complex part of the emulator since it needs to implement all the control codes and whatnot - but for the most part that's just a mechanical process of implementing enough of them to have something functional.
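The cyclic row trick for the cell grid could look something like the following Java sketch. This is only one way to do it under my own assumptions (an int per cell, a single "top" offset, invented names), not the actual termz code.

```java
import java.util.Arrays;

class CellGrid {
    final int cols, rows;
    final int[] cells; // one 4-byte cell per glyph
    int top;           // physical row currently shown as screen row 0

    CellGrid(int cols, int rows) {
        this.cols = cols;
        this.rows = rows;
        this.cells = new int[cols * rows];
    }

    /** Maps a screen position to an index in the backing array. */
    int index(int row, int col) {
        return ((top + row) % rows) * cols + col;
    }

    int get(int row, int col) {
        return cells[index(row, col)];
    }

    void set(int row, int col, int cell) {
        cells[index(row, col)] = cell;
    }

    /** Full-screen scroll: clear the old top row and rotate, no copying. */
    void scrollUp() {
        Arrays.fill(cells, top * cols, (top + 1) * cols, 0);
        top = (top + 1) % rows;
    }

    /** Sub-region scroll of screen rows first..last (inclusive): needs row copies. */
    void scrollRegionUp(int first, int last) {
        for (int r = first; r < last; r++) {
            System.arraycopy(cells, index(r + 1, 0), cells, index(r, 0), cols);
        }
        int start = index(last, 0);
        Arrays.fill(cells, start, start + cols, 0);
    }

    public static void main(String[] args) {
        CellGrid g = new CellGrid(80, 24);
        g.set(1, 0, 'x');
        g.scrollUp();
        System.out.println((char) g.get(0, 0));
    }
}
```

A full-screen scroll costs one row clear and an integer increment, while the region scroll copies at most a screen's worth of ints, which is the trade-off described above.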

I would also approach rendering from an entirely different angle. Rather than go smart i'm going wide and brute-forcing a simpler problem. At any given time - which can be throttled based on arbitrary metrics - I can take a snapshot of the current emulator screen and then asynchronously convert that to a screen display while the emulator continues to run on its own thread.

For a basic CPU renderer it may still require some update optimisation but given it will just be trivial cell fonts to copy it probably won't be appreciably cheaper to scroll compared to just pasting new characters every time. And obviously this is utterly trivial code to implement.

The ultimate goal (and why the fixed-array grid backing is desirable) would be to use OpenCL or OpenGL (or more likely Vulkan if it ever gets here) to implement the rendering as a single pass operation which touches each output pixel only once. This would just take the raw cell-sized rectangle of the terminal state machine as its only variable input and produce a fully rendered and styled framebuffer as the result. Basically just render the cells as a low-res nearest-neighbour texture lookup into a texture holding the glyphs. The former is a tiny tiny texture in GPU terms and rendering a single full-screen NN textured quad is absolutely nothing for any GPU. And compared to the gunk that is required to render a full-screen of arbitrary text through any gui toolkit ever it's many orders of magnitude less effort.

Ideally this would only ever exist at full-resolution in the on-screen framebuffer memory which would also make it extremely cheap memory wise.

But at least initially I would be going through JavaFX so it will instead have to have multiple copies and so on. The reason to use JavaFX is for all the auxiliary but absolutely necessary fluff like clipboard and dnd operations. I don't really like tabbed terminals (I mean I want to use windows as windows to multitask, not as a stack to task switch) but that is one way to ameliorate the memory use multiplication this would otherwise create.

So to begin with it would be extremely fat but that's just an implementation detail and not a limitation of the design.

worth bothering?

Still mulling that over. It's still a lot of work even if conceptually it's almost trivial.

Yep, I was bored.

I made a Workbench2.0 window theme for xfce tonight.

Focused

(yes i realise the depth and zoom buttons are swapped but i'm not re-taking these shots. Oh blast, the bottom-left pixel should be black too.).

Actually why I did this came about in a rather round-a-bout way.

I had to spend all day in Microsoft Windows today debugging some code and as white backgrounds strain my eyes too much I spent some time trying to customise netbeans and the system itself such that it was usable. Netbeans was a copy of the config on another machine plus a theme change and installation of the dejavu fonts. But after a bit of poking around with the 'classic windows' theme I found I could change more than I expected and set it up somewhat amiga-like, as far as that goes (wider borders, flat colours, etc). The theme editor is a pretty dreadful bit of work-experience-kid effort like most of the config windows in that shitstain of an OS shell.

So that got me thinking - it always bothered me that ALL the usable XFCE4 themes have "microsoft windows 95 blue" borders, so i poked around and found the theme I was using (Microcurve) and started randomly editing the XPMs until I ended up with this.

Unfocused

The zoom button is maximise, but since the depth button doesn't function that way I mapped that to minimise - which will probably take a while to get used to since it's no longer familiar and doesn't exactly match its function. I had to create a new pin button which is definite programmer-art but fits the simple flat design well enough. I already had the close button in the correct spot but decided to drop the menu and shade buttons since I never use them anyway.

Pinned

I did try to have the close and depth buttons right to the edge as they should be but they get cut-off when the window is maximised, messed up if not all buttons are included in the decoration, and it meant I couldn't animate depressing them properly. So I extended the side borders to the top. I made the bottom thicker too - I can't fucking stand trying to hit a stupid 1-pixel high button to resize the windows anyway (particularly when the mouse normally lags as focus changes turning it into a detestable mini-game every time) and it adds a pleasant weight to the windows. The bottom corners are wider as well which also affects the resize handles in a positive way.

Yeah it's still blue ... but at least it's a different blue, of an older and more refined heritage.

PS gimp 2.8 is a fucking pain in the arse to use.

Update: I decided to publish what I have on a Workbench2.0 theme home page. This contains updated screenshots.

Update: Looks like CDE?

Yeah, no.

unusually long arms?

Since getting skinny again i've been forced (against my will!) to at least look into finding some clothes that fit.

On the weekend I went to look at some locally made woolen stuff (expensive!) and the smallest size was still a bit baggy for the thin tshirts (but they are intentionally a rather loose cut). But the jumpers of the same size were just too short in the arms and the shop assistant kindly suggested my arms were unusually long! Hah, maybe, maybe not. I went with a medium jumper and some small tshirts and i'm probably all shopped out for a while after that effort so I won't find out if she was right till I try other clothes. God knows when i'll wear them.

In high school photos I was always at the side in the first standing row and with rows being ordered by height that put me squarely in short-arse territory. When I did work experience at the end of year 11 they kept joking how I was going to "grow up" to be a jockey (funny men!), yet somehow by the time I started uni 2 and a bit years later i'd reached 6'; so maybe i am an odd shape (or it's not me that's odd!).

I had resigned myself to being about 90kg for the foreseeable future at least and I even expected that to be a little optimistic. Losing nigh on 4 1/2 bags of spuds in 9 months seems ... excessive; that was just under 25% of my total mass. I seem to recall measuring myself rather inaccurately at over 1m around the waist at some point, ... and now it's easily under 800mm (those spuds had to fit somewhere I guess). An old mate I worked with before Ximian reckons i'm thinner than when I left to start at Ximian nearly 16 years ago. Yay I guess? FWIW I asked the dr if i was underweight last week and he said no way and even if he just based it on BMI == 20 that's good enough for me.

It certainly makes riding up hills a lot easier. I took the roadie out for a roll last weekend for a 65km round-trip to Port Noarlunga which has a few rises and on the day sported a stiff (and cold!) southerly to head into on the way up. Given it's been so long since i've been that far or that hilly (such as it is) it was about the right distance from a cold-start - i was a bit tired when I got home but barely sore the next day (well apart from the arse, it will take a few more trips to get saddle hardened to that seat again). I've also been going to the beach any day it's hot enough and splashing around like a drowning cat-in-a-sack which makes for some solid exercise together with the 1 hour riding required. It's pretty dull though and the 1/2 hour ride home is enough to heat up again but I had to get out of the house.

So apart from the gout i'm probably on my way to being physically in better shape than ever. And on the gout, after a bit of a hiccup of misunderstanding that should be working towards becoming a mostly-non-issue too (albeit with a daily pill).

Pity about the head though, "utterly miserable" pretty much sums that up. But that summation has rarely been very wrong at any time for as far back as I care to remember.

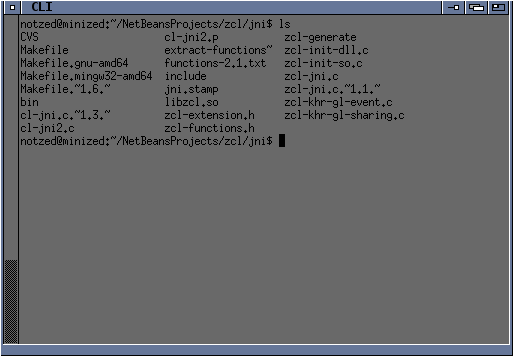

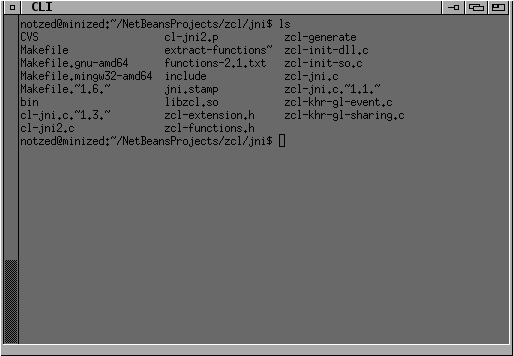

OpenCL 2.1 + java = zcl 0.x?

I noticed Khronos released the OpenCL 2.1 spec recently so I spent this morning updating zcl to include all the functions.

Since I don't have a suitable implementation nothing is tested and there's probably some typos and so on. I found a few small bugs in the enum tables while I was there.

But what took most of the time was the property queries. Each OpenCL object type has one or more query functions but rather than implement them all I use a tagged query function which branches to the correct function entry point at the lowest level but shares all the rest of the code. But then I had to add some specialist variants, and specialisations for return types and overloaded parameters - it started to get unwieldy and a new query type on CLKernel meant it wasn't going to be enough anyway.

So I said fuck that for a joke and just redid the whole mechanism.

For the basic 5-parameter queries I still share most of the code but I now add any type-specific queries separately. To cope with the api and code bloat i distilled the java side interface down to only two entry points for each query:

native <T> T getInfoAny(int type, int ctype, int param_name);

native <T> T getInfoAnyV(int type, int ctype, int param_name);

The first is a scalar query and the second an array one. It just means it now has to box primitive return types for scalar queries which is unlikely to have any measurable performance impact but the Java helpers which wrap the above interfaces in type-friendly calls could always be replaced with native equivalents if it was an issue.
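To show what those type-friendly helpers might look like, here is a small stand-alone Java sketch. It is not the zcl source: the native methods are replaced by a map-backed stand-in so it can run anywhere, and the CTYPE_* tags and parameter numbers are made-up placeholder values.

```java
import java.util.Map;

class InfoDemo {
    // Hypothetical ctype tags; the real values are not shown in this post.
    static final int CTYPE_INT = 0;
    static final int CTYPE_LONG = 1;

    final int type;                  // tag selecting which query entry point to use
    final Map<Integer, Object> fake; // stands in for the native side

    InfoDemo(int type, Map<Integer, Object> fake) {
        this.type = type;
        this.fake = fake;
    }

    // Stand-in for: native <T> T getInfoAny(int type, int ctype, int param_name);
    @SuppressWarnings("unchecked")
    <T> T getInfoAny(int type, int ctype, int param_name) {
        return (T) fake.get(param_name);
    }

    // Stand-in for: native <T> T getInfoAnyV(int type, int ctype, int param_name);
    @SuppressWarnings("unchecked")
    <T> T getInfoAnyV(int type, int ctype, int param_name) {
        return (T) fake.get(param_name);
    }

    // Type-friendly wrappers: scalar results arrive boxed and are unboxed here.
    int getInfoInt(int param) {
        return this.<Integer>getInfoAny(type, CTYPE_INT, param);
    }

    long getInfoLong(int param) {
        return this.<Long>getInfoAny(type, CTYPE_LONG, param);
    }

    long[] getInfoLongV(int param) {
        return this.<long[]>getInfoAnyV(type, CTYPE_LONG, param);
    }

    public static void main(String[] args) {
        InfoDemo q = new InfoDemo(0,
            Map.<Integer, Object>of(1, 8, 2, new long[] {64, 64, 64}));
        System.out.println(q.getInfoInt(1));
        System.out.println(q.getInfoLongV(2).length);
    }
}
```

The boxing only happens on the scalar path; array queries already return an object, so nothing changes for them.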

This let me merge some internal jni code and delete a lot of snot and I moved the re-usability to a different layer so that the more specific queries can share most of the code. For example this was the previous set of native interfaces on CLObject, and although this covered the kernel and program specific 6-argument queries like GetProgramBuildInfo() it was getting a bit messy.

native long getInfoLong(int type, long subtarget, int param);

native long[] getInfoLongA(int type, long subtarget, int param);

native int getInfoInt(int type, long subtarget, int param);

native byte[] getInfoByteA(int type, long subtarget, int param);

native <T> T getInfoP(int type, long subtarget, int param, int ctype);

native <T extends CLObject> T[] getInfoPA(int type, long subtarget, int param, int ctype);

native long getInfoSizeT(int type, long subtarget, int param);

native long[] getInfoSizeTA(int type, long subtarget, int param);

... It seemed like a good idea at the time.

The exposed interfaces remain the same (like getInfoString(param), getInfoInt(param), etc).

Given the complete lack of interest and because it needs some testing anyway I won't be releasing a zcl-0.6 just yet.

bloody peecee

I've been having a few issues with my machine lately so as i had nothing better to do yesterday I had a look into fixing it.

First issue I looked at was that I broke OpenGL when i upgraded to the latest catalyst driver. I tried various kernels, even a long-overdue update of slackware (which led to another few hours' diversion earlier in the month trying to un-fuckup firefox as much as i could), using the slackware kernel, etc. But finally I used strace and slackpkg to determine it was using a stale .so left over from AMD's previous abomination of an install script so once that was deleted all was well. SVM still won't work in any of my code - which is still baffling as it works from the AMD SDK samples. I should really post something on the AMD forums but i haven't used it for a while.

The other problem has been that only half my RAM started showing up a few months ago; the bios shows 8G but linux only sees 4G. This is ok for what i normally use the machine for but netbeans brings it to its knees if you have a couple of projects open. I thought it was down to a faulty DIMM and if i hadn't forgotten and needed to come home earlier yesterday I might have bought some. But I tried each individually and they seemed to work - passing the linux make test at least. I tried lower speeds, voltages, all sorts of things. It appears a fairly common problem but nobody had much of a solution.

I even upgraded the bios - which was another couple of hour diversion as that cleared the EFI boot record and I had to re-figure out how to re-install it. I used a USB-bootable tool-linux distribution called grml - very impressed - I just dd'd it onto the usb stick, and it even provides an EFI boot record so i could just use efibootmgr to re-add it. I know i've tried puppylinux multiple times before but it never worked so I might get a usb stick just to keep this handy.

The last thing I tried was dusting the sockets and then trying to jiggle the cpu in its socket - I don't have any thermal paste so I couldn't get at it properly but i wiggled a bit without freeing the heatsink.

Now ... that appeared to work. I did some tests and so on and it seemed to be ok, ... I even wrote a post about it.

But then I had to tinker, so i was playing with the memory speeds - to see if "faster" ram really made any real difference - but I broke the whole machine so i had to power cycle it a few times till the BIOS reset everything, ... and yeah back to 4G of ram. Blast. I swore at it, shut it off, and went to bed early.

On a cold boot I did get 8G out of it but then the system crashed while I was using it, so something is amiss. It could still be the RAM I suppose but at this point i'm more inclined to believe it is the motherboard or PSU. I don't feel like investigating further for the moment and will just see how it goes (i just cold booted and it's 8G again *shrug*).

However, some good did come of all this.

When I was playing with kernels I went to the trouble to actually go through all the config options and fuck off everything I didn't need. It's down to 3.2M packed including my system filesystem with no module so i don't need an initrd, and it boots a bit faster (not that it was any slouch). I'm also trying the fully-preemptive kernel and i'm liking it so far, even under very heavy load the system remains interactive such that you barely notice at all. Hmm, I just noticed the sound mixer is missing, NM.

But the really good bit is now the CPU runs almost cold - i don't know if it's the BIOS upgrade, the kernel customisation, or some BIOS setting that changed (i already had it on 45W TDP because it got too hot), but the difference is marked. Previously any high-load task such as a 'make -j4' would cause the fan to kick in almost immediately and if i didn't also up the case fan speed (manually) fairly promptly the whole desk would take off. I've currently got a kernel build running for a few minutes triggering a load-average of over 8, with the case fan on its slowest setting; and the CPU fan hasn't throttled up at all.

Maybe the machine is just running a lot slower now - but I can't really tell so what does it matter if it is.

Update: it got unstable so i took one dimm out. Seems ok so far. Maybe it's just the PSU? It seems like the most fragile component and it's almost certainly underpowered anyway (sigh, a poor decision that one). I dunno. I started looking at new cases & psus but if it remains stable albeit in a reduced capacity I'll be in no rush ...

time is an illusion

But so is reality so it doesn't make any difference.

I've got too much of it to fill with boring tedium either way.

Copyright (C) 2019 Michael Zucchi, All Rights Reserved.

Powered by gcc & me!